S3 / Wasabi Backup

-

@olivierlambert From sources. Latest pull was last Friday, so 5.68.0/5.72.0.

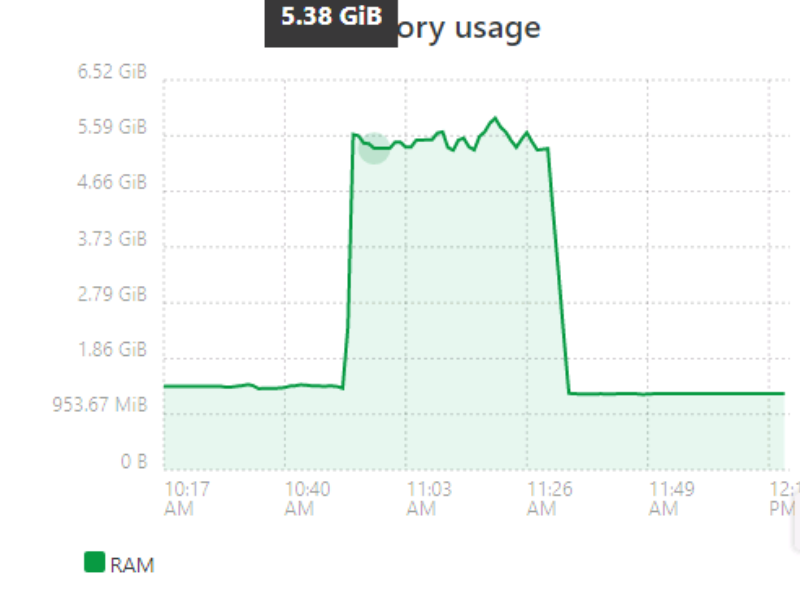

Memory usage is relatively stable around 1.4 GB (most of this morning, with --max-old-space-size=2560), balloons during an S3 backup job, and then goes back down to 1.4 GB when the transfer is complete.

edit: The above was a backup without compression.

-

OK, DL'ed/Registered XOA, bumped it to 6 GB just in case (VM only, not the node process).

Updated to XOA latest channel, 5.51.1 (5.68.0/5.72.0). (p.s. thanks for the config backup/restore function. Needed to restart the VM or xo-server, but retained my S3 remote settings.)

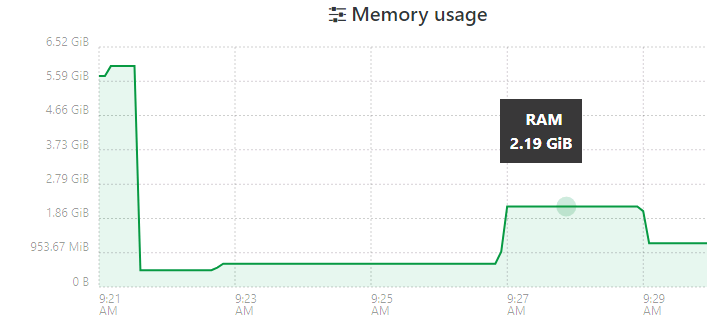

First is a pfSense VM, ~600 MB after zstd. The initial 5+GB usage is VM bootup.

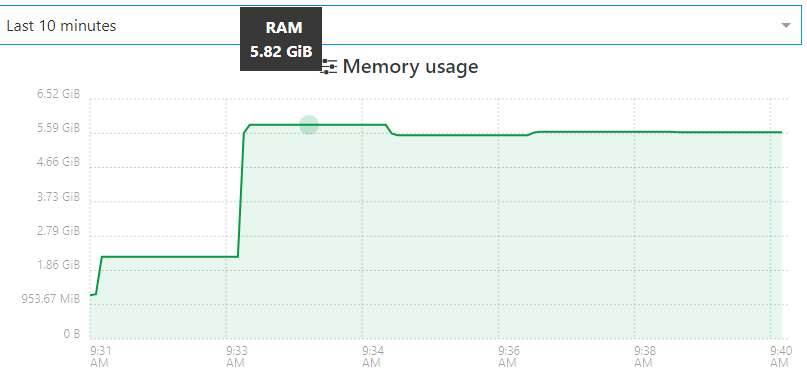

Next is the same VM that I used for the previous tests, ~7 GB after zstd. The job started around 9:30, where the initial ramp-up occurred (during snapshot and transfer start). Then it jumped further to 5+ GB.

That's about as clear as I can get in the 10 minute window. It finished, dropped down to 3.5GB, and then eventually back to 1.4GB.

-

@klou Did you try it without increasing the memory in XOA?

-

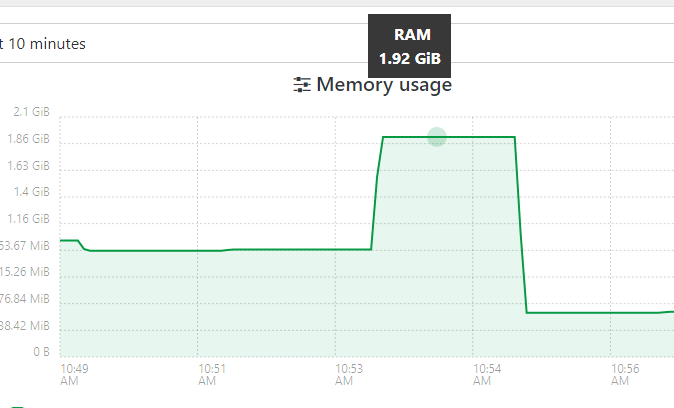

Just did, reduced to 2GB RAM. The pfSense Backup was about the same, except the post-backup idle state was around 900MB usage.

The 2nd VM bombed out with an "Interrupted" transfer status (similar to a few posts above).

-

@klou is it happening in a full backup? (this question will help me look at the right place in the code)

-

Yes, Full Backup, target is S3, both with or without compression.

(Side note: I didn't realize that Delta Backups were possible with S3. This could be significant in storage space. But I also assume that this is *nix VMs only?)

-

@klou

Thanks. I assume all kinds of VMs can be backed up in delta mode. As far as I know, there is no connection between the VM type and the remote type.

-

If it helps, I'm reasonably certain that I was able to back up and upload similarly sized VMs previously without memory issues, before the 50GB+ chunking changes.
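For context on the 50 GB figure: S3 multipart uploads are limited to 10,000 parts with a 5 MiB minimum part size, so 5 MiB parts only cover objects up to roughly 52 GB, and anything larger needs bigger parts. The sketch below works through that arithmetic. It is not taken from xo-server; the function names and the `queue_size` of 4 are assumptions for illustration, and it does not claim that this is the cause of the memory growth reported here.

```python
# S3 multipart limits documented by AWS: at most 10,000 parts per upload,
# each part at least 5 MiB (the last part may be smaller).
MIB = 1024 * 1024
MAX_PARTS = 10_000
MIN_PART_SIZE = 5 * MIB


def min_part_size(object_size):
    """Smallest part size (in whole MiB) that fits object_size into MAX_PARTS parts."""
    needed = -(-object_size // MAX_PARTS)       # ceiling division
    needed_rounded = -(-needed // MIB) * MIB    # round up to a whole MiB
    return max(MIN_PART_SIZE, needed_rounded)


def buffered_bytes(part_size, queue_size):
    """Rough upper bound on bytes held in memory when queue_size parts are in flight."""
    return part_size * queue_size


# Largest object that still fits with the minimum 5 MiB part size.
limit = MIN_PART_SIZE * MAX_PARTS
print(f"5 MiB parts cover up to {limit / 1e9:.1f} GB")

for size_gb in (7, 50, 200):
    part = min_part_size(size_gb * 10**9)
    # queue_size=4 is a hypothetical concurrency, not a value read from xo-server
    print(size_gb, "GB:", part // MIB, "MiB parts,",
          buffered_bytes(part, 4) // MIB, "MiB buffered")
```

The point of the sketch is only that larger part sizes multiply with the number of parts held in memory at once, which is one place to look when memory use changes after a chunking change.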

-

On it!

-

@klou, I see no obvious error, and I can't reproduce to really hunt it.

Since you're building from the sources, would you mind running a special test branch for me in a few days?

Right now, my working copy is tied up with something else that I would like to finish first.

Thanks,

Nicolas.

-

@nraynaud Sorry about dropping this for a while. If you want to pick it back up, let me know and I'll try to test changes as well.

-

I'm getting these errors on big VMs:

{ "data": { "mode": "full", "reportWhen": "failure" }, "id": "1605563266089", "jobId": "abfed8aa-b445-4c94-be5c-b6ed723792b8", "jobName": "amazonteste", "message": "backup", "scheduleId": "44840d13-2302-45ae-9460-53ed451183ed", "start": 1605563266089, "status": "failure", "infos": [ { "data": { "vms": [ "68b2bf30-8693-8419-20ab-18162d4042aa", "fbea839a-72ac-ca3d-51e5-d4248e363d72", "b24d8810-b7f5-5576-af97-6a6d5f9a8730" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "68b2bf30-8693-8419-20ab-18162d4042aa" }, "id": "1605563266118:0", "message": "Starting backup of TEST. (abfed8aa-b445-4c94-be5c-b6ed723792b8)", "start": 1605563266118, "status": "failure", "tasks": [ { "id": "1605563266136", "message": "snapshot", "start": 1605563266136, "status": "success", "end": 1605563271882, "result": "a700da10-a281-a124-8283-970aa7675d39" }, { "id": "1605563271885", "message": "add metadata to snapshot", "start": 1605563271885, "status": "success", "end": 1605563271899 }, { "id": "1605563272085", "message": "waiting for uptodate snapshot record", "start": 1605563272085, "status": "success", "end": 1605563272287 }, { "id": "1605563272340", "message": "start VM export", "start": 1605563272340, "status": "success", "end": 1605563272362 }, { "data": { "id": "7847ac06-d46f-476a-84c1-608f9302cb67", "type": "remote" }, "id": "1605563272372", "message": "export", "start": 1605563272372, "status": "failure", "tasks": [ { "id": "1605563273029", "message": "transfer", "start": 1605563273029, "status": "failure", "end": 1605563715136, "result": { "message": "write EPIPE", "errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-11-16T21:55:08.319Z", "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:92:16)" } } ], "end": 1605563715348, "result": { "message": "write EPIPE", 
"errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-11-16T21:55:08.319Z", "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:92:16)" } }, { "id": "1605563717917", "message": "set snapshot.other_config[xo:backup:exported]", "start": 1605563717917, "status": "success", "end": 1605563721061 } ], "end": 1605564045507 }, { "data": { "type": "VM", "id": "fbea839a-72ac-ca3d-51e5-d4248e363d72" }, "id": "1605564045510", "message": "Starting backup of DESKTOPGREEN. (abfed8aa-b445-4c94-be5c-b6ed723792b8)", "start": 1605564045510, "status": "failure", "tasks": [ { "id": "1605564045533", "message": "snapshot", "start": 1605564045533, "status": "success", "end": 1605564047224, "result": "3e5fb1b5-c93e-f242-95a2-90757b38f727" }, { "id": "1605564047229", "message": "add metadata to snapshot", "start": 1605564047229, "status": "success", "end": 1605564047240 }, { "id": "1605564047429", "message": "waiting for uptodate snapshot record", "start": 1605564047429, "status": "success", "end": 1605564047631 }, { "id": "1605564047634", "message": "start VM export", "start": 1605564047634, "status": "success", "end": 1605564047652 }, { "data": { "id": "7847ac06-d46f-476a-84c1-608f9302cb67", "type": "remote" }, "id": "1605564047657", "message": "export", "start": 1605564047657, "status": "failure", "tasks": [ { "id": "1605564048778", "message": "transfer", "start": 1605564048778, "status": "failure", "end": 1605564534530, "result": { "message": "write EPIPE", "errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-11-16T22:08:35.991Z", "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:92:16)" } } 
], "end": 1605564534693, "result": { "message": "write EPIPE", "errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-11-16T22:08:35.991Z", "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:92:16)" } }, { "id": "1605564535540", "message": "set snapshot.other_config[xo:backup:exported]", "start": 1605564535540, "status": "success", "end": 1605564538346 } ], "end": 1605571759789 }, { "data": { "type": "VM", "id": "b24d8810-b7f5-5576-af97-6a6d5f9a8730" }, "id": "1605571759883", "message": "Starting backup of grayLog. (abfed8aa-b445-4c94-be5c-b6ed723792b8)", "start": 1605571759883, "status": "failure", "tasks": [ { "id": "1605571759912", "message": "snapshot", "start": 1605571759912, "status": "success", "end": 1605571773047, "result": "600bf528-8c1d-5035-1876-615e1dd89fea" }, { "id": "1605571773051", "message": "add metadata to snapshot", "start": 1605571773051, "status": "success", "end": 1605571773081 }, { "id": "1605571773391", "message": "waiting for uptodate snapshot record", "start": 1605571773391, "status": "success", "end": 1605571773601 }, { "id": "1605571773603", "message": "start VM export", "start": 1605571773603, "status": "success", "end": 1605571773687 }, { "data": { "id": "7847ac06-d46f-476a-84c1-608f9302cb67", "type": "remote" }, "id": "1605571773719", "message": "export", "start": 1605571773719, "status": "failure", "tasks": [ { "id": "1605571774218", "message": "transfer", "start": 1605571774218, "status": "failure", "end": 1605572239807, "result": { "message": "write EPIPE", "errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-11-17T00:17:01.143Z", "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:92:16)" } } ], "end": 1605572239942, "result": { "message": "write EPIPE", "errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-11-17T00:17:01.143Z", "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:92:16)" } }, { "id": "1605572242195", "message": "set snapshot.other_config[xo:backup:exported]", "start": 1605572242195, "status": "success", "end": 1605572246834 } ], "end": 1605572705448 } ], "end": 1605572705499 }

Delta backups also have the same problem.
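A log of this shape is easier to read once the failing step is pulled out of the nested `tasks` arrays. Below is a minimal sketch that walks such a log and reports the deepest failed tasks, with their error message and how long they ran. `SAMPLE_LOG` is a hand-trimmed copy of the first VM's entry above, `failed_leaves` is a hypothetical helper name, and the sketch assumes `start` and `end` are epoch milliseconds, which matches the ISO timestamps in the error.

```python
import json

# Hand-trimmed excerpt in the same shape as the log above;
# only the fields the walker needs are kept.
SAMPLE_LOG = """
{
  "jobName": "amazonteste",
  "message": "backup",
  "status": "failure",
  "tasks": [
    {
      "message": "Starting backup of TEST. (abfed8aa-b445-4c94-be5c-b6ed723792b8)",
      "status": "failure",
      "tasks": [
        {"message": "snapshot", "status": "success",
         "start": 1605563266136, "end": 1605563271882},
        {"message": "export", "status": "failure",
         "tasks": [
           {"message": "transfer", "status": "failure",
            "start": 1605563273029, "end": 1605563715136,
            "result": {"message": "write EPIPE", "code": "NetworkingError",
                       "retryable": true}}
         ]}
      ]
    }
  ]
}
"""


def failed_leaves(task, path=()):
    """Yield (path, seconds, error) for failed tasks that have no failed children."""
    here = path + (task.get("message", "?"),)
    failed_children = [t for t in task.get("tasks", []) if t.get("status") == "failure"]
    if failed_children:
        for child in failed_children:
            yield from failed_leaves(child, here)
    elif task.get("status") == "failure":
        seconds = None
        if "start" in task and "end" in task:
            seconds = (task["end"] - task["start"]) / 1000  # assumed epoch milliseconds
        error = task.get("result", {}).get("message")
        yield " > ".join(here), seconds, error


log = json.loads(SAMPLE_LOG)
for where, seconds, error in failed_leaves(log):
    duration = "unknown duration" if seconds is None else f"{seconds:.0f} s"
    print(f"{where}: {error} after {duration}")
```

On the excerpt, this points at the `transfer` step under `export`, which ran for a little over seven minutes before the `write EPIPE`.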

-

@nraynaud on my env I received the following error:

The request signature we calculated does not match the signature you provided. Check your key and signing method.

-

@cdbessig I fixed an error like that in version 5.51.1 (2020-10-14). Are you using a version more recent than that?

Nicolas.
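As background on the signature error quoted above: assuming the remote uses AWS Signature Version 4, the client derives a signing key from the secret key, the date, the region, and the service, then signs a string built from the request. The server repeats the computation, so any difference in one of those inputs yields a mismatch. The sketch below shows only the key derivation and final HMAC. The secret, date, and string-to-sign are made-up placeholders, and this is not the code path that was fixed in 5.51.1.

```python
import hashlib
import hmac


def _hmac_sha256(key, message):
    """HMAC-SHA256 of a text message under a bytes key, returned as raw bytes."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_key, date, region, service="s3"):
    """Derive the Signature Version 4 signing key (date is YYYYMMDD)."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def signature(secret_key, date, region, string_to_sign):
    """Hex signature of string_to_sign under the derived signing key."""
    key = signing_key(secret_key, date, region)
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder inputs for illustration only; a real string-to-sign is built
# from the canonical request and is not reproduced here.
STRING_TO_SIGN = "placeholder-string-to-sign"
good = signature("example-secret", "20201116", "eu-central-1", STRING_TO_SIGN)
wrong_region = signature("example-secret", "20201116", "us-east-1", STRING_TO_SIGN)
wrong_secret = signature("example-secret ", "20201116", "eu-central-1", STRING_TO_SIGN)

# A stray space in the key or a different region is enough to change the result.
print(good != wrong_region, good != wrong_secret)
```

In practice that means a mismatch can come from a mistyped key or wrong region as well as from a client-side bug, so both are worth ruling out.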

-

@nraynaud xo-server 5.66.1 xo-web 5.69.0

-

@cdbessig That release is from 2020-09-04, so you should upgrade to take advantage of the latest fixes.
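A quick way to check whether an install is behind is to compare the version numbers component by component rather than as strings. A small sketch follows; `parse_version` is a hypothetical helper, and it assumes the 5.68.0 quoted earlier in this thread is the xo-server version.

```python
def parse_version(text):
    """Turn '5.66.1' into (5, 66, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


reported = parse_version("5.66.1")   # xo-server version reported above
newer = parse_version("5.68.0")      # assumed xo-server version from earlier in the thread

print(reported < newer)

# Plain string comparison breaks once a component reaches two digits.
print("5.9.0" < "5.10.0", parse_version("5.9.0") < parse_version("5.10.0"))
```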

-

It's also explained very clearly here: https://xen-orchestra.com/docs/community.html (in the first point)

Always update to latest code available before reporting a problem