CEPH FS Storage Driver

-

Agreed. Unless there is enough interest from the community to package it or make it maintainable, it will stay a hack.

Also, here is the command to apply the patch:

    # patch -d/ -p1 < cephfs-8.patch

Edit: updated patch strip level
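For anyone wondering what the strip level changes: it controls how much of each file name in the diff header is dropped before patch looks for the file under the -d directory. Here is a rough standalone Python 3 sketch of the rule the GNU patch manual gives for -pNUM (strip the smallest prefix containing NUM slashes). `strip_components` is a made-up helper for illustration, not part of patch or the sm package, and it ignores edge cases such as repeated slashes. The header name is the one quoted in the patch output later in this thread.

```python
def strip_components(name: str, level: int) -> str:
    """Mimic how `patch -pLEVEL` shortens a file name taken from a diff header.

    Hypothetical helper for illustration only: it drops the smallest
    prefix of `name` that contains `level` slashes.
    """
    remaining = name
    for _ in range(level):
        _head, sep, tail = remaining.partition("/")
        if not sep:
            raise ValueError("%r has fewer than %d slashes" % (name, level))
        remaining = tail
    return remaining


# File name as it appears in the diff header quoted in this thread.
header_name = "/opt/xensource/sm/nfs.py"
for level in (0, 1):
    print("-p%d -> %s" % (level, strip_components(header_name, level)))
```

With -d/ both results point at the same file for this particular header; other patches with different header prefixes may need a different level.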

-

Hello and thanks for the new patch. I understand that this is only a kind of hack, or rather a proof of concept. I do not want to use it on a production system; I only wanted to try it with Nautilus and see what performance I can expect if this hack hopefully develops into a supported Ceph integration.

I hoped that the patch might work for XCP-ng 8.0 as well, but it seems it does not work that easily. I could also install XenServer 7.6, use the original patch and test how it works for me. I do not want you to invest too much work in the 8.0 adaptation, so if you think testing the patch on 8.0 does not really help you, please do not hesitate to tell me. Otherwise, if you are also interested in whether it works with 8.0, here is what I found out:

The new patch does not apply cleanly: there is a "patch unexpectedly ends in middle of line" warning. On a freshly installed system:

    # cd /
    # patch --verbose -p0 < /cephfs-8.patch
    Hmm... Looks like a unified diff to me...
    The text leading up to this was:
    --------------------------
    |--- /opt/xensource/sm/nfs.py 20199-27 16:45:11.918853933 +0530
    |+++ /opt/xensource/sm/nfs.py 20199-27 16:49:26.910379515 +0530
    --------------------------
    patching file /opt/xensource/sm/nfs.py
    Using Plan A...
    Hunk #1 succeeded at 137.
    Hunk #2 succeeded at 159.
    Hmm... The next patch looks like a unified diff to me...
    The text leading up to this was:
    --------------------------
    |--- /opt/xensource/sm/NFSSR.py 20199-27 16:44:56.437557255 +0530
    |+++ /opt/xensource/sm/NFSSR.py 20199-27 17:07:45.651188670 +0530
    --------------------------
    patching file /opt/xensource/sm/NFSSR.py
    Using Plan A...
    Hunk #1 succeeded at 137.
    Hunk #2 succeeded at 160.
    patch unexpectedly ends in middle of line
    Hunk #3 succeeded at 197 with fuzz 1.
    done

I can do a Ceph mount just as described before, but when I instead try to create a new CephFS SR named "Ceph" in XenCenter 8.0.0, I still get the error that SM has thrown a generic Python exception.

The SMlog error log indicates that it tries to create an NFS repository with the given parameters, not a CephFS one:

    ...
    Sep 30 13:03:05 rzinstal4 SM: [975] sr_create {'sr_uuid': '79cc55db-ee9f-9cc0-8d94-8cdb2915363b', 'subtask_of': 'DummyRef:|6456510f-b2dd-4dcd-b6ab-eb5eb40ceb9c|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:72898e54-20b2-4046-9825-94553457fa60', 'session_ref': 'OpaqueRef:8a0819af-8b91-4086-a9cf-04ae37dc8e79', 'device_config': {'server': '1.2.3.4', 'SRmaster': 'true', 'serverpath': '/base', 'options': 'name=admin,secretfile=/etc/ceph/admin.secret'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:91c592f6-47a0-40d6-8a33-7fe01831145f'}
    Sep 30 13:03:05 rzinstal4 SM: [975] ['/usr/sbin/rpcinfo', '-p', '1.2.3.4']
    Sep 30 13:03:05 rzinstal4 SM: [975] FAILED in util.pread: (rc 1) stdout: '', stderr: 'rpcinfo: can't contact portmapper: RPC: Remote system error - Connection refused
    Sep 30 13:03:05 rzinstal4 SM: [975] '
    Sep 30 13:03:05 rzinstal4 SM: [975] Unable to obtain list of valid nfs versions
    ...

I attached my /opt/xensource/sm/nfs.py file as well.
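In case it helps others read SMlog: the part after `sr_create` is printed as a Python dict literal, so the parameters SM actually received can be pulled out with a few lines of standalone Python 3. This is only a sketch; `parse_sr_create` is a hypothetical helper (nothing like it ships with sm), and the sample line is an abridged copy of the entry above.

```python
import ast


def parse_sr_create(line: str) -> dict:
    """Return the parameter dict from an SMlog 'sr_create {...}' line.

    Illustrative helper, not part of sm. The text after 'sr_create ' is a
    Python dict literal, so ast.literal_eval can read it without eval().
    """
    marker = "sr_create "
    start = line.index(marker) + len(marker)
    return ast.literal_eval(line[start:].strip())


# Abridged copy of the log line quoted in this post.
sample = (
    "Sep 30 13:03:05 rzinstal4 SM: [975] sr_create "
    "{'sr_uuid': '79cc55db-ee9f-9cc0-8d94-8cdb2915363b', 'args': ['0'], "
    "'device_config': {'server': '1.2.3.4', 'SRmaster': 'true', "
    "'serverpath': '/base', "
    "'options': 'name=admin,secretfile=/etc/ceph/admin.secret'}, "
    "'command': 'sr_create'}"
)

params = parse_sr_create(sample)
for key, value in sorted(params["device_config"].items()):
    print("%s = %s" % (key, value))
```

Seeing the Ceph options arrive in device_config while the next log line runs rpcinfo is what suggests the request went down the plain NFS path.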

Thanks a lot

Rainer

nfs.py.txt

-

@r1 said in CEPH FS Storage Driver:

patch -d/ -p0 < cephfs-8.patch

Please try

    # patch -d/ -p0 < cephfs-8.patch

-

I see that there is a call to check_server_tcp, which is expected to return True but does not do so in my patch. I'll modify it.

-

The same patch is updated. Kindly download it again and try with

    # patch -d/ -p1 < cephfs-8.patch

-

Thanks for the new patch. Applying the patch does not report any errors or warnings. When I try to create an "NFS" SR, I still get the generic Python error message from XenCenter; however, the error message in /var/log/SMlog has changed:

    Oct 1 10:17:52 xenserver8 SM: [4975] lock: opening lock file /var/lock/sm/76ad6fee-a7a8-34e6-bcce-a53f69a677a9/sr
    Oct 1 10:17:52 xenserver8 SM: [4975] lock: acquired /var/lock/sm/76ad6fee-a7a8-34e6-bcce-a53f69a677a9/sr
    Oct 1 10:17:52 xenserver8 SM: [4975] sr_create {'sr_uuid': '76ad6fee-a7a8-34e6-bcce-a53f69a677a9', 'subtask_of': 'DummyRef:|c98cee36-24ff-4575-b743-3a84cd93ed17|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:72898e54-20b2-4046-9825-94553457fa60', 'session_ref': 'OpaqueRef:175293cb-a791-44be-bd5f-a4cb911adddb', 'device_config': {'server': '1.2.3.4', 'SRmaster': 'true', 'serverpath': '/base', 'options': 'name=admin,secretfile=/etc/ceph/admin.secret'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:a36f0371-1dc7-4685-a208-24e04a1c1e08'}
    Oct 1 10:17:52 xenserver8 SM: [4975] lock: released /var/lock/sm/76ad6fee-a7a8-34e6-bcce-a53f69a677a9/sr
    Oct 1 10:17:52 xenserver8 SM: [4975] ***** generic exception: sr_create: EXCEPTION <type 'exceptions.NameError'>, global name 'useroptions' is not defined
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Oct 1 10:17:52 xenserver8 SM: [4975] return self._run_locked(sr)
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Oct 1 10:17:52 xenserver8 SM: [4975] rv = self._run(sr, target)
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Oct 1 10:17:52 xenserver8 SM: [4975] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/NFSSR", line 216, in create
    Oct 1 10:17:52 xenserver8 SM: [4975] raise exn
    Oct 1 10:17:52 xenserver8 SM: [4975]
    Oct 1 10:17:52 xenserver8 SM: [4975] ***** NFS VHD: EXCEPTION <type 'exceptions.NameError'>, global name 'useroptions' is not defined
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/SRCommand.py", line 372, in run
    Oct 1 10:17:52 xenserver8 SM: [4975] return self._run_locked(sr)
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Oct 1 10:17:52 xenserver8 SM: [4975] rv = self._run(sr, target)
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Oct 1 10:17:52 xenserver8 SM: [4975] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Oct 1 10:17:52 xenserver8 SM: [4975] File "/opt/xensource/sm/NFSSR", line 216, in create
    Oct 1 10:17:52 xenserver8 SM: [4975] raise exn
    Oct 1 10:17:52 xenserver8 SM: [4975]

-

Looks like a variable declaration issue. I had it working for XCP-ng 7.6... Give me some time to fix this and make it work for 8.0.

-

@r1 Is this still in development? I would like to get it working with 8.1.

Manually mounting the CephFS works, but the patch fails, probably not surprisingly. Or is a GlusterFS approach a better option?

With Ceph Octopus and the cephadm approach, setting Ceph up is really easy, so maybe this will become a more popular feature...?

-

@jmccoy555 I did not continue this. Will definitely rejuvenate it once 8.1 is released. Hopefully next week.

-

Notice: This is experimental and will be replaced by any update to the sm package.

OK, so here is the updated patch for XCP-ng 8.1:

    # cd /
    # wget "https://gist.githubusercontent.com/rushikeshjadhav/ea8a6e15c3b5e7f6e61fe0cb873173d2/raw/85acc83ec95cba5db7674572b79c403106b48a48/ceph-8.1.patch"
    # patch -p0 < ceph-8.1.patch

Apart from the patch, you need to install ceph-common to obtain mount.ceph:

    # yum install centos-release-ceph-nautilus --enablerepo=extras
    # yum install ceph-common

There is no need for ceph.ko, as CephFS is now part of the kernel in 8.1.

You can refer to the NFS SR (Ceph) creation screenshot in the earlier post, or use the following:

    # xe sr-create type=nfs device-config:server=10.10.10.10 device-config:serverpath=/ device-config:options=name=admin,secretfile=/etc/ceph/admin.secret name-label=CephFS

To remove this and restore the normal state:

    # yum reinstall sm

Note: Keep the secret in /etc/ceph/admin.secret with permission 600.

Edit: Hope to get this into a proper CephSR someday. Until then it must be considered a proof of concept only.
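The note about keeping the secret in a file with permission 600 can be scripted if you prefer. Below is a minimal standalone Python 3 sketch that creates the file owner-only from the start and checks the resulting mode. `write_secret_file` and `is_private` are hypothetical helpers (the patch does not include them), and the demo writes a placeholder key to a temporary directory rather than to /etc/ceph.

```python
import os
import stat
import tempfile


def write_secret_file(path: str, key: str) -> None:
    """Create `path` readable and writable by the owner only and store `key`.

    Illustrative helper; nothing like this ships with the patch.
    """
    # O_EXCL refuses to overwrite an existing file; 0o600 applies at creation.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(key.strip())
    # The umask can only remove bits, so set the final mode explicitly.
    os.chmod(path, 0o600)


def is_private(path: str) -> bool:
    """True when group and others have no permission bits on `path`."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode & 0o077 == 0


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        demo = os.path.join(tmp, "admin.secret")
        write_secret_file(demo, "PLACEHOLDER-KEY")  # not a real key
        print("owner-only:", is_private(demo))
```

On a real host the path would be /etc/ceph/admin.secret and the key would be your own client key.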

Changed the Ceph release repo to Nautilus.

-

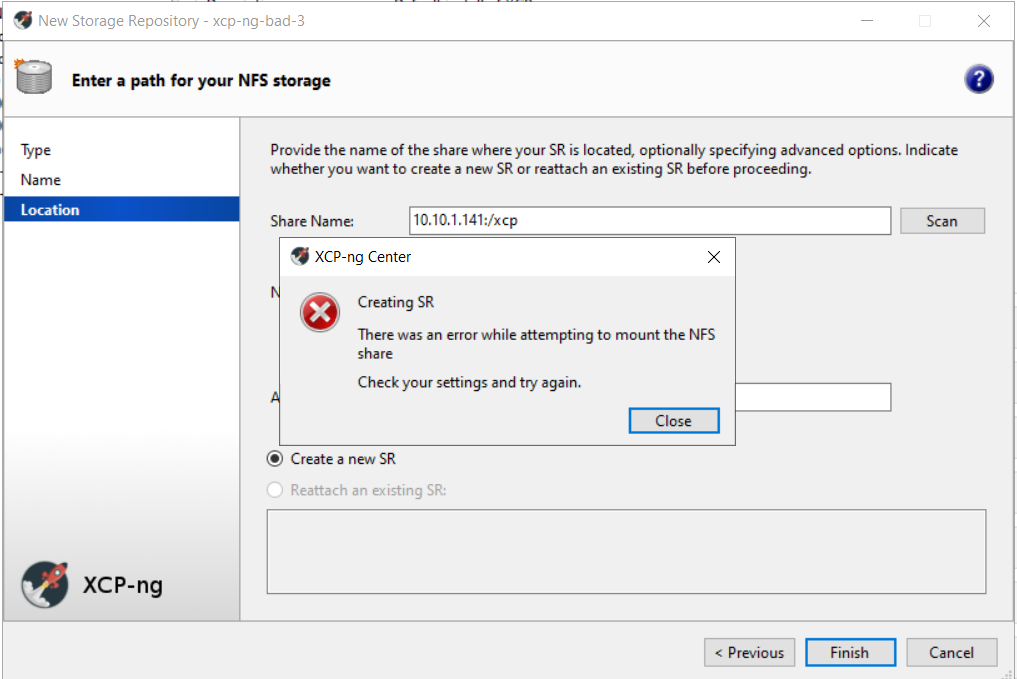

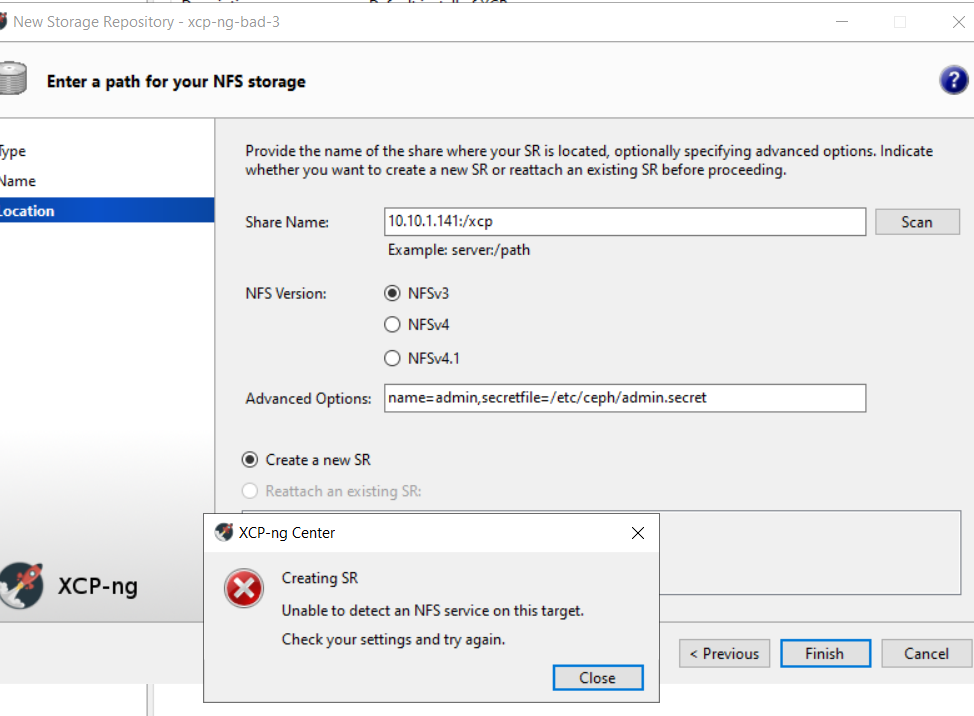

@r1 Hi, just given this a try, but with no luck....

Manual mount works and I can see files in /mnt/cephfs

    mount -t ceph 10.10.1.141:/xcp /mnt/cephfs -o name=admin,secret=AQDZVn9eKD4hBBAAvf5GGWcRFQAZ1eqVPDynOQ==

Trying as per the instructions.....

    xe sr-create type=nfs device-config:server=10.10.1.141 device-config:serverpath=/xcp device-config:options=name=admin,secretfile=/etc/ceph/admin.secret name-label=CephFS
    Error code: SR_BACKEND_FAILURE_73
    Error parameters: , NFS mount error [opterr=mount failed with return code 2],
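To compare the SR attempt with the manual mount that works, it can help to rebuild the equivalent mount command from the same three device-config values and run it by hand to see the mount helper's own message. This is a standalone Python 3 sketch that only mirrors the manual command used in this thread; `manual_mount_argv` is a hypothetical helper, and I am not claiming the patched nfs.py builds its command this way.

```python
import shlex


def manual_mount_argv(device_config: dict, mountpoint: str) -> list:
    """Build a manual `mount -t ceph` argv from an SR's device-config values.

    Illustrative only: mirrors the manual mount shown in this thread, not
    the code inside the patched nfs.py.
    """
    source = "%s:%s" % (device_config["server"], device_config["serverpath"])
    argv = ["mount", "-t", "ceph", source, mountpoint]
    options = device_config.get("options")
    if options:
        argv += ["-o", options]
    return argv


# Values taken from the sr-create command in this post.
config = {
    "server": "10.10.1.141",
    "serverpath": "/xcp",
    "options": "name=admin,secretfile=/etc/ceph/admin.secret",
}
argv = manual_mount_argv(config, "/mnt/cephfs")
print(" ".join(shlex.quote(part) for part in argv))
```

If the printed command fails by hand with the secretfile option but works with an inline secret, the problem is on the mount side rather than in the SR driver.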

And without the secret file just to check that wasn't the issue....

    xe sr-create type=nfs device-config:server=10.10.1.141 device-config:serverpath=/xcp device-config:options=name=admin,secret=AQDZVn9eKD4hBBAAvf5GGWcRFQAZ1eqVPDynOQ== name-label=CephFS
    Error code: SR_BACKEND_FAILURE_108
    Error parameters: , Unable to detect an NFS service on this target.,

Last round in the log file

    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] lock: opening lock file /var/lock/sm/94fa22bb-e9a9-45b2-864a-392117b17891/sr
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] lock: acquired /var/lock/sm/94fa22bb-e9a9-45b2-864a-392117b17891/sr
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] sr_create {'sr_uuid': '94fa22bb-e9a9-45b2-864a-392117b17891', 'subtask_of': 'DummyRef:|b72aac42-1790-40da-ba29-ed43a6554094|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:c0ae3b47-b170-4d48-9397-3c973872a8b2', 'session_ref': 'OpaqueRef:85070c69-888a-4827-8d19-ffd53944aa16', 'device_config': {'server': '10.10.1.141', 'SRmaster': 'true', 'serverpath': '/xcp', 'options': 'name=admin,secretfile=/etc/ceph/admin.secret'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:f775fe87-0d82-4029-a268-dd2cdf4feb27'}
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] _testHost: Testing host/port: 10.10.1.141,2049
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] _testHost: Connect failed after 2 seconds (10.10.1.141) - [Errno 111] Connection refused
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] Raising exception [108, Unable to detect an NFS service on this target.]
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] lock: released /var/lock/sm/94fa22bb-e9a9-45b2-864a-392117b17891/sr
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] ***** generic exception: sr_create: EXCEPTION <class 'SR.SROSError'>, Unable to detect an NFS service on this target.
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return self._run_locked(sr)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] rv = self._run(sr, target)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/NFSSR", line 198, in create
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] util._testHost(self.dconf['server'], NFSPORT, 'NFSTarget')
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/util.py", line 915, in _testHost
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] raise xs_errors.XenError(errstring)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476]
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] ***** NFS VHD: EXCEPTION <class 'SR.SROSError'>, Unable to detect an NFS service on this target.
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 372, in run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] ret = cmd.run(sr)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return self._run_locked(sr)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] rv = self._run(sr, target)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/NFSSR", line 198, in create
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] util._testHost(self.dconf['server'], NFSPORT, 'NFSTarget')
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/util.py", line 915, in _testHost
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] raise xs_errors.XenError(errstring)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476]

Is it still looking for a real NFS server?

Edit: it's logging port 2049, not 6789?
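A quick way to confirm which of the two ports the host can actually reach is a plain TCP connect test, similar in spirit to what the log attributes to util._testHost. The standalone Python 3 sketch below is not the sm implementation; `port_is_open` is a hypothetical helper, and the address and the 2 second timeout are simply copied from this post.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Stand-in for the kind of check the log attributes to util._testHost;
    this is not the sm code.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    server = "10.10.1.141"  # address used in this thread
    for name, port in (("NFS", 2049), ("Ceph monitor", 6789)):
        print("%s port %d open: %s" % (name, port, port_is_open(server, port)))
```

A pure Ceph server would be expected to refuse 2049, which matches the "Connection refused" line in the log.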

-

@jmccoy555 please refer to the note about putting the secret in a file.

-

@r1 tried that first (second code box), and in XCP-ng Center (I've moved the error box this time)

-

Odd, there is no mount.ceph, and I've just rerun yum install ceph-common and it has dependency issues this time, so I guess that's not a great start!

    [16:50 xcp-ng-bad-3 /]# yum install centos-release-ceph-luminous --enablerepo=extras
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
     * extras: mirror.mhd.uk.as44574.net
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-base: mirrors.xcp-ng.org
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-updates: mirrors.xcp-ng.org
    Package centos-release-ceph-luminous-1.1-2.el7.centos.noarch already installed and latest version
    Nothing to do
    [16:50 xcp-ng-bad-3 /]# yum install ceph-common
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-base: mirrors.xcp-ng.org
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-updates: mirrors.xcp-ng.org
    Resolving Dependencies
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-rgw = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-rbd = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-rados = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-cephfs = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: librbd1 = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: librados2 = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libcephfs2 = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-prettytable for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: librbd.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libradosstriper.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libleveldb.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libfuse.so.2()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libcephfs.so.2()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libceph-common.so.0()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libbabeltrace.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libbabeltrace-ctf.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    ---> Package fuse-libs.x86_64 0:2.9.2-10.el7 will be installed
    ---> Package leveldb.x86_64 0:1.12.0-5.el7.1 will be installed
    ---> Package libbabeltrace.x86_64 0:1.2.4-3.1.el7 will be installed
    ---> Package libcephfs2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libcephfs2-12.2.11-0.el7.x86_64
    ---> Package librados2.x86_64 1:0.94.5-2.el7 will be updated
    ---> Package librados2.x86_64 2:12.2.11-0.el7 will be an update
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: liblttng-ust.so.0()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    ---> Package libradosstriper1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libradosstriper1-12.2.11-0.el7.x86_64
    ---> Package librbd1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librbd1-12.2.11-0.el7.x86_64
    ---> Package python-cephfs.x86_64 2:12.2.11-0.el7 will be installed
    ---> Package python-prettytable.noarch 0:0.7.2-1.el7 will be installed
    ---> Package python-rados.x86_64 2:12.2.11-0.el7 will be installed
    ---> Package python-rbd.x86_64 2:12.2.11-0.el7 will be installed
    ---> Package python-rgw.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: librgw2 = 2:12.2.11-0.el7 for package: 2:python-rgw-12.2.11-0.el7.x86_64
    --> Processing Dependency: librgw.so.2()(64bit) for package: 2:python-rgw-12.2.11-0.el7.x86_64
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    ---> Package libcephfs2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libcephfs2-12.2.11-0.el7.x86_64
    ---> Package librados2.x86_64 2:12.2.11-0.el7 will be an update
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    ---> Package libradosstriper1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libradosstriper1-12.2.11-0.el7.x86_64
    ---> Package librbd1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librbd1-12.2.11-0.el7.x86_64
    ---> Package librgw2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librgw2-12.2.11-0.el7.x86_64
    ---> Package lttng-ust.x86_64 0:2.10.0-1.el7 will be installed
    --> Processing Dependency: liburcu-cds.so.6()(64bit) for package: lttng-ust-2.10.0-1.el7.x86_64
    --> Processing Dependency: liburcu-bp.so.6()(64bit) for package: lttng-ust-2.10.0-1.el7.x86_64
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    ---> Package libcephfs2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libcephfs2-12.2.11-0.el7.x86_64
    ---> Package librados2.x86_64 2:12.2.11-0.el7 will be an update
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    ---> Package libradosstriper1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libradosstriper1-12.2.11-0.el7.x86_64
    ---> Package librbd1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librbd1-12.2.11-0.el7.x86_64
    ---> Package librgw2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librgw2-12.2.11-0.el7.x86_64
    ---> Package userspace-rcu.x86_64 0:0.10.0-3.el7 will be installed
    --> Finished Dependency Resolution
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: python-requests
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1(IBVERBS_1.0)(64bit)
    Error: Package: 2:libcephfs2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:librbd1-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1(IBVERBS_1.1)(64bit)
    Error: Package: 2:librados2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1(IBVERBS_1.0)(64bit)
    Error: Package: 2:librados2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:librados2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1(IBVERBS_1.1)(64bit)
    Error: Package: 2:libradosstriper1-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:librgw2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
           Requires: libtcmalloc.so.4()(64bit)
     You could try using --skip-broken to work around the problem
     You could try running: rpm -Va --nofiles --nodigest
    [16:51 xcp-ng-bad-3 /]#

-

Not sure if it's the best idea, but this did the trick:

    yum install ceph-common --enablerepo=base

-

Oh ok.. I know why...

mount.ceph came in the Nautilus release. I have edited the post to install Nautilus.

Unlike RBD, CephFS gives a browsable datastore plus thin disks, and it is super easy to scale up or down in capacity, with the change reflected immediately. I'll spend some time later to implement "Scan" and have a proper SR driver.

Thanks for testing, and feel free to raise any further issues you come across.

-

Hi @r1 I've only had a quick play so far but it appears to work quite well.

I've shut down a Ceph MDS node, and it fails over to one of the other nodes and keeps working away.

I am running my Ceph cluster in VMs (at the moment one per host with the second SATA controller on the motherboard passed-through) so have shut down all nodes, rebooted my XCP-ng server, booted up the Ceph cluster and was able to reconnect the Ceph SR, so that's all good.

Unfortunately, at the moment the patch breaks reconnecting the NFS SR, I think because the scan function is broken, but reverting with

    yum reinstall sm

fixes that. So it's not a big issue, but it is going to make things a bit more difficult after a host reboot until that is sorted.

-

@jmccoy555 said in CEPH FS Storage Driver:

Unfortunately at the moment the patch breaks reconnecting the NFS SR,

It was not intended to. I'll recheck this.

-

@r1 ok, I'm only saying this as it didn't reconnect (the NFS server was up prior to boot) and if you try to add a new one the Scan button returns an error.