CEPH FS Storage Driver

-

Notice: This is experimental and will be overwritten by any update to the `sm` package.

Ok, so here is an updated patch for XCP-ng 8.1.

    # cd /
    # wget "https://gist.githubusercontent.com/rushikeshjadhav/ea8a6e15c3b5e7f6e61fe0cb873173d2/raw/85acc83ec95cba5db7674572b79c403106b48a48/ceph-8.1.patch"
    # patch -p0 < ceph-8.1.patch

Apart from the patch, you need to install `ceph-common` to obtain `mount.ceph`:

    # yum install centos-release-ceph-nautilus --enablerepo=extras
    # yum install ceph-common

There is no need for `ceph.ko`, as CephFS is now part of the kernel in 8.1.

You can refer to the NFS SR (Ceph) creation screenshot in the earlier post, or use the following:

    # xe sr-create type=nfs device-config:server=10.10.10.10 device-config:serverpath=/ device-config:options=name=admin,secretfile=/etc/ceph/admin.secret name-label=CephFS

To remove this and restore the normal state:

    # yum reinstall sm

Note: Keep the secret in `/etc/ceph/admin.secret` with permission 600.

Edit: Hope to get this into a proper CephSR someday. Until then it must be considered Proof of Concept only.
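The note about keeping the secret in a file with permission 600 could be scripted roughly as follows. This is only a sketch: `write_ceph_secret` is a hypothetical helper name, and it assumes the file should contain nothing but the key itself, which is what the `secretfile=` mount option expects.

```shell
#!/bin/sh
# Hypothetical helper: write a CephX key to a secret file that only its owner can read.
# Usage: write_ceph_secret <path> <key>
write_ceph_secret() {
    secret_path=$1
    secret_key=$2
    # Tighten the umask in a subshell so a new file is never created world-readable.
    (
        umask 077
        printf '%s\n' "$secret_key" > "$secret_path"
    )
    # Enforce mode 600 even if the file already existed with looser permissions.
    chmod 600 "$secret_path"
}
```

On a host it might be called as `write_ceph_secret /etc/ceph/admin.secret "<key>"`, with the key taken from your own cluster.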

Changed ceph release repo to nautilus.

-

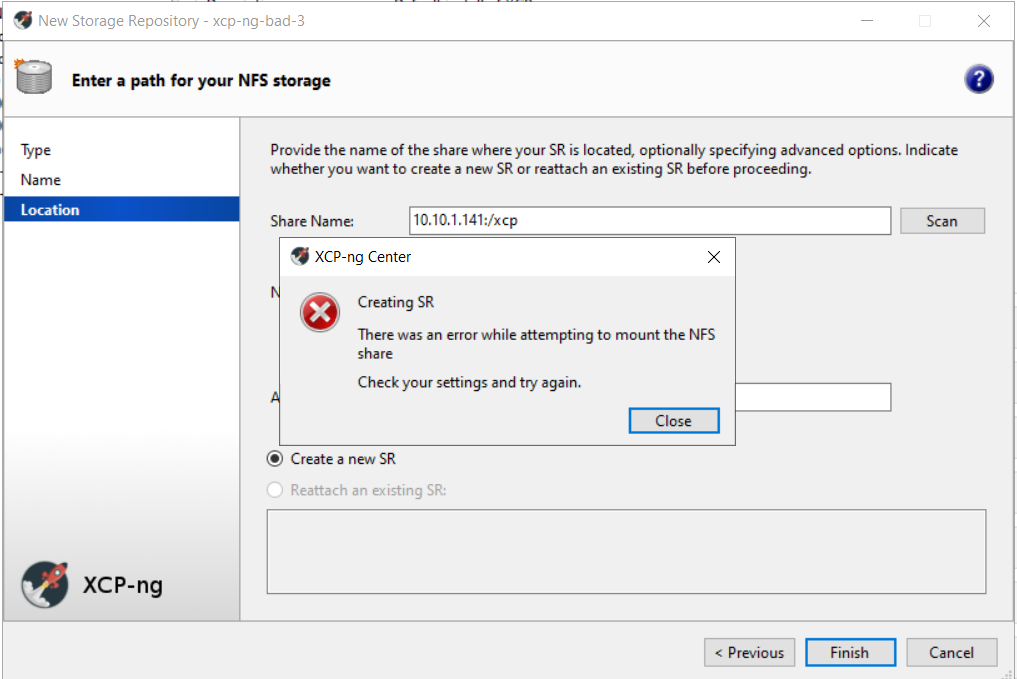

@r1 Hi, just given this a try, but with no luck....

Manual mount works and I can see files in /mnt/cephfs

    mount -t ceph 10.10.1.141:/xcp /mnt/cephfs -o name=admin,secret=AQDZVn9eKD4hBBAAvf5GGWcRFQAZ1eqVPDynOQ==

Trying as per the instructions.....

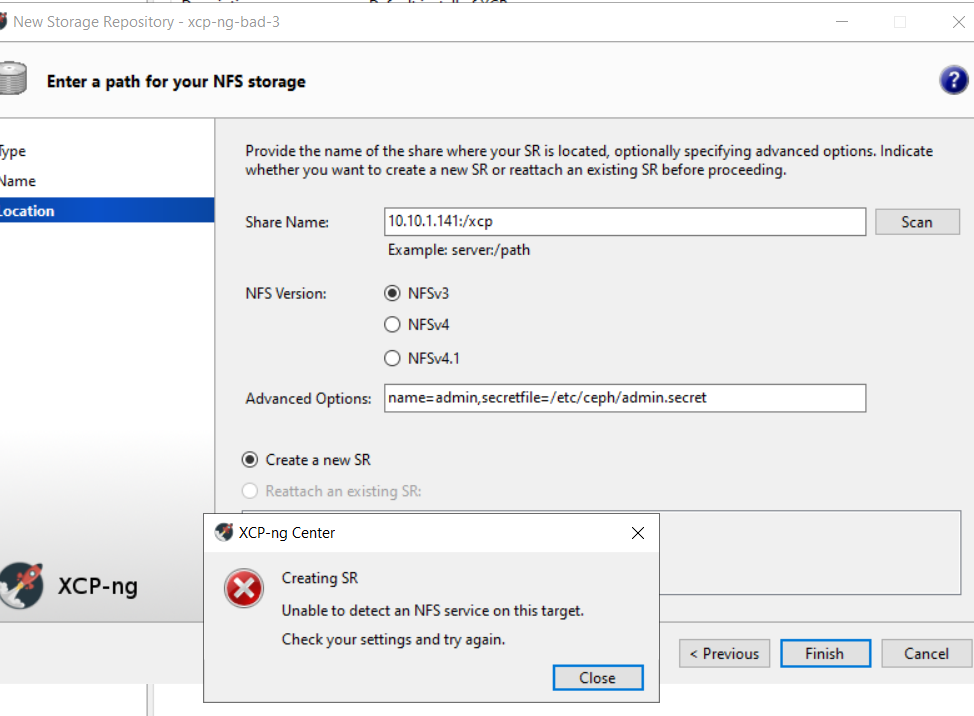

    xe sr-create type=nfs device-config:server=10.10.1.141 device-config:serverpath=/xcp device-config:options=name=admin,secretfile=/etc/ceph/admin.secret name-label=CephFS
    Error code: SR_BACKEND_FAILURE_73
    Error parameters: , NFS mount error [opterr=mount failed with return code 2],

And without the secret file just to check that wasn't the issue....

    xe sr-create type=nfs device-config:server=10.10.1.141 device-config:serverpath=/xcp device-config:options=name=admin,secret=AQDZVn9eKD4hBBAAvf5GGWcRFQAZ1eqVPDynOQ== name-label=CephFS
    Error code: SR_BACKEND_FAILURE_108
    Error parameters: , Unable to detect an NFS service on this target.,

Last round in the log file:

    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] lock: opening lock file /var/lock/sm/94fa22bb-e9a9-45b2-864a-392117b17891/sr
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] lock: acquired /var/lock/sm/94fa22bb-e9a9-45b2-864a-392117b17891/sr
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] sr_create {'sr_uuid': '94fa22bb-e9a9-45b2-864a-392117b17891', 'subtask_of': 'DummyRef:|b72aac42-1790-40da-ba29-ed43a6554094|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:c0ae3b47-b170-4d48-9397-3c973872a8b2', 'session_ref': 'OpaqueRef:85070c69-888a-4827-8d19-ffd53944aa16', 'device_config': {'server': '10.10.1.141', 'SRmaster': 'true', 'serverpath': '/xcp', 'options': 'name=admin,secretfile=/etc/ceph/admin.secret'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:f775fe87-0d82-4029-a268-dd2cdf4feb27'}
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] _testHost: Testing host/port: 10.10.1.141,2049
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] _testHost: Connect failed after 2 seconds (10.10.1.141) - [Errno 111] Connection refused
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] Raising exception [108, Unable to detect an NFS service on this target.]
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] lock: released /var/lock/sm/94fa22bb-e9a9-45b2-864a-392117b17891/sr
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] ***** generic exception: sr_create: EXCEPTION <class 'SR.SROSError'>, Unable to detect an NFS service on this target.
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return self._run_locked(sr)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] rv = self._run(sr, target)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/NFSSR", line 198, in create
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] util._testHost(self.dconf['server'], NFSPORT, 'NFSTarget')
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/util.py", line 915, in _testHost
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] raise xs_errors.XenError(errstring)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476]
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] ***** NFS VHD: EXCEPTION <class 'SR.SROSError'>, Unable to detect an NFS service on this target.
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 372, in run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] ret = cmd.run(sr)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return self._run_locked(sr)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] rv = self._run(sr, target)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/NFSSR", line 198, in create
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] util._testHost(self.dconf['server'], NFSPORT, 'NFSTarget')
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] File "/opt/xensource/sm/util.py", line 915, in _testHost
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476] raise xs_errors.XenError(errstring)
    Apr 1 15:05:11 xcp-ng-bad-3 SM: [25476]

Is it still looking for a real NFS server?

Edit: it's logging port 2049, not 6789?
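To see at a glance which host and port the SM driver actually probed, the `_testHost` lines can be pulled out of the log. A minimal sketch, assuming the log line format shown in this thread; `probed_targets` is a hypothetical helper name.

```shell
# Hypothetical helper: list the unique host,port pairs that the SM driver probed,
# reading SMlog-style lines from stdin.
probed_targets() {
    # Keep only the token that follows "_testHost: Testing host/port: ".
    sed -n 's/.*_testHost: Testing host\/port: \([^ ]*\).*/\1/p' | sort -u
}
```

It would typically be run as `probed_targets < /var/log/SMlog`. A result ending in `,2049` means the driver is still testing the NFS port rather than the Ceph monitor port.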

-

@jmccoy555 please refer to the note about putting the secret in a file.

-

@r1 tried that first (second code box), and in XCP-ng Center (I've moved the error box this time)

-

-

Odd, there's no `mount.ceph`, and I've just rerun `yum install ceph-common` and it's got dependency issues this time, so I guess that's not a great start!

    [16:50 xcp-ng-bad-3 /]# yum install centos-release-ceph-luminous --enablerepo=extras
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
     * extras: mirror.mhd.uk.as44574.net
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-base: mirrors.xcp-ng.org
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-updates: mirrors.xcp-ng.org
    Package centos-release-ceph-luminous-1.1-2.el7.centos.noarch already installed and latest version
    Nothing to do
    [16:50 xcp-ng-bad-3 /]# yum install ceph-common
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-base: mirrors.xcp-ng.org
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-updates: mirrors.xcp-ng.org
    Resolving Dependencies
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-rgw = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-rbd = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-rados = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-cephfs = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: librbd1 = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: librados2 = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libcephfs2 = 2:12.2.11-0.el7 for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: python-prettytable for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: librbd.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libradosstriper.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libleveldb.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libfuse.so.2()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libcephfs.so.2()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libceph-common.so.0()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libbabeltrace.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libbabeltrace-ctf.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    ---> Package fuse-libs.x86_64 0:2.9.2-10.el7 will be installed
    ---> Package leveldb.x86_64 0:1.12.0-5.el7.1 will be installed
    ---> Package libbabeltrace.x86_64 0:1.2.4-3.1.el7 will be installed
    ---> Package libcephfs2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libcephfs2-12.2.11-0.el7.x86_64
    ---> Package librados2.x86_64 1:0.94.5-2.el7 will be updated
    ---> Package librados2.x86_64 2:12.2.11-0.el7 will be an update
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: liblttng-ust.so.0()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    ---> Package libradosstriper1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libradosstriper1-12.2.11-0.el7.x86_64
    ---> Package librbd1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librbd1-12.2.11-0.el7.x86_64
    ---> Package python-cephfs.x86_64 2:12.2.11-0.el7 will be installed
    ---> Package python-prettytable.noarch 0:0.7.2-1.el7 will be installed
    ---> Package python-rados.x86_64 2:12.2.11-0.el7 will be installed
    ---> Package python-rbd.x86_64 2:12.2.11-0.el7 will be installed
    ---> Package python-rgw.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: librgw2 = 2:12.2.11-0.el7 for package: 2:python-rgw-12.2.11-0.el7.x86_64
    --> Processing Dependency: librgw.so.2()(64bit) for package: 2:python-rgw-12.2.11-0.el7.x86_64
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    ---> Package libcephfs2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libcephfs2-12.2.11-0.el7.x86_64
    ---> Package librados2.x86_64 2:12.2.11-0.el7 will be an update
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    ---> Package libradosstriper1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libradosstriper1-12.2.11-0.el7.x86_64
    ---> Package librbd1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librbd1-12.2.11-0.el7.x86_64
    ---> Package librgw2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librgw2-12.2.11-0.el7.x86_64
    ---> Package lttng-ust.x86_64 0:2.10.0-1.el7 will be installed
    --> Processing Dependency: liburcu-cds.so.6()(64bit) for package: lttng-ust-2.10.0-1.el7.x86_64
    --> Processing Dependency: liburcu-bp.so.6()(64bit) for package: lttng-ust-2.10.0-1.el7.x86_64
    --> Running transaction check
    ---> Package ceph-common.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: python-requests for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libtcmalloc.so.4()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:ceph-common-12.2.11-0.el7.x86_64
    ---> Package libcephfs2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libcephfs2-12.2.11-0.el7.x86_64
    ---> Package librados2.x86_64 2:12.2.11-0.el7 will be an update
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.1)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1(IBVERBS_1.0)(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librados2-12.2.11-0.el7.x86_64
    ---> Package libradosstriper1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:libradosstriper1-12.2.11-0.el7.x86_64
    ---> Package librbd1.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librbd1-12.2.11-0.el7.x86_64
    ---> Package librgw2.x86_64 2:12.2.11-0.el7 will be installed
    --> Processing Dependency: libibverbs.so.1()(64bit) for package: 2:librgw2-12.2.11-0.el7.x86_64
    ---> Package userspace-rcu.x86_64 0:0.10.0-3.el7 will be installed
    --> Finished Dependency Resolution
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: python-requests
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1(IBVERBS_1.0)(64bit)
    Error: Package: 2:libcephfs2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:librbd1-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1(IBVERBS_1.1)(64bit)
    Error: Package: 2:librados2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1(IBVERBS_1.0)(64bit)
    Error: Package: 2:librados2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:librados2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1(IBVERBS_1.1)(64bit)
    Error: Package: 2:libradosstriper1-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:librgw2-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libibverbs.so.1()(64bit)
    Error: Package: 2:ceph-common-12.2.11-0.el7.x86_64 (centos-ceph-luminous)
               Requires: libtcmalloc.so.4()(64bit)
     You could try using --skip-broken to work around the problem
     You could try running: rpm -Va --nofiles --nodigest
    [16:51 xcp-ng-bad-3 /]#

-

Not sure if it's the best idea, but this did the trick:

    yum install ceph-common --enablerepo=base

-

Oh ok.. I know why...

`mount.ceph` came in with the nautilus release. I have edited the post to install nautilus.

Unlike RBD, CephFS gives a browsable datastore plus thin disks, and is super easy to scale up or down in capacity, with the change reflected immediately. I'll spend some time later to implement "Scan" and have a proper SR driver.

Thanks for testing and feel free to raise if you come across further issues.
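Since the SR creation depends on the `mount.ceph` helper being installed, a quick pre-flight check before running `xe sr-create` can save a confusing backend error. A small sketch; `have_mount_helper` is a hypothetical name, and it assumes the helper directory (usually an `sbin` directory) is on the `PATH` of the shell you run it from.

```shell
# Hypothetical pre-flight check: succeed only if the named mount helper is on PATH.
have_mount_helper() {
    command -v "$1" >/dev/null 2>&1
}

if have_mount_helper mount.ceph; then
    echo "mount.ceph found"
else
    echo "mount.ceph missing: install ceph-common first"
fi
```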

-

Hi @r1 I've only had a quick play so far but it appears to work quite well.

I've shut down a Ceph MDS node and it fails over to one of the other nodes and keeps working away.

I am running my Ceph cluster in VMs (at the moment one per host with the second SATA controller on the motherboard passed-through) so have shut down all nodes, rebooted my XCP-ng server, booted up the Ceph cluster and was able to reconnect the Ceph SR, so that's all good.

Unfortunately, at the moment the patch breaks reconnecting the NFS SR (I think because the scan function is broken?), but reverting with `yum reinstall sm` fixes that. So it's not a big issue, but it is going to make things a bit more difficult after a host reboot until that is sorted.

-

@jmccoy555 said in CEPH FS Storage Driver:

Unfortunately at the moment the patch breaks reconnecting the NFS SR,

It was not intended to. I'll recheck this.

-

@r1 ok, I'm only saying this as it didn't reconnect (the NFS server was up prior to boot) and if you try to add a new one the Scan button returns an error.

-

Hi @r1 Just tried this on my pool of two servers, but no luck. Should it work? I've verified the same command on a host not in a pool and it works fine.

    [14:20 xcp-ng-bad-1 /]# xe sr-create type=nfs device-config:server=10.10.1.141,10.10.1.142,10.10.1.143 device-config:serverpath=/xcp device-config:options=name=xcp,secretfile=/etc/ceph/xcp.secret name-label=CephFS
    Error: Required parameter not found: host-uuid
    [14:20 xcp-ng-bad-1 /]# xe sr-create type=nfs device-config:server=10.10.1.141,10.10.1.142,10.10.1.143 device-config:serverpath=/xcp device-config:options=name=xcp,secretfile=/etc/ceph/xcp.secret name-label=CephFS host-uuid=c6977e4e-972f-4dcc-a71f-42120b51eacf
    Error code: SR_BACKEND_FAILURE_140
    Error parameters: , Incorrect DNS name, unable to resolve.,

I've verified `mount.ceph` works, and I can manually mount with the `mount` command.

`/var/log/SMlog`:

    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] lock: opening lock file /var/lock/sm/fa472dc0-f80b-b667-99d8-0b36cb01c5d4/sr
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] lock: acquired /var/lock/sm/fa472dc0-f80b-b667-99d8-0b36cb01c5d4/sr
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] sr_create {'sr_uuid': 'fa472dc0-f80b-b667-99d8-0b36cb01c5d4', 'subtask_of': 'DummyRef:|cf1a6d4a-fca9-410e-8307-88e3421bff4e|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:5527aabc-8bd0-416e-88bf-b6a0cb2b72b1', 'session_ref': 'OpaqueRef:49495fa6-ec85-4340-b59d-ee1f037c0bb7', 'device_config': {'server': '10.10.1.141', 'SRmaster': 'true', 'serverpath': '/xcp', 'options': 'name=xcp,secretfile=/etc/ceph/xcp.secret'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:f57d72be-8465-4a79-87c4-84a34c93baac'}
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] _testHost: Testing host/port: 10.10.1.141,2049
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] _testHost: Connect failed after 2 seconds (10.10.1.141) - [Errno 111] Connection refused
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] Raising exception [108, Unable to detect an NFS service on this target.]
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] lock: released /var/lock/sm/fa472dc0-f80b-b667-99d8-0b36cb01c5d4/sr
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] ***** generic exception: sr_create: EXCEPTION <class 'SR.SROSError'>, Unable to detect an NFS service on this target.
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] return self._run_locked(sr)
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] rv = self._run(sr, target)
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/NFSSR", line 198, in create
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] util._testHost(self.dconf['server'], NFSPORT, 'NFSTarget')
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/util.py", line 915, in _testHost
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] raise xs_errors.XenError(errstring)
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369]
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] ***** NFS VHD: EXCEPTION <class 'SR.SROSError'>, Unable to detect an NFS service on this target.
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/SRCommand.py", line 372, in run
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] ret = cmd.run(sr)
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] return self._run_locked(sr)
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] rv = self._run(sr, target)
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/NFSSR", line 198, in create
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] util._testHost(self.dconf['server'], NFSPORT, 'NFSTarget')
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] File "/opt/xensource/sm/util.py", line 915, in _testHost
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369] raise xs_errors.XenError(errstring)
    Apr 4 14:32:02 xcp-ng-bad-1 SM: [25369]

-

@jmccoy555 said in CEPH FS Storage Driver:

device-config:server=10.10.1.141,10.10.1.142,10.10.1.143

Are you sure that's supposed to work?

Try using only one IP address and see if the command works as intended.

-

@r1 said in CEPH FS Storage Driver:

while for CEPHSR we can use #mount.ceph addr1,addr2,addr3,addr4:remotepath localpath

Yep, as far as I know that is how you configure the failover, and as I said it works (with one or more IPs) from a host not in a pool.

P.S. Yes, I also tried with one IP just to be sure.
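The `addr1,addr2,addr3:remotepath` form quoted above can be assembled from a list of monitor addresses. A minimal sketch; `ceph_mount_source` is a hypothetical helper name, and the addresses and path are the ones used in this thread.

```shell
# Hypothetical helper: join monitor addresses into the "addr1,addr2:path" source
# string that mount.ceph accepts. Usage: ceph_mount_source <remote_path> <mon>...
ceph_mount_source() {
    remote_path=$1
    shift
    mons=""
    for mon in "$@"; do
        # Prefix a comma only when the list already has an entry.
        mons="${mons:+$mons,}$mon"
    done
    printf '%s:%s\n' "$mons" "$remote_path"
}

ceph_mount_source /xcp 10.10.1.141 10.10.1.142 10.10.1.143
```

The printed string could then be passed to a manual mount, for example `mount -t ceph "$(ceph_mount_source /xcp 10.10.1.141 10.10.1.142)" /mnt/cephfs -o name=xcp,secretfile=/etc/ceph/xcp.secret`, to confirm failover works outside the SR driver.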

-

@jmccoy555 Can you try the latest patch?

Before applying it, restore to the normal state:

    # yum reinstall sm

    # cd /
    # wget "https://gist.githubusercontent.com/rushikeshjadhav/ea8a6e15c3b5e7f6e61fe0cb873173d2/raw/dabe5c915b30a0efc932cab169ebe94c17d8c1ca/ceph-8.1.patch"
    # patch -p0 < ceph-8.1.patch
    # yum install centos-release-ceph-nautilus --enablerepo=extras
    # yum install ceph-common

Note: Keep the secret in `/etc/ceph/admin.secret` with permission 600.

To handle the NFS port conflict, specifying the port is mandatory, e.g. `device-config:serverport=6789`.

Ceph example:

    # xe sr-create type=nfs device-config:server=10.10.10.10,10.10.10.26 device-config:serverpath=/ device-config:serverport=6789 device-config:options=name=admin,secretfile=/etc/ceph/admin.secret name-label=Ceph

NFS example:

    # xe sr-create type=nfs device-config:server=10.10.10.5 device-config:serverpath=/root/nfs name-label=NFS

-

@r1 Tried from my pool....

    [14:38 xcp-ng-bad-1 /]# xe sr-create type=nfs device-config:server=10.10.1.141,10.10.1.142,10.10.1.143 device-config:serverpath=/xcp device-config:serverport=6789 device-config:options=name=xcp,secretfile=/etc/ceph/xcp.secret name-label=CephFS host-uuid=c6977e4e-972f-4dcc-a71f-42120b51eacf
    Error code: SR_BACKEND_FAILURE_1200
    Error parameters: , not all arguments converted during string formatting,

    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] lock: opening lock file /var/lock/sm/a6e19bdc-0831-4d87-087d-86fca8cfb6fd/sr
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] lock: acquired /var/lock/sm/a6e19bdc-0831-4d87-087d-86fca8cfb6fd/sr
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] sr_create {'sr_uuid': 'a6e19bdc-0831-4d87-087d-86fca8cfb6fd', 'subtask_of': 'DummyRef:|572cd61e-b30c-48cb-934f-d597218facc0|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:5527aabc-8bd0-416e-88bf-b6a0cb2b72b1', 'session_ref': 'OpaqueRef:e83f61b2-b546-4f22-b14f-31b5d5e7ae4f', 'device_config': {'server': '10.10.1.141,10.10.1.142,10.10.1.143', 'serverpath': '/xcp', 'SRmaster': 'true', 'serverport': '6789', 'options': 'name=xcp,secretfile=/etc/ceph/xcp.secret'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:f77a27d8-d427-4c68-ab26-059c1c576c30'}
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] _testHost: Testing host/port: 10.10.1.141,6789
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] ['/usr/sbin/rpcinfo', '-p', '10.10.1.141']
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] FAILED in util.pread: (rc 1) stdout: '', stderr: 'rpcinfo: can't contact portmapper: RPC: Remote system error - Connection refused
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] '
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] Unable to obtain list of valid nfs versions
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] lock: released /var/lock/sm/a6e19bdc-0831-4d87-087d-86fca8cfb6fd/sr
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] ***** generic exception: sr_create: EXCEPTION <type 'exceptions.TypeError'>, not all arguments converted during string formatting
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] return self._run_locked(sr)
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] rv = self._run(sr, target)
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/NFSSR", line 222, in create
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] raise exn
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906]
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] ***** NFS VHD: EXCEPTION <type 'exceptions.TypeError'>, not all arguments converted during string formatting
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/SRCommand.py", line 372, in run
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] ret = cmd.run(sr)
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/SRCommand.py", line 110, in run
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] return self._run_locked(sr)
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] rv = self._run(sr, target)
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] File "/opt/xensource/sm/NFSSR", line 222, in create
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906] raise exn
    Apr 6 14:38:26 xcp-ng-bad-1 SM: [8906]

Will try from my standalone host with a reboot later to see if the NFS reconnect issue has gone.

-

@jmccoy555 I have the `rpcbind` service running. Can you check on your Ceph node?

-

@r1 yep, that's it, `rpcbind` is needed. I have a very minimal Debian 10 VM hosting my Ceph (dockers), as is now the way with Octopus.

Also had to swap the `host-uuid=` with `shared=true` for it to connect to all hosts within the pool (might be useful for the notes).

Will test and also check that everything is good after a reboot and report back.
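Putting the pieces from this exchange together (monitor list, `serverport=6789`, and `shared=true` in place of `host-uuid=`), the pool-wide command could be generated as below. This is a dry-run sketch only: `print_sr_create` is a hypothetical helper that prints the command rather than running it, and the values are the ones posted above.

```shell
# Hypothetical dry-run helper: print (do not run) the sr-create command for the
# patched NFS driver on a pool.
# Usage: print_sr_create <servers> <path> <ceph_user> <secretfile> <label>
print_sr_create() {
    printf 'xe sr-create type=nfs shared=true'
    printf ' device-config:server=%s' "$1"
    printf ' device-config:serverpath=%s' "$2"
    # 6789 is the Ceph monitor port used throughout this thread.
    printf ' device-config:serverport=6789'
    printf ' device-config:options=name=%s,secretfile=%s' "$3" "$4"
    printf ' name-label=%s\n' "$5"
}

print_sr_create 10.10.1.141,10.10.1.142,10.10.1.143 /xcp xcp /etc/ceph/xcp.secret CephFS
```

Printing first makes it easy to review the arguments before pasting the command on the pool master. Remember that the secret file has to exist on every host in the pool.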

-

Just to report back..... So far so good. I've moved over a few VDIs and not had any problems.

I've rebooted hosts and Ceph nodes and all is good.

NFS is also all good now.

Hope this gets merged soon so I don't have to worry about updates.

On a side note, I've also set up two pools, one of SSDs and one of HDDs using File Layouts to assign different directories (VM SRs) to different pools.
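For anyone wanting to try the File Layouts idea, CephFS assigns a directory to a data pool through the `ceph.dir.layout.pool` extended attribute, set with `setfattr`. The sketch below only prints the commands. `print_layout_cmd`, the pool names, and the directory paths are all assumptions for illustration, not the setup used in this thread, and each pool must already have been added to the filesystem as a data pool.

```shell
# Hypothetical dry-run helper: print the command that pins a CephFS directory
# to a data pool via its layout attribute. Usage: print_layout_cmd <pool> <dir>
print_layout_cmd() {
    printf 'setfattr -n ceph.dir.layout.pool -v %s %s\n' "$1" "$2"
}

# Example pool and directory names are assumptions, not taken from the thread.
print_layout_cmd cephfs_ssd /mnt/cephfs/sr-ssd
print_layout_cmd cephfs_hdd /mnt/cephfs/sr-hdd
```

New files created under each directory then land in the matching pool, so each directory can back a separate SR.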

-

@jmccoy555 glad to know. I don't have much knowledge on File Layouts but that looks good.

The NFS edits won't be merged, as that was just for the POC. I'm working on a dedicated CephFS SR driver which hopefully won't be impacted by `sm` or other upgrades. Keep watching this space.