Kubernetes cluster recipes not seeing nodes

-

Hi,

This error can occur when something went wrong during the installation.

Is there a config file located in "$HOME/.kube/"?

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

Is there a config file located in "$HOME/.kube/"?

I do have a config file on the Kubernetes master node, in `/home/debian/.kube/config`, but not on any of the worker nodes. Is that normal? Should I copy the config file to the nodes?

-

There is no need to copy this file into the worker nodes.

Were you able to see or capture any error message during the installation?

-

@GabrielG the installation took a very long time, so I left it. When I came back, only the master was up and running. The nodes were down, so I powered them up manually.

-

@GabrielG Do you have any suggestion on how to fix the cluster?

-

It's hard to say without knowing what went wrong during the installation.

First, I would check whether the config file `/home/debian/.kube/config` is the same as `/etc/kubernetes/admin.conf`, and whether `debian` is correctly assigned as the owner of the file.

-
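
The check suggested above can be sketched as a small shell function. This is only an illustration, not part of the recipe: the function name `check_kubeconfig` is made up here, and it assumes GNU `stat` (as shipped with Debian).

```shell
# Sketch: compare a user's kubeconfig with the cluster admin config and
# check who owns the user's copy. Paths and user name are arguments;
# nothing is hard-coded. check_kubeconfig is a hypothetical helper name.
check_kubeconfig() {
    user_cfg=$1    # e.g. /home/debian/.kube/config
    admin_cfg=$2   # e.g. /etc/kubernetes/admin.conf
    owner=$3       # e.g. debian

    # cmp -s prints nothing and exits 0 only when both files are byte-identical.
    if cmp -s "$user_cfg" "$admin_cfg"; then
        echo "content: identical"
    else
        echo "content: DIFFERENT (or a file could not be read)"
        return 1
    fi

    # stat -c %U prints the owning user name (GNU coreutils syntax).
    actual=$(stat -c %U "$user_cfg")
    if [ "$actual" = "$owner" ]; then
        echo "owner: $actual (ok)"
    else
        echo "owner: $actual (expected $owner)"
        return 1
    fi
}
```

Since `admin.conf` is readable only by root, the function would have to be defined and run in a root shell, for example `check_kubeconfig /home/debian/.kube/config /etc/kubernetes/admin.conf debian`.

-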

@GabrielG the file contents are identical but the file ownership is different.

`admin.conf` is owned by root and not 'debian'. Should it be debian?

    debian@master:~/.kube$ pwd
    /home/debian/.kube
    debian@master:~/.kube$ ls -la
    total 20
    drwxr-xr-x 3 root   root   4096 Mar 21 13:36 .
    drwxr-xr-x 4 debian debian 4096 Mar 21 13:36 ..
    drwxr-x--- 4 root   root   4096 Mar 21 13:36 cache
    -rw------- 1 debian debian 5638 Mar 21 13:36 config

    debian@master:/etc/kubernetes$ pwd
    /etc/kubernetes
    debian@master:/etc/kubernetes$ ls -la
    total 44
    drwxr-xr-x  4 root root 4096 Mar 21 13:36 .
    drwxr-xr-x 77 root root 4096 Mar 27 04:07 ..
    -rw-------  1 root root 5638 Mar 21 13:36 admin.conf
    -rw-------  1 root root 5674 Mar 21 13:36 controller-manager.conf
    -rw-------  1 root root 1962 Mar 21 13:36 kubelet.conf
    drwxr-xr-x  2 root root 4096 Mar 21 13:36 manifests
    drwxr-xr-x  3 root root 4096 Mar 21 13:36 pki
    -rw-------  1 root root 5622 Mar 21 13:36 scheduler.conf

-

@fred974 said in Kubernetes cluster recipes not seeing nodes:

Should it be debian?

No, only `/home/debian/.kube/config` is meant to be owned by the `debian` user.

Are you using kubectl with the `debian` user or with the `root` user?

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

Are you using kubectl with debian user or with the root user?

I was using the root account.

I tried with the debian user and I now get something:

    debian@master:~$ kubectl get nodes
    NAME     STATUS   ROLES           AGE     VERSION
    master   Ready    control-plane   5d23h   v1.26.3
    node-2   Ready    <none>          5d23h   v1.26.3

I have created a cluster with 1x master and 3x nodes. Should the output of the command above return 2 nodes?
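
To see at a glance which workers are missing from a listing like the one above, something along these lines might help. It is a rough sketch: `missing_nodes` is a made-up helper, and the expected names (`node-1`, `node-2`, `node-3`) are an assumption about how the recipe names its workers.

```shell
# Reads `kubectl get nodes --no-headers` output on stdin and prints every
# expected node name (given as arguments) that is absent from the listing
# or whose STATUS is not exactly "Ready".
# missing_nodes is a hypothetical helper, not a kubectl feature.
missing_nodes() {
    listing=$(cat)
    for name in "$@"; do
        # Column 1 is NAME and column 2 is STATUS in kubectl's default output.
        if ! printf '%s\n' "$listing" |
            awk -v n="$name" '$1 == n && $2 == "Ready" { found = 1 } END { exit !found }'
        then
            echo "$name"
        fi
    done
}
```

It could be used as `kubectl get nodes --no-headers | missing_nodes node-1 node-2 node-3`, which prints one line per worker that has not joined or is not Ready.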

-

Yes, you should have something like that:

    debian@master:~$ kubectl get nodes
    NAME     STATUS   ROLES           AGE     VERSION
    master   Ready    control-plane   6m52s   v1.26.3
    node-1   Ready    <none>          115s    v1.26.3
    node-2   Ready    <none>          2m47s   v1.26.3
    node-3   Ready    <none>          2m36s   v1.26.3

Are all the worker node VMs started? What's the output of `kubectl get events`?

-

@GabrielG Sorry for the late reply. Here is what I have.

    debian@master:~$ kubectl get nodes
    NAME     STATUS   ROLES           AGE     VERSION
    master   Ready    control-plane   7d22h   v1.26.3
    node-2   Ready    <none>          7d22h   v1.26.3

and

    debian@master:~$ kubectl get events
    No resources found in default namespace.

-

Thank you.

Are all VMs started?

What's the output of `kubectl get pods --all-namespaces`?

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

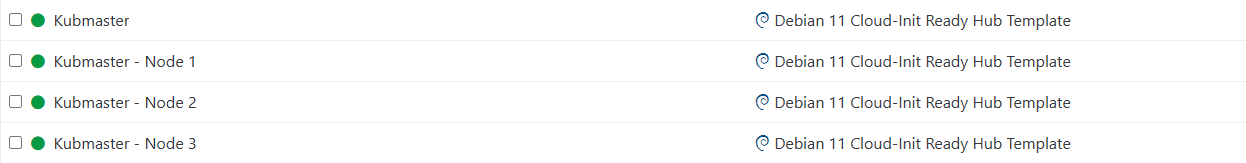

Are all VMs started?

Yes, all the VMs are up and running

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

What's the output of kubectl get pods --all-namespaces?

    debian@master:~$ kubectl get pods --all-namespaces
    NAMESPACE      NAME                             READY   STATUS    RESTARTS        AGE
    kube-flannel   kube-flannel-ds-mj4n6            1/1     Running   2 (3d ago)      8d
    kube-flannel   kube-flannel-ds-vtd2k            1/1     Running   2 (6d19h ago)   8d
    kube-system    coredns-787d4945fb-85867         1/1     Running   2 (6d19h ago)   8d
    kube-system    coredns-787d4945fb-dn96g         1/1     Running   2 (6d19h ago)   8d
    kube-system    etcd-master                      1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-apiserver-master            1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-controller-manager-master   1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-proxy-fmjnv                 1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-proxy-gxsrs                 1/1     Running   2 (3d ago)      8d
    kube-system    kube-scheduler-master            1/1     Running   2 (6d19h ago)   8d

Thank you very much
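
A side note on reading this listing: `kube-proxy` and `kube-flannel-ds` run as DaemonSets, so there is normally one pod of each per registered node. Two of each here is consistent with only two nodes (master and node-2) having joined. A small sketch for counting them, where `count_pods` is a made-up helper name:

```shell
# Reads `kubectl get pods --all-namespaces --no-headers` output on stdin
# and prints how many pods have a NAME (column 2) starting with the prefix
# given as the first argument. count_pods is a hypothetical helper.
count_pods() {
    # index() returns 1 when the prefix matches at the start of the name;
    # "n + 0" makes awk print 0 instead of an empty string for no matches.
    awk -v p="$1" 'index($2, p) == 1 { n++ } END { print n + 0 }'
}
```

For example, `kubectl get pods --all-namespaces --no-headers | count_pods kube-proxy-` would be expected to print 4 on a healthy cluster with 1 master and 3 workers.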

-

@GabrielG Do you think I should delete all the VMs and rerun the deploy recipe? Also, is it normal that I no longer have the option to set a network CIDR like before?

-

You can do that, but it won't help us understand what went wrong during the installation of worker nodes 1 and 3.

Can you show me the output of `sudo cat /var/log/messages` for each node (master and workers)?

Concerning the CIDR, we are now using flannel as the Container Network Interface, which uses a default CIDR (10.244.0.0/16) allocated to the pod network.
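
For the log request above, the full file can be long, so a filter such as the following may make it easier to spot relevant lines before posting. It is only a sketch: `filter_join_log` is a made-up name and the keyword list is a guess at what might matter, not an official list of messages.

```shell
# Reads a syslog-style log on stdin and keeps only lines that mention
# components involved in joining a node, or that look like errors.
# filter_join_log is a hypothetical helper; the keywords are assumptions.
filter_join_log() {
    # -i: case-insensitive, -E: extended regex with alternation.
    # "|| true" keeps the exit status at 0 when nothing matches.
    grep -i -E 'kubelet|kubeadm|flannel|error|fail' || true
}
```

On each node this could be run as `sudo cat /var/log/messages | filter_join_log`.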

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

Can you show me what's the output of sudo cat /var/log/messages for each nodes (master and workers)?

From the master:

    debian@master:~$ sudo cat /var/log/messages
    Mar 26 00:10:18 master rsyslogd: [origin software="rsyslogd" swVersion="8.2102.0" x-pid="572" x-info="https://www.rsyslog.com"] rsyslogd was HUPed

From node1:

https://pastebin.com/xrqPd88V

From node2:

https://pastebin.com/aJch3diH

From node3:

https://pastebin.com/Zc1y42NA

-

Thank you, I'll take a look tomorrow.

Is it the whole output for the master?

-

@GabrielG yes, all of it

-

@GabrielG did you get a chance to look at the logs I provided? Any clues?

-

Hi,

Nothing useful. Maybe you can try to delete the VMs and redeploy the cluster.