NVIDIA Tesla M40 for AI work in Ubuntu VM - is it a good idea?

-

I built an AI VM a little while ago running ollama on Ubuntu 24.04. I have no GPU in that host so I threw 32GB RAM and 32 cores at it and it works, slowly. I knew that a GPU is really the way to go, especially if I want to be able to do image generation. Then today I saw this article: https://blog.briancmoses.com/2024/09/self-hosting-ai-with-spare-parts.html

A GPU for around $90 could be interesting. Any of you guys have a guess at the difficulty of getting an NVIDIA Tesla M40 (https://www.ebay.com/itm/274978990880) to work in an Ubuntu VM in a PowerEdge 730xd host? I see that the card has power requirements but don't know if the supplies in the server take care of that since this card is 10 years old and the server likely is too, and the card is designed for server use - maybe it wouldn't need tweaking.

Am I insane to be considering this?

-

@CodeMercenary Probably not insane if you want to learn using ollama or other LLM frameworks for inference. But the M40 is an ageing GPU with a low compute capability (v5.2), so with time, it might not be supported any more by platforms like ollama, vLLM, llama.cpp or Aphrodite (I did not check whether they all actually support that GPU, but Ollama has support for the M40). I doubt that you would get acceptable performance for stable diffusion (image generation) or training/fine-tuning. But what could you expect for $90?

The card has a power consumption (TDP) of 250W which is compatible with the 16x PCIe slot of riser #2. You have to be extra careful with the cable as it is not a standard cable. While most would suggest power supplies of 1100W for the Dell R730 to be on the safe side, I run two P40s with 750W power supplies in a Dell R720. But I also power limit the card to 140W with little effect on the performance and have light workloads and no batch processing.
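For reference, a power cap like the one described above is normally set with `nvidia-smi`. Here is a minimal sketch, assuming the NVIDIA driver is installed, the card is GPU index 0 and the script runs as root. The helper names are made up for illustration, and 140W is simply the value from this post, not a recommendation for the M40.

```python
import subprocess


def power_limit_commands(gpu_index: int, watts: int) -> list[list[str]]:
    """Build the nvidia-smi calls that cap the power draw of one GPU."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    gpu = str(gpu_index)
    return [
        # Enable persistence mode so the driver stays loaded between jobs.
        ["nvidia-smi", "-i", gpu, "-pm", "1"],
        # Set the power limit in watts (must be within the range the card allows).
        ["nvidia-smi", "-i", gpu, "-pl", str(watts)],
    ]


def apply_power_limit(gpu_index: int = 0, watts: int = 140) -> None:
    """Run the commands; raises CalledProcessError if nvidia-smi rejects one."""
    for cmd in power_limit_commands(gpu_index, watts):
        subprocess.run(cmd, check=True)
```

Calling `apply_power_limit(0, 140)` would apply the cap. The limit does not survive a reboot, so it is usually re-applied at boot, for example from a systemd unit.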

-

@gskger Thanks for the input. I also have dual 750W power supplies and I'd be totally fine limiting the power of the card. I'm not looking for crazy performance, just better than what I get with CPUs and enabling things that are a lot harder to even get working with CPU only, like stable diffusion.

I will absolutely trade performance for less noise and heat in my small server closet. Ideally I'd want the GPU card to consume nothing and need very minimal cooling if I'm not actively running a task in ollama. I can currently hear the fans spool up in that server every time I give ollama a task to run. I don't mind the extra noise when doing something as long as it doesn't permanently make the server more noisy.

I do have concerns about possibly needing external cooling for it as suggested in the article, I'd love to not need active cooling. If limiting the power consumption means that the existing case airflow is sufficient then I'd be happy with that.

-

@CodeMercenary The M40 is a server card and (physically) compatible with the R730, so no extra cooling is required (or possible). The downside is that the R730 will most likely still go full blast on all fans regardless of the actual power consumption, since the server cannot read the GPU's temperature. But there are scripts to manage the fan speeds based on server or GPU temperature. And once you have ollama installed, you can ask it how to write that code.
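To give an idea of what such a fan script looks like, here is a rough sketch. It assumes `nvidia-smi` and `ipmitool` are installed, and it uses the `raw 0x30 0x30 ...` byte sequences that are widely shared in the community for Dell iDRAC 7/8. Those are not officially documented, so treat them as an assumption and verify them on your own hardware. The temperature curve and all function names are made up for illustration.

```python
import subprocess

# Hypothetical curve: (GPU temperature limit in °C, fan duty in %).
FAN_CURVE = [(40, 15), (55, 25), (65, 40), (75, 60)]
MAX_FAN_PERCENT = 100


def fan_percent_for_temp(temp_c: int) -> int:
    """Return the duty of the first curve step the temperature fits under."""
    for limit_c, percent in FAN_CURVE:
        if temp_c <= limit_c:
            return percent
    return MAX_FAN_PERCENT


def read_gpu_temp(gpu_index: int = 0) -> int:
    """Ask nvidia-smi for the current GPU temperature in °C."""
    result = subprocess.run(
        ["nvidia-smi", "-i", str(gpu_index),
         "--query-gpu=temperature.gpu", "--format=csv,noheader,nounits"],
        check=True, capture_output=True, text=True,
    )
    return int(result.stdout.strip())


def ipmi_fan_commands(percent: int) -> list[list[str]]:
    """Build the (community-documented, unofficial) Dell raw IPMI calls."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return [
        # Assumed: switches the fans from automatic to manual control.
        ["ipmitool", "raw", "0x30", "0x30", "0x01", "0x00"],
        # Assumed: sets all fans (0xff) to the given duty, passed as a hex byte.
        ["ipmitool", "raw", "0x30", "0x30", "0x02", "0xff", f"0x{percent:02x}"],
    ]


def adjust_fans_once(gpu_index: int = 0) -> None:
    """Read the GPU temperature once and set the fans accordingly."""
    percent = fan_percent_for_temp(read_gpu_temp(gpu_index))
    for cmd in ipmi_fan_commands(percent):
        subprocess.run(cmd, check=True)
```

A real script would call `adjust_fans_once()` in a loop or from a timer and hand control back to the iDRAC if anything fails. From inside a VM, the `ipmitool` calls would have to go to the iDRAC over the network instead of the local interface.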

-

@gskger Great to know, thank you.

I actually already have Ollama installed and running with Open WebUI so I can ask it ahead of time to see what it would be like. I've installed some more code specific models that are better suited to that kind of question.

Running the case fans full blast all the time would be a non-starter so I'm glad you let me know that as I count the costs of attempting this.

-

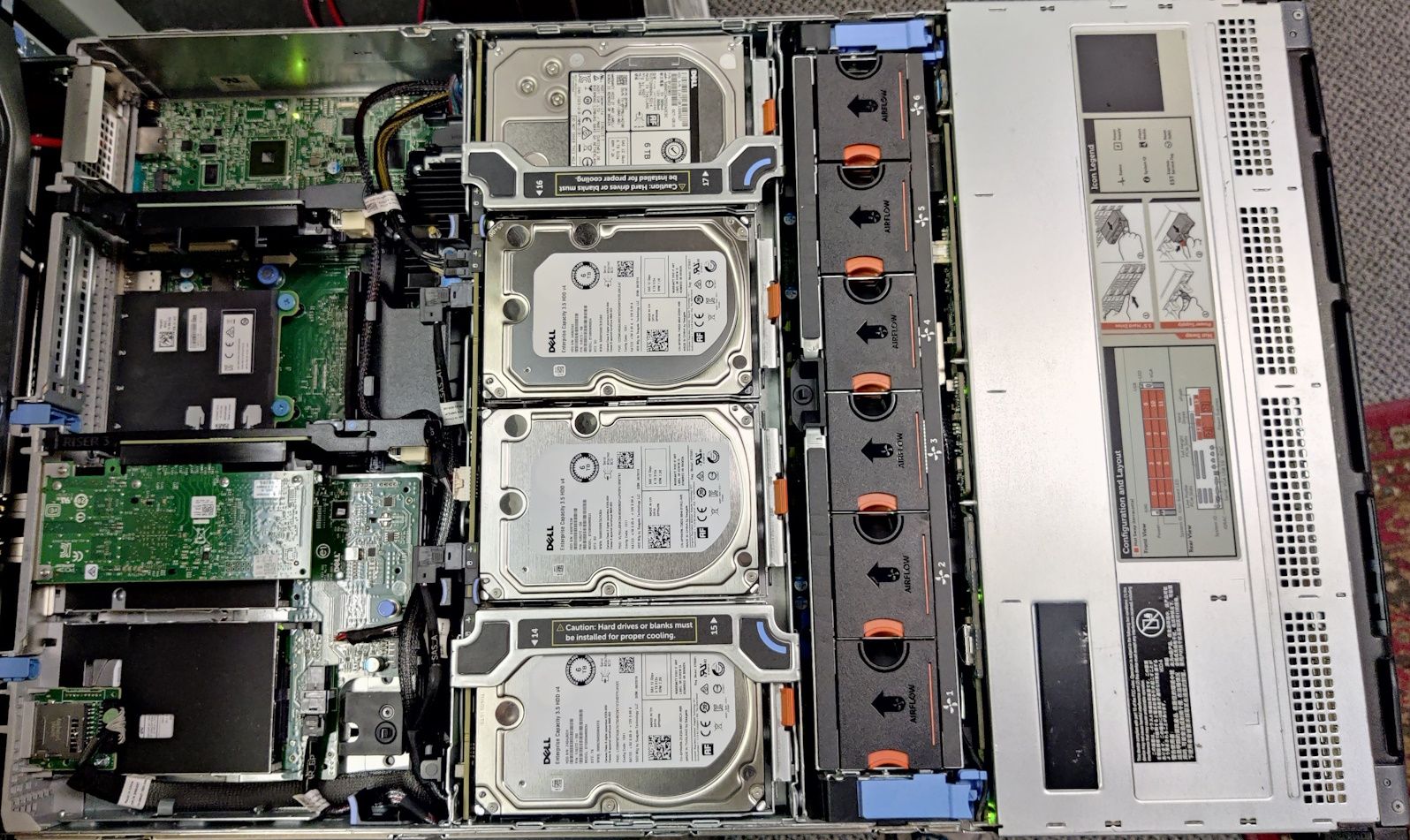

@gskger I'm so glad you put pics of your server in that other post. I looked at it last month when you posted it but when I looked at it again yesterday, I realized that those cards might not fit in my R730xd because I have the center drive rack with 4 extra internal drives. I'm concerned that one of those cards would not work with those drives in place since they basically totally cover the memory and CPUs. I'm also concerned that with those center drives, the airflow out of the row of case fans is less direct and might cause heat problems for a video card like that.

-

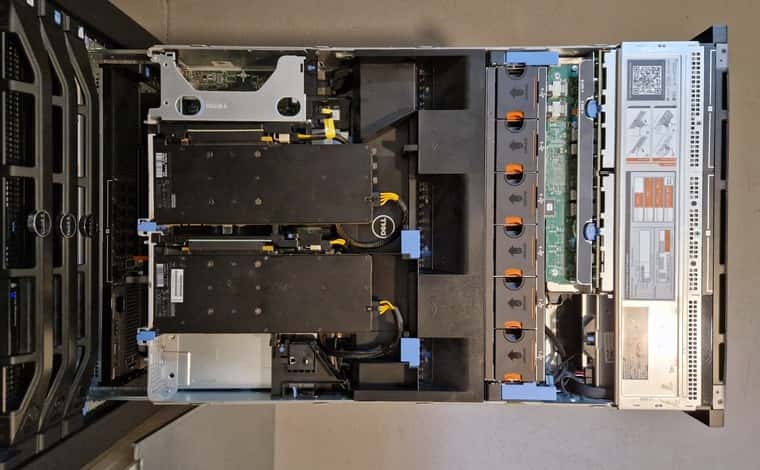

@CodeMercenary I didn't know there was a midboard hard drive option for the R730xd - cool. You could always install a couple Nvidia T4s, but they only come with 16GB of VRAM and are much more expensive compared to the P40s. For reference, I've added a top view of the R720 with two P40s installed.

-

@gskger Yeah, looks like it would be too tight. Ouch, those T4s are an order of magnitude more expensive. I'm definitely not interested in going that route.

-

@CodeMercenary I got my hands on an Nvidia RTX A2000 12GB (around 310€ used on eBay) which might be an option, depending on what you want to do. It is a dual slot low profile GPU with 12GB VRAM, a max power consumption of 70W and active cooling. With a compute capability of 8.6 (P40: 6.1, M40: 5.2) it is fully supported by ollama. While 12GB is only 50% of one P40 with 24GB VRAM, it runs small LLMs nicely and with a high token per second rate. It can almost run the Llama3.2 11b vision model (11b-instruct-q4_K_M) using the GPU with only 3% offloaded to CPU. I will start testing this card during the weekend and can share some results if that would help.
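If you want to compare token rates on your own hardware, one option is to read the timing fields that Ollama's `/api/generate` endpoint returns. The sketch below assumes Ollama listens on its default port 11434 on localhost; the helper names are made up, and the model and prompt are whatever you pass in.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default address


def tokens_per_second(response: dict) -> float:
    """Generated tokens divided by generation time (eval_duration is in ns)."""
    return response["eval_count"] / (response["eval_duration"] / 1e9)


def benchmark(model: str, prompt: str) -> float:
    """Send one non-streaming request and return the generation rate."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return tokens_per_second(json.load(resp))
```

For example, `benchmark("llama3.2:3b", "Explain PCIe risers in two sentences.")` returns the rate for a single run. Running `ollama run <model> --verbose` prints a similar eval rate on the command line.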

-

@gskger said in NVIDIA Tesla M40 for AI work in Ubuntu VM - is it a good idea?:

Nvidia RTX A2000 12GB

I am curious how your testing goes. That sounds like a great card. Not as expensive as the T4 so might be more reasonable for me to consider.

-

@CodeMercenary Did some tests with the A2000 and as expected, the 12GB VRAM is the biggest limitation. Used vanilla installations of Ollama and ComfyUI with no tweaking or optimization. Especially in stable diffusion, the A2000 is about three times faster compared to the P40, but that is to be expected. I have added some results below.

Stable Diffusion tests

| GPU | Settings | Speed |
|---|---|---|
| A2000 | 1024x1024, batch 1, iterations 30, cfg 4.0, euler | 1.4s/it |
| A2000 | 1024x1024, batch 4, iterations 30, cfg 4.0, euler | 2.5s/it = 0.6s/it |
| P40 | 1024x1024, batch 1, iterations 30, cfg 4.0, euler | 2.8s/it |
| P40 | 1024x1024, batch 4, iterations 30, cfg 4.0, euler | 12.1s/it = 3s/it |

Inference tests

| Model | A2000 | P40 |
|---|---|---|
| qwen2.5:14b | 21 token/sec | 17 token/sec |
| qwen2.5-coder:14b | 21 token/sec | 17 token/sec |
| llama3.2:3b-Q8 | 50 token/sec | 40 token/sec |
| llama3.2:3b-Q4 | 60 token/sec | 48 token/sec |

During heavy testing, the A2000 reached 70°C and the P40 reached 60°C, both with the Dell R720 set to automatic fan control.
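To put the numbers above side by side, here is a small sketch that computes the A2000-over-P40 speedup for each test. It only uses the figures from this post. Reading the value after the equals sign in the batch 4 rows as time per image (batch time divided by 4) is my interpretation, and the variable names are made up.

```python
# Seconds per iteration from the Stable Diffusion tests (lower is better).
sd_seconds_per_it = {
    "batch 1": {"A2000": 1.4, "P40": 2.8},
    "batch 4": {"A2000": 2.5, "P40": 12.1},
}

# Tokens per second from the inference tests (higher is better).
llm_tokens_per_sec = {
    "qwen2.5:14b": {"A2000": 21, "P40": 17},
    "qwen2.5-coder:14b": {"A2000": 21, "P40": 17},
    "llama3.2:3b-Q8": {"A2000": 50, "P40": 40},
    "llama3.2:3b-Q4": {"A2000": 60, "P40": 48},
}

# For timings, speedup = slower time / faster time.
sd_speedup = {
    test: round(t["P40"] / t["A2000"], 2) for test, t in sd_seconds_per_it.items()
}

# For rates, speedup = faster rate / slower rate.
llm_speedup = {
    model: round(r["A2000"] / r["P40"], 2) for model, r in llm_tokens_per_sec.items()
}

# Assumed interpretation: batch time divided by batch size gives time per image.
per_image_batch4 = {
    gpu: sd_seconds_per_it["batch 4"][gpu] / 4 for gpu in ("A2000", "P40")
}

for name, value in {**sd_speedup, **llm_speedup}.items():
    print(f"{name}: A2000 is {value}x the P40")
```

With these figures the A2000 comes out at roughly 2x the P40 for a single image and close to 5x at batch size 4, while the LLM token rates differ by only about 25%.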