More than 64 vCPU on Debian11 VM and AMD EPYC

-

Hello,

I came across a case similar to the one described in https://xcp-ng.org/forum/topic/4689/xcp-8-2-vcpus-max-settings

I have a server with 2x AMD EPYC 7532. I gave 128 vCPUs to a Debian11 VM, but it sees only 64 of them. `xl vcpu-list` shows the upper half of the CPUs in a paused state. The server is running XCP 8.2, kernel 4.19.19-7.0.14.1 (updated around the 4th of December last year).
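For anyone who wants to check the same thing from inside the guest, here is a minimal sketch that compares the CPU lists the Linux kernel exposes under `/sys/devices/system/cpu`. It assumes a Linux guest with sysfs mounted; `count_cpus` is just a helper name made up for this example.

```shell
#!/bin/sh
# Count the CPUs in a kernel CPU-list string such as "0-63" or "0-3,8-11".
count_cpus() {
    list=$1
    total=0
    old_ifs=$IFS
    IFS=,
    for range in $list; do
        case $range in
            *-*) total=$((total + ${range#*-} - ${range%-*} + 1)) ;;  # "a-b" range
            ?*)  total=$((total + 1)) ;;                              # single CPU id
        esac
    done
    IFS=$old_ifs
    echo "$total"
}

# Show which CPUs the guest kernel considers possible, present and online.
for f in possible present online; do
    path=/sys/devices/system/cpu/$f
    if [ -r "$path" ]; then
        cpus=$(cat "$path")
        printf '%-8s %s (%s CPUs)\n' "$f" "$cpus" "$(count_cpus "$cpus")"
    fi
done
```

If `online` reports fewer CPUs than were assigned to the VM, the guest kernel is the one not bringing them up.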

I managed to fix this by installing the "generic" kernel in the VM (linux-image-5.10.0-21-amd64) instead of the usual cloud one (the VM comes with 5.10.0-20-cloud-amd64). When I tried to turn off ACPI as suggested in the post I mentioned, the cloud image saw only one CPU. However, `acpi=off` in grub is needed for the generic image in order to see all of them. I haven't played with `params` on the VM.

I saw a few posts here about the vCPU issue, but those people are running Intel. Anyone with AMD and similar experience?
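To make it easier to compare setups, a rough sketch that reports the kernel flavour and the `acpi=` boot option of the running guest. The function names are invented for this example, and anything without `-cloud-` in the release string is simply labelled "generic" here, which is an assumption based on the two Debian package names above.

```shell
#!/bin/sh
# Label a kernel release string. Debian cloud kernels carry "-cloud-" in the
# name (e.g. 5.10.0-20-cloud-amd64); everything else is called "generic" here.
kernel_flavor() {
    case $1 in
        *-cloud-*) echo cloud ;;
        *)         echo generic ;;
    esac
}

# Print the value of the last acpi= option found in a kernel command line
# string, or "default" when the option is absent.
acpi_setting() {
    value=default
    for word in $1; do
        case $word in
            acpi=*) value=${word#acpi=} ;;
        esac
    done
    echo "$value"
}

release=$(uname -r)
echo "kernel: $release ($(kernel_flavor "$release"))"
if [ -r /proc/cmdline ]; then
    echo "acpi:   $(acpi_setting "$(cat /proc/cmdline)")"
fi
```

On Debian, the boot option itself is normally added to `GRUB_CMDLINE_LINUX` in `/etc/default/grub`, followed by `update-grub` and a reboot.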

Thanks in advance.

-

I think that might be a Xen limitation

-

Yes, indeed: for now, you can't have more than 64 vCPUs per guest. It's a complex problem (between Xen and the toolstack) regarding the CPU topology.

Some deep rework is needed and this is only possible on future Xen versions. I wouldn't expect a solution before next year.

-

Thanks for the fast reply. Is there any documentation or statement about it?

I managed to have more than 64 vCPU with the regular kernel (not the cloud one). I was just curious if anyone has similar experience with the combo AMD/Debian11/cloud kernel.

-

My source is one of the main Xen devs.

If you have working setups with more than 64 vCPUs, I'm curious!

-

@olivierlambert I have a working VM (booted) that shows 2 sockets, 64 cpu each. I am running sysbench with --max-threads=128 and it shows load 128.

I played a bit with normal ACPI in the VM OS and ACPI disabled via `xe` (`xe vm-param-set platform:acpi=0`); the results are the same. I am attaching lscpu and dmesg from the second case (ACPI is untouched in the VM, but disabled with the `xe` command). Let me know if anything else is needed.
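For reference, a small sketch of the host-side change written out in full. The `xe` subcommands and the `platform:acpi` key are the ones mentioned above; the wrapper function name and the UUID argument are placeholders for this example, and I am assuming the change only applies from the next VM start.

```shell
#!/bin/sh
# Disable ACPI in a VM's platform parameters, then print the resulting
# platform map so the change can be verified. Run on the XCP-ng host.
# Usage: disable_vm_acpi <vm-uuid>
disable_vm_acpi() {
    vm_uuid=$1
    xe vm-param-set uuid="$vm_uuid" platform:acpi=0 &&
    xe vm-param-get uuid="$vm_uuid" param-name=platform
}
```

The UUID can be looked up with `xe vm-list`.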

-

On second thought, I was not clear in the beginning. I don't expect to see 1 socket with 128 vCPUs in the VM, but maybe 2 sockets with the vCPUs split between them if I assign more than 64 to the VM. Initially I had 1 socket with 64 CPUs, and after turning ACPI off (either in grub or in the VM config itself), a second socket appeared in the VM with the rest of the CPUs. Funnier still, turning ACPI off while running the Debian cloud kernel makes the VM see only one CPU.

-

I am replying since Olivier wanted to hear from others with a lot of cores.

We are running XCP-ng 8.1 on a host with dual AMD EPYC 7713 64-core processors. With hyperthreading, that is a total of 256 threads. Since we are only able to assign up to 128 cores to a VM, we have turned hyperthreading off. The VM is running Ubuntu 18. We should probably lower the number of vCPUs to 120 or so for best performance, but at the moment it is 128.

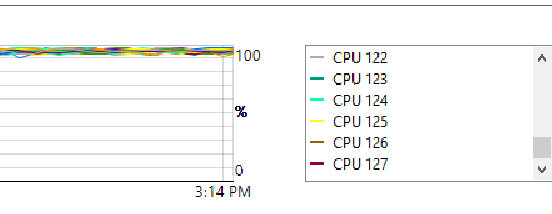

We can see in the Performance Graphs that all the cores are active:

The output of lscpu is:

    lspci
    00:00.0 Host bridge: Intel Corporation 440FX - 82441FX PMC [Natoma] (rev 02)
    00:01.0 ISA bridge: Intel Corporation 82371SB PIIX3 ISA [Natoma/Triton II]
    00:01.1 IDE interface: Intel Corporation 82371SB PIIX3 IDE [Natoma/Triton II]
    00:01.2 USB controller: Intel Corporation 82371SB PIIX3 USB [Natoma/Triton II] (rev 01)
    00:01.3 Bridge: Intel Corporation 82371AB/EB/MB PIIX4 ACPI (rev 01)
    00:02.0 VGA compatible controller: Device 1234:1111
    00:03.0 SCSI storage controller: XenSource, Inc. Xen Platform Device (rev 02)
    hansb@FVCOM-U18:~$ lscpu
    Architecture:        x86_64
    CPU op-mode(s):      32-bit, 64-bit
    Byte Order:          Little Endian
    CPU(s):              128
    On-line CPU(s) list: 0-127
    Thread(s) per core:  1
    Core(s) per socket:  64
    Socket(s):           2
    NUMA node(s):        1
    Vendor ID:           AuthenticAMD
    CPU family:          25
    Model:               1
    Model name:          AMD EPYC 7713 64-Core Processor
    Stepping:            1
    CPU MHz:             1996.267
    BogoMIPS:            3992.57
    Hypervisor vendor:   Xen
    Virtualization type: full
    L1d cache:           32K
    L1i cache:           32K
    L2 cache:            512K
    L3 cache:            262144K
    NUMA node0 CPU(s):   0-127
    Flags:               fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm rep_good nopl cpuid extd_apicid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy cr8_legacy abm sse4a misalignsse 3dnowprefetch bpext ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves clzero xsaveerptr arat umip rdpid

-

I'm getting stuck with this too, on a Debian 11 VM running on a DL 580 with 4 x Xeon E7-8880 v4 and 3 Samsung 990 Pro 4TB in RAID 1.

Effectively, the XCP-ng host has 176 "cores", i.e. counting hyperthreading, but I'm only able to use 64 of them. I was also only able to configure the VM with 120 cores, as 4 sockets of 30 cores each (the physical architecture has 4 sockets), but I think only 64 actually work.

I'm compiling AOSP. For a clean build, the VM sits at max CPU for 30 minutes, and I would dearly like to reduce that time, as it could be a compile after a tiny change, so progress is painfully slow. The other thing is the linking phase of this build: I'm only seeing 7000 IOPS on the last-10-minutes display. I realize this may under-read, as the traffic could be quite "bursty", but with 3 mirrored Samsung 990 Pro drives I would expect more. This makes this part heavily disk bound; the overall process takes 70 minutes.

-

If you are heavily relying on disk perf, either:

- use multiple VDIs and RAID0 them (you'll more than double performance, because tapdisk is single threaded)

- PCI passthrough a drive to the VM
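For the first option, a minimal sketch of striping two extra VDIs inside the guest with `mdadm`. The device names `/dev/xvdb` and `/dev/xvdc` and the array name `/dev/md0` are assumptions; check `lsblk` for the real ones. Since `mdadm --create` destroys whatever is on those disks, the sketch only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}
DISKS="/dev/xvdb /dev/xvdc"   # assumed guest device names of the extra VDIs
MD_DEV=/dev/md0               # assumed name for the striped array

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Put the disk list into the positional parameters so it can be counted.
set -- $DISKS
run mdadm --create "$MD_DEV" --level=0 --raid-devices=$# "$@"
run mkfs.ext4 "$MD_DEV"
```

Each VDI gets its own tapdisk process, which is where the gain over a single VDI would come from.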

-

@olivierlambert said in More than 64 vCPU on Debian11 VM and AMD EPYC:

If you are heavily relying on disk perf, either:

- use multiple VDIs and RAID0 them (you'll more than double performance, because tapdisk is single threaded)

- PCI passthrough a drive to the VM

Another option is to do NVMeOF and SR-IOV on the NIC. This gives performance pretty similar to bare metal with PCI passthrough, yet one NVMe can be divided between VMs (if it supports namespaces), and you can attach NVMe from more than one source to the VM (for redundancy).

-

DPU is also an option (it's exactly what we do with Kalray's DPUs)

-

@alexredston What kernel do you use? Can you show the kernel boot parameters (`/proc/cmdline`)? In our case, we used the Debian11 image from their website, which had the `cloud` kernel and acpi=on by default. Once we switched to the regular kernel and turned off ACPI, we saw all the vCPUs in the VM.

-

@olivierlambert What exactly do you support from Kalray? Could you tell us more?

-

@olivierlambert Interesting. However, where is the benefit over NVMeOF + SR-IOV, which is doable on a Mellanox CX3 or, better, CX5 and up? Offloading dom0 to specialized hardware is interesting, but what I see in these articles is basically equal to connecting to an NVMeOF target via an SR-IOV NIC, which has been doable for quite a while without any changes in XCP-ng.

-

It uses local NVMe drives and splits them, so there is no need for external storage (but you can also use remote NVMe, as with NVMeOF, and potentially multiple hosts in HCI mode).

-

@olivierlambert with NVMeOF I can split them easily too (target per namespace), and actually I get redundancy compared to local device (connect to two targets on different hosts and RAID1 them in VM). Some newer NVMe support SR-IOV natively too, so no additional hardware would be needed to split it and pass through to VMs (I did not test this though). I'm not sure of the price of these cards, but CX3 are really cheap, while CX5/6 are getting more affordable too.

-

If you can afford dedicated storage, sure.

For local, DPU is a good option (and it should be less than 1,5k€ per card, probably less)

-

@olivierlambert @POleszkiewicz Thanks to you both for all of these ideas - I will have a go at changing the kernel and moving the NVMe to pass through in the first instance. Will report back on results.