PCI Passthrough of Nvidia GPU and USB add-on card

-

Yes. Some of the PCI capabilities lie beyond the "standard" PCI configuration space of 256 bytes per BDF (PCI device). Unfortunately, Xen does not yet provide the "enhanced" configuration access method to HVM guests (it's ongoing work). It would require the Xen-related part of QEMU to emulate a chipset that offers access to such a method, such as Q35.

Very probably, the Windows drivers for these devices are not happy about being unable to access these fields, so this is potentially the reason these devices malfunction.

A good way to confirm this would be to pass these devices through to Linux guests, so we could possibly add some extended traces. We could also pass these devices through to a PVH Linux guest and see how they are handled (PVH guests do not use QEMU for PCI bus emulation).
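As a rough illustration of the kind of check a Linux guest makes possible, here is a minimal Python sketch that walks the PCIe extended capability list, which starts at offset 0x100, just past the 256-byte standard configuration space. The function names are hypothetical. It assumes the guest exposes the device's configuration space at `/sys/bus/pci/devices/<BDF>/config` and that the script runs as root (otherwise the kernel only returns the first 64 bytes).

```python
import struct
from pathlib import Path

PCI_EXT_CAP_START = 0x100  # first byte past the 256-byte standard config space


def extended_capabilities(config: bytes) -> list[tuple[int, int]]:
    """Return (offset, capability ID) pairs for each PCIe extended capability.

    An empty list means no extended capability is visible, which is what
    one would expect when only the 256-byte space is accessible.
    """
    caps = []
    seen = set()
    offset = PCI_EXT_CAP_START
    while offset >= PCI_EXT_CAP_START and offset + 4 <= len(config) and offset not in seen:
        seen.add(offset)  # guard against looping capability chains
        (header,) = struct.unpack_from("<I", config, offset)
        if header in (0, 0xFFFFFFFF):
            break  # no capability here, or the read was not serviced
        caps.append((offset, header & 0xFFFF))  # bits 15:0 hold the capability ID
        offset = (header >> 20) & 0xFFC  # bits 31:20 hold the next pointer
    return caps


def read_config(bdf: str) -> bytes:
    """Read the config space of a device such as '0000:00:08.0' from sysfs."""
    return Path(f"/sys/bus/pci/devices/{bdf}/config").read_bytes()
```

For example, `extended_capabilities(read_config("0000:00:08.0"))` could be run in the guest and compared with the same call on the host; the BDF here is only a placeholder taken from the log excerpt in this thread.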

-

@andSmv OK, so if the machine model isn't Q35, a Windows VM with a modern GPU passed through won't be able to output video to a monitor?

I tried passing the devices through to an Ubuntu 22.04 VM and installed the Nvidia drivers, but no luck: the USB devices work, but the monitor just stays off. Here's a daemon.log snippet from the Ubuntu VM:

    Mar 13 14:23:55 xcp-ng squeezed: [debug||4 ||xenops] watch /data/updated <- Mon Mar 13 15:23:55 2023
    Mar 13 14:24:01 xcp-ng squeezed[1338]: [195.83] watch /data/updated <- 1
    Mar 13 14:24:01 xcp-ng squeezed: [debug||4 ||xenops] watch /data/updated <- 1
    Mar 13 14:24:01 xcp-ng ovs-ofctl: ovs|00001|ofp_util|WARN|Negative value -1 is not a valid port number.
    Mar 13 14:24:01 xcp-ng ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --timeout=30 -- --if-exists del-port tap5.0
    Mar 13 14:24:02 xcp-ng tapback[8597]: frontend.c:216 768 front-end supports persistent grants but we don't
    Mar 13 14:24:02 xcp-ng tapdisk[8587]: received 'sring connect' message (uuid = 5)
    Mar 13 14:24:02 xcp-ng tapdisk[8587]: connecting VBD 5 domid=5, devid=768, pool (null), evt 33, poll duration 1000, poll idle threshold 50
    Mar 13 14:24:02 xcp-ng tapdisk[8587]: ring 0x1b38810 connected
    Mar 13 14:24:02 xcp-ng tapdisk[8587]: sending 'sring connect rsp' message (uuid = 5)
    Mar 13 14:24:03 xcp-ng qemu-dm-5[8842]: [00:08.0] Write-back to unknown field 0xc4 (partially) inhibited (0x00000000)
    Mar 13 14:24:03 xcp-ng qemu-dm-5[8842]: [00:08.0] If the device doesn't work, try enabling permissive mode
    Mar 13 14:24:03 xcp-ng qemu-dm-5[8842]: [00:08.0] (unsafe) and if it helps report the problem to xen-devel
    Mar 13 14:24:03 xcp-ng qemu-dm-5[8842]: [00:07.0] Write-back to unknown field 0x44 (partially) inhibited (0x00)
    Mar 13 14:24:03 xcp-ng qemu-dm-5[8842]: [00:07.0] If the device doesn't work, try enabling permissive mode
    Mar 13 14:24:03 xcp-ng qemu-dm-5[8842]: [00:07.0] (unsafe) and if it helps report the problem to xen-devel
    Mar 13 14:24:05 xcp-ng squeezed[1338]: [200.14] domid 5 just started a guest agent (but has no balloon driver); calibrating memory-offset = 0 KiB
    Mar 13 14:24:05 xcp-ng squeezed: [debug||3 ||xenops] domid 5 just started a guest agent (but has no balloon driver); calibrating memory-offset = 0 KiB
    Mar 13 14:24:05 xcp-ng squeezed[1338]: [200.14] watch /memory/memory-offset <- 0
    Mar 13 14:24:05 xcp-ng squeezed: [debug||4 ||xenops] watch /memory/memory-offset <- 0
    Mar 13 14:24:05 xcp-ng squeezed[1338]: [200.14] Xenctrl.domain_setmaxmem domid=5 max=6292480 (was=6347776)
    Mar 13 14:24:05 xcp-ng squeezed: [debug||3 ||xenops] Xenctrl.domain_setmaxmem domid=5 max=6292480 (was=6347776)
    Mar 13 14:24:11 xcp-ng squeezed[1338]: [206.39] watch /data/updated <- Mon Mar 13 14:24:11 2023
    Mar 13 14:24:11 xcp-ng squeezed: [debug||4 ||xenops] watch /data/updated <- Mon Mar 13 14:24:11 2023

-

@jevan223 This is not about the real hardware. This is about the emulated chipset that QEMU offers to HVM guests (which is what a Windows VM is).

QEMU actually emulates two chipsets for its guests:

- i440fx: basic PCI bus with CAM access
- Q35: enhanced PCI bus with ECAM access (and thus access to PCIe capabilities)

The problem is that Q35 is not supported by the Xen-dependent parts of the QEMU code, so only i440fx is emulated for Xen HVM guests. We are actually working to enable Q35 in Xen, but this is a work in progress.

Well, this is a hypothesis that needs to be confirmed, but judging by the lspci output, there's a good chance that's the reason.
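To make the CAM/ECAM distinction concrete, here is a small Python sketch of how each method addresses a register. It follows the standard PCI layouts (the legacy 0xCF8 address word and the PCIe memory-mapped layout); the function names are hypothetical and this is not code from QEMU or Xen. The point is that CAM only has 8 bits for the register offset, so anything at 0x100 or above simply cannot be addressed.

```python
def cam_address(bus: int, dev: int, fn: int, reg: int) -> int:
    """Build the 32-bit value written to I/O port 0xCF8 for legacy CAM access."""
    if not 0 <= reg < 0x100:
        raise ValueError("CAM can only reach the first 256 bytes of config space")
    # bit 31 = enable, 23:16 = bus, 15:11 = device, 10:8 = function, 7:2 = dword
    return 0x80000000 | (bus << 16) | (dev << 11) | (fn << 8) | (reg & 0xFC)


def ecam_offset(bus: int, dev: int, fn: int, reg: int) -> int:
    """Return the byte offset of a register inside the memory-mapped ECAM window."""
    if not 0 <= reg < 0x1000:
        raise ValueError("ECAM config space is 4096 bytes per function")
    # 27:20 = bus, 19:15 = device, 14:12 = function, 11:0 = register
    return (bus << 20) | (dev << 15) | (fn << 12) | reg
```

With these, `ecam_offset(0, 8, 0, 0x100)` addresses the first extended capability of device 00:08.0, while `cam_address(0, 8, 0, 0x100)` raises `ValueError`.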

-

Maybe @andyhhp would confirm the hypothesis?

-

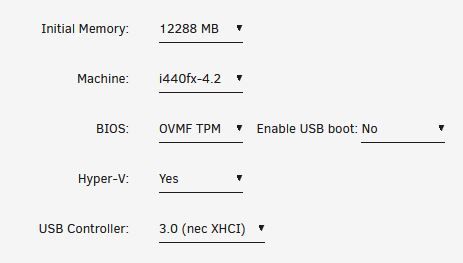

I've been running Unraid for a number of years, but wanted to migrate some VMs to XCP-ng to take advantage of backups, snapshots, and ZFS. Looking back at my Unraid VM template settings that used this GPU for video passthrough, it was using i440fx and working great, although there were a few hoops to jump through to get it working:

The XML file of the VM needed to be edited to map the GPU's virtual video and audio devices to the same slot, and to enable multifunction so that video and audio work together.

Because it's an Nvidia GPU, it also required a graphics ROM BIOS, with the Nvidia header removed, to be passed through to the VM.

Since the Nvidia driver installed correctly on the XCP-ng VM without error code 43, I assume the card was passed to the VM correctly... That's about the extent of my knowledge; I thought I'd share it in case it helps with the hypothesis.

-

@jevan223 Well, if you confirm it worked well on i440fx, then the hypothesis is probably wrong. Was it KVM/QEMU virtualization?

-

@andSmv Yes, Unraid uses KVM. Here's the XML file for reference:

    <?xml version='1.0' encoding='UTF-8'?>
    <domain type='kvm'>
      <name>Win10</name>
      <uuid>xxxxxxxxxxxxxx</uuid>
      <description>1TB NVME</description>
      <metadata>
        <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
      </metadata>
      <memory unit='KiB'>12582912</memory>
      <currentMemory unit='KiB'>12582912</currentMemory>
      <memoryBacking>
        <nosharepages/>
      </memoryBacking>
      <vcpu placement='static'>6</vcpu>
      <cputune>
        <vcpupin vcpu='0' cpuset='7'/>
        <vcpupin vcpu='1' cpuset='19'/>
        <vcpupin vcpu='2' cpuset='8'/>
        <vcpupin vcpu='3' cpuset='20'/>
        <vcpupin vcpu='4' cpuset='9'/>
        <vcpupin vcpu='5' cpuset='21'/>
      </cputune>
      <os>
        <type arch='x86_64' machine='pc-i440fx-4.2'>hvm</type>
        <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi-tpm.fd</loader>
        <nvram>/etc/libvirt/qemu/nvram/xxxxxuuidxxxxxx_VARS-pure-efi-tpm.fd</nvram>
      </os>
      <features>
        <acpi/>
        <apic/>
        <hyperv mode='custom'>
          <relaxed state='on'/>
          <vapic state='on'/>
          <spinlocks state='on' retries='8191'/>
          <vendor_id state='on' value='none'/>
        </hyperv>
      </features>
      <cpu mode='host-passthrough' check='none' migratable='on'>
        <topology sockets='1' dies='1' cores='3' threads='2'/>
        <cache mode='passthrough'/>
        <feature policy='require' name='topoext'/>
      </cpu>
      <clock offset='localtime'>
        <timer name='hypervclock' present='yes'/>
        <timer name='hpet' present='no'/>
      </clock>
      <on_poweroff>destroy</on_poweroff>
      <on_reboot>restart</on_reboot>
      <on_crash>restart</on_crash>
      <devices>
        <emulator>/usr/local/sbin/qemu</emulator>
        <disk type='file' device='disk'>
          <driver name='qemu' type='raw' cache='writeback'/>
          <source file='/mnt/user/domains/Windows 10/vdisk1.img'/>
          <target dev='hdc' bus='virtio'/>
          <boot order='1'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
        </disk>
        <disk type='file' device='cdrom'>
          <driver name='qemu' type='raw'/>
          <target dev='hdb' bus='ide'/>
          <readonly/>
          <address type='drive' controller='0' bus='0' target='0' unit='1'/>
        </disk>
        <controller type='usb' index='0' model='nec-xhci' ports='15'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
        </controller>
        <controller type='virtio-serial' index='0'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
        </controller>
        <controller type='pci' index='0' model='pci-root'/>
        <controller type='ide' index='0'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
        </controller>
        <interface type='bridge'>
          <mac address='xxxxxxxmacxxxxx'/>
          <source bridge='br0'/>
          <model type='virtio-net'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
        </interface>
        <serial type='pty'>
          <target type='isa-serial' port='0'>
            <model name='isa-serial'/>
          </target>
        </serial>
        <console type='pty'>
          <target type='serial' port='0'/>
        </console>
        <channel type='unix'>
          <target type='virtio' name='org.qemu.guest_agent.0'/>
          <address type='virtio-serial' controller='0' bus='0' port='1'/>
        </channel>
        <input type='tablet' bus='usb'>
          <address type='usb' bus='0' port='1'/>
        </input>
        <input type='mouse' bus='ps2'/>
        <input type='keyboard' bus='ps2'/>
        <tpm model='tpm-tis'>
          <backend type='emulator' version='2.0' persistent_state='yes'/>
        </tpm>
        <audio id='1' type='none'/>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x07' slot='0x00' function='0x0'/>
          </source>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x0c' slot='0x00' function='0x0'/>
          </source>
          <rom file='/mnt/user/domains/Vbios/MSI.GTX1660Super.6144.191029.rom'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0' multifunction='on'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x0c' slot='0x00' function='0x1'/>
          </source>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x1'/>
        </hostdev>
        <memballoon model='none'/>
      </devices>
    </domain>

-

This is way, way outside of a normal-ish looking server use case. I'm honestly surprised you've got anything to function...

To start with, you're probably booting Xen with console=vga (because that's the default). It will be handed over to dom0 too, so start by going through the bootloader configuration and making sure that neither Xen nor dom0 is trying to use the display at all. I suspect this is the root cause of the display going periodically back to black.
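As a quick way to check the Xen side of this, here is a minimal Python sketch that inspects a Xen command line for `console=` options that include `vga`. The function names and the sample command line are hypothetical. It assumes you paste in the Xen line from your bootloader configuration, or the `xen_commandline` value reported by `xl info`, and it does not look at the dom0 kernel's own `console=` options, which would need a similar check.

```python
def xen_console_targets(cmdline: str) -> list[str]:
    """Collect every target named in console= options, e.g. ['com1', 'vga']."""
    targets = []
    for token in cmdline.split():
        if token.startswith("console="):
            value = token[len("console="):]
            targets.extend(t for t in value.split(",") if t)
    return targets


def xen_uses_vga(cmdline: str) -> bool:
    """True if any console= option on the command line lists vga."""
    return "vga" in xen_console_targets(cmdline)


# Hypothetical example line, not copied from a real XCP-ng install.
sample = "/boot/xen.gz dom0_mem=4096M console=com1,vga"
print(xen_console_targets(sample), xen_uses_vga(sample))
```

This is deliberately conservative: it flags any `console=` option that mentions `vga`, without trying to work out which option Xen would actually honour if several are present.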

-

@andyhhp I do have a second GPU set as the primary output device in the BIOS, and Xen uses it to display the console. Would Xen or dom0 try to use both GPUs?

-

Hi @jevan223, I tried to do something very similar with an AMD card and a USB card a few years ago. I got the AMD card working but couldn't get the USB card to pass through. How did you manage that?

-

@jmccoy555 I followed the instructions here for passing through my USB PCIe card (a 2-port USB 3.0 Vantec card). You have to use a PCIe USB card; trying to pass through an onboard USB controller just doesn't work.

Did you do anything special to pass through your GPU and enable video out? Was performance close to bare metal?

Sadly, not getting this to work was a showstopper for me. I had to migrate to another server 'virtual environment' which did allow me to pass both video cards through to separate VMs, with TPM support for Windows 11 VMs as a bonus. That being said, I prefer XCP-ng and the XO interface, and would switch back if I could.

-

@jevan223 No, nothing special for AMD cards. It just shows in XO as assignable. I used my WX4100 today, but I was using an RX580 before.

I am using a PCIe card but no joy. I've got NICs and SAS & SATA controllers also passed through, so I know that is all working. So maybe it's my card that doesn't want to play. Could you link your specific card please?

I have passed through individual USB devices and that works nicely, but it's a bit of a faff to do.

Performance seems to be great. Just waiting for some LAN KVM devices to play with now!

-

@jmccoy555 The card I used was this one here, but it also worked with a no-name brand one I bought off Newegg years ago.

Thanks, I may have to try again with an AMD card down the road!

-

@jevan223 Thanks. So it looks like yours is a Renesas uPD720201 and mine is an ASMedia ASM1142. I might try another card then, though I don't really want a collection lying around gathering dust.

oh well.

-

So no luck

    lspci | grep USB
    00:14.0 USB controller: Intel Corporation C610/X99 series chipset USB xHCI Host Controller (rev 05)
    00:1a.0 USB controller: Intel Corporation C610/X99 series chipset USB Enhanced Host Controller #2 (rev 05)
    00:1d.0 USB controller: Intel Corporation C610/X99 series chipset USB Enhanced Host Controller #1 (rev 05)
    04:00.0 USB controller: Renesas Technology Corp. uPD720201 USB 3.0 Host Controller (rev 03)

    xl pci-assignable-list
    0000:00:11.4
    0000:04:00.0

    vm.start
    {
      "id": "da3eae3e-a67a-f0e2-f113-dd67f65baef1",
      "bypassMacAddressesCheck": false,
      "force": false
    }
    {
      "code": "INTERNAL_ERROR",
      "params": [
        "xenopsd internal error: Cannot_add(0000:04:00.0, Xenctrlext.Unix_error(30, \"1: Operation not permitted\"))"
      ],
      "call": {
        "method": "VM.start",
        "params": [
          "OpaqueRef:63acb07f-292f-4062-820f-98ea4934b653",
          false,
          false
        ]
      },
      "message": "INTERNAL_ERROR(xenopsd internal error: Cannot_add(0000:04:00.0, Xenctrlext.Unix_error(30, \"1: Operation not permitted\")))",
      "name": "XapiError",
      "stack": "XapiError: INTERNAL_ERROR(xenopsd internal error: Cannot_add(0000:04:00.0, Xenctrlext.Unix_error(30, \"1: Operation not permitted\"))) at Function.wrap (/home/node/xen-orchestra/packages/xen-api/src/_XapiError.js:16:12) at /home/node/xen-orchestra/packages/xen-api/src/transports/json-rpc.js:35:27 at AsyncResource.runInAsyncScope (async_hooks.js:197:9) at cb (/home/node/xen-orchestra/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/home/node/xen-orchestra/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/home/node/xen-orchestra/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/home/node/xen-orchestra/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/home/node/xen-orchestra/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/home/node/xen-orchestra/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/home/node/xen-orchestra/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/home/node/xen-orchestra/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/home/node/xen-orchestra/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/home/node/xen-orchestra/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (internal/timers.js:464:21) at process.topLevelDomainCallback (domain.js:147:15) at process.callbackTrampoline (internal/async_hooks.js:129:24)"
    }

Any ideas, anyone, before I give up and send the card back?

EDIT

OK, computer says NO!!!! - "This indicates that your device is using RMRR. Intel IOMMU does not allow DMA to these devices and therefore PCI passthrough is not supported."

EDIT 2

Could we patch the kernel too?

https://github.com/kiler129/relax-intel-rmrr/blob/master/README.md#other-distros