Suggestions for new servers

-

I gave up on the hardware controller because, if it fails, I need to have a spare one on hand for data recovery.

-

@dariosplit While that is true, it's still safer to do that than software RAID, at least in this setup. In my experience hardware RAID controller failures are super rare; I have literally never come across one that wasn't 10+ years old.

-

@planedrop I also don't see the point of installing XCP on SSDs in RAID1 behind a HW controller. If and when it breaks, just install it again.

I also prefer an SR on local storage using one NVMe unit, with continuous replication to another.

-

@dariosplit I mean, it would still be best to avoid breaking it in the first place. It's not always going to be as simple as reinstalling and you're good to go; things can get damaged if an SSD dies during use, and you could end up needing a restore from backup. I'd just avoid that in production.

-

I have to say, the recommendation to use hardware-based RAID is somewhat shocking. You're throwing away massive amounts of performance by funneling potentially hundreds of lanes' worth of NVMe disks into a single controller with a measly 16 lanes. SDS is the future, whether that be through something like md or ZFS.
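
To put a rough number on the lane argument, here is a minimal sketch. It assumes each NVMe drive uses a x4 PCIe link (typical, but not stated in the post) and takes the 16-lane controller uplink from the post; the drive counts are just illustrative.

```python
# Assumption: each NVMe drive is attached with a x4 PCIe link (not stated in the thread).
LANES_PER_NVME = 4
# "a measly 16 lanes": the controller uplink mentioned in the post.
CONTROLLER_UPLINK_LANES = 16


def oversubscription(drive_count: int) -> float:
    """Ratio of aggregate drive-side lanes to controller uplink lanes."""
    return drive_count * LANES_PER_NVME / CONTROLLER_UPLINK_LANES


# Illustrative drive counts only.
for drives in (4, 8, 24):
    lanes = drives * LANES_PER_NVME
    print(f"{drives} drives: {lanes} lanes, {oversubscription(drives):.1f}:1 oversubscribed")
```

With four drives the uplink is fully matched; beyond that, the ratio grows linearly with drive count. Whether that matters in practice depends on how many drives are actually busy at once.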

-

@xxbiohazrdxx Sure, but why do you need a massive amount of storage on a local hypervisor? That's when it's time for a SAN.

-

@planedrop Well, more importantly, with hardware RAID you often get blind-swap and hot-swap capabilities.

With software RAID, you need to prepare the system by telling md to eject the disk, and then add its replacement to the array.
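
As a rough sketch of what that preparation usually looks like with mdadm, the snippet below only builds and prints the commands (a dry run); it does not execute anything. The array and partition names are hypothetical placeholders, and the exact steps may differ depending on your layout.

```python
import shlex
from typing import List


def md_replace_commands(array: str, failed: str, replacement: str) -> List[List[str]]:
    """Build the usual mdadm sequence: mark failed, remove, add the replacement."""
    return [
        ["mdadm", "--manage", array, "--fail", failed],      # mark the member as faulty
        ["mdadm", "--manage", array, "--remove", failed],    # eject it from the array
        ["mdadm", "--manage", array, "--add", replacement],  # add the new disk; resync starts
    ]


# Hypothetical device names, for illustration only.
for cmd in md_replace_commands("/dev/md0", "/dev/nvme0n1p1", "/dev/nvme1n1p1"):
    print(shlex.join(cmd))
```

The physical swap happens between the remove and add steps, which is the extra preparation a hardware controller with blind swap would not need.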

Software Raid is very good, but has its drawbacks.

-

@DustinB This is also a good point, they have their ups and downs. I do think eventually hardware RAID will completely die, but that's still a ways off. Software is certainly what I would call overall superior, but hardware RAID still has good use cases for the time being.

-

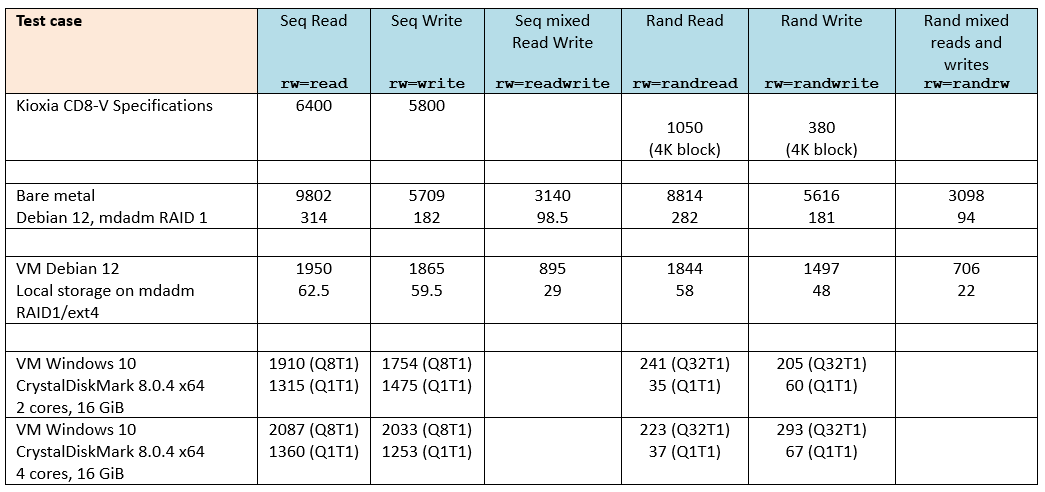

I've done some benchmarks with my new servers and want to share results with you.

Server:

- CPU: 2 x Intel Gold 5317

- RAM: 512GB DDR4-3200

- XCP-ng: 8.3-beta2

fio parameters common to all tests:

--direct=1 --rw=randwrite --filename=/mnt/md0/test.io --size=50G --ioengine=libaio --iodepth=64 --time_based --numjobs=4 --bs=32K --runtime=60 --eta-newline=10

VM Debian: 4 vCPU, 4 GB Memory, tools installed

VM Windows: 2/4 vCPU, 4 GB Memory, tools installed

Results:

- First line: Throughput/Bandwidth (MiB/s)

- Second line: IOPS, in KIOPS (Linux only)
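
For anyone wanting to reproduce a run, the common fio parameters listed above could be assembled into a full command line roughly like this. This is only a sketch: fio also needs a job name, and the `--name` value used here is a made-up placeholder, not something taken from the original tests.

```python
import shlex
from typing import List

# Parameters copied from the post ("common to all tests").
COMMON_ARGS = [
    "--direct=1", "--rw=randwrite", "--filename=/mnt/md0/test.io", "--size=50G",
    "--ioengine=libaio", "--iodepth=64", "--time_based", "--numjobs=4",
    "--bs=32K", "--runtime=60", "--eta-newline=10",
]


def fio_command(job_name: str) -> List[str]:
    """Return the fio argv; job_name is a hypothetical label for the run."""
    return ["fio", f"--name={job_name}", *COMMON_ARGS]


# Print the command instead of running it, so nothing is written to disk here.
print(shlex.join(fio_command("randwrite-32k")))
```

To actually run it, the list can be passed to `subprocess.run(..., check=True)` on a host where fio is installed and the target path exists.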

Considerations:

- On bare metal I get full disk performance, with approx. double the read speed due to RAID1.

- On the VMs, bandwidth and IOPS are approx. 20% of the bare-metal values.

- On the VMs, the bottleneck is the tapdisk process (CPU at 100%), which can handle approx. 1900 MB/s.
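
As a quick sanity check on how the bandwidth and IOPS figures relate, the tapdisk ceiling can be converted to IOPS at the 32K block size used in the tests. This is a sketch only; the post does not say whether "MB/s" here means decimal megabytes or MiB, so both interpretations are shown.

```python
BLOCK_SIZE_BYTES = 32 * 1024  # --bs=32K from the fio parameters


def iops_from_bandwidth(mb_per_s: float, bytes_per_mb: int = 1_000_000) -> float:
    """IOPS = bytes per second / bytes per I/O."""
    return mb_per_s * bytes_per_mb / BLOCK_SIZE_BYTES


TAPDISK_LIMIT = 1900  # approx. figure from the post; unit interpretation is an assumption

decimal = iops_from_bandwidth(TAPDISK_LIMIT)            # treating MB as 10^6 bytes
binary = iops_from_bandwidth(TAPDISK_LIMIT, 1024 ** 2)  # treating MB as MiB
print(f"{decimal / 1000:.1f} KIOPS (decimal MB), {binary / 1000:.1f} KIOPS (MiB)")
# prints "58.0 KIOPS (decimal MB), 60.8 KIOPS (MiB)"
```

Either way, a single tapdisk process at that ceiling works out to roughly 58 to 61 KIOPS at 32K blocks.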

-

Just wanted to say thank you for this thread. I am in the midst of evaluating ESXi alternatives and trying Proxmox as well as XCP-ng.

I just installed XCP-ng this morning and have been trying to figure out whether I should be doing hardware RAID or ZFS RAID. This thread provided the answer I needed. Hardware RAID on my Dell PowerEdge servers is easy and reliable.

Thank you again!
