@dinhngtu Thanks for your help.

I managed to install the XS Tools by uninstalling the old XS version 9.0, running XenClean, and then installing version 9.4.

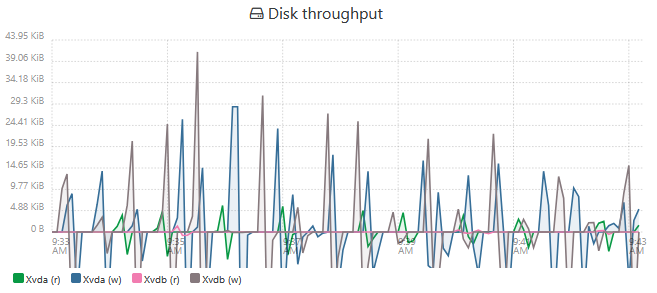

The disk stats are now correct as well.

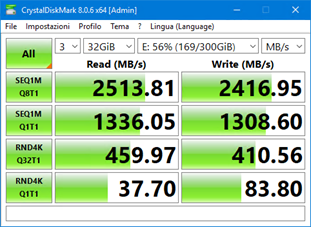

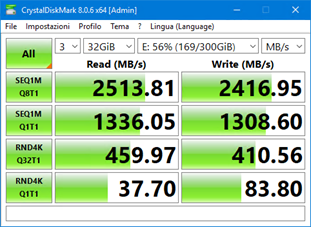

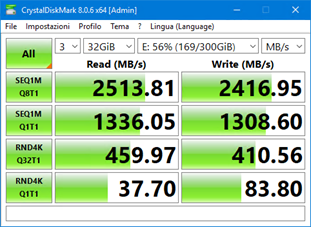

Now I get this good performance:

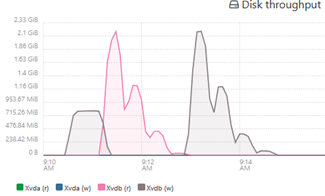

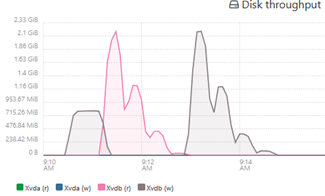

@dinhngtu

In Device Manager, the disks are reported as "QEMU NVMe Ctrl".

I have now discovered that "Citrix Hypervisor PV Tools 9.0.42" is already installed (listed in Apps & Features), but XOA shows "No Xen tools detected".

Should I try uninstalling "Citrix Hypervisor PV Tools 9.0.42" and installing version 9.4.0?

On my XCP-ng 8.3 hosts I have an old Windows Server 2019 VM without guest tools installed (virtualization mode is HVM).

I tried to install Citrix tools 9.3.3 and 9.4.0 (drivers + management agent).

After the tools setup and a reboot, the VM no longer starts (it stays indefinitely on the spinning-dots screen).

Am I missing something or doing something wrong?

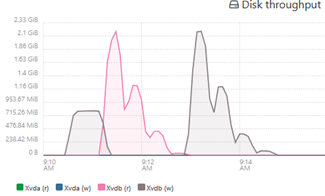

For your information, this VM also shows strange "Disk throughput" stats with negative values (all other VMs on the host are fine). I don't know why...

Thanks

Is there an ETA for a fully functional deployment (on local storage) with differential backup, live migration, statistics, and so on?

Perhaps with the 8.3 stable release?

I'm interested mainly because of ZFS's bit rot detection.
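As a rough illustration of why checksums catch bit rot, here is a minimal Python sketch of checksum-on-write / verify-on-read. It is not ZFS code: ZFS stores each block's checksum in the parent block pointer and can repair damaged blocks from redundant copies (mirror/raidz), whereas this toy assumes SHA-256, a fixed 4 KiB block size, and a plain list of (block, checksum) pairs, and it only detects corruption.

```python
import hashlib

BLOCK_SIZE = 4096  # illustrative block size (assumption, not a ZFS setting)


def write_blocks(data: bytes) -> list[tuple[bytes, str]]:
    """Split data into blocks and record a SHA-256 checksum for each one at write time."""
    blocks = []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        blocks.append((block, hashlib.sha256(block).hexdigest()))
    return blocks


def scrub(blocks: list[tuple[bytes, str]]) -> list[int]:
    """Return indices of blocks whose content no longer matches the stored checksum."""
    return [i for i, (block, checksum) in enumerate(blocks)
            if hashlib.sha256(block).hexdigest() != checksum]


data = bytes(range(256)) * 64  # 16 KiB of sample data -> 4 blocks
blocks = write_blocks(data)
assert scrub(blocks) == []

# Simulate silent corruption: flip one bit in block 1 without updating its checksum.
corrupted = bytearray(blocks[1][0])
corrupted[100] ^= 0x01
blocks[1] = (bytes(corrupted), blocks[1][1])
print(scrub(blocks))  # [1]
```

A filesystem without per-block checksums would return the flipped bit to the application without complaint; the checksum mismatch is what turns silent corruption into a detectable error.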

@michael-manley Hi Michael, do you have any plans to release a new version that works with the latest XCP-ng 8.3?

@MathieuRA said in XO - enable PCI devices for pass-through - should it work already or not yet?:

> So the error is "normal".
>
> XOA tries to evacuate your host before restarting it, but your host's VMs have "nowhere to go" since you don't have another host.
>
> Are you using XO from sources?

IMHO, if there is no host to evacuate the running VMs to, the reboot procedure should simply notify the user and shut down the VMs.
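The behaviour proposed above could look roughly like this. It is a hypothetical Python sketch, not Xen Orchestra's actual code or API: `Host`, `plan_reboot`, and the action strings are invented for illustration, and a real implementation would also need to check memory, shared storage, and other migration constraints before picking an evacuation target.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    enabled: bool = True


def plan_reboot(target: Host, pool_hosts: list[Host], running_vms: list[str]) -> list[str]:
    """Return the (hypothetical) list of actions to perform before rebooting `target`."""
    # Possible evacuation targets: every other enabled host in the pool.
    candidates = [h for h in pool_hosts if h is not target and h.enabled]
    actions = []
    if running_vms and candidates:
        actions.append(f"evacuate {len(running_vms)} VM(s) from {target.name}")
    elif running_vms:
        # Proposed behaviour: nowhere to migrate, so warn and shut down instead of failing.
        actions.append(f"notify: no other host available to evacuate {target.name}")
        actions.extend(f"shutdown {vm}" for vm in running_vms)
    actions.append(f"reboot {target.name}")
    return actions


solo = Host("xcp-host-1")
print(plan_reboot(solo, [solo], ["vm-a", "vm-b"]))
```

On a single-host pool this prints `['notify: no other host available to evacuate xcp-host-1', 'shutdown vm-a', 'shutdown vm-b', 'reboot xcp-host-1']`, while a pool with a second enabled host would get a single evacuate step instead.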

Why isn't a disk/SR/VDI performance graph available on the stats page for hosts/VMs?

Thanks

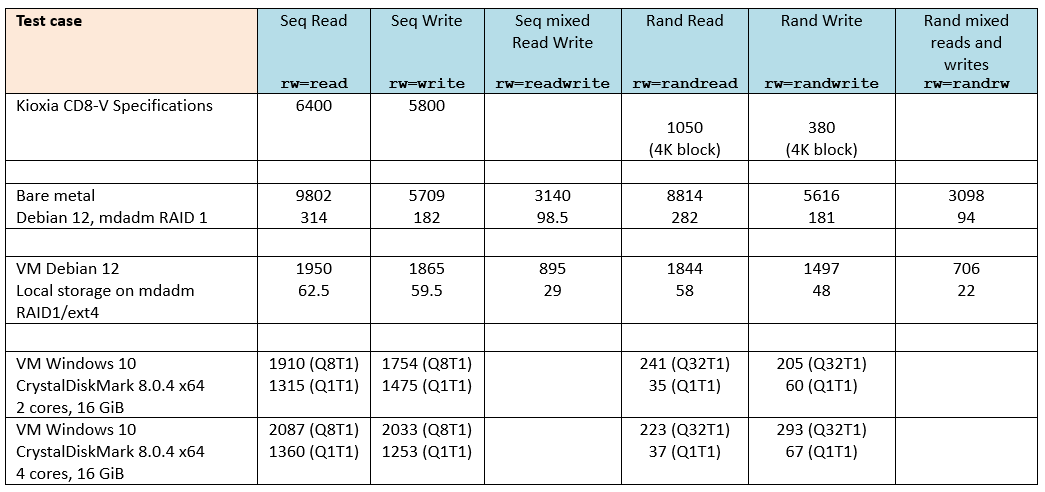

I've run some benchmarks on my new servers and want to share the results with you.

Server:

fio parameters common to all tests:

`--direct=1 --rw=randwrite --filename=/mnt/md0/test.io --size=50G --ioengine=libaio --iodepth=64 --time_based --numjobs=4 --bs=32K --runtime=60 --eta-newline=10`

Debian VM: 4 vCPUs, 4 GB memory, tools installed

Windows VM: 2/4 vCPUs, 4 GB memory, tools installed
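To help read the results, here is a small Python sketch of the arithmetic implied by the common fio parameters: `--iodepth` applies per job, so with `--numjobs=4` up to 256 writes of 32 KiB can be in flight at once, and bandwidth is simply IOPS × block size. The helper names are made up, sizes are treated as binary multiples (fio's default interpretation), and the 10,000 IOPS figure is only an example input, not one of the measured results.

```python
KIB = 1024


def parse_size(text: str) -> int:
    """Convert fio-style sizes such as '32K' or '50G' to bytes (binary multiples)."""
    units = {"K": KIB, "M": KIB**2, "G": KIB**3}
    suffix = text[-1].upper()
    if suffix in units:
        return int(text[:-1]) * units[suffix]
    return int(text)


def outstanding_ios(iodepth: int, numjobs: int) -> int:
    """Maximum number of I/Os in flight: each job keeps `iodepth` requests queued."""
    return iodepth * numjobs


def iops_to_mib_s(iops: float, block_size: int) -> float:
    """Throughput in MiB/s for a given IOPS rate and block size in bytes."""
    return iops * block_size / KIB**2


BS = parse_size("32K")  # --bs=32K
in_flight = outstanding_ios(64, 4)  # --iodepth=64, --numjobs=4
print(in_flight)  # 256
print(in_flight * BS / KIB**2)  # 8.0 (MiB queued at once)
print(iops_to_mib_s(10_000, BS))  # 312.5 (example input only)
```

Going the other way, dividing a reported MiB/s figure by 0.03125 (32 KiB expressed in MiB) gives the corresponding IOPS for these tests.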

Results:

Considerations:

I suppose I can install the new servers with xcp-ng-8.3.0-beta2-test3.iso and then update to the RC/stable release with `yum update`.

Am I correct?

Regards

Paolo

My new servers will be available next week. The full specs are:

Planned setup (KISS):

Questions / your opinion about:

Thanks