Grant Table in Xen

Hello there! I'm Damien, an XCP-ng developer at Vates, and I'm also doing a PhD with the aim of improving storage performance on Xen. For my first blog post here, I'd like to talk about one of the interfaces used in the Xen hypervisor. This interface allows the exchange of data between guests: the Grant Table.

The Grant Table is a secured shared memory interface where one domain gives access to a part of its memory to another domain. To ensure secure access from a foreign guest to this memory, Xen is used as a (secure) mediator.

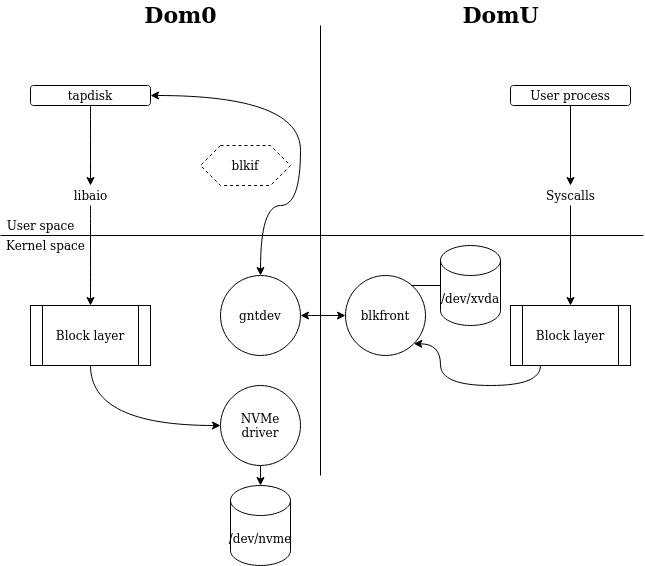

One usage example of this is tapdisk. It is the software in Dom0 that receives block storage requests from the paravirtualized (PV) disk driver blkfront (the driver behind the devices that appear as /dev/xvd[a-z]) and ensures that the requests end up on storage.

Disk requests are exchanged between the blkfront driver and the backend tapdisk over the grant table using a protocol called blkif.

blkif is the storage paravirtualization protocol in XCP-ng (and Xen in general). It uses a block driver in the guest kernel, xen-blkfront, which transmits requests through a producer-consumer ring in a shared memory segment. The producer is the guest frontend driver and the consumer is a backend in another domain, which in XCP-ng is tapdisk.

This shared memory buffer is, you guessed it, using the Grant Table. In fact, blkif uses Grants exclusively to exchange data after the initial XenBus connection. First, to access the shared ring where the requests are given. Second, every request transmitted from the frontend to the backend contains one or multiple grant references pointing to the data of said requests in the guest memory.
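To make the ring idea more concrete, here is a minimal sketch of a single-producer, single-consumer ring whose requests carry grant references instead of pointers. It is a toy model and not the real blkif layout: every name (toy_ring, toy_request, TOY_RING_SIZE, TOY_MAX_SEGMENTS) is an assumption made up for this illustration, and a real shared ring also needs memory barriers and a notification mechanism.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_RING_SIZE    8u   /* hypothetical; must be a power of two */
#define TOY_MAX_SEGMENTS 4u   /* hypothetical per-request limit */

/* One request: an id plus the grant references of its data pages. */
struct toy_request {
    uint64_t id;                      /* lets the frontend match the response */
    uint8_t  nr_segments;             /* how many entries of gref[] are used */
    uint32_t gref[TOY_MAX_SEGMENTS];  /* grant references, not pointers */
};

/* Shared ring: the frontend only advances prod, the backend only cons. */
struct toy_ring {
    uint32_t prod;
    uint32_t cons;
    struct toy_request slot[TOY_RING_SIZE];
};

/* Frontend side: queue a request, or report that the ring is full. */
static bool toy_ring_push(struct toy_ring *r, const struct toy_request *req)
{
    assert(req->nr_segments <= TOY_MAX_SEGMENTS);
    if (r->prod - r->cons == TOY_RING_SIZE)
        return false;
    r->slot[r->prod % TOY_RING_SIZE] = *req;
    /* A real shared ring needs a write barrier here, before publishing. */
    r->prod++;
    return true;
}

/* Backend side: take the oldest request, or report that the ring is empty. */
static bool toy_ring_pop(struct toy_ring *r, struct toy_request *out)
{
    if (r->cons == r->prod)
        return false;
    *out = r->slot[r->cons % TOY_RING_SIZE];
    r->cons++;
    return true;
}
```

The backend never receives a guest pointer: it only gets grant references, which it has to map through Xen before touching the data.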

Anyway, the Grant Table interface is something that the kernel can manipulate to grant access to a page or to access a foreign one. It can also be used from userspace, which I will cover after the kernel side, with (I hope) simple code examples.

From inside the kernel

The userspace use case will be analyzed in the next section. We'll start on the kernel side. Buckle up!

Low level working

There are two sides to it: you might want to share a page, or you might want to access a page shared by someone else. The mechanism is a bit different between those two, for obvious security reasons.

From a guest wanting to share a page

A domain wanting to share a page of memory would just need to write information into its grant table. The grant table is an array of structures, with the grant ref being the index in that array.

Example from the Linux kernel drivers/xen/grant-table.c:

    static void gnttab_update_entry_v2(grant_ref_t ref, domid_t domid,
                                       unsigned long frame, unsigned int flags)
    {
        gnttab_shared.v2[ref].hdr.domid = domid;
        gnttab_shared.v2[ref].full_page.frame = frame;
        wmb(); /* Hypervisor concurrent accesses. */
        gnttab_shared.v2[ref].hdr.flags = GTF_permit_access | flags;
    }

That structure contains enough information for Xen to be able to identify the page of memory and reference it when the other side eventually asks for access.
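If you want to play with the idea outside of a kernel, the granting side can be modelled as a plain array. This is only a sketch under assumptions: the entry layout, the TOY_GTF_* flag values and the toy_grant_access helper are invented for the illustration and are not the Xen ABI.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_GTF_PERMIT_ACCESS 0x1u  /* hypothetical: entry is valid */
#define TOY_GTF_READONLY      0x4u  /* hypothetical: peer may only read */
#define TOY_NR_ENTRIES        32u

/* Toy grant entry: who may access which frame, and how. */
struct toy_grant_entry {
    uint16_t flags;
    uint16_t domid;
    uint64_t frame;
};

static struct toy_grant_entry toy_table[TOY_NR_ENTRIES];

/* Grant `domid` access to `frame`. Returns the grant ref (the index of
 * the entry in the table), or -1 if the table is full. */
static int toy_grant_access(uint16_t domid, uint64_t frame, int readonly)
{
    assert(domid != UINT16_MAX); /* arbitrary sanity check for the toy */
    for (uint32_t ref = 0; ref < TOY_NR_ENTRIES; ref++) {
        if (toy_table[ref].flags != 0)
            continue; /* entry already in use */
        toy_table[ref].domid = domid;
        toy_table[ref].frame = frame;
        /* Real code puts a write barrier here: the flags go last so the
         * entry never looks valid while it is only half filled. */
        toy_table[ref].flags = (uint16_t)(TOY_GTF_PERMIT_ACCESS |
                                          (readonly ? TOY_GTF_READONLY : 0u));
        return (int)ref;
    }
    return -1;
}
```

The ordering is the interesting part: domid and frame are written first, and the flags that make the entry valid are written last, which mirrors the wmb() in the kernel function.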

From a guest wanting to access a page

For a domain to access a grant (really, a page of memory) shared with it by another domain, it first needs to ask Xen to map the grant into its memory. To identify which grant it is, it needs two things: the domid of the other domain and the grant reference.

The domid of the other domain will identify in which grant table Xen needs to look. Then the grant reference identifies the entry in that grant table.

Using this information, the domain makes a grant-table hypercall asking Xen to map the foreign page into its own address space.
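The lookup described above can be sketched as a small function playing the role of the hypervisor. This is a conceptual model only, not Xen's implementation: the table layout, the flag values, the status codes and toy_map_grant are all assumptions made for the example.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_PERMIT_ACCESS 0x1u  /* hypothetical flag values */
#define TOY_READONLY      0x4u
#define TOY_NR_DOMAINS    4u
#define TOY_NR_ENTRIES    16u

enum toy_status {
    TOY_OKAY       =  0,
    TOY_BAD_DOMAIN = -1,
    TOY_BAD_REF    = -2,
    TOY_DENIED     = -3
};

struct toy_entry {
    uint16_t flags;
    uint16_t domid;  /* domain allowed to map this entry */
    uint64_t frame;
};

/* One grant table per domain, indexed by domid in this toy model. */
static struct toy_entry toy_tables[TOY_NR_DOMAINS][TOY_NR_ENTRIES];

/* `caller` asks to map grant `ref` from the table of domain `granter`. */
static enum toy_status toy_map_grant(uint16_t caller, uint16_t granter,
                                     uint32_t ref, int want_write,
                                     uint64_t *frame_out)
{
    assert(frame_out);
    if (granter >= TOY_NR_DOMAINS)
        return TOY_BAD_DOMAIN;              /* the domid selects the table */
    if (ref >= TOY_NR_ENTRIES)
        return TOY_BAD_REF;                 /* the ref selects the entry */
    const struct toy_entry *e = &toy_tables[granter][ref];
    if (!(e->flags & TOY_PERMIT_ACCESS))
        return TOY_BAD_REF;                 /* nothing was granted here */
    if (e->domid != caller)
        return TOY_DENIED;                  /* granted to someone else */
    if (want_write && (e->flags & TOY_READONLY))
        return TOY_DENIED;                  /* read-only grant */
    *frame_out = e->frame;
    return TOY_OKAY;
}
```

The point of the model is that the mapping side never names a frame itself: it can only name a (domid, ref) pair, and the mediator decides whether that pair resolves to a page.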

The structure given to Xen in the hypercall containing the parameters looks like this:

    struct gnttab_map_grant_ref {
        /* IN parameters. */
        uint64_t host_addr;
        uint32_t flags;               /* GNTMAP_* */
        grant_ref_t ref;
        domid_t dom;
        /* OUT parameters. */
        int16_t status;               /* => enum grant_status */
        grant_handle_t handle;
        uint64_t dev_bus_addr;
    };

Convenience wrappers of the Linux kernel

The kernel exposes an interface so that all its subsystems can use grant tables more easily than by performing hypercalls directly and dealing with the memory operations needed by the kernel memory system.

For example, granting access to a frame can be done with gnttab_grant_foreign_access.

    int gnttab_grant_foreign_access(domid_t domid, unsigned long frame,
                                    int readonly);

Mapping a foreign page can be done with gnttab_map_refs:

    int gnttab_map_refs(struct gnttab_map_grant_ref *map_ops,
                        struct gnttab_map_grant_ref *kmap_ops,
                        struct page **pages, unsigned int count);

From userspace

Another use case, the one used in tapdisk, is accessing foreign memory from userspace with a "normal" application.

Of course, we can't manipulate an interface hosted in the kernel directly. That is why xen-gntdev and xen-gntalloc exist.

These two drivers are in the mainline Linux kernel and should be easily available on any Linux system.

They both expose the grant table functionalities to userspace using an ioctl and an mmap interface:

- gntalloc permits you to allocate a shared memory page and obtain a grant reference.
- gntdev exposes the ability to map a foreign page using a grant reference and the DomId of the other VM.

From the guest wanting to share a page

First, you have to include the header for using the driver. The snippets below also rely on the usual system headers for open, ioctl, mmap and malloc (fcntl.h, sys/ioctl.h, sys/mman.h and stdlib.h).

    #include <xen/gntalloc.h>

Second, you want to have a file descriptor opened on the driver node.

    int gntalloc_fd = open("/dev/xen/gntalloc", O_RDWR);
    if (gntalloc_fd < 0) {
        // Error: maybe you need to load the driver :)
    }

Then you need to allocate a struct ioctl_gntalloc_alloc_gref and fill in its input fields before calling an ioctl with a pointer to it as parameter.

    struct ioctl_gntalloc_alloc_gref* gref = malloc(sizeof(struct ioctl_gntalloc_alloc_gref));
    if (gref == NULL) {
        // Error: couldn't allocate buffer
    }

    gref->domid = domid; // DomID of the other side
    gref->count = count; // Number of grants we want to allocate
    gref->flags = GNTALLOC_FLAG_WRITABLE;

    int err = ioctl(gntalloc_fd, IOCTL_GNTALLOC_ALLOC_GREF, gref);
    if (err < 0) {
        free(gref);
        // Error: ioctl failed
    }

Finally, you want to call mmap using the index that the ioctl returned inside the structure.

    char* shpages = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, gntalloc_fd, gref->index);
    if (shpages == MAP_FAILED) {
        // Error: mapping the grants failed
    }

len is the size in bytes to map, a grant being PAGE_SIZE bytes. It can be obtained by multiplying PAGE_SIZE by the number of grants.
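As a small sketch of that computation: userspace has no PAGE_SIZE macro by default, so the helper below asks the system for it with sysconf and guards against overflow. The names mapping_len and grant_mapping_len are mine, and the sketch assumes the system page size is also the size of one grant, as the text above does.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <unistd.h>

/* Bytes needed for `count` pages of `page_size` bytes, or 0 on overflow. */
static size_t mapping_len(uint32_t count, size_t page_size)
{
    assert(page_size != 0);
    if (count > SIZE_MAX / page_size)
        return 0;
    return (size_t)count * page_size;
}

/* Same computation using the page size reported by the system.
 * Returns 0 if the page size cannot be queried. */
static size_t grant_mapping_len(uint32_t count)
{
    long page_size = sysconf(_SC_PAGESIZE);
    return page_size > 0 ? mapping_len(count, (size_t)page_size) : 0;
}
```

The result of grant_mapping_len(count) can then be passed as len to mmap, and later to munmap.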

After that, access the mapped memory like any shared memory segment.

    sprintf(shpages, "Hello, World!");

Before cleaning and quitting, we need to keep the memory in our control until we know that the other side has freed it.
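Why wait? A grant that is still mapped by the peer cannot be safely taken back. Here is a toy model of that rule, assuming a hypothetical "in use" bit that stands in for whatever the hypervisor sets while the peer holds a mapping. None of these names come from Xen or from the gntalloc driver.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_PERMIT_ACCESS 0x01u  /* hypothetical: grant is valid */
#define TOY_IN_USE        0x18u  /* hypothetical: peer has it mapped */

struct toy_entry {
    uint16_t flags;
    uint16_t domid;
    uint64_t frame;
};

/* Try to revoke a grant. Returns false, and leaves the entry untouched,
 * while the peer still has the page mapped. A real implementation would
 * have to do this check-and-clear atomically. */
static bool toy_end_access(struct toy_entry *e)
{
    assert(e);
    if (e->flags & TOY_IN_USE)
        return false;
    e->flags = 0;
    return true;
}
```

In this model the granting side has to retry (or be notified) until the peer has unmapped the page, and only then can the memory be reused.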

From a guest wanting to access a shared page

The "other side", the other guest accessing the memory, needs the grant reference and the DomId of the domain sharing its memory.

They need to be communicated to this domain using another communication channel, e.g. Xenstore.
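Xenstore values are strings, so one simple option is to publish the grant references as a comma-separated list. The sketch below only covers the encoding and decoding. The format and the helper names refs_to_string and string_to_refs are assumptions for this example, and the actual Xenstore read and write calls are left out.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Write refs as "12,13,14" into buf.
 * Returns 0 on success, -1 if buf is too small. */
static int refs_to_string(const uint32_t *refs, size_t n, char *buf, size_t len)
{
    size_t used = 0;
    assert(buf && len > 0);
    buf[0] = '\0';
    for (size_t i = 0; i < n; i++) {
        int w = snprintf(buf + used, len - used, i ? ",%u" : "%u",
                         (unsigned)refs[i]);
        if (w < 0 || (size_t)w >= len - used)
            return -1;
        used += (size_t)w;
    }
    return 0;
}

/* Parse "12,13,14" back into refs.
 * Returns the number of refs parsed, or -1 on malformed input
 * or when more than `max` refs are present. */
static int string_to_refs(const char *s, uint32_t *refs, size_t max)
{
    size_t n = 0;
    while (*s != '\0') {
        char *end;
        unsigned long v = strtoul(s, &end, 10);
        if (end == s || v > UINT32_MAX || n == max)
            return -1;
        refs[n++] = (uint32_t)v;
        if (*end == ',')
            end++;
        else if (*end != '\0')
            return -1;
        s = end;
    }
    return (int)n;
}
```

The sharing side would write the string under a key both domains agreed on, and the other side would read it back and feed the parsed array into the mapping code.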

First, you need to include the header files (plus the same system headers as on the sharing side):

    #include <xen/grant_table.h>
    #include <xen/gntdev.h>

After that, you need to open a file descriptor on the gntdev node.

    int gntdev_fd = open("/dev/xen/gntdev", O_RDWR);
    if (gntdev_fd < 0) {
        // Error: maybe you need to load the driver
    }

After that, you need to make an ioctl to the gntdev driver using this data.

In the following code, nb_grant is the number of grant references, refid is an array of grant references and domid is the domid of the other domain.

    // The structure already holds one grant ref, hence the (nb_grant-1).
    struct ioctl_gntdev_map_grant_ref* gref = malloc(
        sizeof(struct ioctl_gntdev_map_grant_ref) +
        (nb_grant-1) * sizeof(struct ioctl_gntdev_grant_ref));
    if (gref == NULL) {
        // Error: allocation failed
    }

    gref->count = nb_grant;
    for (int i = 0; i < nb_grant; ++i) {
        struct ioctl_gntdev_grant_ref* ref = &gref->refs[i];
        ref->domid = domid;
        ref->ref = refid[i];
    }

    int err = ioctl(gntdev_fd, IOCTL_GNTDEV_MAP_GRANT_REF, gref);
    if (err < 0) {
        // Error: our ioctl failed
    }

This first call to the gntdev driver gives us an index in the struct ioctl_gntdev_map_grant_ref structure. This index, like for gntalloc, needs to be used in an mmap call.

    char* shbuf = mmap(NULL, nb_grant*PAGE_SIZE, PROT_READ | PROT_WRITE, MAP_SHARED, gntdev_fd, gref->index);
    if (shbuf == MAP_FAILED) {
        // Error: failed mapping the grant references
    }

After that, you can read and write memory in the same way you would a "normal" shared memory segment.

    printf("%s\n", shbuf);

Your terminal should then print the string you wrote on the sharing side.
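The allocation size used for the map request (one structure plus nb_grant-1 extra references) is easy to get wrong, so here is a sketch that isolates it. The two structures are hypothetical stand-ins, only loosely modelled on the gntdev ones, so that the example compiles without the Xen headers. The helper names are mine as well.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-ins for the gntdev request types. */
struct toy_grant_ref {
    uint32_t domid;
    uint32_t ref;
};

struct toy_map_request {
    uint32_t count;
    uint32_t pad;
    uint64_t index;
    struct toy_grant_ref refs[1];  /* really `count` entries */
};

/* Size of a request carrying nb_grant references (nb_grant >= 1). */
static size_t toy_map_request_size(uint32_t nb_grant)
{
    assert(nb_grant >= 1);
    return sizeof(struct toy_map_request) +
           (size_t)(nb_grant - 1) * sizeof(struct toy_grant_ref);
}

/* Allocate and fill a request. Returns NULL if allocation fails.
 * The caller releases it with free(). */
static struct toy_map_request *toy_map_request_new(uint16_t domid,
                                                   const uint32_t *refid,
                                                   uint32_t nb_grant)
{
    struct toy_map_request *req = calloc(1, toy_map_request_size(nb_grant));
    if (req == NULL)
        return NULL;
    req->count = nb_grant;
    for (uint32_t i = 0; i < nb_grant; i++) {
        req->refs[i].domid = domid;
        req->refs[i].ref = refid[i];
    }
    return req;
}
```

Using calloc instead of malloc also zeroes the padding and the output field, so nothing uninitialized is handed to the driver.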

After using a shared memory segment

Once we are done with the foreign memory, we need to release access to this shared memory segment.

For the domain accessing it, we can simply use munmap followed by IOCTL_GNTDEV_UNMAP_GRANT_REF on the ioctl interface.

Unmapping with munmap instructs the kernel to update the process address space.

    err = munmap(shbuf, nb_grant*PAGE_SIZE);
    if (err != 0) {
        // Error: unmapping failed
    }

Then the ioctl call instructs the gntdev driver to transmit the message to Xen.

    struct ioctl_gntdev_unmap_grant_ref ugref;
    ugref.index = gref->index; // index returned by the map ioctl
    ugref.count = nb_grant;

    int ret = ioctl(gntdev_fd, IOCTL_GNTDEV_UNMAP_GRANT_REF, &ugref);
    if (ret < 0) {
        // Error: failed gntdev unmap
    }

For the domain granting access, the procedure is very similar:

    munmap(shpages, len);

    struct ioctl_gntalloc_dealloc_gref dgref;
    dgref.index = gref->index;
    dgref.count = gref->count;

    int ret = ioctl(gntalloc_fd, IOCTL_GNTALLOC_DEALLOC_GREF, &dgref);
    if (ret < 0) {
        // Error: deallocating grant ref failed
    }

In both cases, the index is the one given by the allocation/mapping call and count is the number of grants.

Conclusion

Using this simple interface, you can imagine all the protocols and applications that you might want to build on top of such a system. It gives you a secure way to share memory with another piece of software running in another virtual machine. The hypervisor is the trusted party here, so only what the guest wants to share is accessible by the other side.

Another interesting thing: the frontend can be a kernel driver while the backend can be a userspace application, like in tapdisk. This makes such an application easier to code and maintain, at least on the backend side.

As a concrete application: by relying on this interface and a standardized protocol such as blkif, we can easily change the storage backend to something more powerful or built on a different technology. One example is a backend inside SPDK, a userspace NVMe driver used by multiple solutions, with lots of neat functionalities. Which is… really fast! You'll learn more about that in the near future, stay tuned 😉

I hope this blog post was clear enough to make the Grant Table easier to understand. You can find the example code for this post on my GitHub: https://github.com/Nambrok/grant_table_blog_code

If you have any questions, join us on the XCP-ng forums. It's a good place to discuss XCP-ng and Xen, its central engine.