First SMAPIv3 driver is available in preview

As per the title: our first SMAPIv3 driver is available in preview, and it's based on top of ZFS! This development marks a significant milestone in our commitment to enhancing storage management in XCP-ng.

Our journey with SMAPIv3 began with an introduction to its possibilities through our previous blog post:

Since that initial effort, we have actively sought collaboration with the XenServer Business Unit (formerly Citrix), aiming to accelerate the development process through a partnership. Despite several meetings and discussions, the focus from their side remained on non-open-source plugins/drivers for the SMAPIv3, diverging from our vision of an open and community-driven ecosystem.

Given the stagnation in collaborative efforts, we decided it was time to forge our own path. Our team has worked diligently to bring you this preview, allowing the community to test, provide feedback, and help shape the future of this project.

🧭 The plan

At the heart of our new SMAPIv3 initiative is the goal to roll out an initial driver and engage our community in its real-world testing. This approach is not just about leveraging the collective expertise of our users but also about refining and enhancing the driver based on your invaluable feedback. Here’s how we’re planning to proceed.

First, today's initial release: we are starting with a local driver, which simplifies the development and testing phases, enabling us to deliver results faster. This initial release serves as the foundation upon which further enhancements and broader capabilities will be built. We chose ZFS because:

- It's stable and powerful: ZFS is renowned for its robust feature set and stability, making it an excellent backbone for our ambitious project.

- It's very flexible: the versatility in configuring ZFS challenges us to develop a truly agnostic SMAPIv3 driver. In the future, our goal is to create a framework/SMAPIv3 that not only supports ZFS but is also compatible with a wide array of storage options like LVM, iSCSI, Fibre Channel, and NVMe-oF. ZFS is just a step in that direction!

Then, after gathering feedback and working on differential export, we'll leverage this expertise to move further forward. Currently, we use blktap for the raw data path, which is effective but not without its limitations. Looking ahead, we are considering significant changes or alternatives to this approach, such as possibly using qemu-dp, which would bring more features without changing the functional aspects of this first driver. This also means that the file-based storage options we develop later might use qemu-dp with .qcow2 files to better suit different storage types and needs.

Through this phased and thoughtful approach, we aim to build a driver that not only meets the current demands but also anticipates future storage trends and technologies. We are excited to embark on this journey with you, our community, and we eagerly await your feedback and contributions as we move forward!

🧑‍🔧 How to test it

Obviously, this driver is available only for XCP-ng 8.3. As you'll see, it's very straightforward to install it: the package is already in our repositories.

Installation

First, install the package and load the ZFS module:

yum install xcp-ng-xapi-storage-volume-zfsvol
modprobe zfs

Finally, you need to restart the toolstack, either via Xen Orchestra or via the host CLI: xe-toolstack-restart.
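If you want to double-check that the ZFS module really got loaded before restarting the toolstack, something like the following might help. It is only a minimal sketch: the `module_loaded` helper and its optional second argument are our own illustrative names, not part of the package, and it simply looks for the module name in `/proc/modules`.

```shell
# Sketch: succeed if a kernel module appears in a /proc/modules-style listing.
# The helper name and the optional file argument are illustrative assumptions.
module_loaded() {
    local name="$1"
    local modules_file="${2:-/proc/modules}"
    # Each line of /proc/modules starts with the module name followed by a space.
    grep -q "^${name} " "$modules_file"
}

# Example usage on the host, after `modprobe zfs`:
#   module_loaded zfs && echo "zfs module is loaded"
```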

That's it, you are ready to create your first SMAPIv3 SR, based on ZFS!

SR creation

Now you can create the Storage Repository (SR). We'll show you how to do it with the xe CLI, on the host where you just installed the package. Here is an example using a single device called /dev/sdb:

xe sr-create host-uuid=<HOST UUID> type=zfs-vol name-label="SR ZFS-VOL" content-type=user shared=false device-config:device=/dev/sdb

This will return the UUID of the freshly created SR. You can also check that the zpool has been created:

zpool list

NAME                                      SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
sr-02da4b94-17f7-42ba-d09d-89042ebd92e3  49.5G   152K  49.5G        -         -    0%   0%  1.00x  ONLINE  /var/run/sr-mount

You are now all set, and you can create disks on this SR, like with any other SR type. For example, create a VM and select the new SR, and install it as usual!
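If you'd rather script the pool check than read the table by eye, here is a small sketch. It assumes you feed it the output of `zpool list -H -o name,health` (tab-separated, no header); the `unhealthy_pools` name is purely illustrative.

```shell
# Sketch: read "name<TAB>health" lines on stdin, as printed by
# `zpool list -H -o name,health`, and print every pool that is not ONLINE.
# The function name is an illustrative assumption.
unhealthy_pools() {
    awk -F '\t' '$2 != "ONLINE" { print $1 }'
}

# Example usage on the host:
#   zpool list -H -o name,health | unhealthy_pools
```

An empty result means every pool reported ONLINE.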

Advanced SR creation

Obviously, this is a pretty simple example with a single device. To avoid too much complexity on the creation side, we also added the capability to create an SR on a pre-configured ZFS pool, e.g. one using RAIDZ, mirroring and such. In that case, you can directly pass the name of the pre-existing target pool. For example, if you decide to create your ZFS pool this way:

zpool create my_complex_zpool mirror /dev/sda /dev/sdb mirror /dev/sdc /dev/sdd

Then, you'll be able to "pass" this pool as being the new SR directly:

xe sr-create host-uuid=<HOST UUID> type=zfs-vol name-label="SR ZFS-VOL" content-type=user shared=false device-config:zpool=<my_complex_zpool_name>

Alternatively, you can also pass the zpool creation arguments inside the SR create command, for example:

xe sr-create host-uuid=<HOST UUID> type=zfs-vol name-label="SR ZFS-VOL" content-type=user shared=false device-config:vdev="mirror /dev/sda /dev/sdb mirror /dev/sdc /dev/sdd"

We're not sure yet which method we'll keep in the long run, but at least for now, there's enough flexibility to do whatever you want "underneath" with a ZFS pool.
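To keep the three variants above straight (device, zpool, vdev), a tiny helper that only prints the command can be handy. This is a sketch under assumptions: `zfs_sr_create_cmd` and its argument order are hypothetical, it executes nothing, and it does not escape values containing double quotes.

```shell
# Sketch: print (not run) an `xe sr-create` command for one of the three
# device-config keys shown in this post. The function name and argument
# order are hypothetical; values containing double quotes are not handled.
zfs_sr_create_cmd() {
    local host_uuid="$1"
    local key="$2"
    local value="$3"
    case "$key" in
        device|zpool|vdev) ;;
        *) echo "unsupported device-config key: $key" >&2; return 1 ;;
    esac
    printf 'xe sr-create host-uuid=%s type=zfs-vol name-label="SR ZFS-VOL" content-type=user shared=false device-config:%s="%s"\n' \
        "$host_uuid" "$key" "$value"
}

# Example usage:
#   zfs_sr_create_cmd "<HOST UUID>" vdev "mirror /dev/sda /dev/sdb"
```

Review the printed line, then paste it into the host shell once it looks right.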

📷 Usage

You can use this SR almost like a regular SMAPIv1 SR. You can do all these actions on your VDI:

- Creation: any kind of size you want (no limitation in size, see below)

- Snapshot & clone: regular VM snapshot & clone with all its disks on it, or just snapshot the disk, even revert it!

- Size: grow a VDI in size. It's fully thin provisioned!

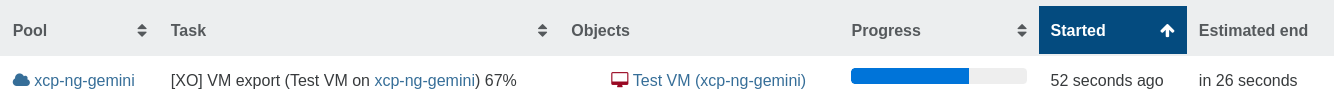

- Import & Export: import & export your VM active disk in XVA format.

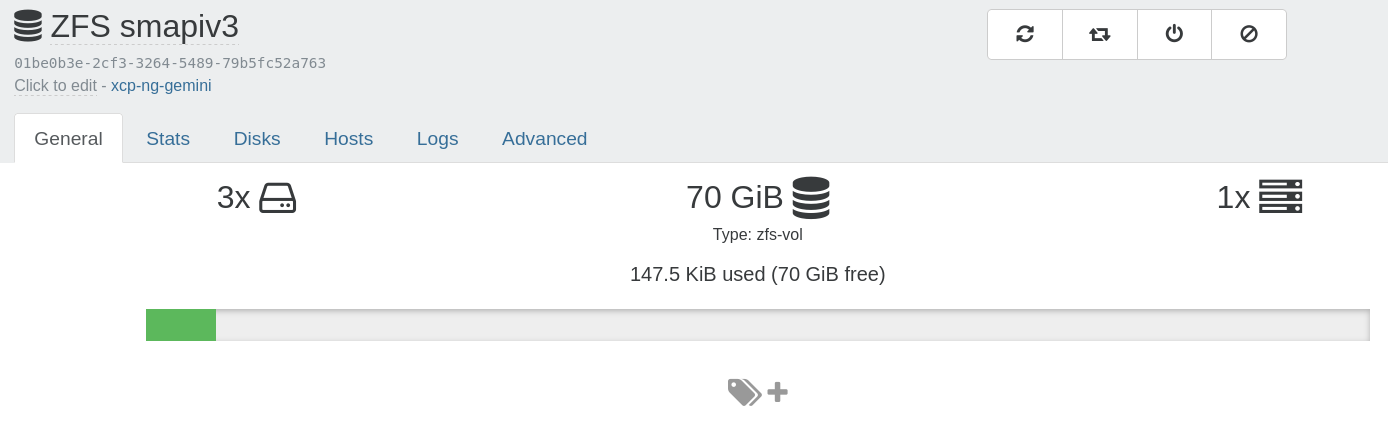

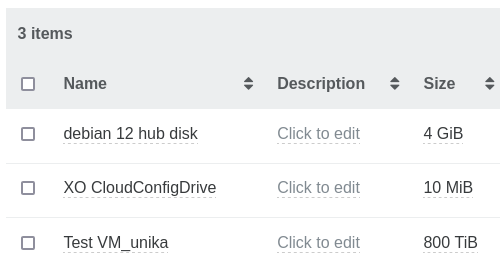

In case you wonder about large VDIs, on our small 70GiB thin provisioned storage repository:

Exporting and importing XVA files is also a cool way to play with this new SR, by sending it VMs coming from your backups or from other SRs:

Current limitations

As excited as we are about this first release of our SMAPIv3 driver, it's important to note a few areas where it's not quite up to speed yet. Currently, you won't be able to use this SR type for live or offline storage motion, nor can you make differential backups using Xen Orchestra. Also, while setting up VMs, remember that the fast clone option isn’t going to work with this initial driver: so you'll want to uncheck that in XO.

Also, there's a ZFS-specific limitation: snapshots and clones must alternate in a chain. It means no clone of a clone, and no snapshot of a snapshot. This means snapshots cannot be used for copy or export!

Finally, there are no SR statistics available at the moment.
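To make the alternation rule concrete, here is a toy checker. It is only a model of the rule as stated here, not the driver's actual logic: the `chain_is_valid` name is made up, and it assumes a chain starts with the original volume, which must be snapshotted before anything can be cloned from it.

```shell
# Sketch: succeed when a chain of kinds alternates as described:
# a snapshot may only follow a volume or a clone, and a clone may only
# follow a snapshot. "volume" is the original VDI and may only come first.
# This models the stated rule; it is not taken from the driver.
chain_is_valid() {
    local prev=""
    local kind
    for kind in "$@"; do
        case "$prev:$kind" in
            :volume|volume:snapshot|clone:snapshot|snapshot:clone) ;;
            *) return 1 ;;
        esac
        prev="$kind"
    done
    return 0
}

# Example usage:
#   chain_is_valid volume snapshot clone snapshot && echo "valid chain"
#   chain_is_valid volume snapshot snapshot || echo "snapshot of snapshot rejected"
```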

We’re well aware of these gaps, and we're on it! Releasing the driver now, even with these limitations, is crucial for us. It lets us gather your insights and experiences, which in turn helps us take those next steps more effectively. Each piece of feedback is vital, pushing us forward as we refine the driver to better meet your needs and enhance its capabilities.

⏭️ What's next

We’re just getting started with our new SMAPIv3 driver, and your feedback is going to be key in making sure we’re moving in the right direction.

So please test it and report in here:

As we incorporate your insights, we’re learning a ton about the best choices for components, API designs, and more, all of which will help us bring even more to the table in the coming months. Next up, we’re tackling file-based storage, and down the line, shared block storage is the big challenge we aim to conquer.

We’re also looking into the best ways to make this driver easy to integrate across different systems. Right now, it looks like Network Block Device (NBD) might be our best bet for achieving that. Stay tuned and keep those suggestions coming: we’re building this together, and your input is invaluable as we chart the future of virtualization.