XCP-ng High Availability: a guide

This article provides an introduction to the VM High Availability (HA) mechanisms in XCP-ng, detailing how you can leverage these features to ensure continuous operation of your virtual machines (VMs).

Implementing VM High Availability is no simple task. The primary challenge lies in reliably detecting when a server has truly failed to prevent unpredictable behavior. However, that's just one piece of the puzzle. For instance, if you lose network connectivity but retain access to shared storage, how do you ensure that multiple hosts don't simultaneously write to the storage, risking data corruption?

This is where XCP-ng, with its robust API (XAPI), excels. It expertly manages these scenarios, safeguarding your VMs in various failure cases. In this article, we'll explore how to protect your VMs under different conditions, using real-world examples for clarity.

⚠️ A word of caution before you begin

Before diving into HA configuration, it's crucial to understand that High Availability is a complex feature that demands careful consideration and a stable infrastructure. Here are a few points to keep in mind:

- HA is complex: High Availability introduces additional layers of complexity to your infrastructure. You must fully understand how HA operates before enabling it, as misconfigurations or misunderstandings can lead to unexpected issues. The overhead of maintaining HA is often underestimated.

- Infrastructure stability is key: your network and shared storage must be rock-solid. If your infrastructure isn't stable, HA can do more harm than good, potentially leading to frequent host fencing and more downtime than if HA were disabled. For example, losing access to shared storage can trigger host fencing, affecting all your VMs.

- Consider simplicity for smaller setups: for smaller environments, managing without HA might be easier. At Vates, we often choose not to use HA, relying instead on basic monitoring. This approach works well for us and might for you too.

- Need vs. Want: make sure you truly need HA before implementing it. If you decide it's necessary, proceed with caution and follow this guide closely.

🔧 How XCP-ng High Availability works

The HA mechanism in XCP-ng relies on the concept of a pool, where hosts communicate their status and share data:

- Host failure: if a host fails, it is detected by the pool master.

- Master failure: if the pool master fails, another host automatically takes over as the master.

To ensure a host is genuinely unreachable, XCP-ng uses multiple heartbeat mechanisms. Checking network connectivity alone isn’t enough; shared storage must also be monitored. Each host regularly writes data blocks to a dedicated Virtual Disk Image (VDI) on shared storage, following the "Dead Man's Switch" principle: as long as a host keeps writing, it is considered alive. This is why HA can only be configured with shared storage (iSCSI, Fibre Channel, XOSTOR or NFS). The pool must be certain a host has really stopped before restarting its VMs elsewhere. Otherwise, two hosts could write to the same VM disks simultaneously and corrupt data.
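The dead man's switch idea can be made concrete with a small Python model. This is an illustration, not XCP-ng's actual xHA implementation. Each host periodically stamps its own slot in a shared heartbeat area. A peer treats a host as silent on storage once that host's stamp is older than a timeout. The `HeartbeatVolume` class and its slot layout are hypothetical. The 60-second default is an assumption borrowed from the default HA timeout described later in this guide.

```python
from dataclasses import dataclass, field


@dataclass
class HeartbeatVolume:
    """Hypothetical model of the shared heartbeat VDI: one slot per host."""
    timeout: float = 60.0  # seconds; assumed to mirror the default HA timeout
    last_write: dict[str, float] = field(default_factory=dict)

    def write(self, host: str, now: float) -> None:
        # A live host keeps "pressing the switch" by stamping its own slot.
        self.last_write[host] = now

    def is_alive(self, host: str, now: float) -> bool:
        # A host counts as alive on storage only if its stamp is fresh enough.
        stamp = self.last_write.get(host)
        return stamp is not None and now - stamp <= self.timeout

    def silent_hosts(self, hosts: list[str], now: float) -> list[str]:
        # Hosts whose storage heartbeat has gone stale.
        return [h for h in hosts if not self.is_alive(h, now)]


if __name__ == "__main__":
    hb = HeartbeatVolume()
    hb.write("lab1", now=0.0)
    hb.write("lab2", now=0.0)
    hb.write("lab2", now=50.0)  # lab2 keeps writing, lab1 has stopped
    print(hb.silent_hosts(["lab1", "lab2"], now=70.0))  # ['lab1']
```

A stale storage heartbeat alone does not decide anything. It is combined with the network heartbeat, as described in the failure scenarios that follow.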

Here’s how XCP-ng handles different failure scenarios:

- Loss of both network and storage heartbeats: the host is considered unreachable, and the HA plan is initiated.

- Loss of storage but not network: If the host can communicate with a majority of the pool members, it stays active. This prevents any harm to the data since it cannot write to VM disks. However, if the host is isolated (unable to reach the majority of the pool), it initiates a reboot.

- Loss of network but not storage (worst case): The host recognizes itself as problematic and initiates a reboot procedure, ensuring data integrity through fencing.
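These three scenarios can be summarized as a small decision table. The Python sketch below encodes them from the point of view of a single host. The function names and arguments are hypothetical, and the real xHA daemon has more states and timers than this simplification. Here, "a majority of the pool members" is assumed to mean strictly more than half of the pool, counting the host itself. Under that rule, a 1-1 split in a 2-host pool has no majority.

```python
def has_majority(reachable_hosts: int, pool_size: int) -> bool:
    """True if this host reaches strictly more than half of the pool (itself included)."""
    return 2 * reachable_hosts > pool_size


def ha_reaction(storage_ok: bool, network_ok: bool,
                reachable_hosts: int, pool_size: int) -> str:
    """Simplified model of the three failure scenarios described above."""
    if storage_ok and network_ok:
        return "healthy"
    if not storage_ok and not network_ok:
        # Peers see neither heartbeat: the host is treated as gone.
        return "unreachable: pool runs the HA plan"
    if not storage_ok:
        # Storage lost, network still up: stay only while part of the majority.
        if has_majority(reachable_hosts, pool_size):
            return "stay active"
        return "self-fence (reboot)"
    # Network lost, storage still up (worst case): fence to protect the data.
    return "self-fence (reboot)"


if __name__ == "__main__":
    print(ha_reaction(False, True, reachable_hosts=2, pool_size=3))  # stay active
    print(has_majority(1, 2))  # False: no majority in a 1-1 split
```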

🏗️ Requirements

Enabling HA in XCP-ng requires thorough planning and validation of several prerequisites:

- Pool-Level HA only: HA can only be configured at the pool level, not across different pools.

- Minimum of 3 hosts recommended: While HA can function with just 2 XCP-ng servers in a pool, we recommend using at least 3 to prevent issues such as a split-brain scenario. With only 2 hosts, an equal split could result in one host being randomly fenced.

- Shared storage requirements: You must have shared storage available, including at least one iSCSI, NFS, XOSTOR or Fibre Channel LUN with a minimum size of 356 MB for the heartbeat Storage Repository (SR). The HA mechanism creates two volumes on this SR:

  - A 4 MB heartbeat volume for monitoring host status.

  - A 256 MB metadata volume to store pool master information for master failover situations.

- Dedicated heartbeat SR optional: While it's not necessary to dedicate a separate SR for the heartbeat, you can choose to do so. Alternatively, you can use the same SR that hosts your VMs.

- Unsupported storage for heartbeat: Storage using SMB or iSCSI authenticated via CHAP cannot be used as the heartbeat SR.

- Static IP addresses: Ensure that all hosts have static IP addresses to avoid disruptions from DHCP servers potentially reassigning IPs.

- Dedicated bonded interface recommended: For optimal reliability, we recommend using a dedicated bonded interface for the HA management network.

- VM agility for HA protection: For a VM to be protected by HA, it must meet certain agility requirements:

  - The VM’s virtual disks must reside on shared storage, such as iSCSI, NFS, or Fibre Channel LUN, which is also necessary for the storage heartbeat.

  - The VM must support live migration.

  - The VM should not have a local DVD drive connection configured.

  - The VM’s network interfaces should be on pool-wide networks.

If you create VLANs and bonded interfaces via the CLI, they might not be active or properly connected. This can cause a VM to appear non-agile and therefore unprotected by HA. Use the xe pif-plug command to plug (activate) the VLAN and bond PIFs so that the VM becomes agile. Additionally, the xe diagnostic-vm-status command can help identify why a VM isn’t agile, so you can take corrective action as needed.
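The agility rules above work naturally as a checklist. Here is a hedged Python sketch over a hypothetical VM description. The `VmSpec` fields are invented for illustration and do not correspond to XAPI field names. On a real pool, xe diagnostic-vm-status remains the tool to use.

```python
from dataclasses import dataclass


@dataclass
class VmSpec:
    """Hypothetical, simplified view of a VM's HA-relevant configuration."""
    name: str
    disks_on_shared_sr: bool
    supports_live_migration: bool
    has_local_dvd: bool
    networks_pool_wide: bool


def agility_problems(vm: VmSpec) -> list[str]:
    """Return the reasons a VM would not be agile (an empty list means agile)."""
    problems = []
    if not vm.disks_on_shared_sr:
        problems.append("virtual disks are not on shared storage")
    if not vm.supports_live_migration:
        problems.append("VM cannot be live migrated")
    if vm.has_local_dvd:
        problems.append("local DVD drive is connected")
    if not vm.networks_pool_wide:
        problems.append("a network interface is not on a pool-wide network")
    return problems


if __name__ == "__main__":
    vm = VmSpec("web01", disks_on_shared_sr=True, supports_live_migration=True,
                has_local_dvd=True, networks_pool_wide=True)
    print(agility_problems(vm))  # ['local DVD drive is connected']
```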

⚙️ Configuring HA in XCP-ng

You can check the status of HA, or enable and disable it, directly in Xen Orchestra or via the XCP-ng CLI.

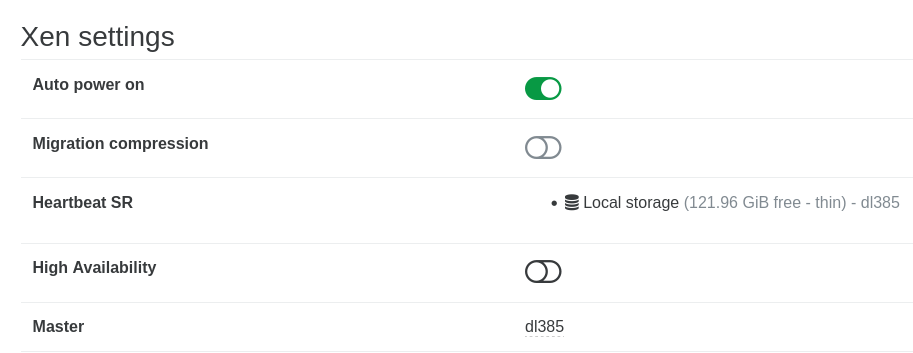

Configure the pool

To check if HA is enabled in your pool, in Xen Orchestra:

- in the pool view, a dedicated "cloud" icon shows a green check when HA is enabled

- in the Pool/Advanced tab, a toggle shows whether HA is enabled or disabled.

To enable HA, just toggle the button. You will then be asked to select the SR to use as the heartbeat SR. To do it via the CLI instead:

xe pool-ha-enable heartbeat-sr-uuids=<SR_UUID>

Once enabled, HA status will be displayed with the green toggle. That's it!

Maximum host failure tolerance

Consider how many host failures your pool can tolerate. For a two-host pool, the maximum number is one. If you lose one host, maintaining HA on the remaining host becomes impossible.

XCP-ng can calculate this value for you:

xe pool-ha-compute-max-host-failures-to-tolerate

In our example, this returns 1. If necessary, you can manually set the failure tolerance:

xe pool-param-set ha-host-failures-to-tolerate=1 uuid=<Pool_UUID>

If more hosts fail than this number, the system will raise an over-commitment alert.
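The answer depends on both the number of hosts and the VM load. The Python sketch below roughly approximates the question that xe pool-ha-compute-max-host-failures-to-tolerate answers, but it is not XAPI's planner. It makes three simplifying assumptions:

- memory is the only constraint;
- the worst case is losing the largest hosts;
- VMs are placed with first-fit-decreasing bin packing, a heuristic that can sometimes under-report what a smarter placement could achieve.

The host and VM sizes (in GiB) are made-up example values.

```python
def fits(vm_sizes: list[int], host_sizes: list[int]) -> bool:
    """First-fit-decreasing check: can every VM be placed on these hosts?"""
    free = sorted(host_sizes, reverse=True)
    for vm in sorted(vm_sizes, reverse=True):
        for i, capacity in enumerate(free):
            if vm <= capacity:
                free[i] -= vm
                break
        else:
            return False  # no remaining host has room for this VM
    return True


def max_failures_to_tolerate(host_sizes: list[int], protected_vms: list[int]) -> int:
    """Largest k such that the protected VMs still fit after losing the k biggest hosts."""
    smallest_first = sorted(host_sizes)
    for k in range(len(host_sizes) - 1, 0, -1):
        # Assumed worst case: the k largest hosts are the ones that fail.
        survivors = smallest_first[: len(host_sizes) - k]
        if fits(protected_vms, survivors):
            return k
    return 0  # not even a single host failure can be absorbed


if __name__ == "__main__":
    print(max_failures_to_tolerate([64, 64], [16, 16]))      # 1 (two-host pool)
    print(max_failures_to_tolerate([64, 64, 64], [16] * 5))  # 1
    print(max_failures_to_tolerate([64, 64, 64], [16] * 4))  # 2
```

Even in this simplified model, a two-host pool can never tolerate more than one failure. This matches the example above.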

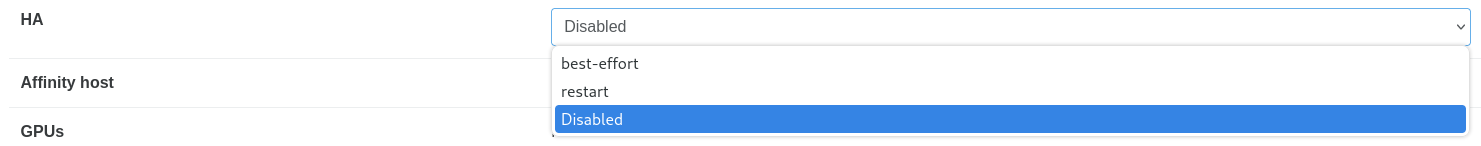

Configuring a VM for HA

Setting up a VM for HA is straightforward in Xen Orchestra. Navigate to the VM’s Advanced tab, use the HA selector and that's it!

Alternatively, you can use the CLI:

xe vm-param-set uuid=<VM_UUID> ha-restart-priority=restart

VM HA modes

In XCP-ng, you can choose between three HA modes: restart, best-effort, and disabled:

- Restart: if a protected VM cannot be immediately restarted after a server failure, HA will attempt to restart the VM when additional capacity becomes available in the pool. However, there is no guarantee that this attempt will be successful.

- Best-Effort: for VMs configured with best-effort, HA will try to restart them on another host if their original host goes offline. This attempt occurs only after all VMs set to the "restart" mode have been successfully restarted. HA will make only one attempt to restart a best-effort VM; if it fails, no further attempts will be made.

- Disabled: if an unprotected VM or its host is stopped, HA does not attempt to restart the VM.
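The three modes differ mostly in what happens when capacity runs out. This Python sketch models a single restart pass after a host failure, under several simplifying assumptions:

- memory is the only resource;
- spare capacity is treated as one shared amount of free memory, with no per-host placement;
- start order is ignored.

The function name and mode labels are illustrative. Following the description above, best-effort VMs are only attempted once every "restart" VM is running, and they get a single attempt.

```python
def restart_pass(vms: list[tuple[str, str, int]], free_memory: int) -> dict[str, list[str]]:
    """Simulate one HA restart pass.

    vms: (name, mode, memory) tuples, mode in {"restart", "best-effort", "disabled"}.
    """
    result = {"started": [], "retry_later": [], "abandoned": [], "waiting": []}
    # Phase 1: "restart" VMs are handled first.
    for name, mode, memory in vms:
        if mode != "restart":
            continue
        if memory <= free_memory:
            free_memory -= memory
            result["started"].append(name)
        else:
            result["retry_later"].append(name)  # retried when capacity frees up
    # Phase 2: best-effort VMs, only once all "restart" VMs are running.
    for name, mode, memory in vms:
        if mode != "best-effort":
            continue
        if result["retry_later"]:
            result["waiting"].append(name)  # not attempted yet
        elif memory <= free_memory:
            free_memory -= memory
            result["started"].append(name)
        else:
            result["abandoned"].append(name)  # single attempt, no retry
    # "disabled" VMs are never restarted, so they appear in no list.
    return result


if __name__ == "__main__":
    vms = [("db", "restart", 8), ("erp", "restart", 16),
           ("web", "best-effort", 4), ("lab", "disabled", 2)]
    # db starts, erp is retried later, web waits, lab is ignored
    print(restart_pass(vms, free_memory=14))
```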

Start order

The start order defines the sequence in which XCP-ng HA attempts to restart protected VMs following a failure. The order property of each protected VM determines this sequence.

While the order property can be set for any VM, HA only uses it for VMs marked as protected. The order value is an integer, with the default set to 0, indicating the highest priority. VMs with an order value of 0 are restarted first, and those with higher values are restarted later in the sequence.
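In other words, the restart sequence is the list of protected VMs sorted by ascending order value. The minimal Python sketch below assumes that "protected" means the restart priority is set to restart. It also assumes ties keep their original listing order, because Python's sort is stable; how XCP-ng itself breaks ties is not covered here. The `Vm` type is hypothetical.

```python
from typing import NamedTuple


class Vm(NamedTuple):
    name: str
    restart_priority: str  # "restart", "best-effort" or "disabled" (illustrative labels)
    order: int = 0         # default 0 = highest priority


def restart_sequence(vms: list[Vm]) -> list[str]:
    """Names of protected VMs, in the sequence HA would try to restart them."""
    protected = [vm for vm in vms if vm.restart_priority == "restart"]
    # Lower order values start first; sorted() is stable, so ties keep input order.
    return [vm.name for vm in sorted(protected, key=lambda vm: vm.order)]


if __name__ == "__main__":
    pool = [
        Vm("app", "restart", order=2),
        Vm("db", "restart"),                 # order defaults to 0
        Vm("cache", "restart", order=1),
        Vm("test", "best-effort", order=0),  # ignored: not protected
    ]
    print(restart_sequence(pool))  # ['db', 'cache', 'app']
```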

You can set the order property value of a VM via the command-line interface:

xe vm-param-set uuid=<VM_UUID> order=<number>

Configure HA timeout

The HA timeout represents the duration during which networking or storage might be inaccessible to the hosts in your pool. If any XCP-ng server cannot regain access to networking or storage within the specified timeout period, it may self-fence and restart. The default timeout is 60 seconds, but you can adjust this value using the following command to suit your needs:

xe pool-param-set uuid=<Pool_UUID> other-config:default_ha_timeout=<timeout_in_seconds>

🧪 Behavior and testing HA

It's important to understand how HA behaves and which actions it may take automatically when a host, network or storage failure occurs.

VM shutdown behavior

If you manually shut down a VM via Xen Orchestra, XCP-ng recognizes this action and stops the VM as intended. However, if the VM is shut down from within its guest OS (e.g., via console or SSH), XCP-ng considers it an anomaly and automatically restarts the VM. This ensures that a VM isn’t left unavailable due to an unintentional shutdown.

Host failure scenarios

Let's consider three different failure scenarios on a host named lab1 in a pool with two hosts (lab1 and lab2):

- Hard shutdown: physically power off the host. The VM will automatically start on the other host (lab2) once XAPI detects the failure.

- Storage disconnection: unplug the iSCSI storage link on lab1. The VM will lose access to its disks, and lab1 will recognize the storage failure. The fencing mechanism will reboot the host, and the VM will restart on lab2.

- Network disconnection: unplug the management network cable from lab1. Since lab1 can’t communicate with the pool, it will trigger the fencing process, and the VM will start on lab2.

🎯 Conclusion

XCP-ng provides a robust solution for High Availability, protecting your VMs under various failure conditions. However, HA is not a one-size-fits-all solution and may not be necessary for every environment. Consider your infrastructure’s stability and your true needs before enabling HA.