QCOW2 in XCP-ng: engineering a new storage path

Adding QCOW2 support as an alternative to the VHD format in XCP-ng was a major undertaking. In this article, we’ll walk you through the challenges we faced, what’s already changed under the hood, and what’s still on the roadmap.

There were three major parts of XCP-ng that needed to be adapted to handle the new format:

- blktap, the software handling VM disk requests

- the SMAPI, the storage stack of the hypervisor

- the XAPI, the brain of XCP-ng

blktap: building the backend of QCOW2

Most other hypervisors use QEMU's VirtIO implementation for their VMs' paravirtualized devices, which gives them easy access to the QCOW2 format. In XCP-ng, the VM datapath is very different from what has come to be expected from QEMU/KVM-based hypervisors.

We have previously written about the Grant Table in Xen and briefly discussed the storage backend: blktap (which we sometimes call tapdisk).

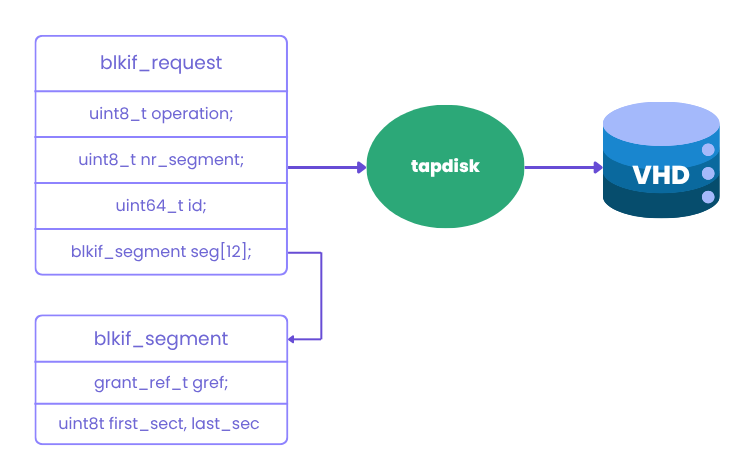

blktap is a backend driver that handles block I/O requests from virtual machines. It receives Xen's blkif requests from the driver frontend in the guest VM and converts them into operations on disk image formats such as VHD.

Here, the blkif_request comes from the VM's xen_blkfront driver, which uses the Grant Table to transfer data; tapdisk consumes those requests and turns them into VHD accesses.

As such, one of the first steps toward supporting QCOW2 was adding a QCOW2 backend to tapdisk.

This backend is represented in the code by a new library named libqcow2. Remember this name, because we will use it in the following sections. libqcow2 is a port of QEMU's qcow2 code that is dynamically linked into tapdisk. The library draws a clear boundary around the qcow2 code (as libvhd does for the VHD backend) and brings the robustness and many of the features of the QEMU code base.
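To make the idea of a pluggable format backend concrete, here is a minimal sketch in Python (the real tapdisk is written in C). It assumes a hypothetical, simplified Request type in place of blkif_request and a hypothetical ImageDriver interface standing in for what a format library such as libvhd or libqcow2 provides. The point it illustrates is that the request-handling code never needs to know which format sits behind the driver.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from enum import Enum, auto

SECTOR_SIZE = 512  # 512-byte sectors, the unit blkif requests are expressed in


class Op(Enum):
    # Named after blkif operations; the values are NOT the protocol's.
    READ = auto()
    WRITE = auto()


@dataclass
class Request:
    """Hypothetical, simplified stand-in for a blkif_request whose
    granted pages have already been mapped by the backend."""
    op: Op
    sector: int
    data: bytes = b""    # payload for writes
    nr_sectors: int = 0  # length for reads


class ImageDriver(ABC):
    """What a format library (libvhd, libqcow2, ...) offers the backend."""

    @abstractmethod
    def read(self, sector: int, nr_sectors: int) -> bytes: ...

    @abstractmethod
    def write(self, sector: int, data: bytes) -> None: ...


class MemoryDriver(ImageDriver):
    """Toy driver keeping a flat disk in memory, in place of a real format."""

    def __init__(self, nr_sectors: int) -> None:
        self.buf = bytearray(nr_sectors * SECTOR_SIZE)

    def _span(self, sector: int, length: int) -> slice:
        start = sector * SECTOR_SIZE
        if start < 0 or start + length > len(self.buf):
            raise ValueError("request beyond end of disk")
        return slice(start, start + length)

    def read(self, sector, nr_sectors):
        return bytes(self.buf[self._span(sector, nr_sectors * SECTOR_SIZE)])

    def write(self, sector, data):
        self.buf[self._span(sector, len(data))] = data


def handle(driver: ImageDriver, req: Request) -> bytes:
    """Serve one request without knowing which image format is behind driver."""
    if req.op is Op.READ:
        return driver.read(req.sector, req.nr_sectors)
    driver.write(req.sector, req.data)
    return b""
```

Swapping MemoryDriver for a QCOW2-backed driver would leave handle() untouched, which is the kind of separation a dedicated format library provides.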

Work on the QCOW2 backend was also an opportunity to add new features to tapdisk. These features are not released yet but are planned for upcoming releases:

- Discard support: this feature allows the backend to send discard/trim commands to the block device. This allows a qcow2 file on a FileSR to shrink when the guest deletes data and issues the corresponding command (only supported by the QCOW2 backend).

- Persistent grants support: this is a feature of the blkif protocol that is often supported by the blkif frontend in the guest but not on the tapdisk side. It allows the backend to keep grants mapped, saving expensive map/unmap operations and improving performance.
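As an illustration of why persistent grants help, here is a toy Python model. It assumes that with persistent grants the frontend keeps reusing the same granted pages, so the backend can map each grant once and cache the mapping. The GrantMapper class is hypothetical and only counts map and unmap calls instead of asking the hypervisor; a real backend would also bound the size of its cache.

```python
class GrantMapper:
    """Toy model of a backend's grant handling, counting the expensive
    hypervisor map/unmap operations instead of performing them."""

    PAGE_SIZE = 4096

    def __init__(self, persistent: bool) -> None:
        self.persistent = persistent
        self.cache: dict[int, bytearray] = {}  # grant ref -> mapped page
        self.maps = 0
        self.unmaps = 0

    def _map(self, gref: int) -> bytearray:
        self.maps += 1  # stands in for a grant map hypercall
        return bytearray(self.PAGE_SIZE)

    def _unmap(self, gref: int) -> None:
        self.unmaps += 1  # stands in for a grant unmap hypercall

    def handle_request(self, grefs: list[int]) -> list[bytearray]:
        pages = []
        for gref in grefs:
            if self.persistent and gref in self.cache:
                pages.append(self.cache[gref])  # already mapped: no hypercall
                continue
            page = self._map(gref)
            if self.persistent:
                self.cache[gref] = page  # keep it mapped for later requests
            pages.append(page)
        # ... the actual I/O would read or write `pages` here ...
        if not self.persistent:
            for gref in grefs:
                self._unmap(gref)  # classic path: unmap after every request
        return pages
```

With three two-page requests using grants [1, 2], [1, 2] and [2, 3], the non-persistent path performs 6 maps and 6 unmaps, while the persistent path maps each grant once (3 maps, no unmaps).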

SMAPI: rethinking image format handling

The other component that needed big changes was the SMAPI, the storage manager stack. The SMAPI is the stack handling VDIs (Virtual Disk Images) and SRs (Storage Repositories) in XCP-ng.

Adding QCOW2 meant handling multiple image types in the SMAPI, which previously handled only VHD (and RAW in some cases). In practice, this meant decoupling the VDI logic from VHD specifics.

We created an abstraction layer called cowutil that implements what the SM expects from a copy-on-write image format. This abstraction also makes it easier to add other image formats to the SMAPI, provided of course that they are also supported in blktap.
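A minimal sketch of the idea behind such an abstraction, in Python like the SMAPI itself. The class and method names are hypothetical and are not cowutil's actual API; the sketch only shows SM code asking a per-format object for the right command instead of hard-coding VHD. The vhd-util and qemu-img arguments are given for illustration and should be checked against each tool's help output before relying on them.

```python
from abc import ABC, abstractmethod


class CowUtil(ABC):
    """Operations the SM needs from any copy-on-write image format
    (hypothetical interface for illustration)."""

    @abstractmethod
    def snapshot_cmd(self, parent: str, child: str) -> list[str]:
        """Command creating `child` as a copy-on-write child of `parent`."""

    @abstractmethod
    def coalesce_cmd(self, path: str) -> list[str]:
        """Command merging `path` into its parent (offline use only)."""


class VhdCowUtil(CowUtil):
    def snapshot_cmd(self, parent, child):
        return ["vhd-util", "snapshot", "-n", child, "-p", parent]

    def coalesce_cmd(self, path):
        return ["vhd-util", "coalesce", "-n", path]


class Qcow2CowUtil(CowUtil):
    def snapshot_cmd(self, parent, child):
        # New qcow2 image backed by `parent`; its size is taken from the backing file.
        return ["qemu-img", "create", "-f", "qcow2", "-b", parent, "-F", "qcow2", child]

    def coalesce_cmd(self, path):
        # Commits `path` into its backing file.
        return ["qemu-img", "commit", "-f", "qcow2", path]


_REGISTRY: dict[str, CowUtil] = {"vhd": VhdCowUtil(), "qcow2": Qcow2CowUtil()}


def get_cow_util(image_format: str) -> CowUtil:
    """Return the helper for a VDI's image format; callers never branch on format."""
    try:
        return _REGISTRY[image_format]
    except KeyError:
        raise ValueError(f"unsupported image format: {image_format}") from None
```

In this design, supporting another format means one new subclass and one registry entry, while the code that calls get_cow_util() stays the same.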

The main difficulty was changing the storage stack's expectations of VHD behavior, and adapting the QCOW2 side to what the storage stack expects.

Some things were similar, some were easier with QCOW2 and, of course, some were more complicated.

The hardest part was coalescing disk images, where VHD and QCOW2 behave very differently.

Coalesce: reengineering the snapshot merges

As mentioned above, the main difference between the QCOW2 and VHD formats is how coalescing works.

In the SMAPI, we routinely coalesce VHDs up the chain from an external process while the leaf VDI is in active use, without issues. With QCOW2, however, running a qemu-img commit on an active chain will corrupt the chain.

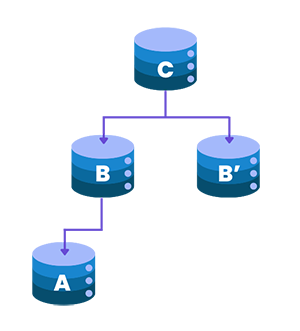

In the example above, we delete snapshot B', so B needs to be merged into C. While VHD supports coalescing a chain even when the base disk is in use, QCOW2 requires a different approach.

With a QCOW2 image, the coalesce needs to be done by the same process that has the chain open, in our case tapdisk. This meant tapdisk had to be able to run the QCOW2 commit code from libqcow2 and expose an interface we could call to follow the coalesce process.

We also needed to be able to tell whether to call qemu-img or a tapdisk command without risking corruption of our images. We call it an offline coalesce when qemu-img is used directly because the VDI is not in use, and an online coalesce when the VDI is in use by tapdisk.

In the SMAPI, when a VDI is active on a host, a key in the leaf VDI's sm-config records that host's OpaqueRef, a host identifier.

OpaqueRefs are a kind of UUID used inside the XAPI. The XAPI has multiple kinds of identifiers, and most of the ones you see as a user are simply called UUIDs. But internally, when you use the API, you refer to objects through another identifier called the OpaqueRef. It looks like a UUID but with OpaqueRef: as a prefix.

For example, the VDI with UUID 8a7b2ce2-5360-4d5a-ac2e-5768e6ad62c3 has the reference OpaqueRef:2a2c29a8-bc07-b93e-acd0-c9a0f9f22376. We use the UUID when the XAPI asks for a UUID and the OpaqueRef when it asks for a VDI ref.
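To illustrate the difference in shape, here is a small Python helper (hypothetical, not part of the XAPI) that tells the two kinds of identifier apart. It assumes lowercase hexadecimal in the 8-4-4-4-12 layout of the example above, and treats OpaqueRef:NULL, the XAPI's null reference, as its own case.

```python
import re

# 8-4-4-4-12 lowercase hex groups, as in the example identifiers.
_UUID = r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"
UUID_RE = re.compile(_UUID)
OPAQUE_REF_RE = re.compile(r"OpaqueRef:" + _UUID)


def identifier_kind(value: str) -> str:
    """Classify a XAPI identifier string by its shape only (no API call)."""
    if value == "OpaqueRef:NULL":
        return "null"  # the XAPI's "no object" reference
    if OPAQUE_REF_RE.fullmatch(value):
        return "OpaqueRef"
    if UUID_RE.fullmatch(value):
        return "UUID"
    return "unknown"
```

Using fullmatch rather than match ensures that a UUID with trailing text, or a UUID embedded in a longer string, is not accepted.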

This host identifier tells us where the VDI is active, so the Garbage Collector (GC) can ask that host to run a tapdisk coalesce for the VDI.
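Putting this together, the choice between an offline and an online coalesce could be sketched as follows. For illustration only, it assumes the sm-config key is named host_ followed by the host's OpaqueRef; active_host, CoalescePlan and plan_coalesce are hypothetical names, not SMAPI functions.

```python
from dataclasses import dataclass
from typing import Optional

HOST_KEY_PREFIX = "host_"  # assumed key naming: "host_<host OpaqueRef>"


def active_host(sm_config: dict[str, str]) -> Optional[str]:
    """Return the OpaqueRef of the host where the leaf VDI is active, if any."""
    for key in sm_config:
        if key.startswith(HOST_KEY_PREFIX + "OpaqueRef:"):
            return key[len(HOST_KEY_PREFIX):]
    return None


@dataclass
class CoalescePlan:
    mode: str                   # "offline" (qemu-img) or "online" (tapdisk)
    host: Optional[str] = None  # host that must run an online coalesce


def plan_coalesce(leaf_sm_config: dict[str, str]) -> CoalescePlan:
    host = active_host(leaf_sm_config)
    if host is None:
        # No tapdisk has the chain open: qemu-img can safely run directly.
        return CoalescePlan("offline")
    # tapdisk on `host` owns the chain, so it must perform the commit itself.
    return CoalescePlan("online", host)
```

The GC would then either run the offline command itself or ask the returned host to start the coalesce in its tapdisk.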

However, this means we need to be able to stop an ongoing GC when we want to use the VDI. To address this, we modified VDI activation to check whether a coalesce is ongoing anywhere on its chain and, if so, to notify the SR master (the pool master for a shared SR) that the coalesce should be interrupted. VDI activation previously knew nothing about the VDI chain: it only cared about the leaf VDI it handed to tapdisk, and tapdisk was the one that followed the chain. So this part of the code needed new logic.

An offline coalesce is therefore interrupted by the VDI activation code, while an online coalesce is interrupted when tapdisk closes. In both cases, the GC temporarily fails that coalesce and tries again later. If everything works as expected, an interrupted offline coalesce is followed by a GC run that sees the VDI in use and runs an online coalesce on the correct host.
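A single-process toy model of this interrupt-and-retry flow, under stated assumptions: ChainState and the functions below are hypothetical, a threading.Event stands in for the notification sent to the SR master, and only the offline case is modeled (an online coalesce being interrupted by tapdisk closing is not). A test hook lets us simulate a VM starting midway through the merge.

```python
import threading
from typing import Callable, Optional


class CoalesceInterrupted(Exception):
    """Raised when a coalesce is asked to stop; the GC retries later."""


class ChainState:
    def __init__(self) -> None:
        self.active_host: Optional[str] = None  # set when the leaf is activated
        self.coalescing = False                 # an offline coalesce is running
        self.interrupt = threading.Event()      # stands in for the SR master signal


def activate(chain: ChainState, host: str) -> None:
    # New logic: activation looks at the whole chain, not only the leaf.
    if chain.coalescing:
        chain.interrupt.set()
    chain.active_host = host


def offline_coalesce(chain: ChainState, chunks: int,
                     on_chunk: Optional[Callable[[int], None]] = None) -> None:
    chain.coalescing = True
    chain.interrupt.clear()
    try:
        for i in range(chunks):
            if on_chunk:
                on_chunk(i)  # test hook: lets a "VM start" happen mid-merge
            if chain.interrupt.is_set():
                raise CoalesceInterrupted
            # ... merge chunk i with qemu-img here ...
    finally:
        chain.coalescing = False


def gc_attempt(chain: ChainState, chunks: int = 4,
               on_chunk: Optional[Callable[[int], None]] = None) -> str:
    if chain.active_host is not None:
        return f"online coalesce on {chain.active_host}"
    try:
        offline_coalesce(chain, chunks, on_chunk)
        return "offline coalesce done"
    except CoalesceInterrupted:
        return "interrupted, will retry"
```

For example, with an on_chunk hook that calls activate(chain, "host-A") at chunk 2, the first gc_attempt returns "interrupted, will retry" and the next one returns "online coalesce on host-A", mirroring the sequence described above.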

As we saw, the new QCOW2 coalesce mechanism involves several steps that VHD does not need. They ensure the safety of QCOW2 images while keeping the usual behavior for VHD images that may live on the same SR.

XAPI: adapting the higher layers

The XAPI is the stack that handles almost everything in XCP-ng; it is what Xen Orchestra interacts with.

Although we did a lot with just the SMAPI, we couldn't do everything there. (There were no XAPI changes in the first two alpha releases of QCOW2 support.)

The XAPI interacts with VHD in a few places, mainly for migration but also to expose the difference between two related VDIs. Adapting this for QCOW2 is needed for Xen Orchestra to be able to do delta backups.

There is also a change in RRDD to retrieve disk usage data for QCOW2 VDIs.

What's next

QCOW2 VDI support is almost feature-complete; the next steps are improving performance and stability.

Performance improvements cover two areas:

- blktap performance

- SMAPI performance

As discussed in the blktap section, we have a few things planned to improve storage performance, as well as features like discard that reduce space usage on file-based SRs.

On the SMAPI side, we have already made some changes. A big upcoming improvement moves some logic from the Python part of the stack into a new C binary, making some VDI operations up to 60 times faster. Here is the improvement when obtaining data for one large QCOW2 image:

Python: 0m32.475s

C: 0m0.718s

That is roughly 45 times faster in this case, and it will make using any QCOW2 VDI a lot snappier.

To improve stability, we are investing a lot of time in a comprehensive test suite that will help keep things stable in the future.