Thanks @Bastien-Nollet and @olivierlambert.

I'm going to do some tests and write the results. Thank you so much.

Best posts made by cbaguzman

-

RE: There are any commands that allow me to verify the integrity of the backup files?

-

There are any commands that allow me to verify the integrity of the backup files?

Hi, it's great to be back!

Until now, I've been performing backups on NFS media without the "Encrypt all new data sent to this remote" option and without the "Store backup as multiple data blocks instead of a whole VHD file." option. This allowed me to perform integrity checks on the backup files using a script that uses `vhd-cli check` and `xva-validate`.

Now I need to change the backup methods by enabling "Encrypt all new data sent to this remote" and "Store backup as multiple data blocks instead of a whole VHD file.".

My question is whether there are any commands that allow me to verify the integrity of the backup files from the command line in this new scenario. I have VMs that are several GB in size, so using Auto Restore Check is not an option for me.

-

RE: Watchdog for reboot VM when it's broken(no respond).

Hello, I finally installed watchdog in my VM, which runs Ubuntu Linux.

I used the xen_wdt module with watchdog.

This is the watchdog configuration:

`/etc/default/watchdog`

```
# Start watchdog at boot time? 0 or 1
run_watchdog=1
# Start wd_keepalive after stopping watchdog? 0 or 1
run_wd_keepalive=1
# Load module before starting watchdog
watchdog_module="xen_wdt"
# Specify additional watchdog options here (see manpage).
```

`/etc/watchdog.conf`

```
#ping = 8.8.8.8
ping = 192.16.171.254
interface = eth0
file = /var/log/syslog
change = 1407

# Uncomment to enable test. Setting one of these values to '0' disables it.
# These values will hopefully never reboot your machine during normal use
# (if your machine is really hung, the loadavg will go much higher than 25)
max-load-1 = 24
#max-load-5 = 18
#max-load-15 = 12

# Note that this is the number of pages!
# To get the real size, check how large the pagesize is on your machine.
#min-memory = 1
#allocatable-memory = 1

#repair-binary = /usr/sbin/repair
#repair-timeout = 60
#test-binary =
#test-timeout = 60

# The retry-timeout and repair limit are used to handle errors in a more robust
# manner. Errors must persist for longer than retry-timeout to action a repair
# or reboot, and if repair-maximum attempts are made without the test passing a
# reboot is initiated anyway.
#retry-timeout = 60
#repair-maximum = 1

watchdog-device = /dev/watchdog

# Defaults compiled into the binary
#temperature-sensor =
#max-temperature = 90

# Defaults compiled into the binary
admin = root
interval = 20
logtick = 1
log-dir = /var/log/watchdog

# This greatly decreases the chance that watchdog won't be scheduled before
# your machine is really loaded
realtime = yes
priority = 1

# Check if rsyslogd is still running by enabling the following line
#pidfile = /var/run/rsyslogd.pid
```

I broke my OS and watchdog reset my VM.

I hope this helps.

Latest posts made by cbaguzman

-

RE: There are any commands that allow me to verify the integrity of the backup files?

@florent, I tried the option of running `vhd-cli raw alias.vhd /dev/null`.

I was reading that vhd-cli raw copies the entire VM disk from the backup to /dev/null, reading the incremental backup file and the parent backup.

But something seems off here.

The VM disk in the backup has 133GB of block files within /data. And when I ran the tool, it took 7 seconds. The backup is on a USB hard drive (HDD), so I suspect the read speeds aren't higher than 30-60MB/s.

Just reading all the blocks should take roughly 40 minutes or more.
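As a rough check of that estimate, the sketch below computes the time for one full sequential read of 133 GB at the two assumed speeds (60 and 30 MB/s). `read_minutes` is a hypothetical helper name, decimal units (1 GB = 1000 MB) are assumed, and the shell's integer arithmetic truncates the result.

```shell
#!/bin/sh
# Hypothetical helper: minutes needed to read SIZE_GB at SPEED_MB_PER_S.
# Assumes decimal units (1 GB = 1000 MB); integer division truncates.
read_minutes() {
    size_gb=$1
    speed_mbs=$2
    echo $(( size_gb * 1000 / speed_mbs / 60 ))
}

read_minutes 133 60   # fastest assumed speed, prints 36
read_minutes 133 30   # slowest assumed speed, prints 73
```

Either bound is far above 7 seconds, which supports the suspicion that not every block was read.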

Did I misunderstand how vhd-cli raw works?

Thank you for your attention.

-

RE: There are any commands that allow me to verify the integrity of the backup files?

Thank you @florent and @olivierlambert

One of the initial alternatives I considered was verifying the integrity of each incremental backup. The idea was that the first backup file would verify all the blocks that make up the backed-up VDI, and subsequent delta backups would only verify the new blocks belonging to the delta. (But I couldn't find a way to do this.)

For now, I think I can only verify the integrity of an .alias.vhd file from a delta backup, which will also include the blocks from the parent backup, by mounting alias.vhd with xo-fuse-vhd.

Now I'm going to test the suggestion that @florent sent me.

Any suggestions are welcome.

Thank you very much.

-

RE: There are any commands that allow me to verify the integrity of the backup files?

@Bastien-Nollet

I used `vhd-cli info` without the `--chain` option and it worked correctly. Thank you.

But `vhd-cli info` isn't the solution for me.

From what I've researched so far, it seems that to cross-check the backups, I'll have to use `xo-fuse-vhd` to mount the backup `alias.vhd` and then run `dd if=/mnt/vhd0 of=/dev/null bs=4M status=progress` to verify the consistency of all the blocks in the backup.

The problem with this mechanism is that each time the verification is run, it checks the entire VDI and not just the incremental blocks of the `alias.vhd` file, making it very resource-intensive for daily use.

Any suggestions would be welcome.
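One possible way to limit the daily work, sketched below under several assumptions: if a checksum manifest from an earlier run exists (in `sha256sum` output format, with paths relative to the backup directory), only files that are not yet listed in it would need to be read and hashed. `list_unrecorded` is a hypothetical helper, not part of `vhd-cli` or `xo-fuse-vhd`, and it assumes file names without newlines or backslashes. It only looks at files on disk and does not interpret the VHD structure.

```shell
#!/bin/sh
# Hypothetical helper: print files under DIR that are not listed in MANIFEST.
# MANIFEST is assumed to be sha256sum output with paths relative to DIR.
list_unrecorded() {
    dir=$1
    manifest=$2
    recorded=$(mktemp)
    current=$(mktemp)
    # Strip the hash column, keeping only the recorded paths.
    sed 's/^[0-9a-f]* [ *]//' "$manifest" | LC_ALL=C sort > "$recorded"
    # List the files that exist right now.
    ( cd "$dir" && find . -type f ) | LC_ALL=C sort > "$current"
    # Lines only in the second list are files added since the manifest.
    LC_ALL=C comm -13 "$recorded" "$current"
    rm -f "$recorded" "$current"
}

# Example (paths are placeholders):
#   list_unrecorded /path/to/vdi-backup-dir /path/to/manifest.sha256
```

The files it prints are the only ones that would need hashing on that day; whether this matches the blocks of the newest delta depends on how the backup job lays out and merges its files, which is not verified here.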

-

RE: There are any commands that allow me to verify the integrity of the backup files?

Hello @olivierlambert, @bastien-nollet, hello everyone!

I used vhd-cli with its new arguments and it worked OK.

```
vhd-cli check --chain 'file:///run/xo-server/mounts/11934fec-f3a1-4f7f-a78d-00eeb1b39654?useVhdDirectory=true&encryptionKey=%22O1xt1ZTRE%5Qq%3X%3D1NZ%26%3ZZQo8%2DD%29s5xt%3OOko%22' 'xo-vm-backups/abc3e130-923e-619e-4fdc-59bcda088586/vdis/cecc4489-722a-4f79-b5e6-c050f63f2761/d489173c-028a-46f0-b712-f4a7c5594c8f/20260108T160552Z.alias.vhd'
ok: xo-vm-backups/abc3e130-923e-619e-4fdc-59bcda088586/vdis/cecc4489-722a-4f79-b5e6-c050f63f2761/d489173c-028a-46f0-b712-f4a7c5594c8f/20260108T160552Z.alias.vhd
```

But I discovered that using vhd-cli in this way only checks the physical structure of the alias.vhd file. It doesn't check the integrity of the blocks or the total number of blocks to detect a missing block. (It doesn't work like it does with huge monolithic .vhd files, where it detects errors with the slightest modification.)

So I opted to split the solution:

1. I check the integrity of the filesystem where the files are stored (using btrfs scrub).

2. I verify that the number of blocks written in the backup is correct (that no blocks are missing).

3. I verify that the written blocks haven't been modified since they were written.
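Points 2 and 3 could be approximated at the file level with a checksum manifest, as in the minimal sketch below. This is an assumption-laden illustration, not an XO feature: `make_manifest`, `count_blocks`, and `verify_manifest` are hypothetical names, the manifest must be stored outside the directory it describes, and GNU `find`, `sort`, `xargs`, and `sha256sum` are assumed. It hashes the files as stored on disk (encrypted or not), so it detects missing or modified files but says nothing about whether the backup can be decrypted or restored.

```shell
#!/bin/sh
# Hypothetical helpers for a file-level manifest of a backup directory.

# Record a SHA-256 checksum for every file under DIR into MANIFEST.
make_manifest() {
    dir=$1
    manifest=$2
    ( cd "$dir" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum ) > "$manifest"
}

# Number of files recorded in MANIFEST (one line per file).
count_blocks() {
    wc -l < "$1" | tr -d ' '
}

# Re-hash every recorded file; fails if one is missing or has changed.
verify_manifest() {
    dir=$1
    manifest=$2
    ( cd "$dir" && sha256sum --quiet -c - ) < "$manifest"
}

# Example (paths are placeholders):
#   make_manifest /path/to/vdi-backup-dir /var/lib/backup-check/vdi.sha256
#   count_blocks /var/lib/backup-check/vdi.sha256
#   verify_manifest /path/to/vdi-backup-dir /var/lib/backup-check/vdi.sha256
```

If the backup job later merges or removes files in that directory, the manifest would need to be regenerated, otherwise the check will report those legitimate changes as failures.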

For point 2, I found (via AI) that I could use `vhd-cli info` to find out the total number of blocks in the backup (I don't have much information about it), but when I run it, I get the following error. I suspect it doesn't work with "Encrypt all new data sent to this remote":

```
vhd-cli info --chain 'file:///run/xo-server/mounts/11934fec-f3a1-4f7f-a78d-00eeb1b39654?useVhdDirectory=true&encryptionKey=%22O1xt1ZTRE%5Qq%3X%3D1NZ%26%3ZZQo8%2DD%29s5xt%3OOko%22' 'xo-vm-backups/abc3e130-923e-619e-4fdc-59bcda088586/vdis/cecc4489-722a-4f79-b5e 6-c050f63f2761/d489173c-028a-46f0-b712-f4a7c5594c8f/20260108T160552Z.alias.vhd'
✖ Unhandled remote type
Error: Unhandled remote type
    at getHandler (/usr/lib/node_modules/vhd-cli/node_modules/@xen-orchestra/fs/dist/index.js:48:11)
    at getSyncedHandler (/usr/lib/node_modules/vhd-cli/node_modules/@xen-orchestra/fs/dist/index.js:54:19)
    at Object.info (/usr/lib/node_modules/vhd-cli/commands/info.js:98:24)
    at Object.runCommand (/usr/lib/node_modules/vhd-cli/index.js:32:13)
    at /usr/lib/node_modules/vhd-cli/node_modules/exec-promise/index.js:57:13
    at new Promise (<anonymous>)
    at execPromise (/usr/lib/node_modules/vhd-cli/node_modules/exec-promise/index.js:56:10)
    at Object.<anonymous> (/usr/lib/node_modules/vhd-cli/index.js:41:1)
    at Module._compile (node:internal/modules/cjs/loader:1706:14)
    at Object..js (node:internal/modules/cjs/loader:1839:10)
```

Could you please help me with this? I'm not sure if I'm on the right track. Perhaps there are other ways I'm missing.

-

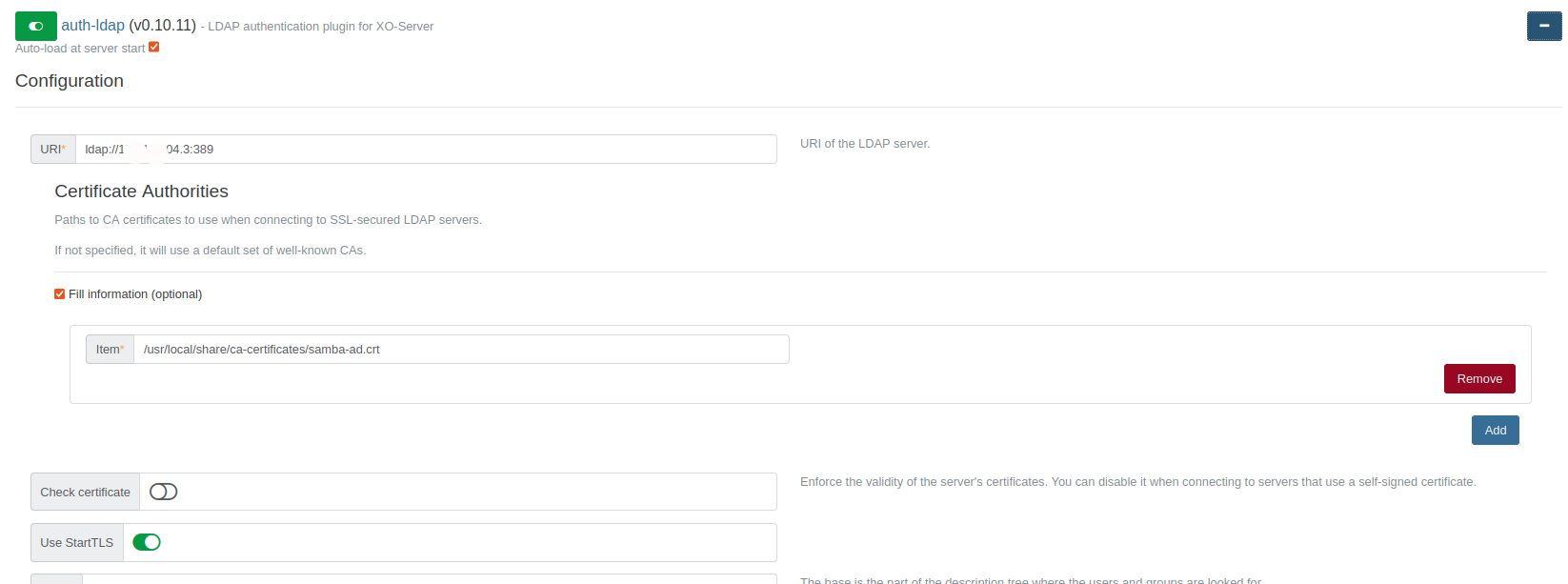

In ldap-auth plugin "Check Certificate" option only works with SSL certificates?

Hello, happy Wednesday!

I'm implementing the ldap-auth plugin. I've configured it and it works correctly with the "Use StartTLS" option; I can test it and it works fine.

However, to improve security, I enabled "Check Certificate," and now it no longer connects to LDAP. I specified the path to the CA certificate, but it still doesn't work.

I have this same configuration implemented in pfSense and it works fine.

Could you help me with this?

My question is whether the "Check Certificate" option only works with SSL or whether it also works with StartTLS.

-

Watchdog on XCP Host

Hello, I read in xen-command-line about watchdog on host.

I want to configure `watchdog` and `watchdog_timeout`, but I don't know how.

Has anyone used it? Where do I set these parameters? (I suspect maybe in GRUB.)

Thanks, everyone.

-

XCP on intel i9 12th or 13th generation

Hello everyone.

I'm thinking of buying a new CPU to use with XCP-ng, but I have doubts about how XCP-ng works with hybrid CPUs (P-cores and E-cores).

For example, does XCP-ng use all cores or only the P-cores? How does XCP-ng handle VMs with P-cores and E-cores?

Does anyone know about this?

Thanks, everyone.