@stormi

I'm sorry, I wasn't clear. What I meant was XCP-ng Center: has that code been updated, or is it just a question of compiling it?

Best posts made by technot

-

RE: XCP-ng 8.2.0 beta now available!

Latest posts made by technot

-

RE: machine type

This is great news.

Looking forward to testing this functionality on an XCP-ng server in the future :)

-

RE: best performing filesystem

@olivierlambert

Yeah, I did not mean to post this as a negative thing.

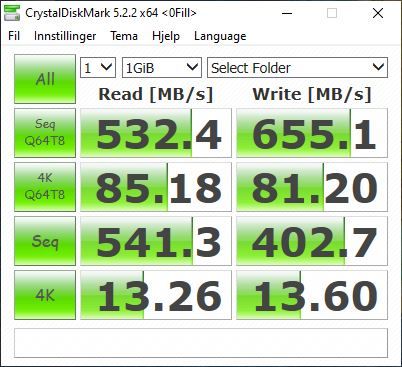

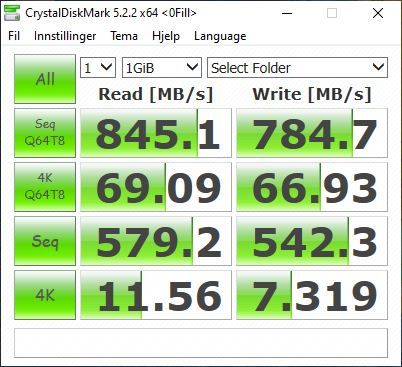

Currently Xen VMs are limited by the single-threaded tapdisk, and will lose this fight if you compare servers with only a couple of VMs. But with a multitude of VMs, the picture evens out, and probably even ends up in Xen's favour.

That last bench was just added for "show". My main point was that I can already see a difference in Xen's performance. I used to run passthrough, but as you can see from my benchmark of XCP-ng 8.2, having Xen control the RAID gave my VM better performance than my old benchmark on 7.6, where I was passing the controller to a dedicated NFS VM serving as SR for all other VMs.

That picture was the reverse a couple of years ago.
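For anyone who wants to see how many tapdisk processes dom0 is actually running while VMs are up, here is a minimal Python sketch. It assumes the processes show up under the exact name `tapdisk` in `/proc/<pid>/comm`; the function name `list_tapdisk_pids` is just made up for this example.

```python
import os


def list_tapdisk_pids(proc_root="/proc"):
    """Return the sorted PIDs of processes whose command name is 'tapdisk'.

    Assumption: each tapdisk instance appears under that exact name in
    <proc_root>/<pid>/comm.
    """
    pids = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as 'meminfo'
        comm_path = os.path.join(proc_root, entry, "comm")
        try:
            with open(comm_path) as handle:
                name = handle.read().strip()
        except (IOError, OSError):
            continue  # process exited between listing and reading
        if name == "tapdisk":
            pids.append(int(entry))
    return sorted(pids)


if __name__ == "__main__":
    found = list_tapdisk_pids()
    print("tapdisk processes: %d %s" % (len(found), found))
```

Comparing the count before and after attaching an extra virtual disk to a VM should show whether each disk gets its own process on a given host.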

-

RE: machine type

@olivierlambert

No rush, I am not even expecting it to ever happen.

Xen works well as-is for server usage. And you know, maybe that's great too.

Kvm/Qemu for desktop, and Xen for servers.

Better that devs focus on keeping Xen secure and updated than spread themselves thin adding features most of Xen's userbase don't even need and devs can't keep updated.

On the other hand, GPGPU workloads are quickly gaining ground, so I actually believe this feature could be very important for Xen in the future.

Consumer-grade GPUs are super cheap, and having good support within Xen could potentially make it a lot easier for startups and small companies to use GPUs on a larger scale.

I am afraid KVM will "win" users if Xen keeps lagging behind it on features.

I guess we have already seen it happen (Amazon AWS comes to mind...).

I will still be choosing Xen wherever applicable.

Keep up the great and important work you all do, XCP-ng has my love.

-

RE: machine type

I took some time to test Q35 on qemu+kvm on ubuntu.

Q35 is amazing. Not only can I now use the newest AMD driver (20.9.2), as opposed to 18.4.1, which was the highest driver I could make work with Xen/i440, but Q35 also seems to handle FLR perfectly. Using i440 I would often have to shut down dom0 to make the GPU reset and be able to use it again in a VM after a VM reboot. With Q35 I have not had a single reset issue, and I have tried hard. I have rebooted the VM repeatedly, both soft and hard, and not once has it locked up on reset.

I also did a test with i440 on KVM to see if my issues with the driver and the reset bug were related to KVM vs Xen or to the emulated chipset, i440 vs Q35. The issues are the same with i440 on KVM: it would not accept a driver above 18.4.1, and a hard reset of the VM would cause the GPU reset issue, only fixable by power cycling the host (a reboot is not enough, a complete shutdown is needed).

I therefore conclude the chipset is the key factor.

I do have to wonder why Xen is having a hard time making Q35 work though, considering Xen basically is/was using a modified QEMU. I am thinking making Q35 work for Xen has not been a priority. But it really should be!

This works so well, I've decided to build a new desktop to use this.

-

RE: best performing filesystem

I ran a test with 8.2 and apparently things changed. I am now getting better performance with dom0 handling the raid controller.

Instead of destroying the RAID and going ZFS, I kept the setup the same and did one test before and one after I switched control of the LSI MegaRAID from the guest to dom0.

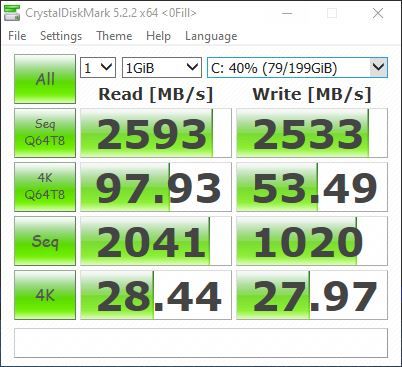

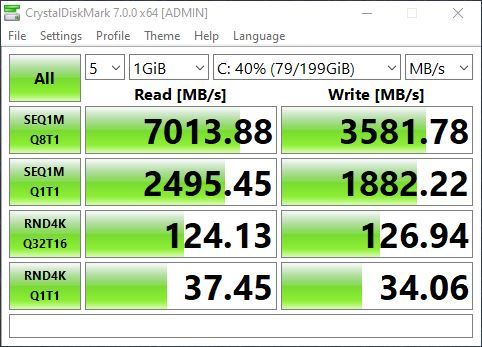

However, after doing the bench, I destroyed the RAID and made it RAID 0, and installed Ubuntu 20.10 and KVM/QEMU, mostly because I wanted to try out the emulated Q35 chipset in a hvmpv setting, as discussed in a previous thread. I will post some details in that thread about my experience with emulated Q35.

But to finish this post, here is a bench with RAID 0, but on Ubuntu using QEMU+KVM. All drivers are VirtIO.

Yeah, I know, the more VMs running, the more Xen vs KVM would balance out.

But if you're only running one or two IO-intensive VMs, and the rest are idling a lot, it sure means better performance with KVM for those users.

-

RE: best performing filesystem

I don't remember the exact numbers, it was quite some time ago, when XCP-ng 7.6 was fresh. But I believe it was in the ballpark of a 200-300 MB/s difference for both read and write, measured with CrystalDiskMark. I will run the benchmark again before and after I do the mentioned reinstall, for comparison.

I was planning to do it this weekend, but haven't received the new SSD yet.

After the benchmark, I might reconfigure to RAID 0 with a daily backup to cloud.

Another question though. A typical bottleneck can often be tapdisk being limited to using one core/thread. But what if I give a VM in need of maximum throughput 2 VHDs and then have Windows software RAID 0 stripe those drives?

Would dom0 handle each VHD with its own tapdisk process, or would it still be one process handling both VHDs connected to the VM?

-

best performing filesystem

What would be the recommended way to get decent IOPS and high throughput?

I have an LSI MegaRAID with 8 SATA drives connected currently: 3 TB drives, set to RAID 10.

It is formatted as ext4, and XCP-ng uses it to store .vhd files.

Originally I let dom0 handle it, but performance was a bit on the low end. So I ended up passing the controller to an Ubuntu guest, which exports it to dom0 as NFS, and to other VMs as Samba for files that are shared/available on multiple VMs.

XCP-ng is running from a small 120 GB SSD, containing the Ubuntu VM only.

During the weekend I plan on reinstalling XCP-ng in UEFI mode on a new 1 TB SSD, and everything from the RAID is temporarily backed up to cloud. So this will be a good time to make any changes.

I read good things about ZFS, but from what I can tell, it's kind of useless with so few disks. Also, it prefers not to run on top of hardware RAID(?).

So going the ZFS path would probably mean destroying the RAID and letting ZFS handle the disks directly, giving dom0 12-16 GB RAM, and maybe(?) the small 120 GB SSD for L2ARC.

Any advice?
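For reference, here is a rough back-of-the-envelope sketch in Python of the nominal usable capacity for the 8 x 3 TB drives under a few layouts. The helper `usable_tb` and the choice of layouts (RAID 10 or striped mirrors, RAID 0, and a single 8-disk raidz2 group) are only illustrative assumptions, and the numbers ignore filesystem overhead.

```python
def usable_tb(num_disks, disk_tb, layout):
    """Nominal usable capacity in TB, ignoring filesystem overhead."""
    if layout == "raid0":
        return num_disks * disk_tb           # plain striping, no redundancy
    if layout == "raid10":
        return (num_disks // 2) * disk_tb    # mirrored pairs (also ZFS striped mirrors)
    if layout == "raidz2":
        return (num_disks - 2) * disk_tb     # one group with two disks' worth of parity
    raise ValueError("unknown layout: %s" % layout)


if __name__ == "__main__":
    for layout in ("raid10", "raid0", "raidz2"):
        print("%s: %d TB" % (layout, usable_tb(8, 3, layout)))
```

This says nothing about IOPS or throughput, which is the real question here; it only shows what each option costs in space.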

-

choice of vbios for pci passthrough

Hi.

I just saw that with KVM, when booting a UEFI guest with GPU passthrough, it's possible to choose a vBIOS ROM to be loaded.

Is this something that could become a possibility for XCP-ng/Xen further down the road, after Windows UEFI guests are working?

-

RE: XCP-ng 8.2.0 beta now available!

@stormi

I'm sorry, I wasn't clear. What I meant was XCP-ng Center: has that code been updated, or is it just a question of compiling it?

-

RE: XCP-ng 8.2.0 beta now available!

I don't know if this is expected behaviour after upgrading all the way from 7.6 to 8.2 beta, but on a Windows guest where I have an AMD GPU in full PCI passthrough, the device didn't show up after boot. Instead, there was another device showing a fault.

The Intel 82371SB PCI to USB Universal Host Controller was throwing an error 43.

And under Display adapters, there were only the Microsoft remote emulated and "Microsoft Basic" devices showing.

I reinstalled the AMD driver and rebooted, and it came back to life.

Also, I must say the VMs feel snappier. Faster boots, and less delay/latency. But I have not measured pre/post, so it's only a feeling! :D