Maybe there are additional problems. I just noticed that one of our rolling-snapshot tasks started twice again.

This time it's even weirder: both jobs are listed as successful.

The first job displays nothing at all in the detail window:

json:

    {
      "data": {
        "mode": "full",
        "reportWhen": "failure"
      },
      "id": "1583917200011",
      "jobId": "22050542-f6ec-4437-a031-0600260197d7",
      "jobName": "odoo_hourly",
      "message": "backup",
      "scheduleId": "ab9468b4-9b8a-44a0-83a8-fc6b84fe8a95",
      "start": 1583917200011,
      "status": "success",
      "end": 1583917207066
    }

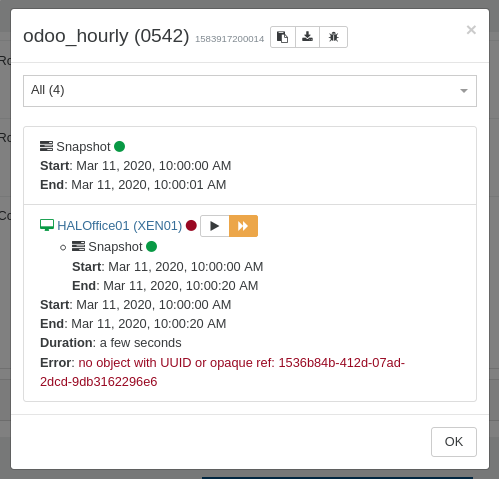

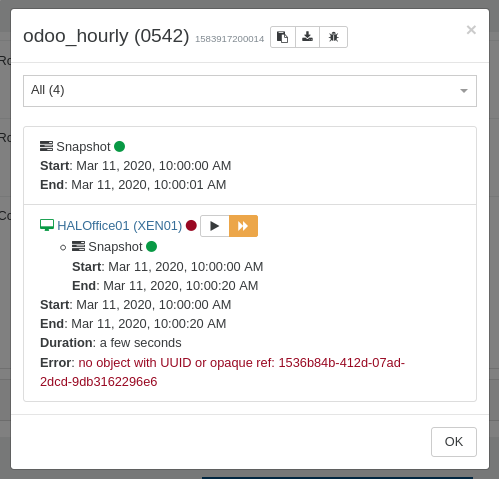

The second job shows 4(?) VMs, although it consists of only one VM. That VM failed, yet the job is displayed as successful:

json:

    {
      "data": {
        "mode": "full",
        "reportWhen": "failure"
      },
      "id": "1583917200014",
      "jobId": "22050542-f6ec-4437-a031-0600260197d7",
      "jobName": "odoo_hourly",
      "message": "backup",
      "scheduleId": "ab9468b4-9b8a-44a0-83a8-fc6b84fe8a95",
      "start": 1583917200014,
      "status": "success",
      "tasks": [
        {
          "id": "1583917200019",
          "message": "snapshot",
          "start": 1583917200019,
          "status": "success",
          "end": 1583917201773,
          "result": "32ba0201-26bd-6f29-c507-0416129058b4"
        },
        {
          "id": "1583917201780",
          "message": "add metadata to snapshot",
          "start": 1583917201780,
          "status": "success",
          "end": 1583917201791
        },
        {
          "id": "1583917206856",
          "message": "waiting for uptodate snapshot record",
          "start": 1583917206856,
          "status": "success",
          "end": 1583917207064
        },
        {
          "data": {
            "type": "VM",
            "id": "b6b63fab-bd5f-8640-305d-3565361b213f"
          },
          "id": "1583917200022",
          "message": "Starting backup of HALOffice01. (22050542-f6ec-4437-a031-0600260197d7)",
          "start": 1583917200022,
          "status": "failure",
          "tasks": [
            {
              "id": "1583917200028",
              "message": "snapshot",
              "start": 1583917200028,
              "status": "success",
              "end": 1583917220144,
              "result": "62e06abc-6fca-9564-aad7-7933dec90ba3"
            },
            {
              "id": "1583917220148",
              "message": "add metadata to snapshot",
              "start": 1583917220148,
              "status": "success",
              "end": 1583917220167
            }
          ],
          "end": 1583917220405,
          "result": {
            "message": "no object with UUID or opaque ref: 1536b84b-412d-07ad-2dcd-9db3162296e6",
            "name": "Error",
            "stack": "Error: no object with UUID or opaque ref: 1536b84b-412d-07ad-2dcd-9db3162296e6\n at Xapi.apply (/opt/xen-orchestra/packages/xen-api/src/index.js:573:11)\n at Xapi.getObject (/opt/xen-orchestra/packages/xo-server/src/xapi/index.js:128:24)\n at Xapi.deleteVm (/opt/xen-orchestra/packages/xo-server/src/xapi/index.js:692:12)\n at iteratee (/opt/xen-orchestra/packages/xo-server/src/xo-mixins/backups-ng/index.js:1268:23)\n at /opt/xen-orchestra/@xen-orchestra/async-map/src/index.js:32:17\n at Promise._execute (/opt/xen-orchestra/node_modules/bluebird/js/release/debuggability.js:384:9)\n at Promise._resolveFromExecutor (/opt/xen-orchestra/node_modules/bluebird/js/release/promise.js:518:18)\n at new Promise (/opt/xen-orchestra/node_modules/bluebird/js/release/promise.js:103:10)\n at /opt/xen-orchestra/@xen-orchestra/async-map/src/index.js:31:7\n at arrayMap (/opt/xen-orchestra/node_modules/lodash/_arrayMap.js:16:21)\n at map (/opt/xen-orchestra/node_modules/lodash/map.js:50:10)\n at asyncMap (/opt/xen-orchestra/@xen-orchestra/async-map/src/index.js:30:5)\n at BackupNg._backupVm (/opt/xen-orchestra/packages/xo-server/src/xo-mixins/backups-ng/index.js:1265:13)\n at runMicrotasks (<anonymous>)\n at processTicksAndRejections (internal/process/task_queues.js:97:5)\n at handleVm (/opt/xen-orchestra/packages/xo-server/src/xo-mixins/backups-ng/index.js:734:13)"
          }
        }
      ],
      "end": 1583917207065
    }

So the results are clearly wrong: the job ran twice, this time at the same point in time (the two runs started 3 ms apart, at 1583917200011 and 1583917200014).
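In case it helps anyone reproduce the check, here is a minimal Python sketch that walks a job log like the second one above and flags sub-tasks that contradict their parent. It is not part of Xen Orchestra: `find_inconsistencies` is a hypothetical helper, and it only assumes the `status`, `end`, and `tasks` fields that appear in the log. The sample data is a trimmed copy of the second log.

```python
from typing import Iterator


def find_inconsistencies(task: dict, path: str = "job") -> Iterator[str]:
    """Yield notes about sub-tasks whose status or end time contradicts their parent."""
    for index, child in enumerate(task.get("tasks", [])):
        child_path = f"{path}.tasks[{index}]"
        # A failed sub-task under a parent that reports success.
        if task.get("status") == "success" and child.get("status") == "failure":
            yield f"{child_path} failed but parent {path} reports success"
        # A sub-task that finished after its parent was already marked as ended.
        if "end" in task and "end" in child and child["end"] > task["end"]:
            yield f"{child_path} ended {child['end'] - task['end']} ms after parent {path}"
        # Recurse into nested tasks.
        yield from find_inconsistencies(child, child_path)


# Trimmed copy of the second log (only the fields the check needs).
second_run = {
    "id": "1583917200014",
    "status": "success",
    "end": 1583917207065,
    "tasks": [
        {"message": "snapshot", "status": "success", "end": 1583917201773},
        {"message": "add metadata to snapshot", "status": "success", "end": 1583917201791},
        {"message": "waiting for uptodate snapshot record", "status": "success", "end": 1583917207064},
        {
            "message": "Starting backup of HALOffice01. (22050542-f6ec-4437-a031-0600260197d7)",
            "status": "failure",
            "end": 1583917220405,
            "tasks": [
                {"message": "snapshot", "status": "success", "end": 1583917220144},
                {"message": "add metadata to snapshot", "status": "success", "end": 1583917220167},
            ],
        },
    ],
}

notes = list(find_inconsistencies(second_run))
for note in notes:
    print(note)
```

On this log the sketch reports two things: the VM task failed while the job reports success, and the VM task ended 13340 ms after the job's own `end` timestamp.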

Would it help to delete and recreate all of the backup jobs?

What extra information could I provide to help get this issue fixed?

I am already thinking about setting up a new xo-server from scratch.

Please help and advise,

Thanks, Toni