MTU problems with VxLAN

-

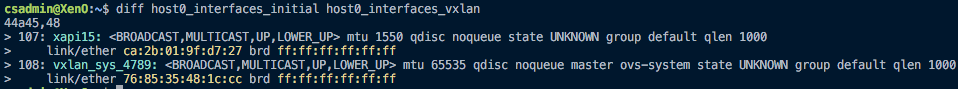

When I create the vxlan and look at the host consoles, I don't see a tunnel interface appear. Here is the diff between the interfaces before and after VXLAN creation:

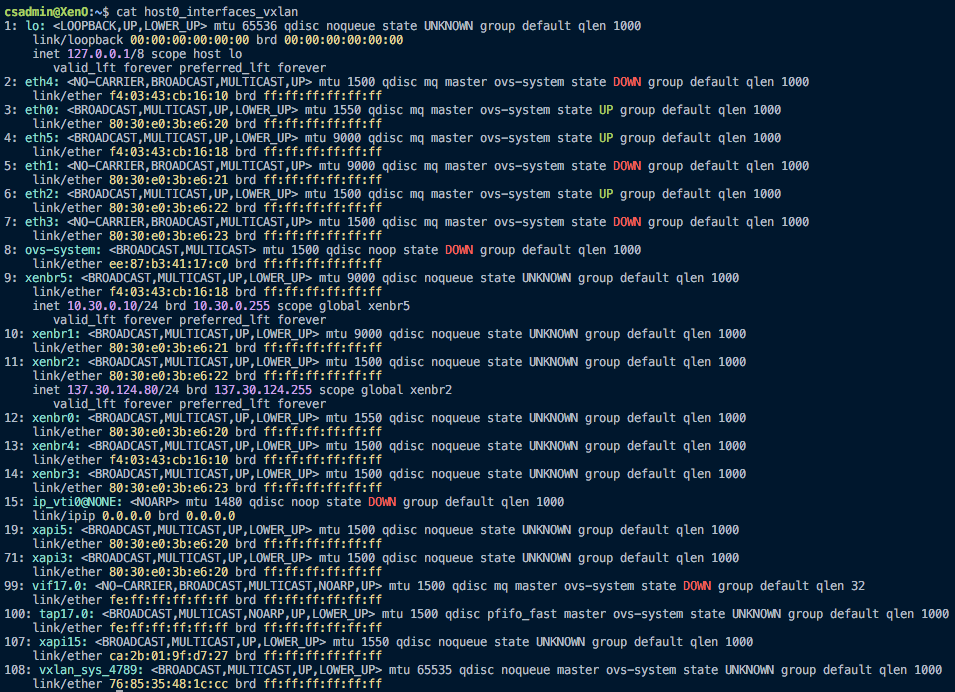

And the full list of interfaces post-VXLAN creation, just in case you want to see it:

I tried assigning an IP address to `vxlan_sys_4789` on both hosts and pinging between them. This was unsuccessful. I repeated this method with the `xapi15` interface and was able to ping with packets well over 1500 bytes (I think the largest value that I tried was 2000 bytes), although the first 4 ICMP packets were lost every time.

-
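A side note on the large-ping test above: unless fragmentation is forbidden, a 2000-byte ping can still succeed over a 1500-byte interface because the sending host fragments it, so on its own the result does not prove the path carries jumbo frames. A stricter check is to forbid fragmentation and size the payload so the whole IP packet equals the MTU under test. The sketch below only does that arithmetic, assuming IPv4 with no IP options (20-byte IP header plus 8-byte ICMP header); `-M do` and `-s` are the Linux iputils `ping` options for "prohibit fragmentation" and payload size, and `<peer-ip>` is a placeholder.

```python
# Header sizes for an ICMP echo request over IPv4, assuming no IP options.
IPV4_HEADER = 20
ICMP_HEADER = 8


def ping_payload_for_mtu(mtu: int) -> int:
    """Largest ICMP payload (the value given to `ping -s`) that fits in a
    single unfragmented IPv4 packet on a link with the given MTU."""
    payload = mtu - IPV4_HEADER - ICMP_HEADER
    if payload < 0:
        raise ValueError(f"MTU {mtu} is too small for an ICMP echo request")
    return payload


if __name__ == "__main__":
    for mtu in (1450, 1500, 1550, 9000):
        size = ping_payload_for_mtu(mtu)
        # iputils ping: -M do forbids fragmentation, -s sets the payload size.
        print(f"MTU {mtu}: ping -M do -s {size} <peer-ip>")
```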

My bad, I meant `xapi` instead of tunnel... So you tested the correct interface!

If you succeeded between 2 hosts, it means the tunnels are working, and the issue is between the VM and the host. To confirm it, can you try to ping from a VM to its resident host on the private network? Can you show the network interfaces of the VM as well?

Thanks

-


@BenjiReis I think that I have gotten to the bottom of the issue, although I'm not sure why it's happening or how to fix it.

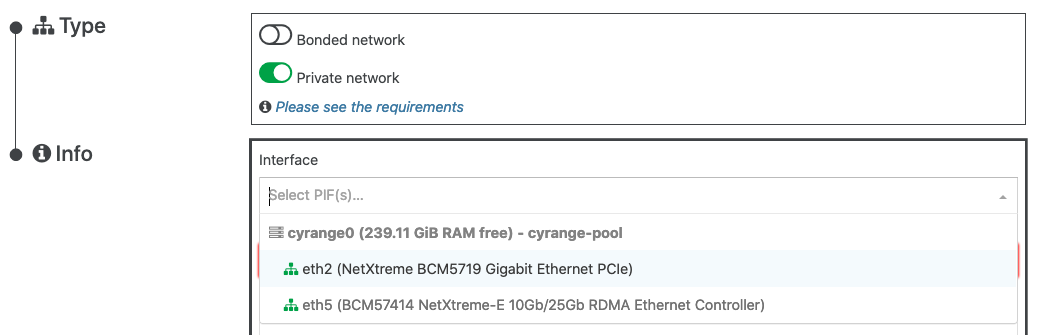

When creating the VxLAN (MTU 1550), I am presented with the option to use 2 PIFs: `eth2` and `eth5`. `eth5` is the desired interface, as it is configured to use jumbo frames (9000 MTU), and it is the one that I selected. I destroyed and recreated the VxLAN just to be sure of this. I noticed that when the two VMs are on the same host, they can use the VxLAN transparently with their default MTU of 1500. However, once I migrate one of the VMs to a different host, packets are dropped until I set the MTU to 1450 within the VMs. Knowing this, I suspected that there was an MTU of 1500 somewhere along the line causing the packets to be lost.
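The 1450 value is consistent with the tunnel running over a 1500-byte PIF. VXLAN over IPv4 wraps each inner Ethernet frame in an 8-byte VXLAN header, an 8-byte UDP header and a 20-byte outer IPv4 header, and the 14-byte inner Ethernet header now also counts against the PIF's MTU, for 50 bytes in total. The sketch below does that arithmetic; it assumes an IPv4 underlay with no IP options and no VLAN tag on the inner frame, and the PIF names and MTUs are simply the ones from this thread.

```python
# Bytes VXLAN adds per packet, measured against the underlay (PIF) MTU.
# Assumes an IPv4 underlay, no IP options, no 802.1Q tag on the inner frame.
INNER_ETHERNET = 14  # inner MAC header, carried inside the tunnel
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20
VXLAN_OVERHEAD = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4  # 50


def max_inner_mtu(underlay_mtu: int) -> int:
    """Largest VM interface MTU whose encapsulated packets still fit the PIF."""
    return underlay_mtu - VXLAN_OVERHEAD


def required_underlay_mtu(inner_mtu: int) -> int:
    """Smallest PIF MTU that carries a given VM MTU without fragmentation."""
    return inner_mtu + VXLAN_OVERHEAD


if __name__ == "__main__":
    for pif, mtu in (("eth2", 1500), ("eth5", 9000)):
        print(f"{pif} (MTU {mtu}): VM MTU up to {max_inner_mtu(mtu)}")
    print(f"VM MTU 1500 needs a PIF MTU of at least {required_underlay_mtu(1500)}")
```

Under those assumptions, a tunnel on `eth2` allows a VM MTU of at most 1450, while a 1500-byte VM MTU needs a PIF MTU of at least 1550, which is also the MTU chosen for the VxLAN here.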

Here is how I discovered the issue: I set the VM MTUs to 1450 and monitored each PIF on the host consoles using `iftop` while running `iperf` on the VMs to test bandwidth. I found that the VxLAN is using the wrong PIF: it is using `eth2`, which has a 1500 MTU, thus causing the packets to be lost. I have no idea why, but Xen Orchestra or XCP-ng is assigning the VxLAN to the wrong PIF.

-
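The `iftop` check described above can also be done with the kernel's per-interface byte counters under `/sys/class/net/<iface>/statistics/`, which gives numbers that are easy to compare across hosts. A minimal sketch, assuming a Linux dom0 with sysfs mounted in the usual place: snapshot the counters, run `iperf` between the VMs, snapshot again, and rank the PIFs by bytes moved.

```python
from pathlib import Path
from typing import Dict, List, Tuple

SYSFS_NET = Path("/sys/class/net")
Counters = Dict[str, Tuple[int, int]]  # iface -> (tx_bytes, rx_bytes)


def read_bytes(iface: str, sysfs: Path = SYSFS_NET) -> Tuple[int, int]:
    """Return (tx_bytes, rx_bytes) for one interface from sysfs."""
    stats = sysfs / iface / "statistics"
    tx = int((stats / "tx_bytes").read_text())
    rx = int((stats / "rx_bytes").read_text())
    return tx, rx


def snapshot(ifaces: List[str], sysfs: Path = SYSFS_NET) -> Counters:
    """Read the counters of several interfaces at roughly the same moment."""
    return {iface: read_bytes(iface, sysfs) for iface in ifaces}


def busiest(before: Counters, after: Counters) -> List[Tuple[str, int]]:
    """Rank interfaces by total bytes (tx + rx) moved between two snapshots."""
    moved = {
        iface: (after[iface][0] - before[iface][0])
        + (after[iface][1] - before[iface][1])
        for iface in before
    }
    return sorted(moved.items(), key=lambda item: item[1], reverse=True)
```

For example, call `snapshot(["eth2", "eth5"])`, run `iperf` for a few seconds, take a second snapshot, and pass both to `busiest`; assuming little other traffic on the host, the PIF at the top is most likely the one carrying the tunnel.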

Digging deeper, I think I am developing a hunch for why this isn't working. Our pool currently has 5 servers, two of which were unfortunately purchased at a later date and are not a perfect match with the other 3.

`eth5` only runs on hosts 1, 2, and 3. We thought that this wouldn't matter, and that Xen Orchestra would simply keep the VMs that use the VxLAN on the hosts which use `eth5`. I took a look at the Xen Orchestra logs and saw this:

Sep 16 01:11:10 XenO xo-server[130952]: 2020-09-16T06:11:10.116Z xo:xo-server:sdn-controller INFO Private network registered { privateNetwork: '234880c5-c3fd-466d-9e6a-f9fbaa36700a' }
Sep 16 01:11:10 XenO xo-server[130952]: 2020-09-16T06:11:10.182Z xo:xo-server:sdn-controller INFO New network created {
Sep 16 01:11:10 XenO xo-server[130952]: privateNetwork: '234880c5-c3fd-466d-9e6a-f9fbaa36700a',
Sep 16 01:11:10 XenO xo-server[130952]: network: 'vxlan',
Sep 16 01:11:10 XenO xo-server[130952]: pool: 'cyrange-pool'
Sep 16 01:11:10 XenO xo-server[130952]: }
Sep 16 01:11:10 XenO xo-server[130952]: 2020-09-16T06:11:10.187Z xo:xo-server:sdn-controller ERROR Can't create tunnel: no available PIF {
Sep 16 01:11:10 XenO xo-server[130952]: pifDevice: 'eth5',
Sep 16 01:11:10 XenO xo-server[130952]: pifVlan: '-1',
Sep 16 01:11:10 XenO xo-server[130952]: network: 'vxlan',
Sep 16 01:11:10 XenO xo-server[130952]: host: 'cyrange4',
Sep 16 01:11:10 XenO xo-server[130952]: pool: 'cyrange-pool'
Sep 16 01:11:10 XenO xo-server[130952]: }
Sep 16 01:11:10 XenO xo-server[130952]: 2020-09-16T06:11:10.189Z xo:xo-server:sdn-controller ERROR Can't create tunnel: no available PIF {
Sep 16 01:11:10 XenO xo-server[130952]: pifDevice: 'eth5',
Sep 16 01:11:10 XenO xo-server[130952]: pifVlan: '-1',
Sep 16 01:11:10 XenO xo-server[130952]: network: 'vxlan',
Sep 16 01:11:10 XenO xo-server[130952]: host: 'cyrange5',
Sep 16 01:11:10 XenO xo-server[130952]: pool: 'cyrange-pool'
Sep 16 01:11:10 XenO xo-server[130952]: }

`eth2`, however, runs on all 5 hosts. Maybe the sdn-controller is falling back to `eth2` once it sees that `eth5` is unavailable on hosts 4 and 5?

-

It seems like the simplest fix, for now, would be to use a different PIF that runs across all 5 hosts and supports jumbo frames. We actually have one (`eth0`) that fits these requirements, but it isn't offered in the dropdown list when I go to create the private network:

Any thoughts on why this might be?

-

Hmm, not having `eth5` would indeed be a problem, but I don't see how `eth2` could have been used for creating the VxLAN.

The rules for creating a private network on an interface are:

- the interface must be either physical, a bond master or a VLAN interface
- the interface must have an IP and NOT be a bond slave

Does `eth0` match those criteria?

-
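Expressed as code, the two rules listed just before the question about `eth0` amount to a simple predicate. This is only a sketch: the `Pif` class and its field names are hypothetical, loosely modelled on a XAPI PIF record, and not the sdn-controller's actual check. Using `-1` for "no VLAN" mirrors the `pifVlan: '-1'` value in the earlier logs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pif:
    """Hypothetical, simplified PIF description (field names are illustrative)."""
    device: str
    physical: bool = False
    is_bond_master: bool = False
    is_bond_slave: bool = False
    vlan: int = -1  # -1 means "not a VLAN interface"
    ip: Optional[str] = None


def can_host_private_network(pif: Pif) -> bool:
    """Rule 1: physical, bond master or VLAN. Rule 2: has an IP, not a bond slave."""
    right_kind = pif.physical or pif.is_bond_master or pif.vlan != -1
    has_ip = bool(pif.ip)
    return right_kind and has_ip and not pif.is_bond_slave


if __name__ == "__main__":
    examples = [
        Pif("eth5", physical=True, ip="10.30.0.12"),
        Pif("eth0", physical=True),  # example: physical, but no IP configured
    ]
    for pif in examples:
        print(pif.device, can_host_private_network(pif))
```

As written, the `eth0` example is rejected only because no IP is set on it, which is one thing worth checking on the real interface.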

@BenjiReis We have corrected our configuration so that `eth5` is on all of the hosts and has an adequate MTU (9000). It does have a static IP address on `xenbr5`, and I believe it satisfies the requirements you listed. Here are the network interfaces for one of the hosts, which has a vxlan and a VM connected to both the private network and another network:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever
2: eth4: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000 link/ether f4:03:43:cb:09:e0 brd ff:ff:ff:ff:ff:ff
3: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1550 qdisc mq master ovs-system state UP group default qlen 1000 link/ether 80:30:e0:3b:d5:90 brd ff:ff:ff:ff:ff:ff
4: eth5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master ovs-system state UP group default qlen 1000 link/ether f4:03:43:cb:09:e8 brd ff:ff:ff:ff:ff:ff
5: eth1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000 link/ether 80:30:e0:3b:d5:91 brd ff:ff:ff:ff:ff:ff
6: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000 link/ether 80:30:e0:3b:d5:92 brd ff:ff:ff:ff:ff:ff
7: eth3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000 link/ether 80:30:e0:3b:d5:93 brd ff:ff:ff:ff:ff:ff
8: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000 link/ether 76:8b:d6:46:ba:ec brd ff:ff:ff:ff:ff:ff
9: xenbr5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UNKNOWN group default qlen 1000 link/ether f4:03:43:cb:09:e8 brd ff:ff:ff:ff:ff:ff inet 10.30.0.12/24 brd 10.30.0.255 scope global xenbr5 valid_lft forever preferred_lft forever
10: xenbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1550 qdisc noqueue state UNKNOWN group default qlen 1000 link/ether 80:30:e0:3b:d5:90 brd ff:ff:ff:ff:ff:ff
11: xenbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000 link/ether 80:30:e0:3b:d5:92 brd ff:ff:ff:ff:ff:ff inet 137.30.124.82/24 brd 137.30.124.255 scope global xenbr2 valid_lft forever preferred_lft forever
12: xenbr4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000 link/ether f4:03:43:cb:09:e0 brd ff:ff:ff:ff:ff:ff
13: xenbr3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000 link/ether 80:30:e0:3b:d5:93 brd ff:ff:ff:ff:ff:ff
14: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000 link/ipip 0.0.0.0 brd 0.0.0.0
20: xapi5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000 link/ether 80:30:e0:3b:d5:90 brd ff:ff:ff:ff:ff:ff
25: xapi2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1550 qdisc noqueue state UNKNOWN group default qlen 1000 link/ether fa:c1:41:78:32:6f brd ff:ff:ff:ff:ff:ff
26: vxlan_sys_4789: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue master ovs-system state UNKNOWN group default qlen 1000 link/ether 5a:d3:89:b8:7b:8f brd ff:ff:ff:ff:ff:ff
27: vif2.0: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 32 link/ether fe:ff:ff:ff:ff:ff brd ff:ff:ff:ff:ff:ff
28: vif2.1: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1550 qdisc mq master ovs-system state UP group default qlen 32 link/ether fe:ff:ff:ff:ff:ff brd ff:ff:ff:ff:ff:ff

And here are the interfaces from within the VM:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever inet6 ::1/128 scope host valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000 link/ether 9e:e9:1a:4e:e4:ba brd ff:ff:ff:ff:ff:ff inet 137.30.122.109/24 brd 137.30.122.255 scope global dynamic eth0 valid_lft 4696sec preferred_lft 4696sec inet6 fe80::9ce9:1aff:fe4e:e4ba/64 scope link valid_lft forever preferred_lft forever
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc mq state UP group default qlen 1000 link/ether f6:ce:ed:a7:18:68 brd ff:ff:ff:ff:ff:ff inet 10.1.1.32/24 scope global eth1 valid_lft forever preferred_lft forever inet6 fe80::f4ce:edff:fea7:1868/64 scope link valid_lft forever preferred_lft forever

Even after configuring `eth5` on all of the hosts, the VxLAN traffic continues to be routed through `eth2`, and I don't see any traffic flowing through the `xapi` interface at all. There are a lot of errors related to Open vSwitch appearing in the Xen Orchestra logs as well:

Sep 17 12:51:17 XenO xo-server[25920]: 2020-09-17T17:51:17.324Z xo:xo-server:sdn-controller:ovsdb-client ERROR No bridge found for network { network: 'vxlan', host: 'cyrange4' }
Sep 17 12:51:17 XenO xo-server[25920]: 2020-09-17T17:51:17.326Z xo:xo-server:sdn-controller:ovsdb-client ERROR No result for select {
Sep 17 12:51:17 XenO xo-server[25920]: columns: [ '_uuid', 'name' ],
Sep 17 12:51:17 XenO xo-server[25920]: table: 'Bridge',
Sep 17 12:51:17 XenO xo-server[25920]: where: [ [ 'external_ids', 'includes', [Array] ] ],
Sep 17 12:51:17 XenO xo-server[25920]: host: 'cyrange2'
Sep 17 12:51:17 XenO xo-server[25920]: }
Sep 17 12:51:17 XenO xo-server[25920]: 2020-09-17T17:51:17.326Z xo:xo-server:sdn-controller:ovsdb-client ERROR No bridge found for network { network: 'vxlan', host: 'cyrange2' }
Sep 17 12:51:18 XenO xo-server[25920]: 2020-09-17T17:51:18.691Z xo:xo-server:sdn-controller:ovsdb-client ERROR No result for select {
Sep 17 12:51:18 XenO xo-server[25920]: columns: [ '_uuid', 'name' ],
Sep 17 12:51:18 XenO xo-server[25920]: table: 'Bridge',
Sep 17 12:51:18 XenO xo-server[25920]: where: [ [ 'external_ids', 'includes', [Array] ] ],
Sep 17 12:51:18 XenO xo-server[25920]: host: 'cyrange1'
Sep 17 12:51:18 XenO xo-server[25920]: }
Sep 17 12:51:18 XenO xo-server[25920]: 2020-09-17T17:51:18.691Z xo:xo-server:sdn-controller:ovsdb-client ERROR No result for select {
Sep 17 12:51:18 XenO xo-server[25920]: columns: [ '_uuid', 'name' ],
Sep 17 12:51:18 XenO xo-server[25920]: table: 'Bridge',
Sep 17 12:51:18 XenO xo-server[25920]: where: [ [ 'external_ids', 'includes', [Array] ] ],
Sep 17 12:51:18 XenO xo-server[25920]: host: 'cyrange0'
Sep 17 12:51:18 XenO xo-server[25920]: }

The following lines also stand out when we try to create VMs that use the private network:

Sep 17 13:02:38 XenO xo-server[25920]: 2020-09-17T18:02:38.925Z xo:xo-server:sdn-controller INFO Unable to add host to network: no tunnel available { network: 'vxlan', host: 'cyrange1', pool: 'cyrange-pool' }
Sep 17 13:02:43 XenO xo-server[25920]: 2020-09-17T18:02:43.947Z xo:xo-server:sdn-controller INFO Unable to add host to network: no tunnel available { network: 'vxlan', host: 'cyrange1', pool: 'cyrange-pool' }
Sep 17 13:02:49 XenO xo-server[25920]: 2020-09-17T18:02:49.563Z xo:xo-server:sdn-controller INFO Unable to add host to network: no tunnel available { network: 'vxlan', host: 'cyrange2', pool: 'cyrange-pool' }
Sep 17 13:02:59 XenO xo-server[25920]: 2020-09-17T18:02:59.592Z xo:xo-server:sdn-controller INFO Unable to add host to network: no tunnel available { network: 'vxlan', host: 'cyrange2', pool: 'cyrange-pool' }

Sorry for the wall of text; I just wanted to provide you with as much debugging information as possible. Additionally, when I run `iftop` on either of the `xapi` interfaces while doing a test with `iperf`, I don't see any traffic flowing through at all, leading me to suspect that the VMs are not even using the tunnels.

-
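Comparing `ip a` dumps from five hosts by eye is error-prone. A small sketch, assuming output in the format of the host and VM interface listings in the previous post (one interface per line or flattened onto a single line), can map each interface to its MTU and flag anything below a threshold, for example 1550 bytes, which is the minimum for carrying a 1500-byte VM MTU over VXLAN with its 50-byte overhead on an IPv4 underlay.

```python
import re
from typing import Dict, List

# Start of each interface entry in `ip addr` / `ip link` output, e.g.
# "4: eth5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 ..."
ENTRY = re.compile(r"(\d+): ([\w.@-]+): <[^>]*> mtu (\d+)")


def interface_mtus(ip_output: str) -> Dict[str, int]:
    """Map interface name -> MTU; works on multi-line or flattened output."""
    return {name: int(mtu) for _index, name, mtu in ENTRY.findall(ip_output)}


def too_small(mtus: Dict[str, int], required: int) -> List[str]:
    """Names of interfaces whose MTU is below `required`, sorted."""
    return sorted(name for name, mtu in mtus.items() if mtu < required)
```

Run against the host listing above, `interface_mtus` should report 9000 for `eth5` and `xenbr5` and 1500 for `eth2`.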

OK, so now all your hosts have an `eth5` NIC that matches the criteria for creating a private network, right?

What you can do now is reload the SDN controller so that it recreates the private network. If that doesn't work, you can delete the private network and create a new one on `eth5`, now that the environment is correctly configured.

-

I think the `eth5` NIC has the correct properties; the only thing that I'm unclear on is whether it needs an IP address itself or if `xenbr5` having the IP address is good enough. When I look at each host's network information in Xen Orchestra, I do see an IP address assigned to `eth5`.

We tried to start with as clean a slate as possible. We restarted all of the physical hosts, restarted xo-server, reloaded the sdn-controller plugin, recreated the vxlan, and then recreated the VMs, and we still have the same problem with the traffic intended for the vxlan flowing through `eth2`. Looking at the logs, at the exact moment that we attempt to create the vxlan, we see the following two types of errors for all of the hosts:

Sep 18 03:00:18 XenO xo-server[37374]: 2020-09-18T08:00:18.611Z xo:xo-server:sdn-controller:ovsdb-client ERROR No bridge found for network { network: 'vxlan', host: 'cyrange2' }
Sep 18 03:00:18 XenO xo-server[37374]: 2020-09-18T08:00:18.611Z xo:xo-server:sdn-controller:ovsdb-client ERROR No result for select {
Sep 18 03:00:18 XenO xo-server[37374]: columns: [ '_uuid', 'name' ],
Sep 18 03:00:18 XenO xo-server[37374]: table: 'Bridge',
Sep 18 03:00:18 XenO xo-server[37374]: where: [ [ 'external_ids', 'includes', [Array] ] ],
Sep 18 03:00:18 XenO xo-server[37374]: host: 'cyrange5'
Sep 18 03:00:18 XenO xo-server[37374]: }

-
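The "No result for select" errors in the previous post look like the controller asking each host's OVSDB for the bridge that belongs to the vxlan network and getting no rows back. As an illustration only, not xo-server's code, this is roughly what such a request looks like in the OVSDB JSON-RPC protocol (RFC 7047). The `xs-network-uuids` external_ids key is an assumption about how XAPI tags its bridges; the log itself only shows `[Array]` for the condition.

```python
from itertools import count
from typing import Any, Dict, List

_request_ids = count(1)  # JSON-RPC ids let the client match replies to requests


def bridge_select(network_uuid: str) -> Dict[str, Any]:
    """OVSDB `transact` request (RFC 7047) selecting the _uuid and name of every
    Bridge whose external_ids map includes the given network UUID.
    The "xs-network-uuids" key is an assumption, not taken from xo-server."""
    condition = [
        "external_ids",
        "includes",
        ["map", [["xs-network-uuids", network_uuid]]],
    ]
    operation = {
        "op": "select",
        "table": "Bridge",
        "where": [condition],
        "columns": ["_uuid", "name"],
    }
    return {
        "method": "transact",
        "params": ["Open_vSwitch", operation],
        "id": next(_request_ids),
    }


def bridge_names(reply: Dict[str, Any]) -> List[str]:
    """Names from a transact reply; [] means no matching bridge on that host."""
    if reply.get("error"):
        raise RuntimeError(reply["error"])
    result = reply["result"][0]
    if "error" in result:  # per-operation error object
        raise RuntimeError(result["error"])
    return [row["name"] for row in result.get("rows", [])]
```

Under these assumptions, an empty list from `bridge_names` would correspond to the "No bridge found for network" message: the host has no OVS bridge tagged with that network's UUID.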

That's strange. Do you have an XOA (even the free version would be enough) running on this pool?

If not, please set one up and open a support ticket; you can do this even as a free user. This will allow me to access your pool and see what's what in person, which will be easier.

-

I don't, but I can get one set up for Monday. Thanks!

-

Perfect, see you on Monday on the support ticket then!

Meanwhile, have a nice weekend!

-

The issue has been fixed on this branch; it'll be merged into master soon.