Best performing filesystem

-

What would be the recommended way to get decent IOPS and high throughput?

I currently have an LSI MegaRAID controller with 8 SATA drives connected: 3 TB drives set up as RAID 10.

It is formatted as ext4, and XCP-ng uses it to store .vhd files. Originally I let dom0 handle it, but performance was a bit on the low end. So I ended up passing the controller through to an Ubuntu guest, which exports the array to dom0 over NFS, and to other VMs over Samba for files that are shared across multiple VMs.

XCP-ng is running from a small 120 GB SSD, which holds only the Ubuntu VM. This weekend I plan on reinstalling XCP-ng in UEFI mode on a new 1 TB SSD, and everything on the RAID is temporarily backed up to the cloud, so this will be a good time to make any changes.

I have read good things about ZFS, but from what I can tell, it's kind of useless with so few disks. Also, it prefers not to run on top of hardware RAID(?).

So going the ZFS path would probably mean destroying the RAID and letting ZFS handle the disks directly, giving dom0 12-16 GB of RAM, and maybe(?) using the small 120 GB SSD for L2ARC. Any advice?

-

@technot when you say performance was a bit on the low end when dom0 handled the drives, how low was it compared to when the controller was passed through?

ZFS is still good with a small number of disks, but performance won't be higher than RAID10 until you have more vdevs (which means striping across multiple vdevs). You do need to pass the disks through to dom0 directly, so you'll have to destroy the RAID as you mentioned. And the more RAM the better for ZFS; a common rule of thumb is around 1 GB per 1 TB of storage for good caching performance.
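To put rough numbers on that for 8 x 3 TB drives, here is a small sizing sketch. It assumes the disks would be laid out as striped two-way mirror vdevs (ZFS's closest analogue to RAID10) and applies the ~1 GB per 1 TB heuristic mentioned above; the function names are hypothetical, and since the heuristic doesn't say whether it means raw or usable capacity, both are shown.

```python
# Rough ZFS sizing sketch (hypothetical helpers, not part of any ZFS tooling).
# Assumes a pool of striped mirror vdevs and the ~1 GB RAM per 1 TB heuristic.

def mirror_pool_usable_tb(num_disks: int, disk_tb: float, disks_per_mirror: int = 2) -> float:
    """Usable capacity of a pool built from equal-width mirror vdevs."""
    if num_disks % disks_per_mirror != 0:
        raise ValueError("disk count must be a multiple of the mirror width")
    vdevs = num_disks // disks_per_mirror
    # Each mirror vdev contributes the capacity of one member disk.
    return vdevs * disk_tb


def arc_rule_of_thumb_gb(storage_tb: float, gb_per_tb: float = 1.0) -> float:
    """RAM suggested for ARC caching under the ~1 GB per 1 TB heuristic."""
    return storage_tb * gb_per_tb


if __name__ == "__main__":
    disks, size_tb, mirror_width = 8, 3.0, 2
    raw_tb = disks * size_tb
    usable_tb = mirror_pool_usable_tb(disks, size_tb, mirror_width)
    print(f"vdevs: {disks // mirror_width}, raw: {raw_tb} TB, usable: {usable_tb} TB")
    print(f"ARC RAM (usable basis): {arc_rule_of_thumb_gb(usable_tb)} GB")
    print(f"ARC RAM (raw basis): {arc_rule_of_thumb_gb(raw_tb)} GB")
```

For this setup it reports 4 vdevs and 12 TB usable out of 24 TB raw, so the heuristic lands somewhere between 12 and 24 GB of RAM, which is in the same range as the 12-16 GB you were considering for dom0. Treat it as a starting point, not a hard requirement.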

One thing to note: you can't use ZFS for dom0 yet, so you still need another drive for XCP-ng itself.

-

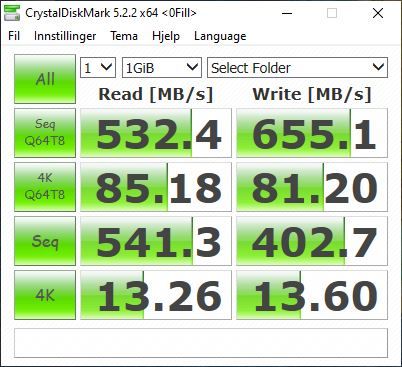

I don't remember the exact numbers; it was quite some time ago, when XCP-ng 7.6 was fresh. But I believe it was in the ballpark of a 200-300 MB/s difference for both read and write, measured with CrystalDiskMark. I will run the benchmark again before and after the reinstall for comparison.

I was planning to do it this weekend, but I haven't received the new SSD yet.

After the benchmark, I might reconfigure to RAID 0 with a daily backup to the cloud.

Another question, though: a typical bottleneck is tapdisk being limited to a single core/thread. What if I give a VM that needs maximum throughput two VHDs and then have Windows software-RAID0 those drives?
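To illustrate what the striping itself does (this doesn't answer the tapdisk question), here is a minimal sketch of how a RAID0 volume maps logical offsets onto its member disks and splits one request between them. The 64 KiB stripe size and the helper names are assumptions for illustration; Windows striped volumes and other RAID0 implementations pick their own stripe sizes.

```python
# Minimal RAID0 striping sketch: logical offset -> (member disk, offset on that disk).
# STRIPE_SIZE and the function names are illustrative assumptions.

STRIPE_SIZE = 64 * 1024  # bytes per stripe unit (assumed)


def raid0_map(logical_offset: int, num_disks: int, stripe_size: int = STRIPE_SIZE) -> tuple[int, int]:
    """Return (disk index, byte offset on that disk) for a logical byte offset."""
    stripe_index = logical_offset // stripe_size
    within_stripe = logical_offset % stripe_size
    disk = stripe_index % num_disks  # stripes rotate round-robin across disks
    disk_offset = (stripe_index // num_disks) * stripe_size + within_stripe
    return disk, disk_offset


def split_request(offset: int, length: int, num_disks: int, stripe_size: int = STRIPE_SIZE) -> list[int]:
    """Count how many bytes of one logical I/O land on each member disk."""
    per_disk = [0] * num_disks
    pos, end = offset, offset + length
    while pos < end:
        disk, _ = raid0_map(pos, num_disks, stripe_size)
        # Never cross a stripe boundary in one chunk.
        chunk = min(stripe_size - pos % stripe_size, end - pos)
        per_disk[disk] += chunk
        pos += chunk
    return per_disk


if __name__ == "__main__":
    # A 1 MiB sequential read from offset 0 on a two-disk stripe.
    print(split_request(0, 1024 * 1024, num_disks=2))
```

A 1 MiB sequential read comes out as 512 KiB per virtual disk. So if each VHD were served by its own tapdisk process, the load would be shared between two processes; if one process handles both, striping alone wouldn't help.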

Would dom0 handle each VHD with its own tapdisk process, or would a single process still handle both VHDs attached to the VM?

-

I am not sure; maybe one of the devs can answer that question.

-

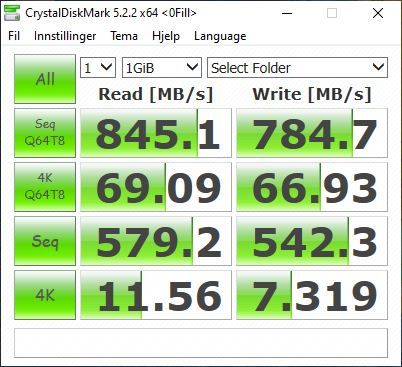

I ran a test with 8.2 and apparently things have changed: I am now getting better performance with dom0 handling the RAID controller.

Instead of destroying the RAID and going ZFS, I kept the setup the same and ran one test before and one after I switched control of the LSI MegaRAID from the guest to dom0.

However, after running the benchmark, I destroyed the RAID, recreated it as RAID0, and installed Ubuntu 20.10 with KVM/QEMU, mostly because I wanted to try out the emulated Q35 chipset in an HVM/PV setting, as discussed in a previous thread. I will post some details about my experience with emulated Q35 in that thread.

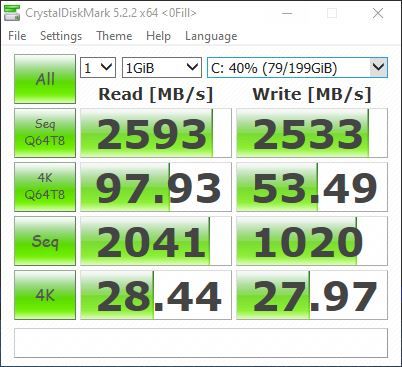

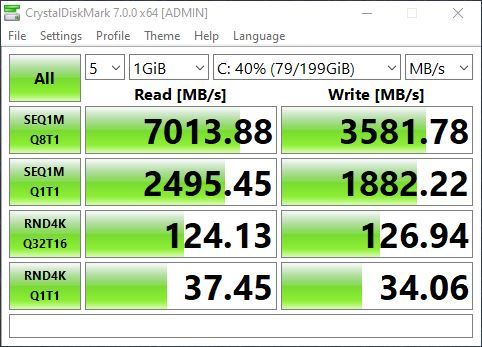

To finish this post, here is a benchmark of the RAID0 array on Ubuntu using QEMU+KVM. All drivers are VirtIO.

Yeah, I know: the more VMs running, the more Xen and KVM would balance out.

But if you're only running one or two I/O-intensive VMs and the rest are mostly idle, KVM clearly gives those users better performance.

-

This is already known and directly related to how Xen works (and to the stronger isolation it provides).

In cases where you absolutely need more IOPS/disk performance, the traditional Xen device model should be bypassed, e.g. by using passthrough.

Note this is an active field of research, even here. It's hard to have both security and performance.

-

@olivierlambert

Yeah, I did not mean to post this as a negative thing.

Currently Xen VMs are limited by single-threaded tapdisk, and will lose this fight if you compare servers running only a couple of VMs. But with a multitude of VMs, the picture evens out, and probably even ends up in Xen's favour. That last benchmark was just added for show.

My main point was that I can already see a difference in Xen's performance. I used to run passthrough, but as you can see from my XCP-ng 8.2 benchmark, having Xen control the RAID gave my VM better performance than my old 7.6 benchmark, where I was passing the controller to a dedicated NFS VM serving as the SR for all other VMs.

A couple of years ago, that picture was the reverse.

-

Yes, and this is good news!