Nvidia Quadro P400 not working on Ubuntu server via GPU/PCIe passthrough

-

Hi all, I'm hoping someone can help me.

Hardware specs:

- ASUS P8B WS

- Xeon E3-1230 v2

- 10GB ECC RAM

- Hauppauge WinTV QuadHD (TV Tuner)

- Nvidia Quadro P400

VM: Ubuntu Server 20.04 LTS

What I was hoping to do was use PCI/GPU passthrough to pass the GPU and TV tuner through to an Ubuntu Server VM running Plex Media Server. I got the TV tuner working (it could scan channels, etc.), and the GPU was also detected by Ubuntu. However, no matter what I did, I could not get the latest Nvidia drivers working, and some of the hardware details aren't complete.

I tried installing from the official Ubuntu PPA and the Nvidia PPA. I tried upgrading Ubuntu to the latest release, 21.10. I tried 21.04. I tried the 20.04 LTS version. I tried older drivers like 430, 450, 460, 465 and 470, and no matter what I did I could not get the GPU to function correctly through XCP-NG.

I know there's no hardware problem: I have pretty much given up, and the card is currently doing hardware transcoding just fine on an Ubuntu 20.04 install on bare metal.

I'd be willing to give XCP-NG one last try if anyone can give me any suggestions as to what could be wrong.

I'm not sure if it's the same thing, but I tried both passing through the PCI devices in the CLI and selecting the Nvidia GPU from the list in XOA after I had disabled the GPU from the hypervisor. Neither method worked. I also tried assigning the GPU through the XCP-NG Center Windows program.

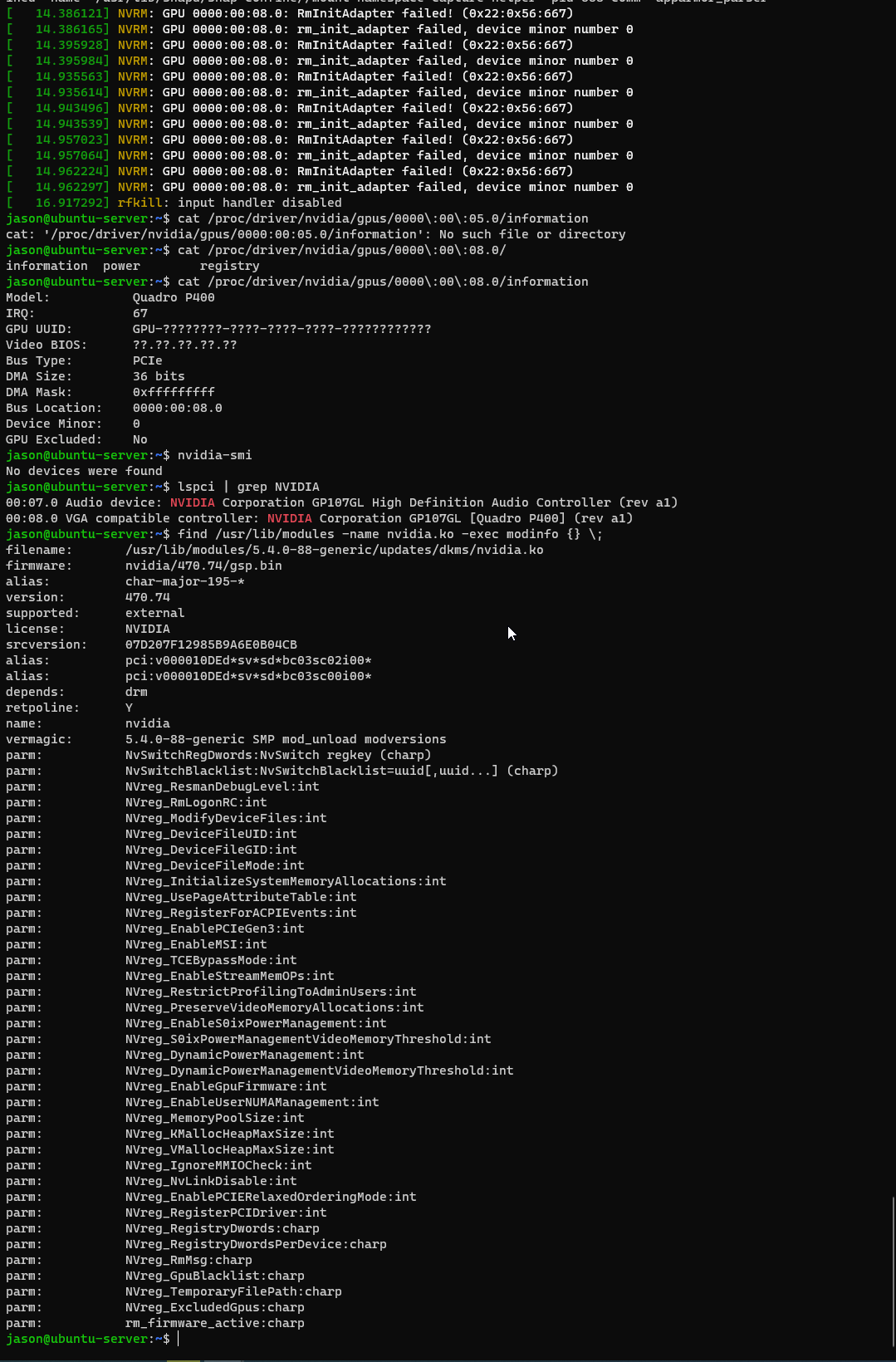

This screenshot shows a few different commands. You can see the error messages in dmesg, and the GPU UUID and Video BIOS appear blank when inspecting the device information under /proc.
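For anyone who wants to check the same /proc fields without a screenshot, here is a minimal Python sketch. It assumes the NVIDIA driver exposes one `information` file per GPU under `/proc/driver/nvidia/gpus/<PCI address>/` containing `Key: value` lines. The helper names (`parse_information`, `missing_fields`, `check_all`) are made up for illustration, and treating a value consisting only of `?` characters as missing is an assumption about how an uninitialised card may be reported.

```python
from pathlib import Path

# Fields the post above reports as blank.
FIELDS_TO_CHECK = ("GPU UUID", "Video BIOS")


def parse_information(text):
    """Turn 'Key: value' lines into a dict, keeping empty values."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


def missing_fields(info, fields=FIELDS_TO_CHECK):
    """Return fields that are absent, empty, or only '?' placeholders."""
    missing = []
    for name in fields:
        value = info.get(name, "")
        # Drop the 'GPU-' prefix and separators, then strip '?' placeholders.
        remainder = value.replace("GPU-", "").replace("-", "").replace(".", "").strip("?")
        if not remainder:
            missing.append(name)
    return missing


def check_all(root="/proc/driver/nvidia/gpus"):
    """Map each GPU directory name (its PCI address) to its missing fields."""
    results = {}
    base = Path(root)
    if not base.is_dir():
        return results  # driver not loaded, or not a Linux host
    for gpu_dir in sorted(base.iterdir()):
        info_file = gpu_dir / "information"
        if info_file.is_file():
            results[gpu_dir.name] = missing_fields(parse_information(info_file.read_text()))
    return results


if __name__ == "__main__":
    for address, missing in check_all().items():
        print(address, "missing:", ", ".join(missing) or "nothing")
```

Run inside the VM, this prints one line per GPU the driver knows about. An empty result just means the directory does not exist, which would itself suggest the kernel module is not loaded.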

-

@pyroteq I'm having the same issue here: the GPU is in passthrough mode and seen by the VM. The Nvidia drivers also install successfully, but on Ubuntu 20.04 nvidia-smi still says 'no devices were found'.

It works flawlessly on Proxmox, but every time I want to get back to XCP-NG, this one thing is holding me back from fully migrating. Either something is going wrong in the process or I am just too stupid to see the solution...

-

Could anyone try with real server hardware (i.e. with compliant IOMMU/BIOS/firmware and such)?

I don't have any Quadro card in my lab to do more tests.

-

@olivierlambert I am currently doing this on a Dell R620.

Is this what you mean by 'real server hardware'?

I can share the logs I am currently getting, if you want.

The GPU is currently passed through to my Ubuntu machine. It is visible in the 'lspci' output, and the installed driver shows up under 'lspci -v', but when I execute nvidia-smi it says 'no devices were found'. Maybe the card isn't hidden or something?

-

Yes, relatively old, but it should work on an R620 I suppose. What's your CPU?

-

@olivierlambert I have an E5-2660v2 CPU in it.

Here are some logs:

(All executed on the Ubuntu 20.04 VM)

Output of the lspci command:

    root@plexmediaserver:/home/thefrisianclause# lspci
    00:00.0 Host bridge: Intel Corporation 440FX - 82441FX PMC [Natoma] (rev 02)
    00:01.0 ISA bridge: Intel Corporation 82371SB PIIX3 ISA [Natoma/Triton II]
    00:01.1 IDE interface: Intel Corporation 82371SB PIIX3 IDE [Natoma/Triton II]
    00:01.2 USB controller: Intel Corporation 82371SB PIIX3 USB [Natoma/Triton II] (rev 01)
    00:01.3 Bridge: Intel Corporation 82371AB/EB/MB PIIX4 ACPI (rev 01)
    00:02.0 VGA compatible controller: Device 1234:1111
    00:03.0 SCSI storage controller: XenSource, Inc. Xen Platform Device (rev 02)
    00:05.0 Audio device: NVIDIA Corporation GP107GL High Definition Audio Controller (rev a1)
    00:06.0 VGA compatible controller: NVIDIA Corporation GP107GL [Quadro P400] (rev a1)

Output of the lspci -v command:

    root@plexmediaserver:/home/thefrisianclause# lspci
    00:00.0 Host bridge: Intel Corporation 440FX - 82441FX PMC [Natoma] (rev 02)
    00:01.0 ISA bridge: Intel Corporation 82371SB PIIX3 ISA [Natoma/Triton II]
    00:01.1 IDE interface: Intel Corporation 82371SB PIIX3 IDE [Natoma/Triton II]
    00:01.2 USB controller: Intel Corporation 82371SB PIIX3 USB [Natoma/Triton II] (rev 01)
    00:01.3 Bridge: Intel Corporation 82371AB/EB/MB PIIX4 ACPI (rev 01)
    00:02.0 VGA compatible controller: Device 1234:1111
    00:03.0 SCSI storage controller: XenSource, Inc. Xen Platform Device (rev 02)
    00:05.0 Audio device: NVIDIA Corporation GP107GL High Definition Audio Controller (rev a1)
    00:06.0 VGA compatible controller: NVIDIA Corporation GP107GL [Quadro P400] (rev a1)

Output of nvidia-smi:

    root@plexmediaserver:/home/thefrisianclause# nvidia-smi
    No devices were found

Output of dmesg | grep NVRM:

    root@plexmediaserver:/home/thefrisianclause# dmesg | grep NVRM
    [ 4.311552] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 470.82.00 Thu Oct 14 10:24:40 UTC 2021
    [ 10.215141] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 10.215199] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 10.235168] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 10.235232] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 10.862962] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 10.863028] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 10.878876] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 10.878936] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 10.887869] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 10.887926] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 10.896816] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 10.897793] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 16.687238] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 16.687340] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 16.695624] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 16.695700] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 726.217617] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 726.217652] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 726.224431] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 726.224463] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 4202.108878] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 4202.108980] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 4202.116910] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 4202.117008] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0

I hope this can help us resolve this issue....
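Since several people in this thread are comparing NVRM output, a small script that condenses it may make the logs easier to compare. This is a minimal Python sketch that only relies on the line format visible in the logs posted here. The function name `summarize` and the two regular expressions are illustrative, and it makes no attempt to interpret what the code in parentheses means.

```python
import re
from collections import Counter

# Matches lines such as:
#   [ 10.215141] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x22:0x56:667)
INIT_FAILURE = re.compile(
    r"\[\s*(?P<time>\d+\.\d+)\]\s+NVRM: GPU (?P<bdf>[0-9a-fA-F:.]+): "
    r"RmInitAdapter failed! \((?P<code>[^)]+)\)"
)

# Matches the module banner, e.g. "... Kernel Module 470.82.00 Thu Oct ..."
DRIVER_VERSION = re.compile(
    r"NVRM: loading NVIDIA .*Kernel Module\s+(?P<version>\d+(?:\.\d+)+)"
)


def summarize(dmesg_text):
    """Return (driver version or None, Counter keyed by (PCI address, code))."""
    version = None
    failures = Counter()
    for line in dmesg_text.splitlines():
        banner = DRIVER_VERSION.search(line)
        if banner:
            version = banner.group("version")
        failure = INIT_FAILURE.search(line)
        if failure:
            failures[(failure.group("bdf"), failure.group("code"))] += 1
    return version, failures
```

For example, save the output of `dmesg | grep NVRM` to a file and call `summarize(open("nvrm.txt").read())` (the file name is hypothetical). Each key in the returned counter is a (PCI address, code) pair, so logs from different driver versions or hosts can be compared at a glance.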

-

@thefrisianclause said in Nvidia Quadro P400 not working on Ubuntu server via GPU/PCIe passthrough:

rm_init_adapter failed, device minor number 0

lol funny story: https://forum.proxmox.com/threads/gpu-passthrough-woes-rm_init_adapter-failed-device-minor-number-0.70205/

-

@olivierlambert Yes indeed and it got no further reactions...

For Proxmox I followed this guide: https://www.reddit.com/r/homelab/comments/b5xpua/the_ultimate_beginners_guide_to_gpu_passthrough/

It worked very solidly out of the box. Of course, this can be different for XCP-NG.

EDIT: I also tried different Nvidia drivers with no luck...

-

Nice to see a bit of action in this thread after a few weeks. It seems both of us are using Ivy Bridge CPUs. I wonder if the issue is related to our CPUs or chipsets?

I notice in the Reddit thread you posted it mentions something about the E3-12xx CPUs:

IMPORTANT ADDITIONAL COMMANDS

You might need to add additional commands to this line, if the passthrough ends up failing. For example, if you're using a similar CPU as I am (Xeon E3-12xx series), which has horrible IOMMU grouping capabilities, and/or you are trying to passthrough a single GPU.

These additional commands essentially tell Proxmox not to utilize the GPUs present for itself, as well as helping to split each PCI device into its own IOMMU group. This is important because, if you try to use a GPU in say, IOMMU group 1, and group 1 also has your CPU grouped together for example, then your GPU passthrough will fail.

Is it possible that same GRUB config is required here? Sorry, I'm probably not much help when it comes to hardware troubleshooting on Linux.

    GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_acs_override=downstream,multifunction nofb nomodeset video=vesafb:off,efifb:off"

I don't have the faintest idea of what any of this does or if it could help.
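The IOMMU grouping the quoted guide talks about can be inspected directly on a Linux/KVM host such as Proxmox. Below is a minimal Python sketch, assuming the standard sysfs layout `/sys/kernel/iommu_groups/<group>/devices/<PCI address>`. The function names are illustrative. On a Xen host like XCP-NG the hypervisor owns the IOMMU, so dom0 will most likely show no groups here, and this check says nothing about the Xen side.

```python
from pathlib import Path


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group number: [PCI addresses]} read from the sysfs tree."""
    groups = {}
    base = Path(root)
    if not base.is_dir():
        return groups  # IOMMU disabled, or not exposed to this kernel
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if group_dir.name.isdigit() and devices_dir.is_dir():
            groups[int(group_dir.name)] = sorted(d.name for d in devices_dir.iterdir())
    return dict(sorted(groups.items()))


def shared_with(groups, address):
    """List the other devices in the same group as `address` (None if unknown)."""
    for members in groups.values():
        if address in members:
            return [m for m in members if m != address]
    return None


if __name__ == "__main__":
    for number, members in iommu_groups().items():
        print(f"group {number}: {' '.join(members)}")
```

If `shared_with` returns anything besides the GPU's own audio function, the card shares its group with other devices, which is the situation the guide's ACS override parameter is meant to work around.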

-

Yeah, I have been having this problem for almost 2 years now, as I have been trying on and off to migrate to XCP-NG to see if I can get this to work. So far I couldn't, and I was pushed back to Proxmox.

The steps you are referring to are done on the Proxmox host itself. In this case, though, the GPU is actually seen by the VM, so the passthrough steps provided in this documentation: https://xcp-ng.org/docs/compute.html#pci-passthrough are working. Also, the part of the guide you are referring to is for E3-12xx CPUs; mine is an E5, so I don't have to do these steps and didn't do them on Proxmox.

But when you install the Nvidia drivers on, in my case, an Ubuntu 20.04 VM, the installation succeeds, yet for some reason nvidia-smi says 'no devices were found', even though the VM sees the GPU (Quadro P400) and confirms that the Nvidia drivers have been installed.

So I am a bit clueless as to where this error could be. I have searched a lot of forums about this particular problem, but without success. Some even say that the card could be 'dead', yet it works on Proxmox and even VMware ESXi.

-

I truly hope the community can assist here; I have no idea either.

-

Well community bring it on!

Curious what you guys think about this and how to resolve this issue...

-

Okay, so I did some testing/troubleshooting again, and it did not change anything. I tried multiple Nvidia drivers but no luck: still the nvidia-smi error 'no devices were found'.

And still this error occurs:

    [ 3.244947] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 495.29.05 Thu Sep 30 16:00:29 UTC 2021
    [ 4.061597] NVRM: GPU 0000:00:09.0: RmInitAdapter failed! (0x22:0x56:751)
    [ 4.064473] NVRM: GPU 0000:00:09.0: rm_init_adapter failed, device minor number 0
    [ 4.074551] NVRM: GPU 0000:00:09.0: RmInitAdapter failed! (0x22:0x56:751)
    [ 4.074650] NVRM: GPU 0000:00:09.0: rm_init_adapter failed, device minor number 0
    [ 28.791682] NVRM: GPU 0000:00:09.0: RmInitAdapter failed! (0x22:0x56:751)
    [ 28.791750] NVRM: GPU 0000:00:09.0: rm_init_adapter failed, device minor number 0
    [ 28.799413] NVRM: GPU 0000:00:09.0: RmInitAdapter failed! (0x22:0x56:751)
    [ 28.799476] NVRM: GPU 0000:00:09.0: rm_init_adapter failed, device minor number 0
    [ 37.904451] NVRM: GPU 0000:00:09.0: RmInitAdapter failed! (0x22:0x56:751)
    [ 37.904532] NVRM: GPU 0000:00:09.0: rm_init_adapter failed, device minor number 0
    [ 37.913443] NVRM: GPU 0000:00:09.0: RmInitAdapter failed! (0x22:0x56:751)
    [ 37.913527] NVRM: GPU 0000:00:09.0: rm_init_adapter failed, device minor number 0

I have no idea what this is or how to resolve it. I only have this issue with XCP-NG. ESXi and Proxmox work just fine without any errors. Could it be a kernel parameter which I am missing in the hypervisor?
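On the kernel-parameter question, one thing that can be checked on the XCP-NG host is whether the dom0 kernel command line carries a `xen-pciback.hide=(...)` entry for the GPU. As far as I know, that is how the XCP-NG PCI passthrough documentation hides a device from dom0. Below is a minimal Python sketch, assuming it is run in dom0 and that `/proc/cmdline` reflects the dom0 boot parameters. The helper names are hypothetical. Since the guest already sees the card, this only rules out one possible misconfiguration and is not a known fix for the RmInitAdapter failure.

```python
import re
from pathlib import Path


def parse_cmdline(cmdline):
    """Split a kernel command line into {parameter: value or None}.

    Splits on whitespace only, so quoted values containing spaces
    are not handled.
    """
    params = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params[key] = value if sep else None
    return params


def hidden_devices(params):
    """Extract PCI addresses from a xen-pciback.hide=(addr)(addr) value."""
    raw = params.get("xen-pciback.hide") or ""
    return re.findall(r"\(([^)]+)\)", raw)


if __name__ == "__main__":
    cmdline_file = Path("/proc/cmdline")
    if cmdline_file.is_file():
        params = parse_cmdline(cmdline_file.read_text())
        print("hidden from dom0:", hidden_devices(params) or "none")
```

If the GPU and its audio function (two PCI addresses on the host) are both listed, the hiding step took effect at boot. An empty list would point back at the host-side passthrough setup rather than at the guest driver.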

-

CentOS 8/Rocky Linux 8.5 apparently have the same issue. I believe it has something to do with how XCP-NG handles the passthrough, for some reason...

-

I'm having the exact same problem on my R630 with an E5-2620V3. Tested on Ubuntu 20.04. I tried the latest drivers (470) as well as the future build (495), with the same result: no device found when running nvidia-smi. The card has been confirmed working on a bare-metal Windows machine as well as inside a Proxmox VM. Happy to do anything needed to help get this figured out.

-

Same error message?

-

@olivierlambert yes I'm getting this

    [ 5.091321] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 470.82.00 Thu Oct 14 10:24:40 UTC 2021
    [ 46.870183] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 46.870225] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 46.877175] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 46.877214] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 53.426603] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 53.426701] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 53.433752] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 53.433830] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 412.663599] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 412.663716] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 412.671452] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 412.671554] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0

-

Okay thanks for the feedback.

-

Well, I went back to Proxmox now and it works just fine on an Ubuntu VM. The passthrough works as expected... So I have no idea what is going on within XCP-NG, as it should work there as well.

-

At this point, your best option is to ask on the Xen devel mailing list. I have the feeling it's a very low-level problem in Xen itself.

In the meantime, I'll try to get my hands on a P400 for our lab.