Suggestion for better GPU assignment to VMs

-

@olivierlambert With the RTX 3060 I just used the GPU tab in the VM's Advanced options and it just worked. For the K80 I followed this part of the documentation:

https://xcp-ng.org/docs/compute.html#pci-passthrough

-

I'm not sure where you used that; can you post a screenshot?

Also, for the K80, you passed through the whole PCIe device then?

-

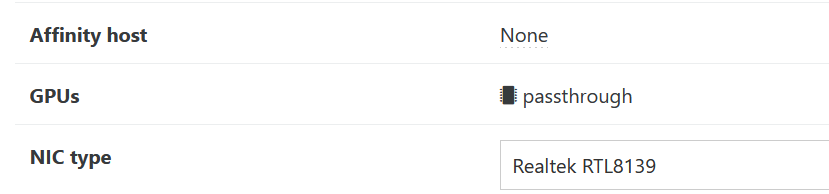

@olivierlambert For the RTX 3060 I just used this tab.

For the K80 I used some of the basic commands from when I used KVM, like `lspci` and `lspci -nnk`, to get the device IDs, and then blacklisted the devices from dom0.
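A minimal Python sketch of that manual step, assuming the `lspci -nnk` output has been saved as text: it picks out the devices matching a vendor:device ID and builds the `xen-pciback.hide=(ADDR)(ADDR)` argument that the XCP-ng PCI passthrough page linked above hands to `/opt/xensource/libexec/xen-cmdline --set-dom0` (check that page for the exact command). The sample output, the addresses, the `10de:102d` ID for the K80, and the helper names `parse_lspci` and `pciback_hide_arg` are all hypothetical; use whatever IDs and addresses `lspci` shows on your own host.

```python
import re
from dataclasses import dataclass
from typing import Optional

# One device line of `lspci -nn` / `lspci -nnk`, e.g.
# "04:00.0 3D controller [0302]: NVIDIA Corporation GK210GL [Tesla K80] [10de:102d] (rev a1)"
# The PCI domain prefix ("0000:") is optional; lspci only prints it with -D.
DEVICE_LINE = re.compile(
    r"^(?:(?P<domain>[0-9a-f]{4}):)?"
    r"(?P<bdf>[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]) "
    r"(?P<desc>.+?) \[(?P<cls>[0-9a-f]{4})\]: "
    r"(?P<name>.+) \[(?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})\]"
    r"(?: \(rev [0-9a-f]+\))?$"
)
# Indented detail line added by -k.
DRIVER_LINE = re.compile(r"^\s+Kernel driver in use: (?P<driver>\S+)")


@dataclass
class PciDevice:
    address: str                  # domain:bus:slot.function, e.g. "0000:04:00.0"
    pci_id: str                   # vendor:device, e.g. "10de:102d"
    name: str
    driver: Optional[str] = None  # from "Kernel driver in use", if present


def parse_lspci(text: str) -> list[PciDevice]:
    devices: list[PciDevice] = []
    for line in text.splitlines():
        m = DEVICE_LINE.match(line)
        if m:
            domain = m.group("domain") or "0000"
            devices.append(PciDevice(
                address=f"{domain}:{m.group('bdf')}",
                pci_id=f"{m.group('vendor')}:{m.group('device')}",
                name=m.group("name"),
            ))
            continue
        d = DRIVER_LINE.match(line)
        if d and devices:
            # Detail lines belong to the most recent device line.
            devices[-1].driver = d.group("driver")
    return devices


def pciback_hide_arg(devices: list[PciDevice], wanted_ids: set[str]) -> str:
    # Every matching function is listed, e.g. both GPUs of a dual-GPU board.
    addrs = [dev.address for dev in devices if dev.pci_id in wanted_ids]
    return "xen-pciback.hide=" + "".join(f"({a})" for a in addrs)


# Hypothetical sample output: a PCIe switch plus two K80 GPUs.
SAMPLE = """\
03:00.0 PCI bridge [0604]: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch [10b5:8747] (rev ca)
\tKernel driver in use: pcieport
04:00.0 3D controller [0302]: NVIDIA Corporation GK210GL [Tesla K80] [10de:102d] (rev a1)
\tKernel driver in use: pciback
05:00.0 3D controller [0302]: NVIDIA Corporation GK210GL [Tesla K80] [10de:102d] (rev a1)
\tKernel driver in use: pciback
"""

if __name__ == "__main__":
    print(pciback_hide_arg(parse_lspci(SAMPLE), {"10de:102d"}))
```

Filtering by vendor:device ID rather than by address means the bridge of the PCIe switch is left to dom0 while both GPU functions are hidden.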

-

I have zero recollection of how we managed to display GPUs in the Advanced view, or which command we relied on to get the list. Nor why the K80 isn't displayed there.

Does it ring a bell for @pdonias or @Rajaa-BARHTAOUI?

-

@olivierlambert No, the K80 does show up, but it passes through the whole PCIe device. I just passed the two GPU cores on the K80 through to two separate VMs. I think the K80 has a PCIe switch or something like that.

-

@brodiecyber Honestly, when I first saw it I was shocked, as I was under the impression that to do single-device passthrough you had to do it manually.

-

@olivierlambert Hello, any news on how GPU pass-through was incorporated seemingly under the radar?

-

I pinged @Rajaa-BARHTAOUI and @pdonias, so I'm waiting for them to answer.

-

So far I am using K80, P100 and V100 GPUs only on oVirt.

Those cards actually have no "error 43" issues with the Nvidia drivers, because they are not "consumer" cards and have always been permitted to run inside VMs via pass-through. I believe Nvidia has also relaxed the rules in more recent drivers, so even GTX/RTX cards might work these days (I haven't tried this again yet). On KVM I used to need special clauses in the KVM config file to trick the Nvidia driver into not throwing error 43.

One thing to note about the K80 is that it has been deprecated recently, and anything newer than CUDA 11.4 (and the matching driver) won't support it any more; I found out the hard way just by doing a "yum update" on a CentOS 7 base.

Since the K80 and other Tesla-type GPUs don't have a video port, you'll have to do remote gaming, which can be quite a complex beast. For a demo, I ran the Singularity benchmark from Unigine (on Linux) with VirtualGL on a V100 in a data center halfway across the country, displaying it in game mode on an iGPU-only ultrabook. Unfortunately, most colleagues didn't even understand what was going on there, or how it demonstrated both the feasibility and the limitations of cloud gaming (the graphics performance was impressive, but the latencies were still terrible).

-

@olivierlambert In the VM/Advanced tab, we display the list of vGPUs of the HVM VM; maybe that's why the K80 is not displayed. Should we change "GPUs" to "vGPUs" in the interface?

-

@olivierlambert Thanks for the reply, but would it really be necessary to change it? Both GPUs, the K80 and the RTX 3060, are passed through as whole devices. The K80 DOES SHOW UP under the GPU tab; my issue was that I can't pass through the individual GPU cores of the K80 via XO. It only allows passing through the whole PCIe card, meaning the whole board can only be passed through to a single VM. If you do the passthrough manually, however, you can assign GPU core 1 to one VM and GPU core 2 to another VM at the same time. I thought this could also open the door to pass-through of more kinds of PCIe devices in the future, and maybe even an option for USB in XO.
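The manual per-core split described above can be sketched in Python, assuming (as the thread suggests) that each of the K80's two GPUs shows up as its own PCI address and that both are already hidden from dom0. It emits one `xe vm-param-set other-config:pci=0/ADDR uuid=UUID` command per VM, following the form shown in the XCP-ng PCI passthrough documentation, and refuses to hand the same address to two VMs. The UUIDs, the addresses, and the `assign_gpus` helper are hypothetical.

```python
from collections import Counter


def assign_gpus(assignments: dict[str, list[str]]) -> list[str]:
    """Build one `xe vm-param-set` command per VM.

    `assignments` maps a VM UUID to the PCI addresses
    (domain:bus:slot.function) that VM should receive.
    """
    counts = Counter(addr for addrs in assignments.values() for addr in addrs)
    shared = sorted(addr for addr, n in counts.items() if n > 1)
    if shared:
        # Two VMs cannot use the same passed-through function at once,
        # so this sketch rejects such a plan outright.
        raise ValueError(f"PCI address assigned to more than one VM: {shared}")
    commands = []
    for vm_uuid, addrs in assignments.items():
        pci = ",".join(f"0/{addr}" for addr in addrs)
        commands.append(f"xe vm-param-set other-config:pci={pci} uuid={vm_uuid}")
    return commands


if __name__ == "__main__":
    # Hypothetical UUIDs; the two addresses stand for the two GPUs of one K80.
    plan = {
        "VM-A-UUID": ["0000:04:00.0"],
        "VM-B-UUID": ["0000:05:00.0"],
    }
    for cmd in assign_gpus(plan):
        print(cmd)
```

Keeping the plan as a single mapping makes the one-GPU-per-VM rule easy to check before anything is written to the pool.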

-

@Rajaa-BARHTAOUI Thanks for the reply.

-

This post is deleted!

-

@rajaa-b Yes?

-

@brodiecyber I apologize for leaving the above comment by mistake.

-

So I got a GTX 1080 Ti passed through to a Windows 10 VM, with CUDA 11.6 working, on a Haswell Xeon E5-2696 v3 workstation.

I had to do it the very manual way, as described here, because the nice GUI in XOA never worked for me (no GPU was offered for pass-through); it's one of the few areas where oVirt is actually better so far.

That document states "WARNING Due to a proprietary piece of code in XenServer, XCP-ng doesn't have (yet) support for NVIDIA vGPUs". I have some inkling as to what that refers to, but I don't know if the situation is much better for AMD or Intel GPUs.

For CUDA compute workloads there is essentially no restriction any longer: Nvidia stopped boycotting the use of "consumer" GPUs inside VMs, and the famous "error 43" seems to be a thing of the past.

For gaming or 3D support inside apps, you need a solution somewhat similar to how dGPUs operate in many notebooks. There, the Nvidia dGPU's output isn't actually connected to any physical output port; instead, the iGPU mirrors the dGPU's output via a screen-copy operation. That seems to be both cheaper and more energy efficient than physically switching the display output via extra hardware, and it allows the dGPU in a notebook to be put completely to sleep during 2D-only workloads.

On Linux there is a workaround using VirtualGL/TigerVNC, which works quite well with native Linux games or Steam Proton; on Windows I've tried Steam Remote Play. The problem is that it uses the XOA console to determine the screen resolution, which limits it to 1024x768.

In short: the type of dGPU partitioning support that VDI (virtual desktop infrastructure) oriented Quadro GPUs offer requires not just the hardware (which seems to be present in all consumer and Tesla chips, not even fused off), but also the proper drivers. This functionality (as opposed to CUDA support) hasn't been made widely available by Nvidia, probably because it serves an extremely high-priced, secure CAD market.

Cloud gaming services are others who might use this, and who knows what Nvidia might enable outside of such contracts, but I'd be rather sceptical that you'd get much gaming use out of your K80. That's quite a shame these days, because in terms of hardware it's quite an FP32 beast and at least not artificially rate-limited at FP64.

-

@abufrejoval Hello. With regard to the warning about proprietary code in XenServer, I believe that only applies if you want to use vGPU on NVIDIA cards. What I'm talking about is pure PCIe pass-through. What I'm focused on is better support for PCIe pass-through of, say, a USB or network card, letting it be easily stubbed and then assigned to VMs in XO. With regard to the K80, I'm using it not just for gaming but also for GPU-accelerated containers and Kubernetes.

-

@rajaa-b No problem.