Xen Orchestra Quick Deploy Fails

-

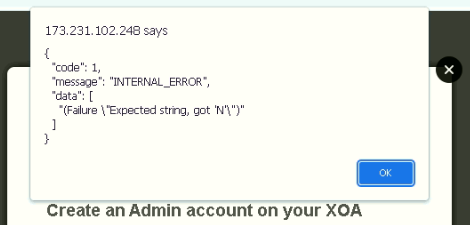

I'm getting an error when attempting to quick deploy XOA on a new XCP-ng server install.

When I try to select a storage pool, the drop-down menu doesn't show any options. My default SR is set to the same drive that I installed to (mirrored RAID on a pair of SSDs).

If I click through, it lets me continue with the process, but then it errors out. See attached screenshot.

Any help would be appreciated.

Edit to add: After the first time experiencing this error I did a clean reinstall of the server. The issue repeated in exactly the same way.

-

Have you reviewed this thread?

-

@Danp Thank you, that's very helpful. It appears to be the same issue. xe sr-list results look exactly the same. My lsblk results look like this:

```
NAME          MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
sdd             8:48   0   7.3T  0 disk
├─sdd2          8:50   0   7.3T  0 part
└─sdd1          8:49   0     2G  0 part
sdb             8:16   0 447.1G  0 disk
└─md127         9:127  0 447.1G  0 raid1
  ├─md127p5   259:4    0     4G  0 md    /var/log
  ├─md127p3   259:2    0 405.6G  0 md
  ├─md127p1   259:0    0    18G  0 md    /
  ├─md127p6   259:5    0     1G  0 md    [SWAP]
  ├─md127p4   259:3    0   512M  0 md    /boot/efi
  └─md127p2   259:1    0    18G  0 md
sde             8:64   0   7.3T  0 disk
├─sde2          8:66   0   7.3T  0 part
└─sde1          8:65   0     2G  0 part
sdc             8:32   0 447.1G  0 disk
└─md127         9:127  0 447.1G  0 raid1
  ├─md127p5   259:4    0     4G  0 md    /var/log
  ├─md127p3   259:2    0 405.6G  0 md
  ├─md127p1   259:0    0    18G  0 md    /
  ├─md127p6   259:5    0     1G  0 md    [SWAP]
  ├─md127p4   259:3    0   512M  0 md    /boot/efi
  └─md127p2   259:1    0    18G  0 md
sda             8:0    0 465.8G  0 disk
└─sda1          8:1    0 465.8G  0 part
```

Based on what I saw in that linked thread, I tried issuing the following command:

```
xe sr-create name-label="Local" type=ext device-config-device=/dev/md127 share=false content-type=user
```

To which the response was:

```
The SR operation cannot be performed because a device underlying the SR is in use by the server.
```

Any suggestion as to what I've done wrong, or what my next step should be?
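The lsblk output above helps explain this message. /dev/md127 is the whole RAID1 array, and its partitions hold /, /var/log, /boot/efi and swap, so the host is already using it. Below is a minimal Python sketch that parses that tree and flags a device as in use when the device itself, or anything stacked on it, is mounted. It makes several assumptions: the default lsblk columns shown above (NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT), tree prefixes that are two columns per level, and mountpoints with no spaces. The helper names (`parse_lsblk`, `in_use`, `walk`) are hypothetical and are not part of any XCP-ng tool.

```python
from dataclasses import dataclass, field

# Characters lsblk uses to draw the tree (UTF-8 and ASCII styles); each level is 2 columns wide.
TREE_CHARS = " │├└─|`-"


@dataclass
class Dev:
    name: str
    size: str
    dtype: str
    mountpoint: str
    children: list["Dev"] = field(default_factory=list)


def parse_lsblk(text: str) -> list[Dev]:
    """Parse default `lsblk` tree output (NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT)."""
    roots: list[Dev] = []
    stack: list[tuple[int, Dev]] = []  # (depth, device) along the current branch
    for line in text.splitlines()[1:]:  # skip the header row
        if not line.strip():
            continue
        name_part = line.lstrip(TREE_CHARS)
        depth = (len(line) - len(name_part)) // 2
        cols = name_part.split()  # assumes mountpoints contain no spaces
        mount = cols[6] if len(cols) > 6 else ""
        dev = Dev(name=cols[0], size=cols[3], dtype=cols[5], mountpoint=mount)
        # Pop back up the tree until the top of the stack is this device's parent.
        while stack and stack[-1][0] >= depth:
            stack.pop()
        (stack[-1][1].children if stack else roots).append(dev)
        stack.append((depth, dev))
    return roots


def in_use(dev: Dev) -> bool:
    """Busy if the device, or anything stacked on top of it, is mounted (swap included)."""
    return bool(dev.mountpoint) or any(in_use(child) for child in dev.children)


def walk(devs: list[Dev]):
    """Yield every device in depth-first order."""
    for dev in devs:
        yield dev
        yield from walk(dev.children)


# Excerpt of the lsblk output posted above (one RAID member plus sda).
SAMPLE = """\
NAME          MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
sdb             8:16   0 447.1G  0 disk
└─md127         9:127  0 447.1G  0 raid1
  ├─md127p5   259:4    0     4G  0 md    /var/log
  ├─md127p3   259:2    0 405.6G  0 md
  ├─md127p1   259:0    0    18G  0 md    /
  ├─md127p6   259:5    0     1G  0 md    [SWAP]
  ├─md127p4   259:3    0   512M  0 md    /boot/efi
  └─md127p2   259:1    0    18G  0 md
sda             8:0    0 465.8G  0 disk
└─sda1          8:1    0 465.8G  0 part
"""

if __name__ == "__main__":
    for dev in walk(parse_lsblk(SAMPLE)):
        state = "in use" if in_use(dev) else "not mounted"
        print(f"{dev.name:10} {dev.size:>7} {state}")
```

"Not mounted" does not mean "safe to use". md127p2 is unmounted, but on the default XCP-ng layout I believe it is the 18G backup copy of the root partition. That leaves the 405.6G md127p3 as the partition intended for local storage.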

-

@peterbrunton said in Xen Orchestra Quick Deploy Fails:

md127

Pretty sure you should use md127p3 instead.

-

@Danp Good catch, can't believe I missed that.

Unfortunately, that produced a new error:

```
Error code: SR_BACKEND_FAILURE_77
Error parameters: , Logical Volume group creation failed,
```

From some googling around, it appears the issue is that the disks still have a zfs_member tag on them. lsblk -f produces this:

```
NAME          FSTYPE   LABEL         UUID                                 MOUNTPOINT
sdd           zfs_memb
├─sdd2        zfs_memb data          305869021769108511
└─sdd1        zfs_memb
sdb           linux_ra localhost:127 1d7d1927-dfdb-c8a8-4af1-b25c2b355a7a
└─md127       zfs_memb boot-pool     17799519329276801846
  ├─md127p5   ext3     logs-efkbam   0deeb614-6788-45f9-92b7-0f6acc05da6d /var/log
  ├─md127p3   zfs_memb boot-pool     17799519329276801846
  ├─md127p1   ext3     root-efkbam   62e0f462-29f8-4c2a-8df7-bed45c144a4a /
  ├─md127p6   swap     swap-efkbam   26a6ce99-d4fd-43d1-8a27-79bddafb8707 [SWAP]
  ├─md127p4   vfat     BOOT-EFKBAM   0B9B-C4C7                            /boot/efi
  └─md127p2
sde           zfs_memb
├─sde2        zfs_memb data          305869021769108511
└─sde1        zfs_memb
sdc           linux_ra localhost:127 1d7d1927-dfdb-c8a8-4af1-b25c2b355a7a
└─md127       zfs_memb boot-pool     17799519329276801846
  ├─md127p5   ext3     logs-efkbam   0deeb614-6788-45f9-92b7-0f6acc05da6d /var/log
  ├─md127p3   zfs_memb boot-pool     17799519329276801846
  ├─md127p1   ext3     root-efkbam   62e0f462-29f8-4c2a-8df7-bed45c144a4a /
  ├─md127p6   swap     swap-efkbam   26a6ce99-d4fd-43d1-8a27-79bddafb8707 [SWAP]
  ├─md127p4   vfat     BOOT-EFKBAM   0B9B-C4C7                            /boot/efi
  └─md127p2
sda
└─sda1
```

So it appears my next step is sorting that out. Unfortunately, the only way of clearing these tags that I've found so far seems to be wiping the drives completely and starting over. If so, that's going to suck, as I don't have physical access to the machine right now.
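You can re-check which devices still carry the signature, for example after a cleanup attempt, with `lsblk -P -o NAME,FSTYPE`. It prints one line of KEY="value" pairs per device and does not truncate values to the column width, so "zfs_memb" appears in full as "zfs_member". Below is a minimal Python sketch that filters that kind of output. The sample text is illustrative: I reconstructed it by hand from the lsblk -f output above rather than capturing it from the host. The function names are hypothetical.

```python
import shlex


def parse_pairs(output: str) -> list[dict[str, str]]:
    """Turn `lsblk -P` output (one line of KEY="value" pairs per device) into dicts."""
    rows = []
    for line in output.splitlines():
        if line.strip():
            # shlex removes the quotes; split("=", 1) keeps empty values as "".
            rows.append(dict(token.split("=", 1) for token in shlex.split(line)))
    return rows


def zfs_members(rows: list[dict[str, str]]) -> list[str]:
    """Names of devices whose detected filesystem signature is ZFS."""
    return [row["NAME"] for row in rows if row.get("FSTYPE") == "zfs_member"]


# Illustrative input, reconstructed by hand from the `lsblk -f` output above.
SAMPLE = '''\
NAME="sdd" FSTYPE="zfs_member"
NAME="sdd2" FSTYPE="zfs_member"
NAME="sdd1" FSTYPE="zfs_member"
NAME="sdb" FSTYPE="linux_raid_member"
NAME="md127" FSTYPE="zfs_member"
NAME="md127p5" FSTYPE="ext3"
NAME="md127p3" FSTYPE="zfs_member"
NAME="md127p1" FSTYPE="ext3"
NAME="md127p2" FSTYPE=""
NAME="sda" FSTYPE=""
'''

if __name__ == "__main__":
    print(zfs_members(parse_pairs(SAMPLE)))
```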

If anyone has any suggestions for removing errant zfs_member tags (this machine previously hosted a TrueNAS install) without wiping the whole damn machine, I'd be eager to hear them. If not, it'll have to wait until I'm back in the office, I guess.
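Here is one possible reason the tag survives. It is based on my understanding of the ZFS on-disk format, not on anything confirmed in this thread. ZFS keeps four 256 KiB vdev labels on each device: two at the start and two at the end, after rounding the device size down to a multiple of 256 KiB. As far as I know, libblkid, which lsblk relies on for the FSTYPE column, can recognise a zfs_member from the trailing labels alone. The sketch below only does the arithmetic for where those four labels sit. The function name is hypothetical. On the host, you would get the device size in bytes with something like `blockdev --getsize64 /dev/md127p3`.

```python
LABEL_SIZE = 256 * 1024  # each ZFS vdev label occupies 256 KiB
NUM_LABELS = 4           # L0 and L1 at the front, L2 and L3 at the back


def zfs_label_offsets(device_bytes: int) -> list[int]:
    """Byte offsets of ZFS vdev labels L0..L3 on a device of `device_bytes` bytes."""
    # The size is first rounded down to a whole number of 256 KiB labels.
    usable = device_bytes - device_bytes % LABEL_SIZE
    if usable < NUM_LABELS * LABEL_SIZE:
        raise ValueError("device is too small to hold four ZFS labels")
    offsets = []
    for index in range(NUM_LABELS):
        offset = index * LABEL_SIZE
        if index >= NUM_LABELS // 2:
            # The back two labels are packed against the rounded-down end of the device.
            offset += usable - NUM_LABELS * LABEL_SIZE
        offsets.append(offset)
    return offsets


if __name__ == "__main__":
    size = 1 << 30  # hypothetical 1 GiB device; substitute the real size in bytes
    for index, offset in enumerate(zfs_label_offsets(size)):
        print(f"L{index}: byte {offset} ({(size - offset) // 1024} KiB before the end)")
```

If that understanding is right, anything that only overwrites the start of a disk or partition leaves L2 and L3 untouched. That would be consistent with the tag surviving the clean reinstall.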

Thank you again @Danp for your help.