How to kubernetes on xcp-ng (csi?)

-

Update in case someone comes across this post looking for the same answer. I needed a solution sooner rather than later, and so far I am thrilled with TrueNAS Core, a free solution if you've got a box to spare. It has several storage providers that can be used with Kubernetes.

I moved the large drives from my two XCP-ng servers over to TrueNAS.

Working solution: Kubernetes nodes run as VMs on XCP-ng, and the Kubernetes storage provisioner is backed by TrueNAS Core.
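For anyone wondering what a TrueNAS-backed provisioner looks like from the Kubernetes side: a dynamic provisioner is referenced by a StorageClass, and workloads request volumes through a PersistentVolumeClaim. This is a minimal sketch, assuming a CSI driver for TrueNAS is already installed in the cluster; the provisioner name `example.truenas.csi`, the class name `truenas-nfs` and the claim name `demo-data` are hypothetical placeholders, not names any particular driver registers. The script only builds the manifests and prints them as a single JSON `List`.

```python
import json

# Hypothetical names: replace with whatever your TrueNAS CSI driver registers.
PROVISIONER = "example.truenas.csi"
STORAGE_CLASS = "truenas-nfs"


def storage_class(name: str, provisioner: str) -> dict:
    """StorageClass that hands volume creation to the given provisioner."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # Remove the backing volume when the claim is deleted.
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
        "volumeBindingMode": "Immediate",
    }


def claim(name: str, storage_class_name: str, size: str) -> dict:
    """PersistentVolumeClaim that asks the StorageClass for a volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


def manifest() -> dict:
    """Wrap both objects in a v1 List so one `kubectl apply -f -` covers them."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            storage_class(STORAGE_CLASS, PROVISIONER),
            claim("demo-data", STORAGE_CLASS, "10Gi"),
        ],
    }


if __name__ == "__main__":
    print(json.dumps(manifest(), indent=2))
```

Piping the output into `kubectl apply -f -` would create both objects; if the driver works, the claim should end up `Bound` to a volume carved out of the TrueNAS pool.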

-

@olivierlambert said in How to kubernetes on xcp-ng (csi?):

To help k8s integration with XCP-ng/XO we already have plans to write a Node driver for Rancher.

OMG that would be amazing!

-

Stay tuned, but work has started.

-

@olivierlambert Do you have any news regarding an XO CSI storage provider for Kubernetes?

-

Not yet, sadly; the people tasked with it haven't given any news in a while. Internally, we are working on Project Pyrgos to make deploying k8s clusters easy.

-

@olivierlambert Thanks for the prompt reply! Fingers crossed for the Project Pyrgos!

-

@olivierlambert Any update on this and Project Pyrgos?

-

Yes, June's release came with new features, like selecting the Kubernetes version. Before that, we added multiple control planes, static IPs and such.

Take a look at our blog posts to see what's going on. There isn't a new feature every month (yet), but it's moving forward.

-

@olivierlambert OK, I saw there was another blog post about it here: https://xen-orchestra.com/blog/xen-orchestra-5-84/

OK cool, thanks.

-

The next steps take a bit more time because they involve storing the cluster key safely, so that XO can then make basic queries on the cluster (like its current version). That is the first step toward automated node upgrade/replacement.

-

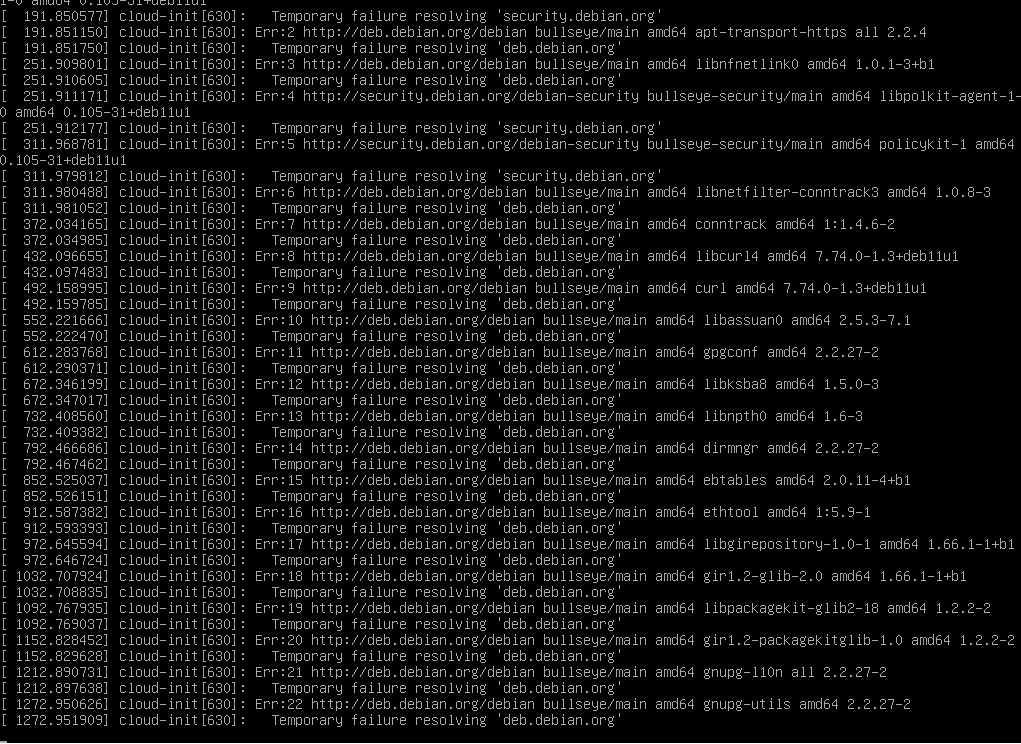

Trying to build a cluster from the hub, but it is giving me "Err: http://deb.debian.org/debian bullseye/main amd64 ... ... Temporary failure resolving deb.debian.org".

Probably because the VM gets a 169.254.0.2 APIPA IP. Setting up a static IP or using DHCP gives me the same issue.

-

Can you try on the latest release channel?

-

@olivierlambert said in How to kubernetes on xcp-ng (csi?):

Can you try on the latest release channel?

Same thing, again an APIPA IP.

I'm trying to log in to the machine. Is it admin/admin?

-

On the console I am getting "Failed to start Execute cloud user/final scripts."

Suddenly it has an IP address, but the installation has failed.

-

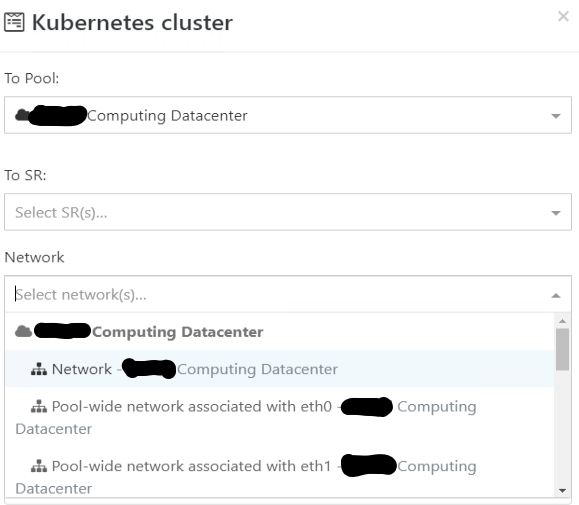

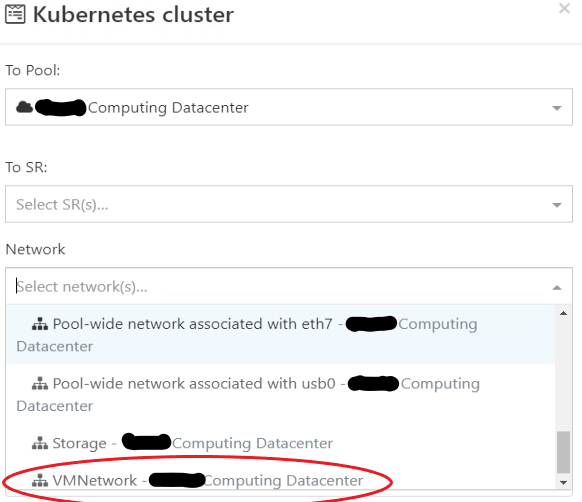

There's no default user/pass for obvious security reasons. Are you sure the VMs are deployed on the right network, where you have a DHCP server (for example)?

Also pinging @shinuza

-

I've been using this with NVMe on 3 Dell 7920 boxen with PCI passthru.

https://github.com/piraeusdatastore/piraeus-operator

It worked well enough that I filled the rest of the NVMe slots to get 7TB per node. I pin each Kubernetes master node to its own physical host; I use three so I can roll out updates and patches. The masters serve the storage to containers, so the workers are basically "storage-less" and can move around. All the networking is 10G with 4 interfaces, so I dedicate one of them as the backend for this.

Just one note on handing devices to the operator: I use raw NVMe disks.

There can't be any partition or PV (LVM physical volume) on the device. I put a PV on it, then remove it so the disk is wiped. The operator then finds the disk usable and initializes it; it tries not to use a disk that already seems to be in use.

I also played a bit with XOSTOR, but on spinning rust. It's really robust with the DRBD backend once you get used to working with it. Figuring out the object relationships may have you drinking more than usual.
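The "no partition or PV on the device" rule can be checked on a node before handing disks to the operator. This is a minimal sketch, assuming util-linux `lsblk` (which supports `--json` output) is available; it only mirrors the rule described in the post and is not the Piraeus operator's own detection logic. Here a disk counts as raw when it has no child devices (partitions, LVM volumes) and an empty FSTYPE column; an LVM physical volume shows up as `LVM2_member`.

```python
import json
import subprocess


def read_lsblk() -> str:
    """Return lsblk's JSON for all block devices (needs util-linux lsblk)."""
    return subprocess.run(
        ["lsblk", "--json", "-o", "NAME,TYPE,FSTYPE"],
        check=True, capture_output=True, text=True,
    ).stdout


def raw_disks(lsblk_json: str) -> list[str]:
    """Names of whole disks with no partitions, holders or signatures."""
    devices = json.loads(lsblk_json)["blockdevices"]
    raw = []
    for dev in devices:
        if dev.get("type") != "disk":
            continue  # skip loop devices, optical drives, etc.
        if dev.get("children"):
            continue  # partitions or LVM volumes sit on top of this disk
        if dev.get("fstype"):
            continue  # a signature such as "LVM2_member" is still present
        raw.append(dev["name"])
    return raw
```

On a node, `print(raw_disks(read_lsblk()))` would list the candidates; a disk that still carries a PV is filtered out until it has been wiped.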

-

@Theoi-Meteoroi said in How to kubernetes on xcp-ng (csi?):

I've been using this with NVMe on 3 Dell 7920 boxen with PCI passthru.

Did you use the built-in Recipes to create the Kubernetes cluster? I tried NVMe, iSCSI, SSD and NFS share storage; same result with all of them.

-

You have a network issue (well, a DNS one) inside your VM. Are you using the right network?
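The 169.254.0.2 address reported earlier is the giveaway: IPv4 link-local (APIPA) addresses come from 169.254.0.0/16 and are self-assigned when no address is obtained from DHCP, so a VM stuck with one typically has no usable gateway or DNS server, which fits the "Temporary failure resolving" error. This is a minimal triage sketch, assuming Python 3 is available inside the VM; the function names and messages are made up for illustration, and `deb.debian.org` is used only because it is the host from the error above.

```python
import ipaddress
import socket
from typing import Callable

# A resolver with the same call shape as socket.getaddrinfo(host, port).
Resolver = Callable[[str, int], list]


def is_apipa(ip: str) -> bool:
    """True for IPv4 link-local (APIPA) addresses, i.e. 169.254.0.0/16."""
    addr = ipaddress.ip_address(ip)
    return addr.version == 4 and addr.is_link_local


def diagnose(ip: str, host: str = "deb.debian.org",
             resolve: Resolver = socket.getaddrinfo) -> str:
    """Rough triage for a VM that cannot reach package mirrors."""
    if is_apipa(ip):
        # Self-assigned address: no DHCP lease was obtained on this network.
        return f"{ip} is link-local: check the VM is on a network with DHCP"
    try:
        resolve(host, 80)
    except socket.gaierror as exc:
        # The address looks valid, but name resolution fails: check DNS.
        return f"{ip} looks valid, but resolving {host} failed: {exc}"
    return f"{ip} looks valid and {host} resolves"
```

Running `print(diagnose("169.254.0.2"))` points straight at the network selection, which is where this thread ended up.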

-

@olivierlambert said in How to kubernetes on xcp-ng (csi?):

You have a network issue (well, a DNS one) inside your VM. Are you using the right network?

I feel so dumb. When creating a VM, the top network is usually the correct one. For Kubernetes, I had to scroll all the way down and select the correct network.

-

Is it working correctly now?