Intel iGPU passthrough

-

Tried Docker/Kubernetes and Manjaro (just to switch to a later kernel).

Changed it to run as root.

Changed the permissions on the /dev/dri to 777.

It's just weird, as it clearly sees the device.

Actually got it to respond with FFmpeg:

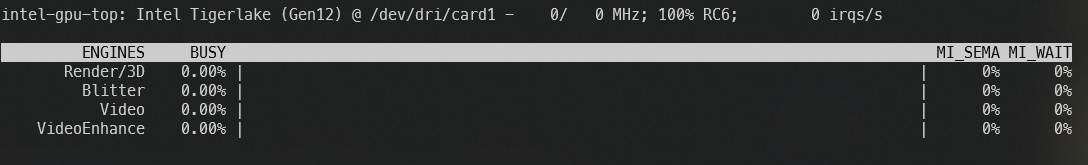

It gives an error and the GPU stays in that state, so it hangs, which I guess is what happens to Plex too.
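For anyone wanting to reproduce that kind of FFmpeg test, here is a minimal sketch. It assumes the iGPU shows up as /dev/dri/renderD128 and that the VAAPI encoder h264_vaapi is available in the FFmpeg build; the thread does not say which node or encoder was actually used, and the function names are made up for illustration.

```shell
# Sketch only: the node path and the VAAPI encoder are assumptions,
# not details confirmed in this thread.

check_render_node() {
    node="${1:-/dev/dri/renderD128}"
    if [ ! -e "$node" ]; then
        echo "missing: $node"
        return 1
    fi
    if [ ! -r "$node" ] || [ ! -w "$node" ]; then
        echo "no access: $node"
        return 2
    fi
    echo "ok: $node"
}

vaapi_smoke_test() {
    node="${1:-/dev/dri/renderD128}"
    check_render_node "$node" || return $?
    # Encode 5 seconds of a synthetic test pattern and discard the output.
    # timeout stops the run if the GPU hangs instead of returning an error.
    timeout 60 ffmpeg -hide_banner -vaapi_device "$node" \
        -f lavfi -i testsrc=duration=5:size=1280x720:rate=30 \
        -vf 'format=nv12,hwupload' -c:v h264_vaapi -f null -
}
```

Running `vaapi_smoke_test` with no arguments uses the default node. If the GPU hangs the way it is described here, the run should end through `timeout` rather than block the shell.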

-

@bullerwins Why would the driver have to be world-writable (permissions 777)? That seems like a security risk, unless the /dev directory itself isn't. Still, a world-writable driver area seems very strange.
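A quick way to see what the device nodes are actually set to, and whether they are world-writable, could look like the sketch below. It relies on GNU `stat`, and the helper names are hypothetical. The usual alternative to 777 is to add the service user to whichever group owns the node (often `video` or `render`, but that depends on the distribution).

```shell
# Sketch: report owner, group and mode of a path, and flag world-writable ones.
# Uses GNU stat (-c); helper names are made up for illustration.

perm_report() {
    stat -c '%n %U:%G %a' "$1"
}

is_world_writable() {
    mode=$(stat -c '%a' "$1") || return 2
    # The last octal digit holds the permissions for "other"; bit 2 is write.
    other=${mode#"${mode%?}"}
    [ $(( other & 2 )) -ne 0 ]
}
```

For example, `for n in /dev/dri/*; do perm_report "$n"; done` lists every node, and `is_world_writable /dev/dri/renderD128` returns success only if "other" has write access.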

-

It was to rule out any permission problems.

But it seems to be on the IOMMU side of things where it goes wrong.

I've just tried the same on Proxmox and it works right away. (Well, not right away; you need to do some GRUB adjustments and load modules.) Not sure what I can do to fix it. Does the XCP-ng kernel take these IOMMU GRUB settings too?

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt"

and these modules:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

-

@xerxist I honestly do not know, but it seems it cannot hurt to try.
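If someone does try it, a small sketch like the one below can confirm whether the running kernel picked up the parameters and whether the modules are loaded. The helper names are hypothetical. Note the assumption built in here: these settings come from a KVM-style setup (Proxmox), while XCP-ng's dom0 runs under Xen, so they may simply not apply there.

```shell
# Sketch: check kernel command-line parameters and loaded modules.
# Helper names are made up; parameters and modules are the ones quoted above.

has_kernel_param() {
    # $1 = parameter, $2 = command line (defaults to the running kernel's)
    param="$1"
    cmdline="${2:-$(cat /proc/cmdline)}"
    case " $cmdline " in
        *" $param "*) return 0 ;;
    esac
    return 1
}

module_loaded() {
    # /proc/modules starts each line with the module name and a space.
    grep -q "^$1 " /proc/modules 2>/dev/null
}
```

Usage would be along the lines of `has_kernel_param intel_iommu=on && echo present`, and `for m in vfio vfio_iommu_type1 vfio_pci vfio_virqfd; do module_loaded "$m" || echo "$m not loaded"; done`.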

-

Giving this another try

I couldn't find those modules, so this is probably not something in XCP-ng.

Is your VM running in EFI or BIOS?

-

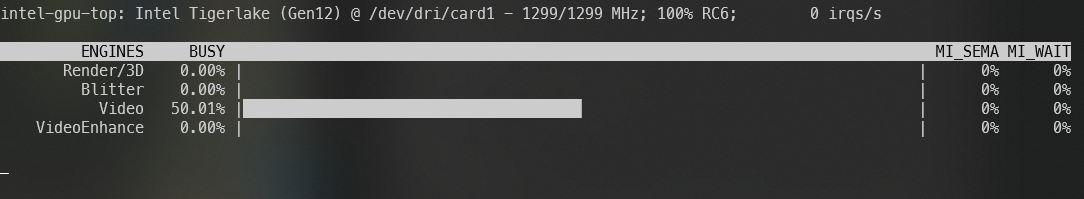

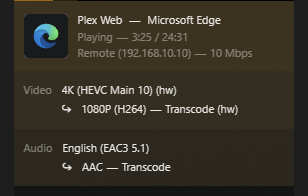

Got it working !!!!!

Changed the VM from UEFI to BIOS and it started working.

Still, though, why would it need to be BIOS instead of UEFI?

Need to do some more testing, as I also disabled something for ASPM in the BIOS of the NUC11, so I'll bring up another VM with UEFI and test it again.

-

@xerxist Good news! Some boot mechanisms will work one way or the other, or in some cases, even either one of them.

It's also important to keep the BIOS up-to-date.

-

Strange, it works on UEFI too now.

The only thing that changed is "ASPM off" in the BIOS of the NUC.

Need to try that on the NUC 13 too.

-

NUC13 is still a no go with ASPM turned off.

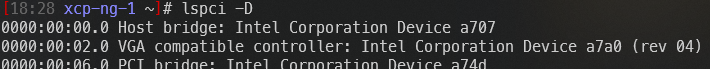

Not sure if the kernel needs to recognize it, as it doesn't give me the type like on the NUC11.

But the NUC11 is confirmed working fine in both BIOS and UEFI mode.
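To double-check what the OS sees after changing ASPM in the firmware, something like this sketch may help. It reads the kernel's PCIe ASPM policy from sysfs and extracts the active entry (the one in square brackets). The helper name is hypothetical, and this shows the kernel's policy rather than the BIOS setting itself, so treat it only as a hint.

```shell
# Sketch: print the active PCIe ASPM policy as reported by the Linux kernel.
# The sysfs file lists all policies and marks the active one with [brackets].

active_aspm_policy() {
    # $1 = policy line (defaults to the sysfs value, if the file exists)
    line="${1:-$(cat /sys/module/pcie_aspm/parameters/policy 2>/dev/null)}"
    printf '%s\n' "$line" | sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}
```

Per-device link state can also be inspected with `lspci -vv | grep -i aspm` (run as root to see the full capability list).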

-

@xerxist In BIOS mode; I would say it was the default for my Ubuntu VM.

-

Seems you would need a kernel newer than 5.15 for this to work on the NUC 12-13.

Not sure what got implemented/fixed there, but it would need to be backported for this to work.

-

@xerxist My Ubuntu 22.04 install came with kernel 5.15. I update it regularly, but it doesn't seem to update the kernel. Newer fresh installs of Ubuntu 22.04 come with a newer kernel, though. I'll check whether the kernel needs to be updated manually.
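Checking the running kernel against the 5.15 threshold mentioned above can be scripted roughly like this, on whichever system is being checked. The helper names are hypothetical, and the comparison relies on GNU `sort -V`.

```shell
# Sketch: compare the running kernel's major.minor against a threshold.
# Helper names are made up; requires GNU sort for -V (version sort).

kernel_major_minor() {
    # "5.15.0-91-generic" -> "5.15"
    printf '%s\n' "${1:-$(uname -r)}" | cut -d. -f1,2
}

version_gt() {
    # Succeeds only when $1 is strictly newer than $2.
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}
```

For example: `version_gt "$(kernel_major_minor)" 5.15 && echo "newer than 5.15"`.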

-

Not in the VM itself.

I even went to kernel 6.6 and tried it there; all give the same issue. I meant something on the hypervisor side, in the kernel. This is 4.x something with a lot of backports.

I'll probably just wait a while before moving fully to XCP-ng.

But it's a very nice system.

-

@xerxist have you tried with the 8.3 beta of XCP-ng? I believe it's got a newer kernel maybe?

-

Yes I'm running 8.3 Beta

-

Is this just the mediated (gvt-g?) device passthrough, so the XCP side/server maintains video but a VM can make use of the resources as well?

I am very interested in this (plex, frigate type use) as a stepping stone away from Proxmox.

Thanks

-

In my testing of this, iGPU passthrough works fine in Linux, but in Windows the device shows an error in Device Manager. Disabling and re-enabling the device in Device Manager allows it to work until the next reboot.

-

@bullerwins @xerxist @flakpyro

What are you using for display output on the host since you're passing the iGPU to the VM?

-

@CJ I'm running server-grade hardware that has remote lights-out management with iKVM support. Otherwise, yeah, you would lose access to the display output.

-

No output; I just need Intel Quick Sync.