Epyc VM to VM networking slow

-

@john-c If the test goes well, this is what we'll do: buy a 10G NIC (4 ports).

-

@manilx said in Epyc VM to VM networking slow:

> @john-c If the test goes well, this is what we'll do: buy a 10G NIC (2 ports).

I've bonded a 4-port NIC into 2 LACP bonds (though in my case only at 1 Gb/s, as that's all my home network can handle). But with a 4-port 10G NIC it will really fly with 2 pairs of LACP (IEEE 802.3ad) bonds. By 2 pairs of LACP bonds I mean 2 bonds with 2 10G ports each, to really unleash its performance.
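For reference, on XCP-ng the two bonds could be created from the CLI with `xe network-create` followed by `xe bond-create ... mode=lacp` (they can also be created from XO). The Python sketch below only builds and prints those commands for a 4-port card, pairing the ports into two bonds. The PIF UUIDs and network names are hypothetical placeholders, and the real network UUIDs have to be taken from the output of `xe network-create`. The switch ports must also be configured as LACP (802.3ad) groups for the bonds to come up.

```python
"""Sketch: build the xe commands for two LACP bonds on a 4-port NIC.

Assumptions: PIF UUIDs and network names are hypothetical placeholders,
and the commands are only printed here, not executed.
"""


def lacp_bond_commands(pif_uuids, network_names):
    """Pair up PIFs (two per bond) and return xe argv lists for each bond.

    Each bond needs its own network first; `xe network-create` prints the
    new network's UUID, which is then passed to `xe bond-create`.
    """
    if len(pif_uuids) != 2 * len(network_names):
        raise ValueError("need exactly two PIFs per bond")
    commands = []
    for i, name in enumerate(network_names):
        members = pif_uuids[2 * i : 2 * i + 2]
        # Placeholder: substitute the UUID printed by network-create.
        network_uuid = f"<uuid-of-{name}>"
        commands.append(["xe", "network-create", f"name-label={name}"])
        commands.append([
            "xe", "bond-create",
            f"network-uuid={network_uuid}",
            "pif-uuids=" + ",".join(members),
            "mode=lacp",
        ])
    return commands


if __name__ == "__main__":
    pifs = ["pif-eth0", "pif-eth1", "pif-eth2", "pif-eth3"]  # hypothetical
    for argv in lacp_bond_commands(pifs, ["bond-storage", "bond-vms"]):
        print(" ".join(argv))
```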

A suitable card would be, for example, HPE's 536FLR FlexFabric 10Gb 4-port adapter, or another suitable NIC with 4 ports. A 2-port 10G NIC would also work (without LACP, since there would be only 1 port for each network), but the speed increase would be smaller.
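A rough way to see why the 4-port layout is faster: LACP hashes each flow onto one member link, so a bond adds aggregate bandwidth across several flows, but a single stream still tops out at one port. The sketch below assumes nominal 10 Gb/s line rates with no protocol overhead, so real figures will be lower.

```python
"""Back-of-the-envelope capacity comparison for the two NIC layouts.

Assumption: nominal line rates only (no protocol overhead). LACP balances
traffic per flow, so one TCP stream never exceeds a single member link.
"""


def bond_capacity_gbps(ports_per_bond, link_gbps):
    """Return (aggregate, single_flow) capacity of one bond in Gb/s."""
    aggregate = ports_per_bond * link_gbps
    single_flow = link_gbps  # each flow is hashed onto one member link
    return aggregate, single_flow


def gbps_to_mbytes(gbps):
    """Convert Gb/s to MB/s (decimal units: 1 Gb/s = 125 MB/s)."""
    return gbps * 1000 / 8


layouts = {
    "2-port NIC, one port per network": bond_capacity_gbps(1, 10),
    "4-port NIC, 2x LACP bonds": bond_capacity_gbps(2, 10),
}

for name, (agg, flow) in layouts.items():
    print(f"{name}: {agg} Gb/s aggregate per network "
          f"({gbps_to_mbytes(agg):.0f} MB/s), "
          f"{flow} Gb/s max per flow ({gbps_to_mbytes(flow):.0f} MB/s)")
```

So the 4-port layout doubles what each network can carry across parallel streams (for example several VMs backed up at once), while any single stream is still limited to one 10G port.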

https://www.hpe.com/psnow/doc/c04939487.html?ver=13

https://support.hpe.com/hpesc/public/docDisplay?docId=a00018804en_us&docLocale=en_US

-

@manilx P.S. Seems like the slow backup speed WAS related to the EPYC bug, as I suggested a while ago.....

Should have tested this "workaround" a LONG time ago

-

@john-c Tried to test a new backup on the NPB7.

I restored the latest XOA backup to that host (didn't want to move the original one from the business pool).

On trying to test the backup I get: Error: feature Unauthorized ???

I've spun up an XO instance on the Intel test host in the meantime and imported the settings from XOA.

Ran the same backup on both hosts, to the same QNAP.

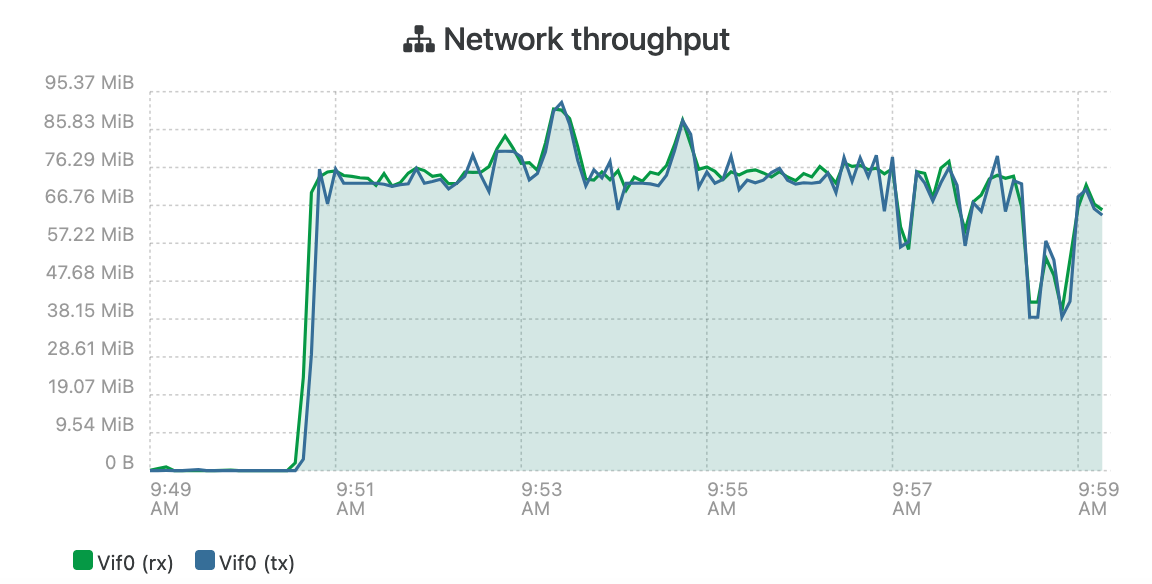

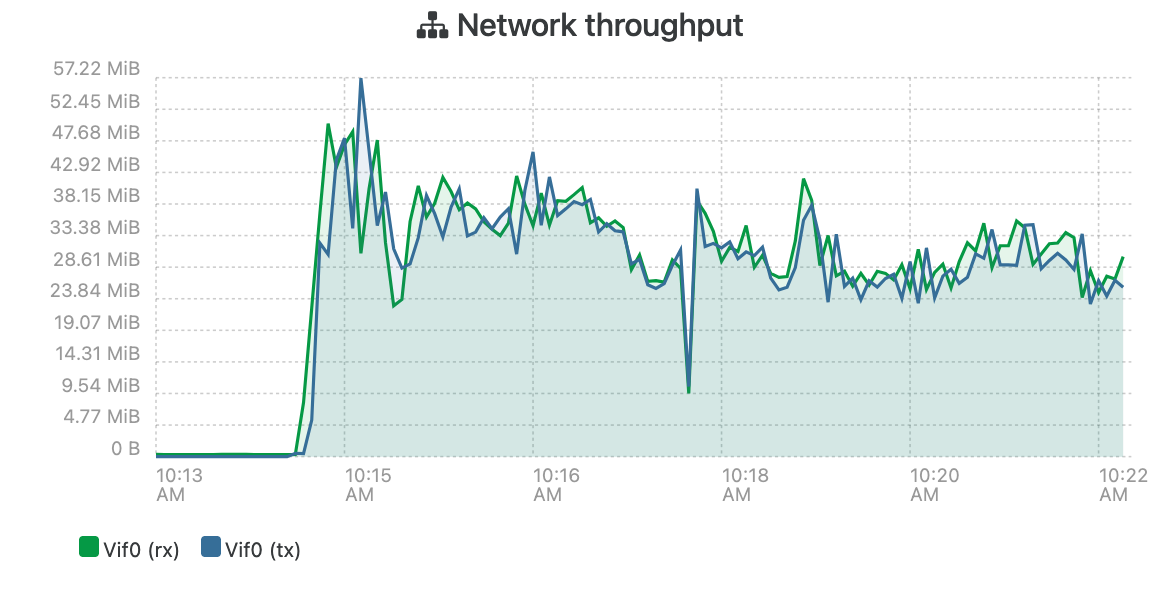

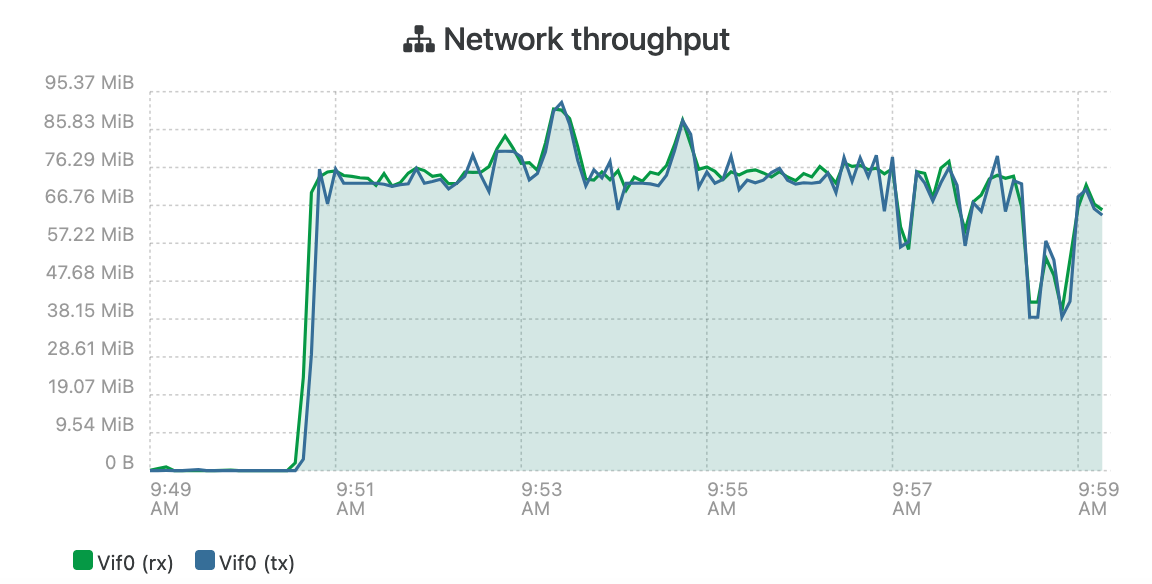

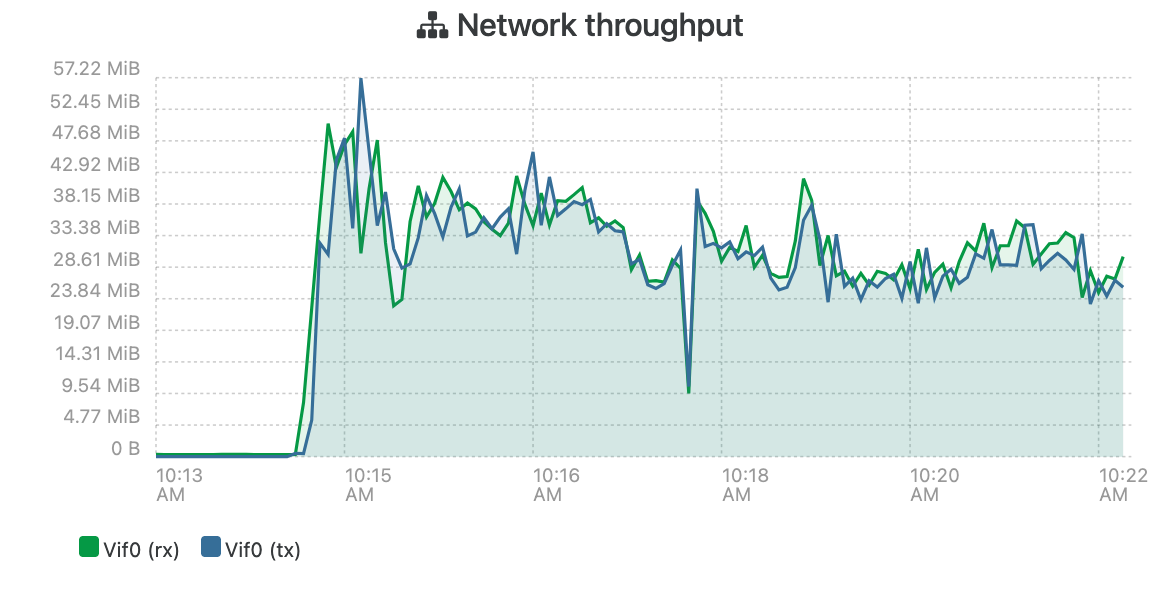

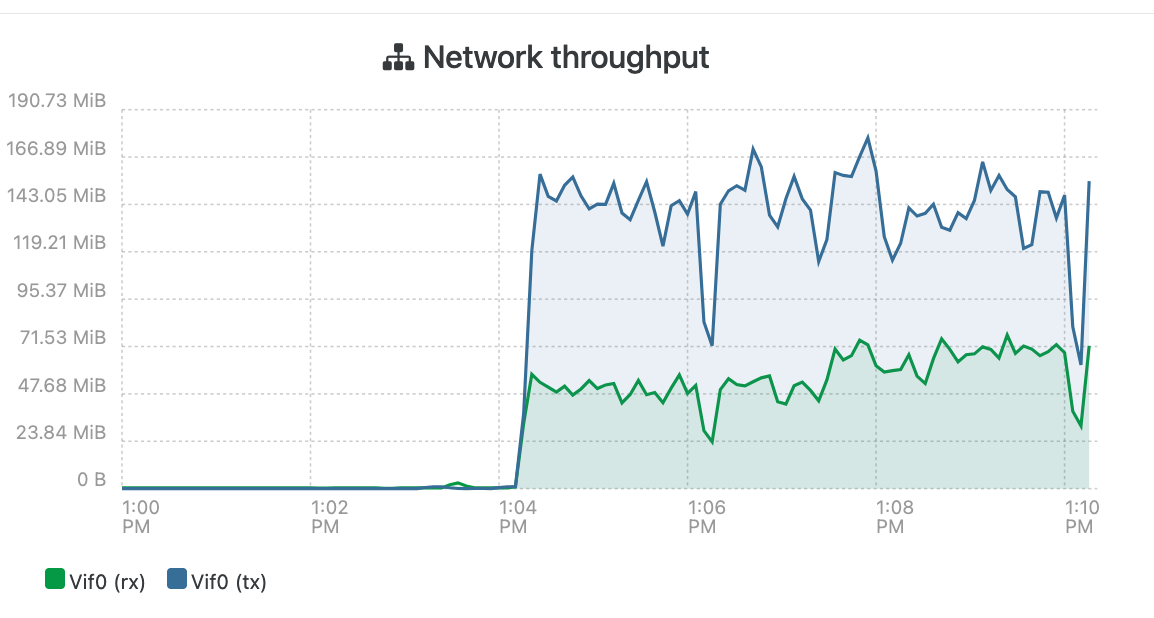

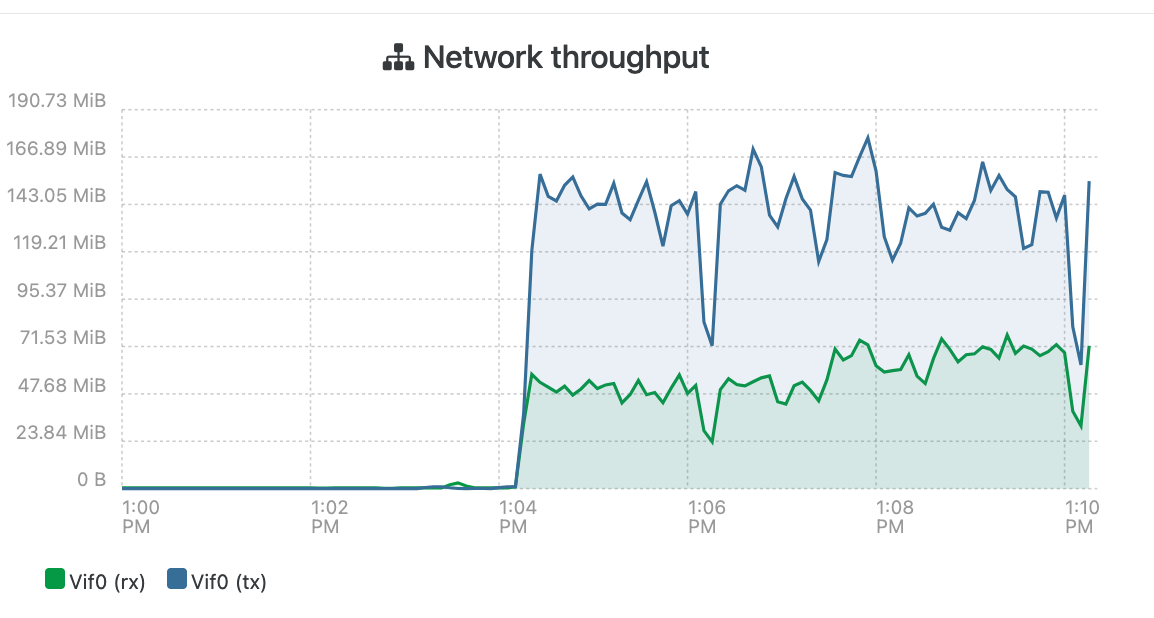

NPB7 Intel host connected via 2,5G:

HP EPYC host connected via 10G:

The difference is apparent!

I will now detach the older HP from the backup pool, install it as an isolated pool and run XOA from there. Will order 10G NICs.

Now why does XOA error with "Error: feature Unauthorized" when I try to run backups from there??

-

@manilx said in Epyc VM to VM networking slow:

> @john-c Tried to test a new backup on the NPB7.
>
> I restored the latest XOA backup to that host (didn't want to move the original one from the business pool).
>
> On trying to test the backup I get: Error: feature Unauthorized ???
>
> I've spun up an XO instance on the Intel test host in the meantime and imported the settings from XOA.
>
> Ran the same backup on both hosts, to the same QNAP.
>
> NPB7 Intel host connected via 2,5G:
>
> HP EPYC host connected via 10G:
>
> The difference is apparent!
>
> I will now detach the older HP from the backup pool, install it as an isolated pool and run XOA from there. Will order 10G NICs.
>
> Now why does XOA error with "Error: feature Unauthorized" when I try to run backups from there??

It's likely because the license is attached to the EPYC instance of XO/XOA. The license can only be bound to one appliance at a time, and it is currently bound to the EPYC instance. Your HPE Intel instance will only run unlicensed or as the Free Edition until the license is rebound from the EPYC pool's instance.

Anyway, overnight I realised that to keep XO/XOA available during host updates, the dedicated server would need a second host to join its pool. This would allow an RPU (rolling pool update) of the XO/XOA pool when updating its XCP-ng installation.

https://xen-orchestra.com/docs/license_management.html#rebind-xo-license

-

@john-c License moved. All fine.

The backup Intel host is running on 1G NICs (for the time being), bonded with LACP.

Already faster than before.

I have an XO instance running on a Proxmox host so I can manage the pools when the main XOA is down (updates etc.), so I'm good there and don't need another (2nd) backup host (that would be crazy overkill).

-

@manilx said in Epyc VM to VM networking slow:

> @john-c License moved. All fine.
>
> The backup Intel host is running on 1G NICs (for the time being), bonded with LACP.
>
> Already faster than before.
>
> I have an XO instance running on a Proxmox host so I can manage the pools when the main XOA is down (updates etc.), so I'm good there and don't need another (2nd) backup host (that would be crazy overkill).

I mean reinstall the Proxmox host as XCP-ng and have it join the XO/XOA pool, preferably if both hosts have the same hardware components. That way, when the HPE ProLiant DL360 Gen10 is down for updates, the XO/XOA VM can live-migrate between them as required, so you can do an RPU on the dedicated Intel-based XO/XOA hosts.

-

@john-c Proxmox host is a Protectli. All good. XOA will be on the single Intel host pool, no need for redundancy here.

XO on Proxmox for emergencies.....Remember: this is ALL a WORKAROUND for the stupid AMD EPYC bug!!!!!!

Not in the least the final solution. The final solution is XOA running on our EPYC production pool, as it was.

-

@manilx said in Epyc VM to VM networking slow:

> @john-c Proxmox host is a Protectli. All good. XOA will be on the single Intel host pool, no need for redundancy here.
>
> XO on Proxmox for emergencies.....Remember: this is ALL a WORKAROUND for the stupid AMD EPYC bug!!!!!!
>
> Not in the least the final solution. The final solution is XOA running on our EPYC production pool, as it was.

Alright, in that case just use the HPE ProLiant DL360 Gen10 as the dedicated XO/XOA host. But bear in mind that while the XCP-ng installed on it is being updated, the host, and thus that XO/XOA instance, will be unavailable until it has finished booting after the reboot.

-

@john-c Yes, obviously. For that I have XO on a mini PC.

-

@john-c @olivierlambert

One of our standard backup jobs: a 100% increase!!! On a 1G LACP bond, instead of 10G on the EPYC host! 1.5 years battling with this, and in the end it's all due to the same issue, as we now see.

-

@manilx it is deffo interesting to see more proof that this bug may be wider than expected.

-

@manilx said in Epyc VM to VM networking slow:

> @john-c @olivierlambert
>
> One of our standard backup jobs: a 100% increase!!! On a 1G LACP bond, instead of 10G on the EPYC host! 1.5 years battling with this, and in the end it's all due to the same issue, as we now see.

Don't forget to also post the comparison and a screenshot once you have fitted the 4-port 10G NIC with the 2 LACP bonds in the Intel HPE!

-

@john-c WILL DO! I've told purchasing to order the card you recommended. Let's see how long that'll take....

EDIT: ordered on Amazon. Expected to arrive in the 1st week of Nov.

Will report back then and ping you.

-

@manilx said in Epyc VM to VM networking slow:

> @john-c WILL DO! I've told purchasing to order the card you recommended. Let's see how long that'll take....
>
> EDIT: ordered on Amazon. Expected to arrive in the 1st week of Nov.
>
> Will report back then and ping you.

Will that be the Friday (1st November 2024) after this month's Xen Orchestra update, or the week after?

-

@manilx I've been waiting for your ping with the report. You said the first week of November 2024, and we're now at the beginning of the 2nd week.

How's it going? Is anything holding it up?

-

@john-c Hi. Ordered from Amazon that day, and after more than 2 weeks the order was cancelled by the supplier without notice. Reordered from another one and I'm still waiting....

Not easy to get one.

-

@manilx said in Epyc VM to VM networking slow:

> @john-c Hi. Ordered from Amazon that day, and after more than 2 weeks the order was cancelled by the supplier without notice. Reordered from another one and I'm still waiting....
>
> Not easy to get one.

Thanks for your reply. I hope it goes well this time. Anyway, if it still proves difficult, you can go for another quad-port 10GbE NIC that supports the two LACP bonds.

If the selected quad-port 10GbE NIC is on general sale, you can get it through the supplier who provided your HPE Care Packs.

-

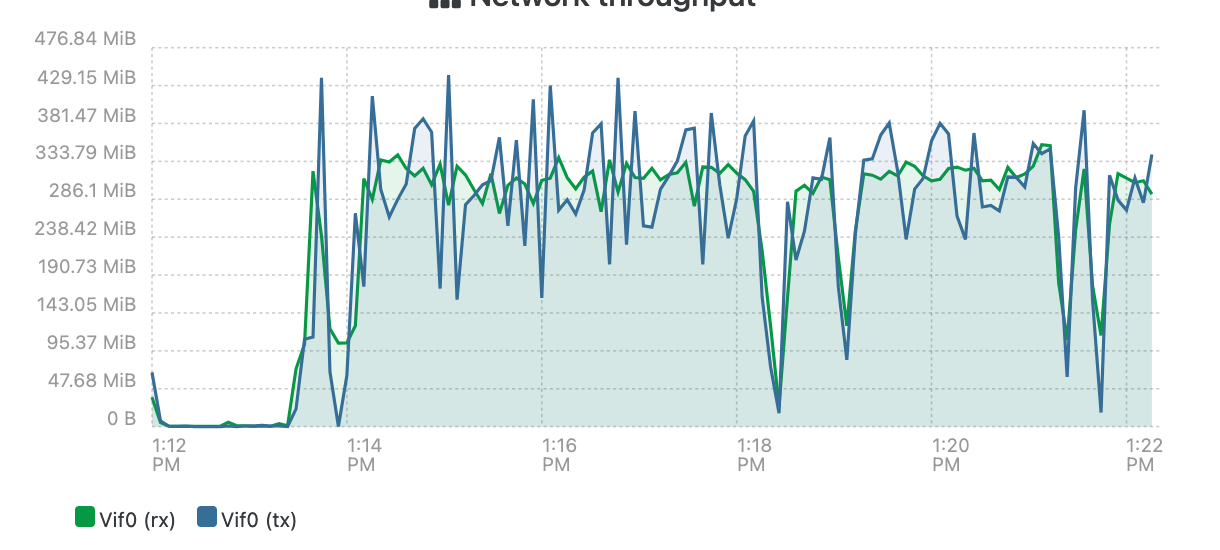

@john-c @olivierlambert Now we're talking!!!

Here are the results of a 2-VM Delta/NBD backup (the initial one) using 2 10G NICs in a bond:

WHAT a difference for backups when we run XOA on an Intel host instead of an EPYC one.

I've said this from the beginning: the slow backup speeds were due to the EPYC issue (as I got 200+ MB/s at home with measly Protectlis on 10G).

Looking at what the Synology receives: I get up to 500+ MB/s during the backup!
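To put those numbers in context, here is a quick conversion of the reported figures into a fraction of a single 10G link (nominal 1250 MB/s). The 200 and 500 MB/s values are the lower bounds quoted above ("200+", "500+"), and protocol overhead is ignored, so the real headroom is somewhat smaller than shown.

```python
"""Compare reported backup throughput with the nominal line rate.

The MB/s figures are the lower bounds quoted in this thread; link speeds
are nominal line rates with no protocol overhead subtracted (assumption).
"""


def line_rate_mbytes(gbps):
    """Nominal line rate in MB/s for a link of `gbps` Gb/s (decimal units)."""
    return gbps * 1000 / 8


def utilisation(observed_mbytes, gbps):
    """Fraction of the nominal line rate actually achieved."""
    return observed_mbytes / line_rate_mbytes(gbps)


observations = [
    ("Home Protectli boxes, 10G", 200, 10),
    ("Synology during Intel-hosted XOA backup, one 10G bond member", 500, 10),
]

for label, mbytes, gbps in observations:
    print(f"{label}: {mbytes} MB/s = "
          f"{utilisation(mbytes, gbps):.0%} of one {gbps}G link")
```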

-

It's a good discovery that having XOA outside the pool can make the backup performance much better.

How is the root-cause investigation going? We too have quite poor network performance and would really like to see the end of this. Can we get a summary of the actions taken so far and the prognosis for a solution?

Has anyone tried plain Xen on a recent 6.x kernel to see whether networking behaves the same there?