[Old thread] XCP-ng Windows PV tools 9.0.9030 Testsign released: now with Rust-based Xen Guest Agent

-

Please see the answers below:

Will this installer remove the XenServer drivers if they are already installed? Or should I use the cleaner first?

No, the installer will not remove existing XenServer drivers. You must use the included XenClean tools first.

The instructions say to disable Secure Boot and then run a PowerShell script. Isn't this going to cause a problem with Windows 11, and maybe Server 2025? Can Secure Boot be turned back on after the PowerShell script has been run?

No. Since our drivers are test-signed, Secure Boot has to stay off. Running Windows in test-signing mode should pose no problems on a non-production system.

I'm trying to carve out some time to work with Server 2025 in my XCP-ng lab so I can move forward with my production system once I'm comfortable with the upgrade. I'm on 8.3 stable, if that matters; I could move to 8.3 testing if needed for these drivers/management agent. I'd really like to move away from the XenServer drivers because I just don't trust them to remain available in the long term.

The drivers are tested on 8.2 and 8.3, you shouldn't need to upgrade your hosts.

I'm also trying to keep my Server 2025 VMs on UEFI, Secure Boot, and vTPM going forward. We are going to be forced to sooner or later, so I might as well get familiar now, while I can still test, with any problems that might appear.

We're trying our best to get our drivers signed by Microsoft for Secure Boot compatibility as soon as possible. Please stay tuned for updates.

-

Hmmm... I'm going to need to download this on another computer; I'm getting a virus-detected error. If you have a .ps1 script that elevates privileges, that is probably the cause. I've seen this before with Windows debloat tools (even signed ones). I'll log into a Linux machine and take a look after I go set something up in another building.

Secure Boot signing is probably a big hurdle; Chris Titus mentioned how much of a battle it was for him to get his Windows debloat tool signed.

I've also read a lot about Secure Boot signing in the FOG server project. It sounded like a huge issue to deal with and cost a non-trivial amount of money. There is a workaround, but not one suitable for a production-level product like your drivers/agent.

I'll have to find some time next week to try this on one of my test VMs. If they crash, no big deal; I'll just rebuild them.

I will make one suggestion... Can you make these available as an ISO? That way we can drop them right into our ISO SR and mount them just like inserting a CD. I've been doing this with the XenServer drivers and it works out really nicely; any other useful tools can also go in there.

-

@Greg_E With which file and AV are you getting a detection? I'll submit a false positive report.

And yes, getting drivers signed by Microsoft has been a massive issue for me (and for other Windows software vendors).

I've uploaded an ISO file to the release. You could also generate one yourself with WinCDEmu or a similar tool. (I accidentally deleted the ZIP file and had to re-upload it; the hashes should be the same.)

-

It is probably Trellix, but it's hard to say since Trellix operates on top of Defender. It blocks the download from starting, and I didn't have time to dig into what was happening.

Browser was Chrome if it matters.

Chris Titus has similar problems with the .exe version of his Windows tool, even though he paid to get it signed.

-

@dinhngtu said in XCP-ng Windows PV tools 9.0.9030 Testsign released: now with Rust-based Xen Guest Agent:

9.0.9000.0, we're interested in hearing about your upgrade experience to 9.0.9030

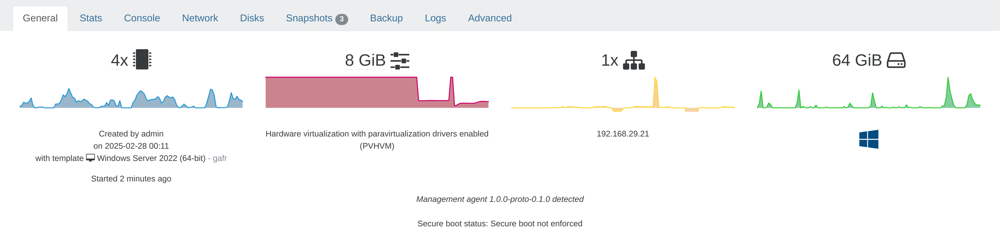

I tested the new tools today on a fresh Windows Server 2022 VM.

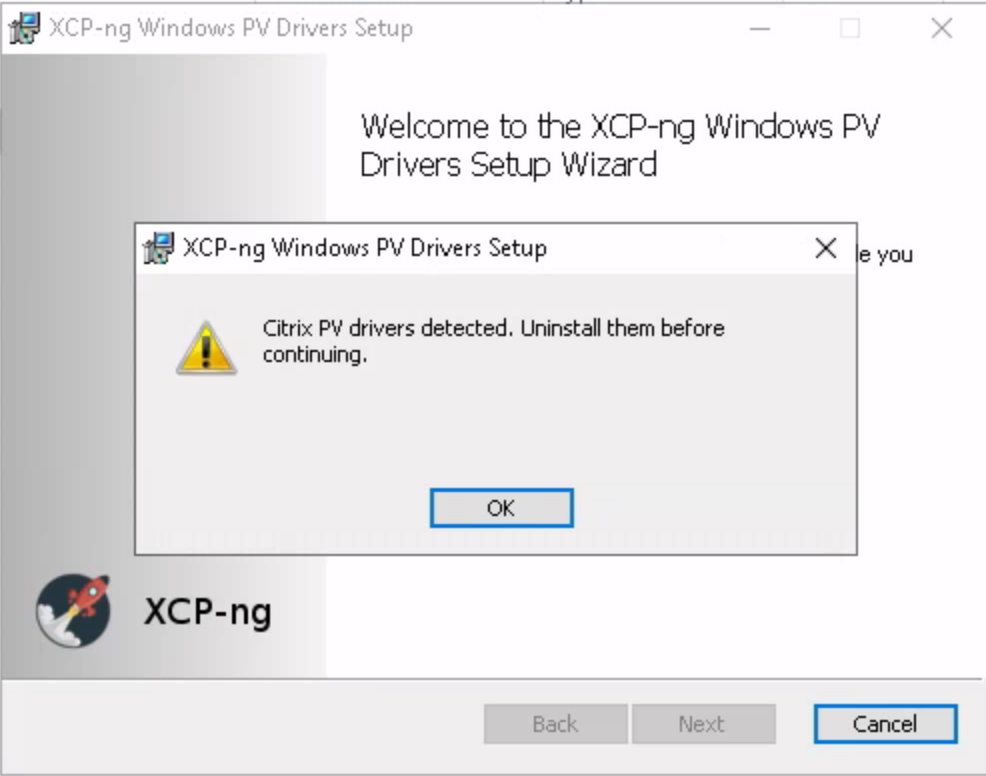

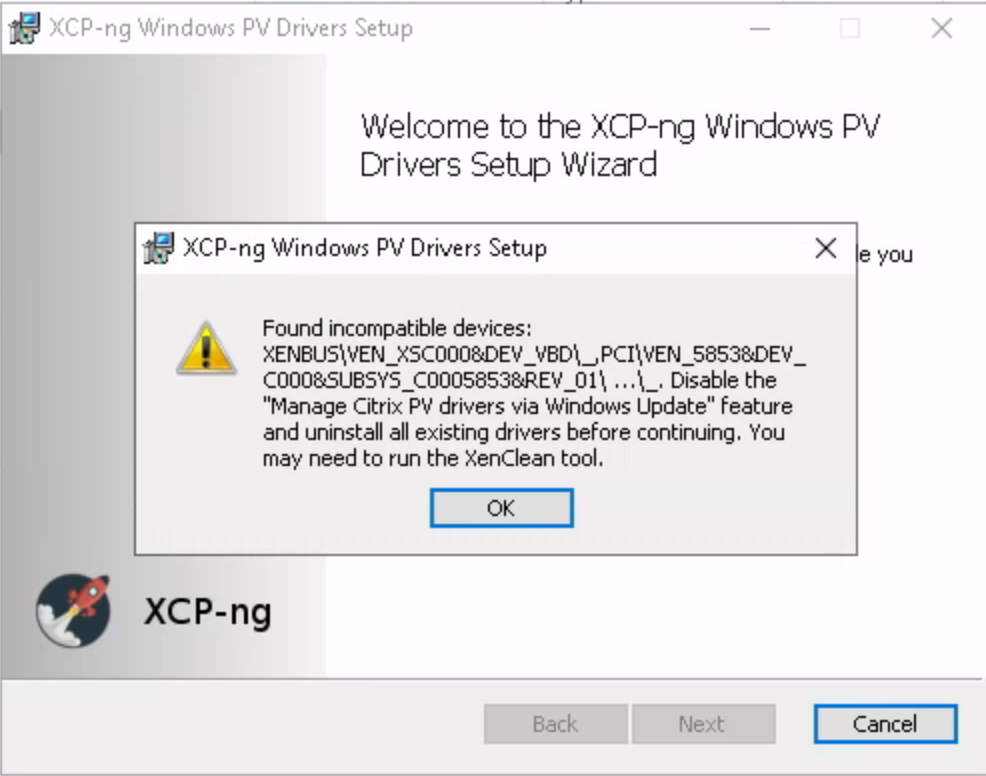

Coming from 9.0.9000.0, I got an error message warning that incompatible drivers were already installed, so I had to run the XenClean utility first, reboot, and then install 9.0.9030. Everything went smoothly.

The only thing I would have expected differently is a Start Menu entry that links to the installed tools, with options to repair or remove them.

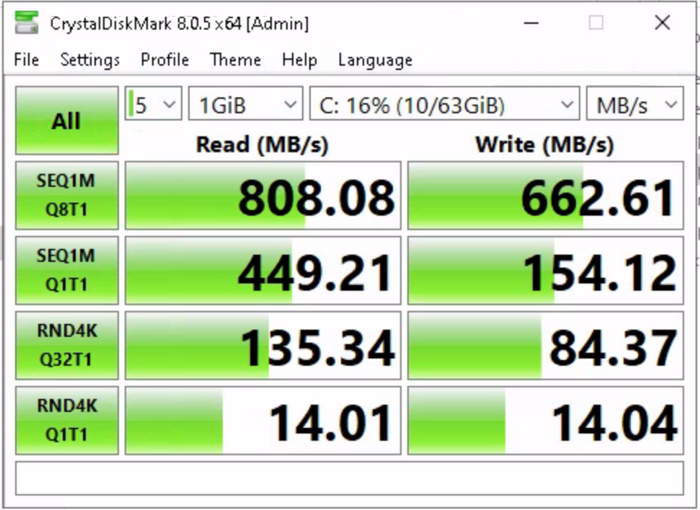

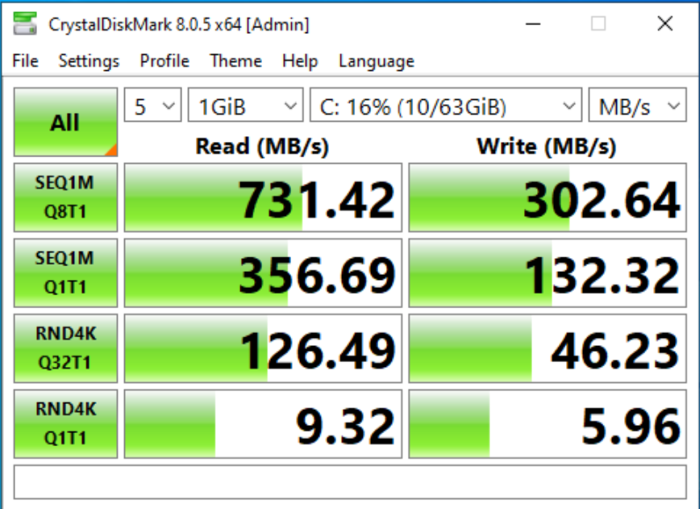

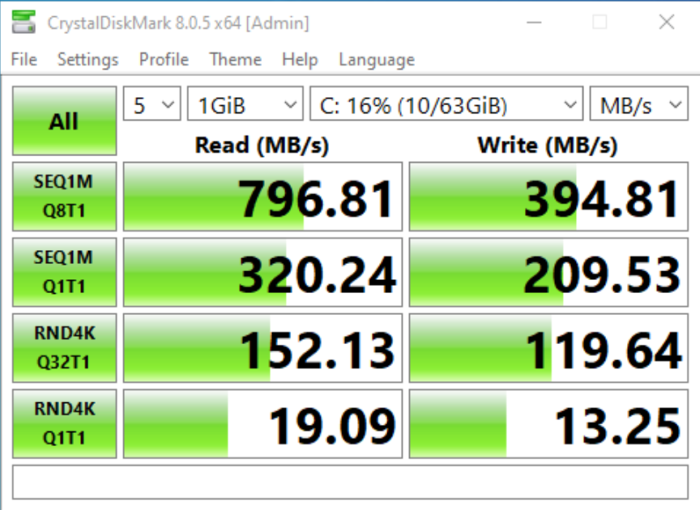

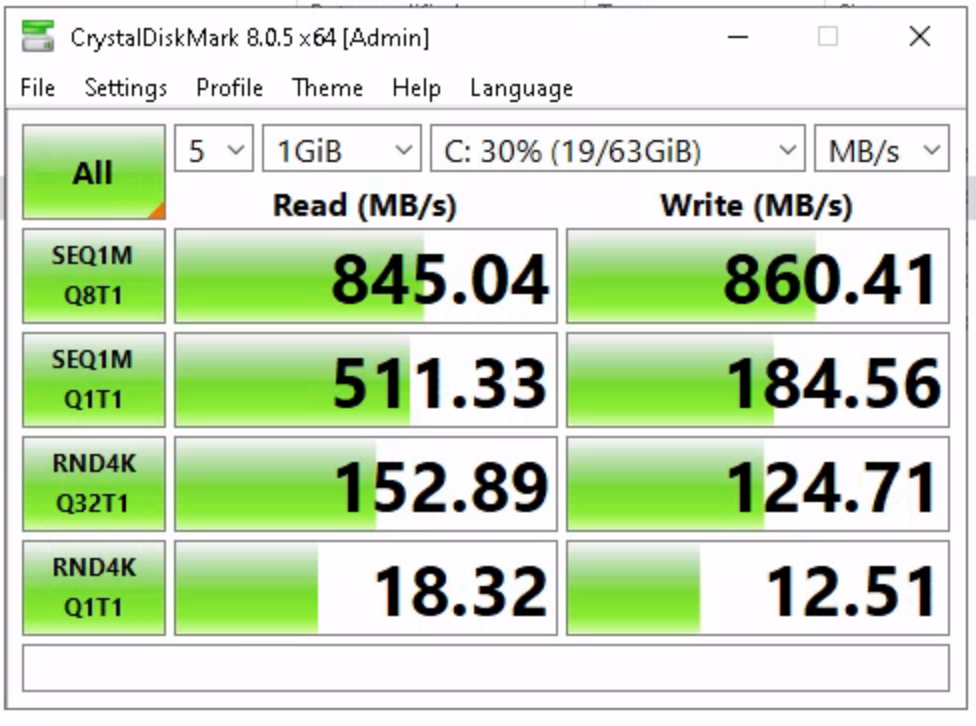

After that, I ran some benchmarks out of curiosity. The VM storage is on an XFS-formatted NVMe drive. It seems I used to get better performance with version 9.0.9000.0.

XCP-ng Tools v9.0.9000.0 aka

XCP-ng Tools v9.0.9030

Citrix Guest Tools v9.4.0

-

@guiand888 Thanks very much for testing. Did the IP/guest version reporting in XO work for you? The incompatible drivers warning is a bit unexpected; I'll try to fix that.

For the disk performance, did you install with the Xen PV disk drivers option enabled? If yes, it might just be per-test variance (which happens a lot with Xen PV drivers), as the sequential write speed differed a lot between 9000.0 and 9030/Citrix guest tools.

-

@dinhngtu Thank you for your help. Please see this thread. https://xcp-ng.org/forum/post/90424

I am using Windows Server 2025 with the new alpha that removes the 2TB limit by using qcow2. I am seeing a significant speed degradation with both your drivers and the "official" Xen drivers. My post includes videos with details.

My reason for testing this is that we're an SMB with an 8TB Windows file share on a server. I would like to move to XCP-ng while still being able to use this large volume for our file share. Any ideas?

-

Move the shared file to a NAS/SAN and map the drive letter to the clients?

An 8TB drive size is going to be all kinds of unhappy if you want to snapshot or backup that VM.

Exception to this might be a large database that needs fast access to the data, but even there I would expect some kind of shared storage to be at least as fast as a giant VM.

-

@Greg_E said in XCP-ng Windows PV tools 9.0.9030 Testsign released: now with Rust-based Xen Guest Agent:

Move the shared file to a NAS/SAN and map the drive letter to the clients?

An 8TB drive size is going to be all kinds of unhappy if you want to snapshot or backup that VM.

Exception to this might be a large database that needs fast access to the data, but even there I would expect some kind of shared storage to be at least as fast as a giant VM.

I understand, and I've been thinking about it. But the Windows VM would just see the VDI as a second disk. It will host QuickBooks and Office files, with no virtual machines on the storage drive.

They said to test it, so I did. I may be a corner case, but it's testing nonetheless. Worst case, I could just use two separate 2TB VDIs if I decide on XCP-ng.

-

Hi @dinhngtu,

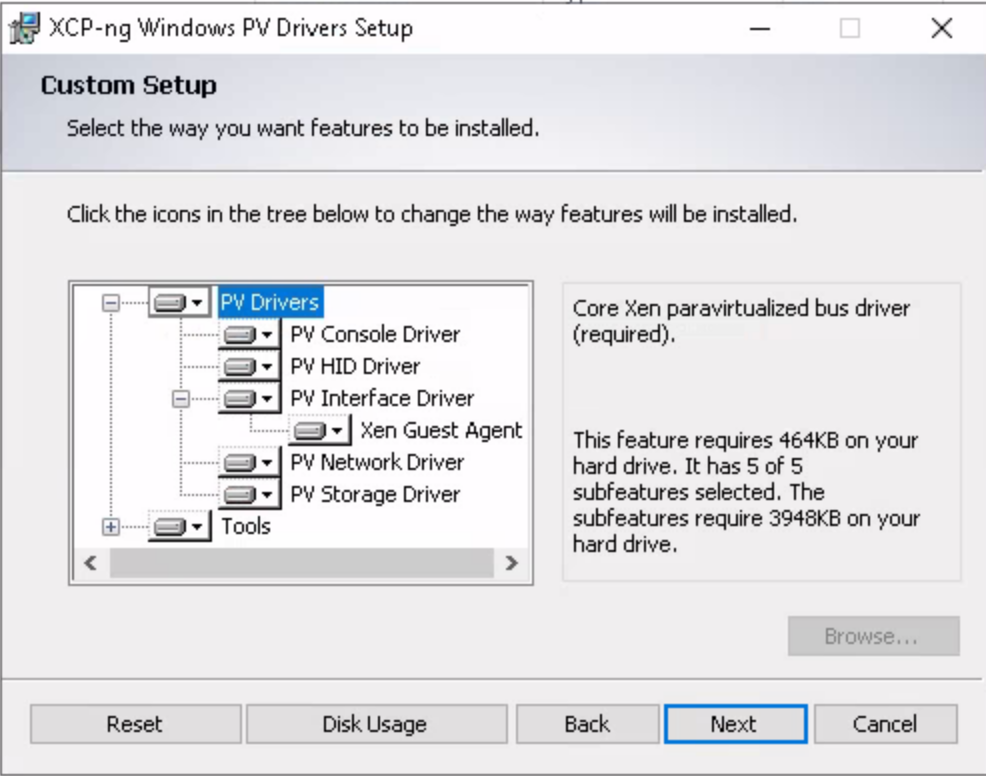

Yes, I installed the PV drivers. I made sure everything was selected in the list of functions offered by the installer.


IPv4 address reported correctly.

I did some additional tests, this time starting from a test VM that had the XenServer tools installed.

- Started a test VM with the XenServer tools installed (version correctly reported in XO as "Management agent 9.4.0-146 detected")

- Ran XenClean

- Had to do another reboot to disable the "Manage Citrix PV drivers via Windows Update"

- VM got a blue screen

- Rebooted again and hit "Continue" after getting the "Windows repair" screen. VM booted successfully

- Had to run XenClean again, as some drivers still seemed to be present

- Then finally I could install v9.0.9030

- The IP is correct. I'm not sure about the tools version, but it seems it's not reported correctly?

Before XenClean

Step 5

Step 6

v9.0.9030 reporting in XO


Another benchmark with v9.0.9030

I ran another benchmark, and it seems you are right about the variance. The host I test on is not idle, although its load is low. Could that be the reason?

Other suggestions:

You could add a check that the testsign script is run from the 64-bit version of PowerShell. If it is run from 32-bit PowerShell, the following error occurs: `The term 'bcdedit.exe' is not recognized as the name of a cmdlet.`

It is easy to run the 32-bit version by accident, because it is usually the first result that comes up in the Windows Start menu search.

Hope this helps!
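The guard suggested above could look roughly like this. This is a language-neutral sketch in Python, not the actual XCP-ng testsign script (which is PowerShell), and all function names are hypothetical. It relies on Windows setting `PROCESSOR_ARCHITEW6432` only for 32-bit (WOW64) processes on 64-bit Windows. It also relies on the `Sysnative` alias, which lets a 32-bit process reach the real 64-bit `System32`, where `bcdedit.exe` lives. In PowerShell itself, `[Environment]::Is64BitProcess` would probably be the more direct check.

```python
import os
from typing import Mapping


def _normalize(env: Mapping[str, str]) -> dict:
    # Windows environment variable names are case-insensitive; compare in upper case.
    return {k.upper(): v for k, v in env.items()}


def is_wow64(env: Mapping[str, str]) -> bool:
    """True if this looks like a 32-bit process running on 64-bit Windows."""
    # Windows sets PROCESSOR_ARCHITEW6432 only inside WOW64 (32-bit) processes.
    return "PROCESSOR_ARCHITEW6432" in _normalize(env)


def bcdedit_path(env: Mapping[str, str]) -> str:
    """Hypothetical helper: a bcdedit.exe path that resolves from either bitness."""
    norm = _normalize(env)
    system_root = norm.get("SYSTEMROOT", r"C:\Windows")
    # In a 32-bit process, System32 is redirected to SysWOW64 (no bcdedit.exe there);
    # the Sysnative alias points at the real 64-bit System32.
    folder = "Sysnative" if is_wow64(env) else "System32"
    return system_root + "\\" + folder + "\\bcdedit.exe"


def check_64bit_shell(env: Mapping[str, str]) -> None:
    """Hypothetical guard: stop early instead of failing later on bcdedit.exe."""
    if is_wow64(env):
        raise RuntimeError(
            "Running as a 32-bit process; please use 64-bit PowerShell, "
            "otherwise bcdedit.exe will not be found."
        )


if __name__ == "__main__":
    # os.environ is passed directly; on a non-Windows machine this just reports False.
    print("32-bit process on 64-bit Windows:", is_wow64(os.environ))
    print("bcdedit path:", bcdedit_path(os.environ))
```

Failing early with an explicit message is arguably friendlier than the cryptic "not recognized as the name of a cmdlet" error. Using the `Sysnative` path instead would let the script keep working from either shell.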

-

@guiand888 Thank you for the feedback. Just a note: before running XenClean, please turn off the "Manage Citrix PV drivers via Windows Update" option; otherwise, Windows will try to reinstall Citrix drivers as soon as it's rebooted.

For storage performance: Xen PV I/O performance (both network and storage) does fluctuate a lot, so real-world performance is better measured with long benchmark runs (depending on your I/O backend).

-

@dinhngtu An issue I ran into while testing the final signed release:

On a Server 2025 VM, the version is properly displayed in XOA after installing the tools. However, after a migration the version is no longer displayed; "Management agent not detected" is shown instead, and memory stats also stop displaying in XOA. After I restart the Rust tools service in Windows, XOA picks up the tools running within the VM again.

-

@flakpyro It's a known issue, a new version with fixes will be released soon.

-

@dinhngtu Thanks. We plan to migrate all our Windows VMs off the Citrix tools down the road, but only a handful of VMs are running these so far, so we may hold off until the next version. We've been running the Linux Rust tools for over a year with zero issues.