I decided to burn my lab down after some trouble and start fresh, to isolate any problems I may have created in the past. Wiped the disks, built a new NFS share ready for work, installed the XCP-ng OS, and configured networking for the management interface locally on each machine, all normal stuff. Went back to my desk and logged into XO Lite on each machine. Using Console --> xsconsole I was able to get all the hosts into a pool and add an ISO SR and an NFS SR. All seemed to work.

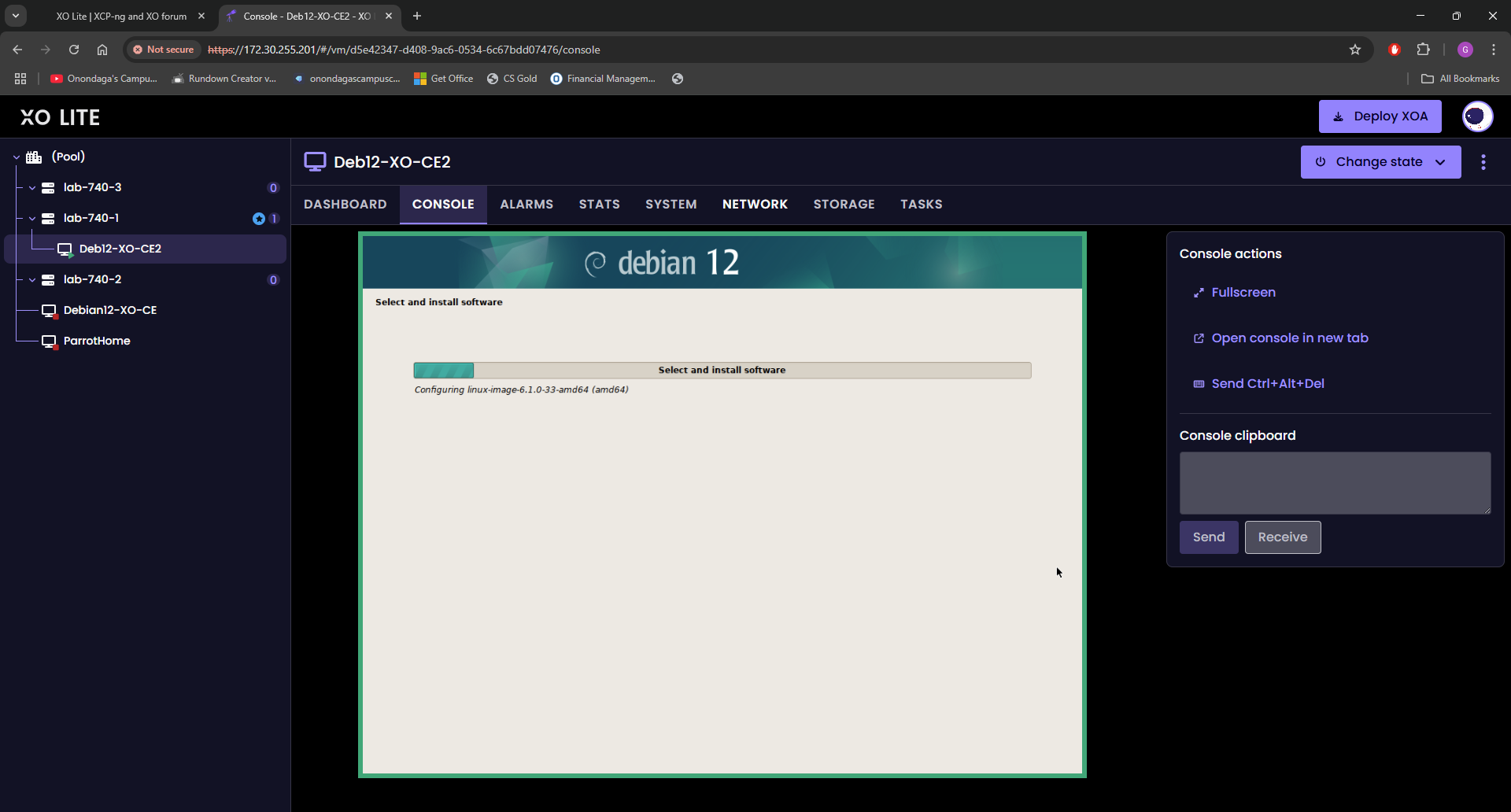

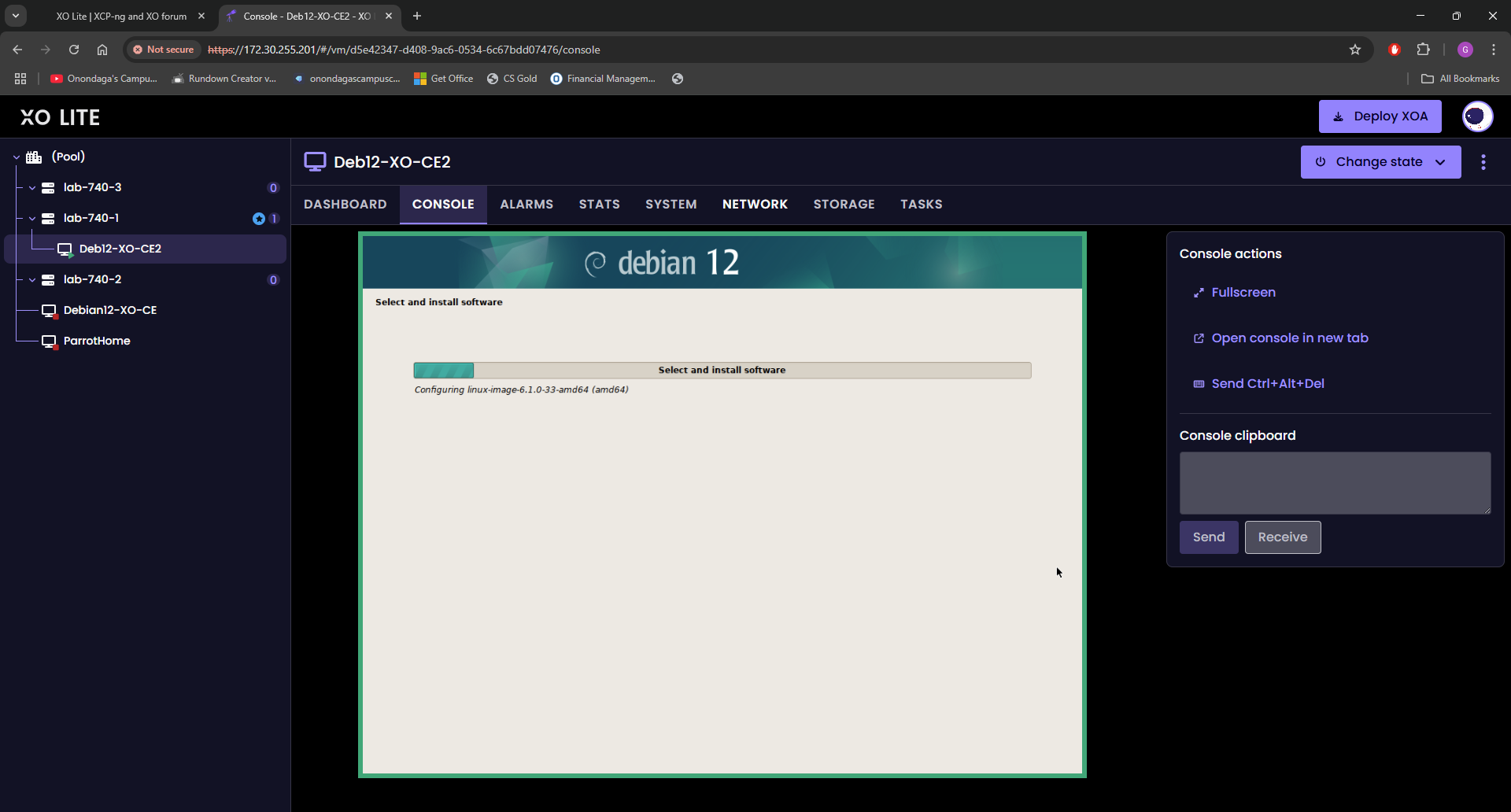

Went to New VM to create a simple Debian VM on which I intended to host XO-CE from sources. Typed the info in, chose the Debian image from my ISO SR, and left the rest of the config as normal. Unchecked the boot-after-creation box and clicked Create.

Clicked on the VM to bring the console up, then clicked power on... the UEFI loader came up, and... it couldn't find the boot disk. Tried another ISO that I knew was capable of EFI boot, same result.

Realized I was trying to start in EFI, so I made a new VM in BIOS mode. This one worked, but now my screenshots are messed up, so I'll probably have to go through and delete stuff with a different XO and go back to grabbing images of the process to write up "for the next guy".

Other than this small issue, it's working, and I'm doing it all from XO Lite. Yes, there are still a bunch of missing features, but I know you are working on this. I just wanted to try things this way on this fresh build and see what I could get done from the local console and XO Lite.

XO 6: dedicated thread for all your feedback!