Some backups failing

-

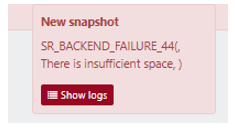

Most backups are working fine, but 5 are failing. They end with "SR_BACKEND_FAILURE_44 (There is insufficient space)". Those 5 VMs will not snapshot either. The host is on 8.2.1.

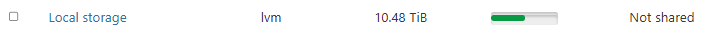

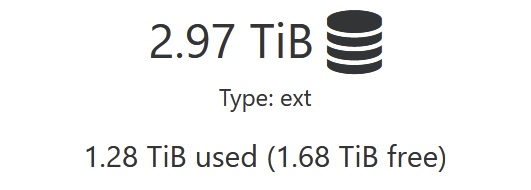

Local storage on the host is 51% used.

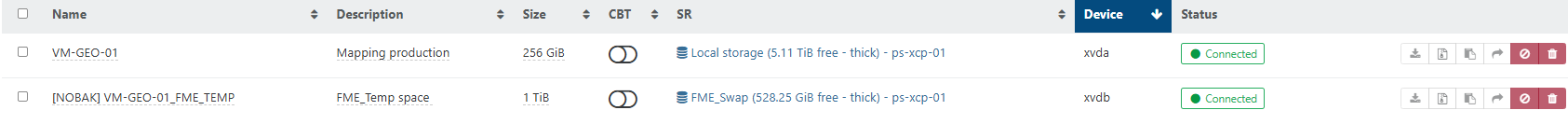

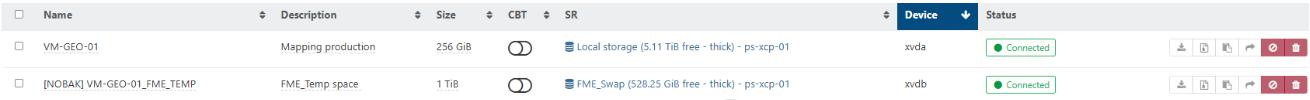

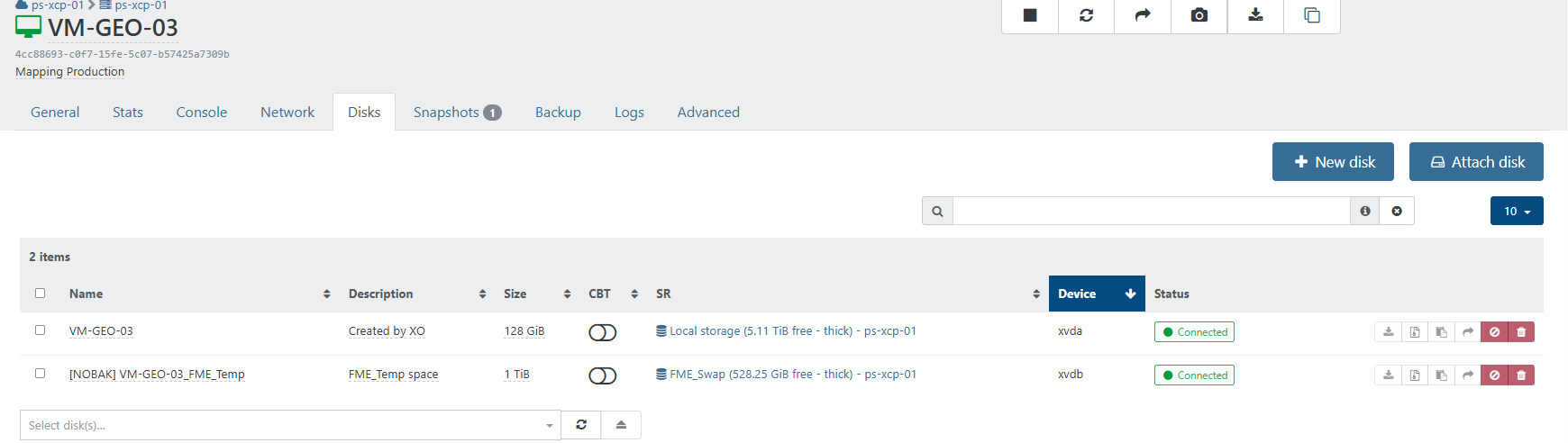

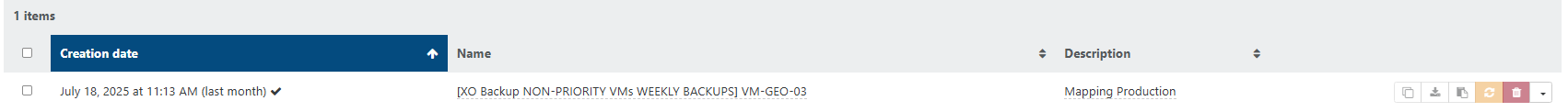

This is one of the VMs that won't back up. However, there are 6 identical VMs, and only one of them backs up correctly.

Any ideas?

-

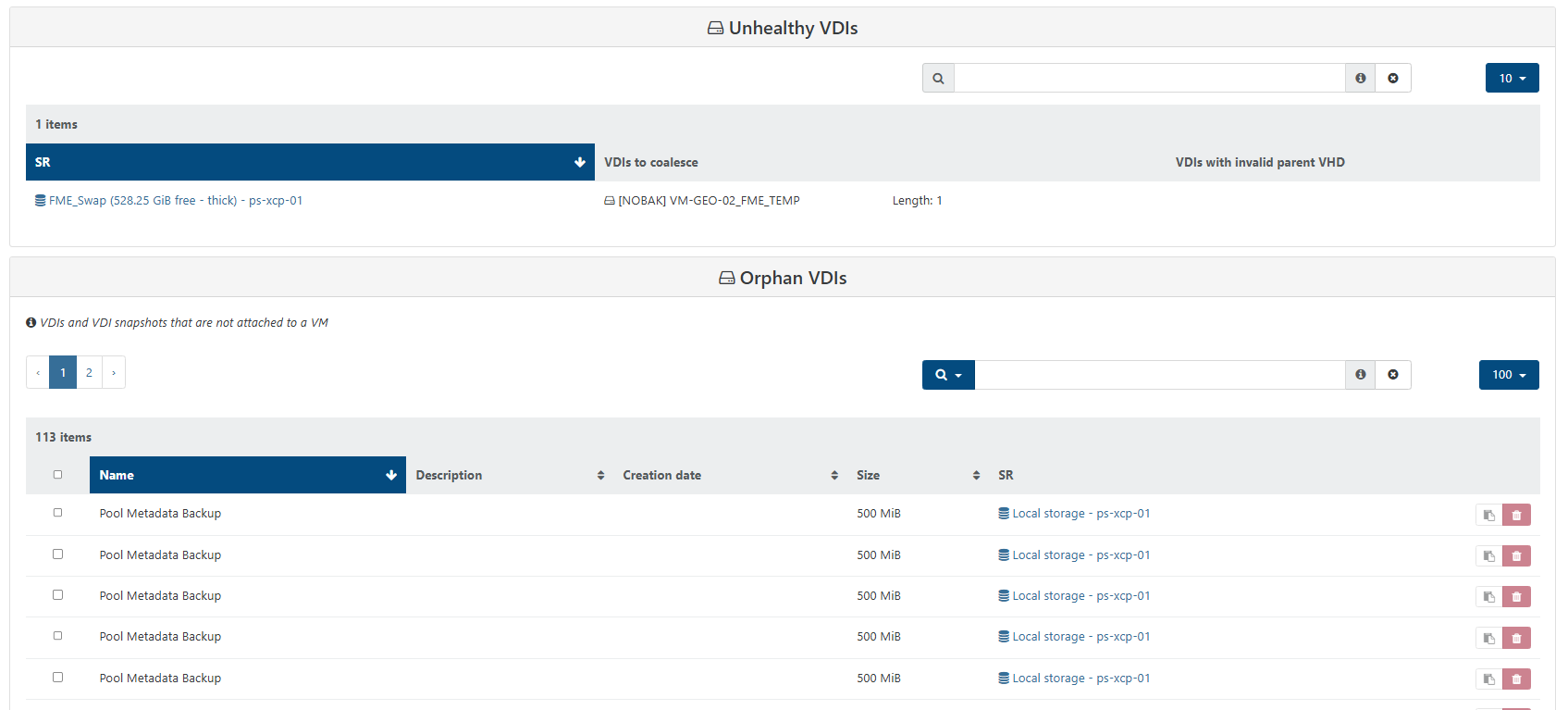

It sounds like you are running low on disk space on the SR where these VMs are located. Have you checked the Unhealthy VDIs section under Dashboard > Health tab to see if you have a coalesce issue?

-

@Danp Hi Dan, there is only one unhealthy VDI, but there are about 110 (500 MB) orphan VDIs...?
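To get a feel for how much space those orphans actually occupy on each SR, the VDI records can be totalled per SR. Below is a minimal Python sketch, assuming records shaped like the XenAPI `VDI.get_all_records()` output (fields `is_a_snapshot`, `snapshot_of`, `SR`, `physical_utilisation`, with integer values returned as strings). The orphan test used here (a snapshot VDI whose `snapshot_of` no longer points at an existing VDI) is an assumption and may not match Xen Orchestra's own definition; the sample refs and sizes are hypothetical.

```python
from collections import defaultdict

MiB = 1024 ** 2


def orphan_usage_by_sr(vdis: dict[str, dict]) -> dict[str, int]:
    """Sum the physical usage of (assumed) orphaned snapshot VDIs, keyed by SR ref."""
    totals: dict[str, int] = defaultdict(int)
    for vdi in vdis.values():
        # Assumption: an orphan is a snapshot whose source VDI is gone (or a NULL ref).
        source = vdi.get("snapshot_of", "OpaqueRef:NULL")
        if vdi.get("is_a_snapshot") and source not in vdis:
            # XenAPI returns int64 fields as strings, hence int().
            totals[vdi["SR"]] += int(vdi["physical_utilisation"])
    return dict(totals)


# Hypothetical records; a real script would fetch them through a XenAPI session.
sample = {
    "vdi-disk": {"is_a_snapshot": False, "snapshot_of": "OpaqueRef:NULL",
                 "SR": "sr-fme", "physical_utilisation": str(900 * MiB)},
    "vdi-snap": {"is_a_snapshot": True, "snapshot_of": "vdi-disk",
                 "SR": "sr-fme", "physical_utilisation": str(200 * MiB)},
    "vdi-orphan": {"is_a_snapshot": True, "snapshot_of": "OpaqueRef:NULL",
                   "SR": "sr-fme", "physical_utilisation": str(500 * MiB)},
}

print(orphan_usage_by_sr(sample))  # {'sr-fme': 524288000}
```

Only `physical_utilisation` is summed, since that is the space actually consumed on the SR rather than the disks' nominal size.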

-

Just to add something: the one VM that snapshots fine has management agent 9.4.1 on it, and the other 5 have 9.3.3. I assumed this might be the issue and upgraded one of them to 9.4.1 as a test. Still the same error.

Note that about 10 other VMs on the host snapshot and back up just fine.

-

The error log for the snapshot failure:

vm.snapshot { "id": "567459b9-362c-90e8-9b2b-9e61572243ff" } { "code": "SR_BACKEND_FAILURE_44", "params": [ "", "There is insufficient space", "" ], "task": { "uuid": "f2c46023-ea35-7d61-1106-b799adec4648", "name_label": "Async.VM.snapshot", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20250829T10:31:09Z", "finished": "20250829T10:31:16Z", "status": "failure", "resident_on": "OpaqueRef:b71238e8-bce1-4a59-b9be-870e2de57558", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "SR_BACKEND_FAILURE_44", "", "There is insufficient space", "" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [ "OpaqueRef:09be2d2a-c450-42fc-8c3a-3c876274bb18" ], "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 80))((process xapi)(filename list.ml)(line 110))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 122))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 130))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 171))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 209))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 220))((process xapi)(filename list.ml)(line 121))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 222))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 442))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_vm_snapshot.ml)(line 33))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))" }, "message": 
"SR_BACKEND_FAILURE_44(, There is insufficient space, )", "name": "XapiError", "stack": "XapiError: SR_BACKEND_FAILURE_44(, There is insufficient space, ) at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202408190902/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xo/xo-builds/xen-orchestra-202408190902/packages/xen-api/_getTaskResult.mjs:13:29) at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202408190902/packages/xen-api/index.mjs:1041:24) at file:///opt/xo/xo-builds/xen-orchestra-202408190902/packages/xen-api/index.mjs:1075:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202408190902/packages/xen-api/index.mjs:1065:12) at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202408190902/packages/xen-api/index.mjs:1238:14)" }

-

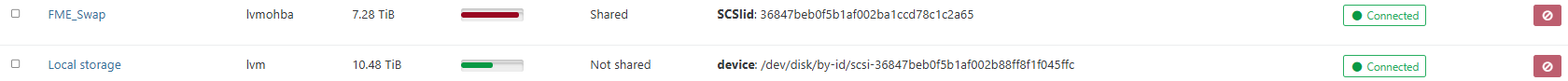

What is the type and size of the SR? How much space is free on it?

-

The host's SR is 51% full.

The VM:

-

The 1 TB disk resides on a different SR, FME_Snap, which appears to be near capacity. This is why you can't snapshot or back up these VMs.
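To illustrate why only some VMs fail: a snapshot has to fit on every SR that holds one of the VM's disks, so plenty of room on the local SR does not help if another SR is nearly full. Below is a minimal Python sketch, assuming XenAPI-style SR and VDI records (`physical_size`, `physical_utilisation`, `virtual_size`, `SR`, `name_label`) and a rough rule of thumb for thick-provisioned (LVM-based) SRs: a snapshot needs free space about equal to each disk's virtual size. The real storage-manager accounting differs in detail, and all refs and sizes below are hypothetical.

```python
GiB = 1024 ** 3


def sr_free_bytes(sr: dict) -> int:
    """Free space from an SR record; XenAPI returns these int64 fields as strings."""
    return int(sr["physical_size"]) - int(sr["physical_utilisation"])


def blocking_srs(vdis: list[dict], srs: dict[str, dict]) -> list[str]:
    """Return the names of SRs without room to snapshot the VM's disks stored on them.

    Rule of thumb (assumption): each VDI on a thick SR needs about its
    virtual size in free space for a snapshot to succeed.
    """
    needed: dict[str, int] = {}
    for vdi in vdis:
        needed[vdi["SR"]] = needed.get(vdi["SR"], 0) + int(vdi["virtual_size"])
    return sorted(
        srs[ref]["name_label"]
        for ref, need in needed.items()
        if sr_free_bytes(srs[ref]) < need
    )


# Hypothetical numbers: local SR 51% used, FME_Snap nearly full.
srs = {
    "sr-local": {"name_label": "Local storage",
                 "physical_size": str(1000 * GiB), "physical_utilisation": str(510 * GiB)},
    "sr-fme": {"name_label": "FME_Snap",
               "physical_size": str(2000 * GiB), "physical_utilisation": str(1900 * GiB)},
}
vdis = [
    {"SR": "sr-local", "virtual_size": str(100 * GiB)},  # system disk
    {"SR": "sr-fme", "virtual_size": str(1024 * GiB)},   # the extra 1 TB disk
]

print(blocking_srs(vdis, srs))  # ['FME_Snap']
```

The local SR has 490 GiB free, which covers the 100 GiB system disk, so only FME_Snap is reported as blocking.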

-

@Danp Thanks Dan, that makes sense. However, one of those 6 VMs is backing up and snapshotting perfectly, and it has the extra disk too.

Why would this be?

-

Is it possible to convert that SR to thin provisioning? Would that help in this case?

-

Or could I get a one-off backup of those VMs by temporarily disconnecting that disk?

-

Thanks, everyone, for your help. I believe I have a way forward now.