Can't migrate VM: VM_REQUIRES_NETWORK

-

I get the following error when I try to migrate a VM to another host.

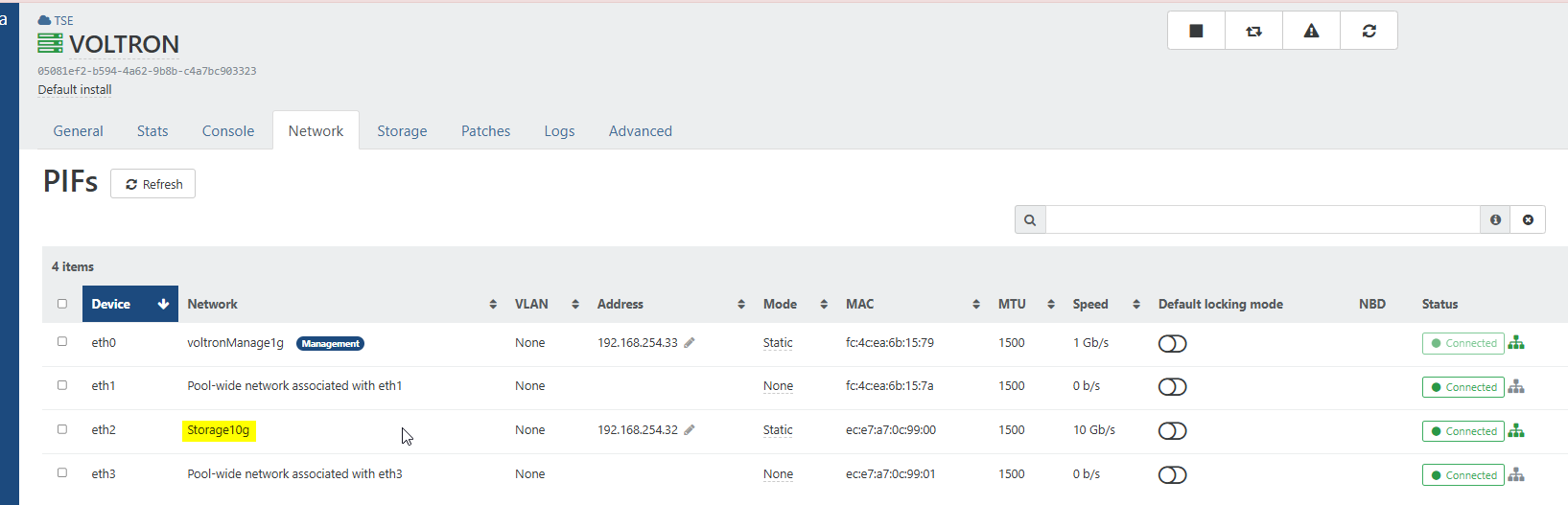

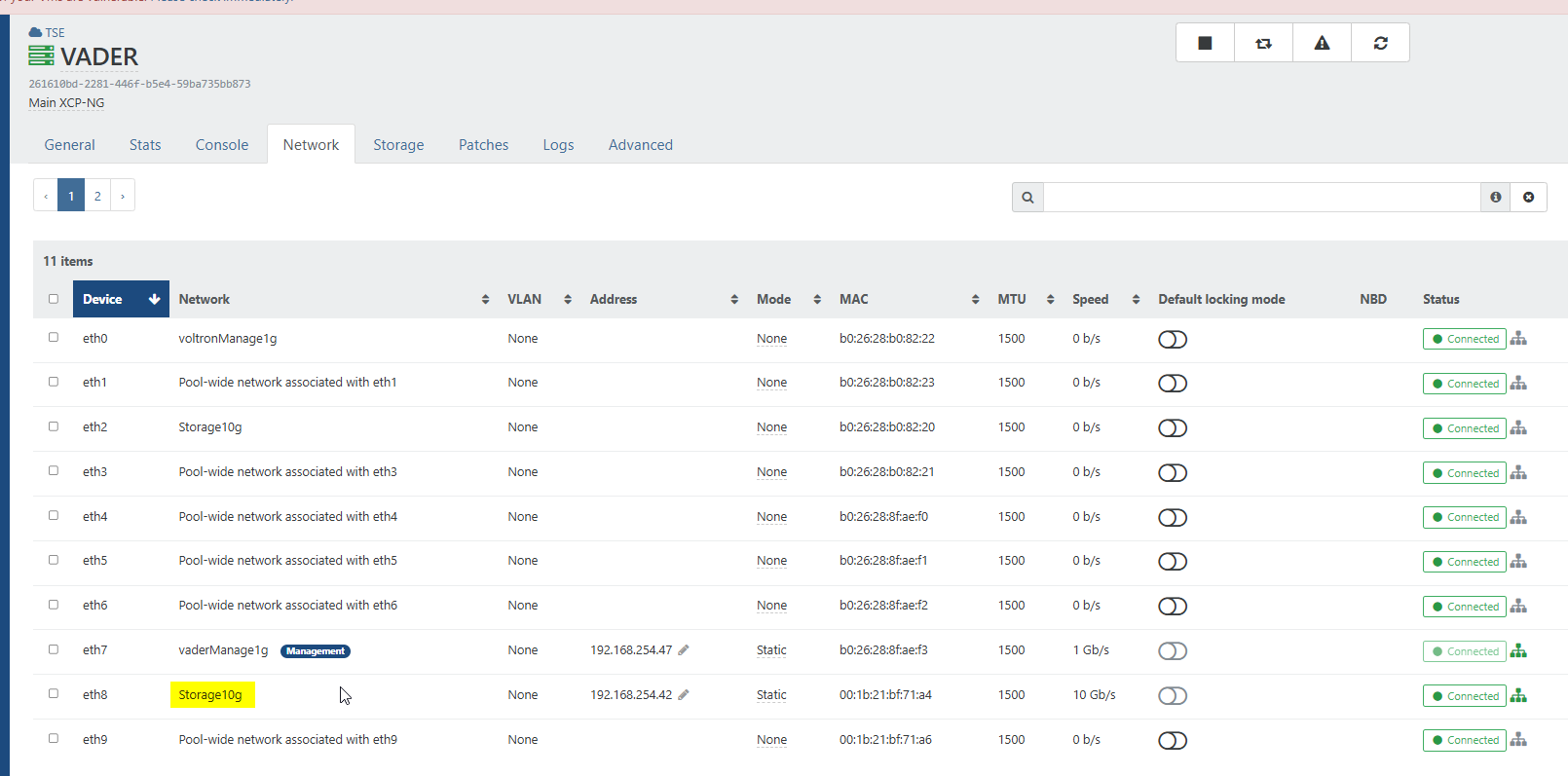

I'm selecting Storage10g as the migration network and both hosts have this network configured.

vm.migrate { "vm": "1a0672e4-cd08-a217-5403-7e18b237f296", "migrationNetwork": "cd1d0820-5fd6-2d79-ab12-1c3e92f06c2d", "sr": "ab9780b1-fcdc-7ea4-395a-5369bd6024c5", "targetHost": "05081ef2-b594-4a62-9b8b-c4a7bc903323" } { "code": "VM_REQUIRES_NETWORK", "params": [ "OpaqueRef:c3355c6b-773e-64a4-fe63-ebbd6aa68686", "OpaqueRef:eb6eb456-cb99-4439-b7d0-a5dc282ae52b" ], "task": { "uuid": "6e008922-6846-e081-50d0-fef98f6dee5b", "name_label": "Async.VM.assert_can_migrate", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20251122T01:47:47Z", "finished": "20251122T01:47:47Z", "status": "failure", "resident_on": "OpaqueRef:1e3c6744-f007-4357-8c83-b3e46db7ef53", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VM_REQUIRES_NETWORK", "OpaqueRef:c3355c6b-773e-64a4-fe63-ebbd6aa68686", "OpaqueRef:eb6eb456-cb99-4439-b7d0-a5dc282ae52b" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_network_attach_helpers.ml)(line 174))((process xapi)(filename ocaml/xapi/xapi_vm_helpers.ml)(line 705))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1812))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2547))((process xapi)(filename ocaml/xapi/rbac.ml)(line 229))((process xapi)(filename ocaml/xapi/rbac.ml)(line 239))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 78)))" }, "message": "VM_REQUIRES_NETWORK(OpaqueRef:c3355c6b-773e-64a4-fe63-ebbd6aa68686, OpaqueRef:eb6eb456-cb99-4439-b7d0-a5dc282ae52b)", "name": "XapiError", "stack": "XapiError: VM_REQUIRES_NETWORK(OpaqueRef:c3355c6b-773e-64a4-fe63-ebbd6aa68686, OpaqueRef:eb6eb456-cb99-4439-b7d0-a5dc282ae52b) at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202511102201/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xo/xo-builds/xen-orchestra-202511102201/packages/xen-api/_getTaskResult.mjs:13:29) at Xapi._addRecordToCache 
(file:///opt/xo/xo-builds/xen-orchestra-202511102201/packages/xen-api/index.mjs:1073:24) at file:///opt/xo/xo-builds/xen-orchestra-202511102201/packages/xen-api/index.mjs:1107:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202511102201/packages/xen-api/index.mjs:1097:12) at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202511102201/packages/xen-api/index.mjs:1270:14)" } -

@bazzacad Does the VM's network exist on the destination host?
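To see which network XAPI is complaining about, you can pull the refs out of the error payload in the log. This is only a minimal sketch: it assumes the `params` of `VM_REQUIRES_NETWORK` are ordered (VM, network), which is how I understand the XAPI error list, and `describe_requires_network` is a made-up helper name, not part of any library. The JSON is a trimmed copy of the error object posted above.

```python
import json

# Trimmed copy of the error object from the log above (only the fields used here).
error_json = """
{
  "code": "VM_REQUIRES_NETWORK",
  "params": [
    "OpaqueRef:c3355c6b-773e-64a4-fe63-ebbd6aa68686",
    "OpaqueRef:eb6eb456-cb99-4439-b7d0-a5dc282ae52b"
  ]
}
"""


def describe_requires_network(error: dict) -> dict:
    """Return the VM and network refs from a VM_REQUIRES_NETWORK error.

    Assumption: params are ordered (vm, network). Hypothetical helper.
    """
    if error.get("code") != "VM_REQUIRES_NETWORK":
        raise ValueError(f"unexpected error code: {error.get('code')}")
    vm_ref, network_ref = error["params"]
    return {"vm": vm_ref, "network": network_ref}


info = describe_requires_network(json.loads(error_json))
print("VM ref:     ", info["vm"])
print("Network ref:", info["network"])
```

The network ref is an internal `OpaqueRef`, not the UUID shown in the `vm.migrate` call, so it still has to be matched to a network name on the pool before you can tell which network the destination host is missing.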

Are your hosts strictly identical network-wise?

In one screenshot the 10g network is on eth2, and in the other it is on eth8. -

@Pilow Do you mean both NICs need to have the same ID? e.g. both on eth2? How would I change that?

-

@bazzacad I don't know for sure that this is the culprit.

But as far as I know, it is strongly recommended to have strictly the same NIC ordering and usage on all hosts in the same pool, to avoid quirks.

Network configuration is done centrally at the pool level and pushed to the hosts, so you had better have them configured and cabled the same way.

See your eth0/management? You named it "voltronmanage1g", but it has been pushed to the VADER host too under this name.

If you use eth0 for management, call it "management" rather than giving it a host-specific name.

Your eth2 seems to be physically disconnected on Vader; perhaps moving the Ethernet cable over from eth8 would be enough on this host?

You should bring the management interface back to eth0 too; it is currently on eth7 on Vader.

Reconfigure the IP addresses on the PIFs too, once connected.

Try to replicate the same configuration and connections on your hosts if they are in the same pool (which seems to be the case, pool TSE here).
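If it helps, here is a rough sketch of the kind of check I mean: group the PIFs by network and flag any network that sits on a different device from one host to the next. The records below are illustrative only, rebuilt from what is described in this thread rather than read from the real hosts, and `find_mismatched_networks` is a hypothetical helper. In practice you would fill the list from your pool's PIF records.

```python
from collections import defaultdict

# Illustrative records based on the description in this thread (assumption:
# not read from the actual hosts). Each entry is one PIF.
pifs = [
    {"host": "Voltron", "device": "eth0", "network": "voltronmanage1g"},
    {"host": "Voltron", "device": "eth2", "network": "Storage10g"},
    {"host": "Vader", "device": "eth7", "network": "voltronmanage1g"},
    {"host": "Vader", "device": "eth8", "network": "Storage10g"},
]


def find_mismatched_networks(pif_records):
    """Return {network: {host: device}} for networks whose device differs by host."""
    layout = defaultdict(dict)
    for pif in pif_records:
        layout[pif["network"]][pif["host"]] = pif["device"]
    return {
        network: by_host
        for network, by_host in layout.items()
        if len(set(by_host.values())) > 1
    }


mismatches = find_mismatched_networks(pifs)
for network, by_host in sorted(mismatches.items()):
    print(f"{network}: {by_host}")
```

An empty result would mean every network uses the same device name on all hosts, which is the layout to aim for.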

-

@bazzacad You do not seem to have the same number of PIFs on each host.

I hope you won't have to, but you can swap NIC names if needed; see the docs here: https://docs.xcp-ng.org/networking/#renaming-nics -

@Pilow, Well, it was a major pain, but I got both hosts on eth0 for management & eth2 for Storage10g & it's working now.

I wish XOA made this an easier process. -

@bazzacad great, glad it worked for you