AMD EPYC 7402P?

-

@speedy01 If I remember correctly, the C-state setting was turned off by default in the BIOS. I will check that.

-

Wait, it's an EPYC Rome CPU, right?

-

@olivierlambert Yes

-

And your host is working? XCP-ng 8.0, right? Default/stock configuration? (I mean no testing kernel or Xen, right?)

-

@olivierlambert

Yep. The only thing I had to do was install XCP-ng with the server booted in EFI mode. The install worked fine in legacy mode, but the system would not boot afterwards; after reinstalling with EFI as the only boot mode, it worked out of the box.

-

@olivierlambert

If you want me to run some tests or provide logs or anything else, let me know.

-

Okay, I'm a bit surprised: I thought Rome wasn't supported before the next Xen release (Xen 4.13, coming around mid-December).

Edit: I'm asking some Xen devs; I'll keep you posted.

-

[09:13 xcp-ng-onvduxbl ~]# cat /proc/cpuinfo
processor : 0
vendor_id : AuthenticAMD
cpu family : 23
model : 49
model name : AMD EPYC 7402P 24-Core Processor
stepping : 0
microcode : 0x830101c
cpu MHz : 2800.165
cache size : 512 KB
physical id : 0
siblings : 16
core id : 0
cpu cores : 16
apicid : 0
initial apicid : 0
fpu : yes
fpu_exception : yes
cpuid level : 13
wp : yes
flags : fpu de tsc msr pae mce cx8 apic mca cmov pat clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy abm sse4a misalignsse 3dnowprefetch bpext ssbd ibpb vmmcall fsgsbase bmi1 avx2 bmi2 rdseed adx clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 clzero arat rdpid
bugs : fxsave_leak null_seg spectre_v1 spectre_v2 spec_store_bypass
bogomips : 5600.03
TLB size : 3072 4K pages
clflush size : 64
cache_alignment : 64
address sizes : 48 bits physical, 48 bits virtual
power management:

[09:15 xcp-ng-onvduxbl ~]# cat /etc/xensource-inventory
PRIMARY_DISK='/dev/disk/by-id/md-name-localhost:127'
PRODUCT_VERSION='8.0.0'
DOM0_VCPUS='16'
CONTROL_DOMAIN_UUID='d79f1f63-c627-49cb-b493-2cfb372b9e51'
DOM0_MEM='4304'
COMPANY_NAME_SHORT='Open Source'
MANAGEMENT_ADDRESS_TYPE='IPv4'
PARTITION_LAYOUT='ROOT,BACKUP,LOG,BOOT,SWAP,SR'
PRODUCT_VERSION_TEXT='8.0'
PRODUCT_BRAND='XCP-ng'
INSTALLATION_UUID='3a9c29f2-11f0-4f71-a457-be1be5973e79'
PRODUCT_VERSION_TEXT_SHORT='8.0'
BRAND_CONSOLE='XCP-ng Center'
PRODUCT_NAME='xenenterprise'
MANAGEMENT_INTERFACE='xenbr0'
COMPANY_PRODUCT_BRAND='XCP-ng'
PLATFORM_VERSION='3.0.0'
BUILD_NUMBER='release/naples/master/45'
STUNNEL_LEGACY='false'
PLATFORM_NAME='XCP'
BRAND_CONSOLE_URL='https://xcp-ng.org'
BACKUP_PARTITION='/dev/disk/by-id/md-name-localhost:127-part2'
INSTALLATION_DATE='2019-11-12 23:01:52.715183'
COMPANY_NAME='Open Source'

-
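A small cross-check on the "is it Rome?" question: the `cpu family : 23` and `model : 49` lines in the cpuinfo output above are family 17h, model 31h, which is what second-generation (Zen 2, Rome) EPYC parts report. The Python below is a minimal sketch, assuming the usual `key : value` layout of /proc/cpuinfo; the function names and the two-entry lookup table are hypothetical and not exhaustive. Because the same family/model pair is also used by some non-EPYC parts, it checks the model name for "EPYC" too.

```python
from typing import Dict

# Hypothetical lookup table for illustration: (cpu family, model) as printed
# in /proc/cpuinfo for the first two EPYC generations. Not exhaustive.
EPYC_GENERATIONS = {
    (23, 1): "Naples (Zen)",
    (23, 49): "Rome (Zen 2)",
}


def parse_first_cpu(cpuinfo_text: str) -> Dict[str, str]:
    """Return the "key : value" fields of the first processor block."""
    fields: Dict[str, str] = {}
    for line in cpuinfo_text.splitlines():
        if not line.strip():
            if fields:  # a blank line ends the first processor block
                break
            continue
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def epyc_generation(cpuinfo_text: str) -> str:
    """Classify the first CPU; family/model alone is ambiguous, so require "EPYC"."""
    fields = parse_first_cpu(cpuinfo_text)
    if fields.get("vendor_id") != "AuthenticAMD":
        return "not AMD"
    if "EPYC" not in fields.get("model name", ""):
        return "not EPYC"
    key = (int(fields["cpu family"]), int(fields["model"]))
    return EPYC_GENERATIONS.get(key, "unknown EPYC generation")
```

In dom0, `epyc_generation(open("/proc/cpuinfo").read())` would return `"Rome (Zen 2)"` for a CPU reporting the family, model and model name shown above.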

Thanks for the details; I'll come back to you as soon as I have news.

-

Hello, everybody,

we have been successfully testing this CPU for 3 weeks, and we still have a Lenovo test system in our data center:

The configuration is as follows:

Lenovo SR655

https://lenovopress.com/lp1161-thinksystem-sr655-server

It's a CTO configuration:

- 1x ThinkSystem SR655 24x2.5" chassis
- 1x ThinkSystem AMD EPYC 7402P 24C 180W 2.8GHz Processor
- 2x ThinkSystem 64GB TruDDR4 3200MHz (2Rx4 1.2V) RDIMM-A
- 1x ThinkSystem M.2 SATA/NVMe 2-Bay Enablement Kit
- 2x ThinkSystem M.2 128GB SATA 6Gbps Non-Hot Swap SSD (this is the XCP-ng boot disk)
- 1x ThinkSystem SR635/SR655 x8 PCIe Internal riser
- 1x ThinkSystem SR655 x16/x8/x8 PCIe Riser1
- 1x ThinkSystem SR655 x16/x8/x8 PCIe Riser2
- 1x ThinkSystem Mellanox ConnectX-4 Lx 10/25GbE SFP28 2-port OCP (LACP trunk, 2x 10 GbE to IBM G8264 vLAG)
- 1x Emulex 16Gb Gen6 FC Dual-port HBA (attached to Storwize V5k shared block storage)
- 2x ThinkSystem 1100W (230V/115V) Platinum hot swap power supply
- 2x 2.8m, 13A/100-250V, C13 to C14 Jumper Cord

Load tests were problem-free for days. The system is impressively fast.

- Debian VM with 48 vCPUs builds the Linux kernel (64-bit defconfig) in 32 seconds.

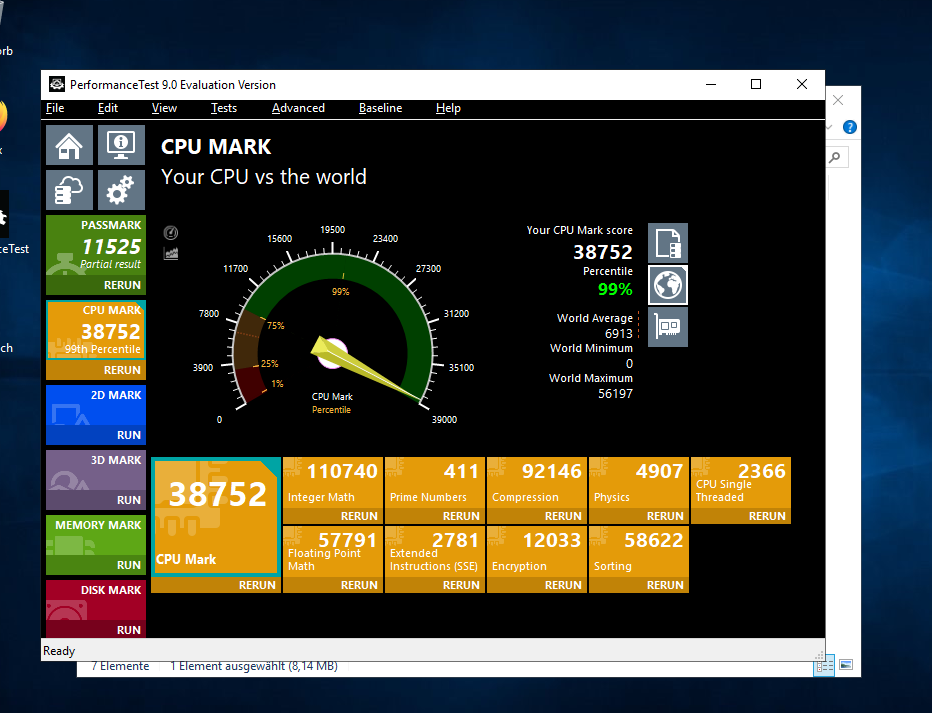

- Windows VM with 48 vCPUs reaches ~39k in PassMark CPU Mark

Best regards!

-Boris

processor : 0
vendor_id : AuthenticAMD
cpu family : 23
model : 49
model name : AMD EPYC 7402P 24-Core Processor
stepping : 0
microcode : 0x830101c
cpu MHz : 2794.751
cache size : 512 KB
physical id : 0
siblings : 16
core id : 0
cpu cores : 16
apicid : 0
initial apicid : 0
fpu : yes
fpu_exception : yes
cpuid level : 13
wp : yes
flags : fpu de tsc msr pae mce cx8 apic mca cmov pat clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy abm sse4a misalignsse 3dnowprefetch bpext ssbd ibpb vmmcall fsgsbase bmi1 avx2 bmi2 rdseed adx clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 clzero arat rdpid
bugs : fxsave_leak null_seg spectre_v1 spectre_v2 spec_store_bypass
bogomips : 5589.60
TLB size : 3072 4K pages
clflush size : 64
cache_alignment : 64
address sizes : 48 bits physical, 48 bits virtual
power management:

-

@Silencer80 Very nice! Would you mind posting some disk speed tests from your high-speed drives?

-

@nmym Which drives do you mean? The M.2 SSDs are relatively slow and used only for the OS. Our Storwize V5K (Gen1) is connected via 8Gb Fibre Channel only and can handle only 1400 MB/s and 25k IOPS. That's not fast anymore; I just didn't have anything else to test with.

Two weeks ago we had the system attached to an IBM FlashSystem 900, which delivers up to 1.2 million IOPS at low latency. Under VMware it was very fast. I couldn't test XCP-ng there because the storage was already in production.

-

@Silencer80 said in AMD EPYC 7402P?:

o an IBM flash system 900. Th

My NVMe disks can't break 1 GB/s on Xen; on VMware, however, they are 70% faster. Since they sit on a PCIe 3.0 x2 link, the theoretical limit is about 2 GB/s, as far as I know.
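The ~2 GB/s figure can be checked with a quick calculation: PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding, so one lane carries about 0.985 GB/s in each direction. The sketch below computes the theoretical payload bandwidth for a given generation and lane count, assuming only line-encoding overhead matters (real links lose a further few percent to packet headers, so measured throughput will be lower). The table and function name are illustrative.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
# Assumption: only encoding overhead is modelled; TLP/DLLP overhead is ignored.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b
    2: (5.0, 8 / 10),     # 8b/10b
    3: (8.0, 128 / 130),  # 128b/130b
}


def pcie_bandwidth_gbytes(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s (10**9 bytes per second)."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    bits_per_s = gt_per_s * 1e9 * efficiency * lanes
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    for lanes in (2, 4):
        print(f"PCIe 3.0 x{lanes}: {pcie_bandwidth_gbytes(3, lanes):.2f} GB/s")
```

Running it prints `PCIe 3.0 x2: 1.97 GB/s` and `PCIe 3.0 x4: 3.94 GB/s`, consistent with the roughly 2 GB/s ceiling for an x2 link.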

Given the PCIe limit, they should also reach around 200k IOPS. I get 90k at most. Just curious.

-

@nmym I can't check it myself at the moment because I don't have such spare hardware.

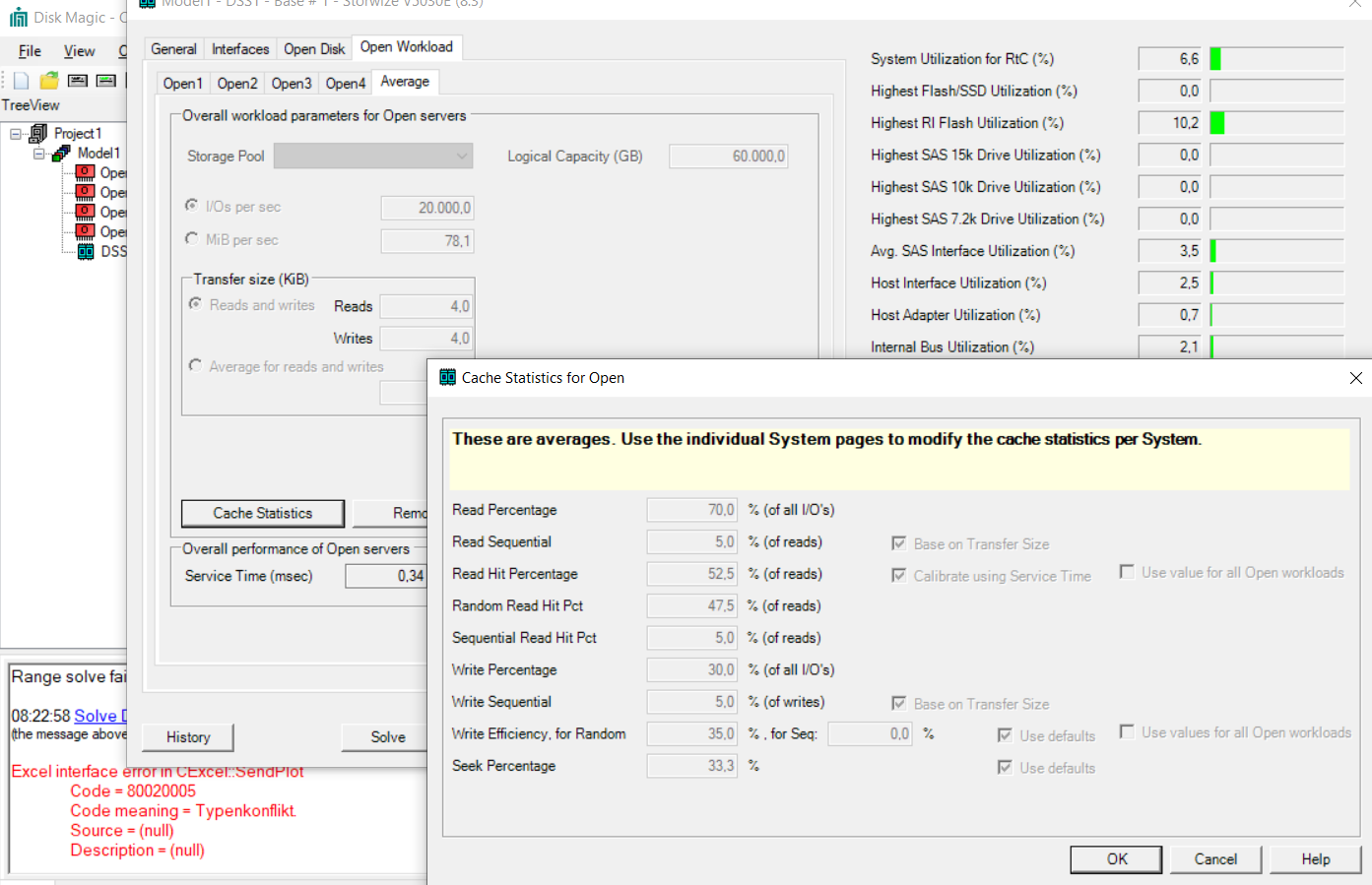

I am also designing our new SR655 pool with an all-flash Storwize V5030E on a Fibre Channel SAN. I only need 90 TB at 90k IOPS, so this is not about the highest storage performance. In my opinion, it's more important to have thin provisioning on the storage side and SCSI UNMAP support in the hypervisor.

But that's my opinion, and Olivier sees it quite differently. That's legitimate, too.

We only use local storage in very few projects.

This test was only about the validation of the CPUs.

Is there any news from the Xen devs regarding Rome? I can't see any limitations, bugs, or failures.

I am happy to do further tests. Write to me if you are interested in anything.

-

Interesting. Yes we all have different requirements.

We only use local storage to reduce complexity and cost.

As a consequence, moving VMs between servers takes longer than it would with a SAN (as the whole disk needs to be moved as well).

This is the main reason we want performance.

-

@Silencer80

I've been experimenting a bit because I had a hard time reaching your PassMark CPU numbers.

It turned out this was related to C-states not being disabled in the BIOS.

After disabling them, I got the same numbers as you in a VM. Very nice.

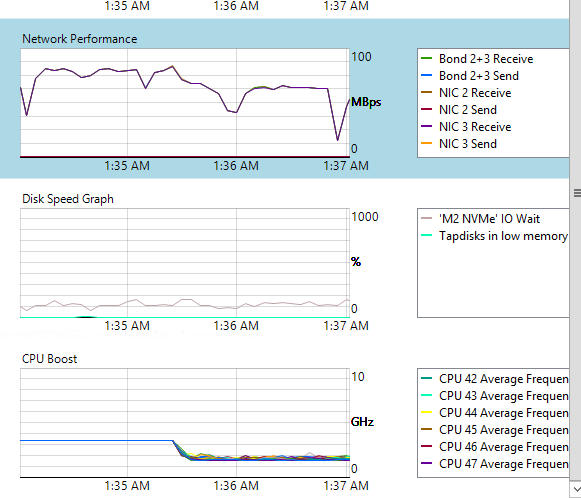

I also ran network and disk speed tests, and, interestingly, bandwidth is heavily dependent on this. I used `xenpm set-scaling-governor performance` to set the maximum CPU clock and `xenpm set-scaling-governor ondemand` to reset it to the default (both commands print tons of errors in the CLI, but somehow they work).

When I set this to `ondemand`, my network speed drops. It's somewhat ironic: I need this speed, but the process doesn't generate enough demand to raise the clock, resulting in slower network transfers.

Is there any way to automate this? (I will probably turn on performance mode when I need it, though it's not really the best way of achieving this.)

-
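On the question of automating the switch: one low-tech option is to pin the `performance` governor only around a heavy job and restore `ondemand` afterwards. Below is a minimal Python sketch of that, assuming it runs as root in dom0 with `xenpm` on the PATH. `set_governor` and `performance_mode` are hypothetical helper names; the only xenpm subcommand used is the `set-scaling-governor` one quoted in this thread, called without a CPU id so it applies to all CPUs.

```python
import subprocess
from contextlib import contextmanager
from typing import Iterator, List

# Only the two governors used in this thread; xenpm may accept others.
GOVERNORS = {"performance", "ondemand"}


def set_governor(governor: str, dry_run: bool = False) -> List[str]:
    """Run `xenpm set-scaling-governor <governor>` for all CPUs (no cpuid given)."""
    if governor not in GOVERNORS:
        raise ValueError(f"unsupported governor: {governor!r}")
    cmd = ["xenpm", "set-scaling-governor", governor]
    if not dry_run:
        # The thread reports lots of error output even when the switch works,
        # so the exit status is deliberately not turned into an exception.
        subprocess.run(cmd, check=False)
    return cmd


@contextmanager
def performance_mode(dry_run: bool = False) -> Iterator[None]:
    """Pin the performance governor for the duration of a `with` block."""
    set_governor("performance", dry_run)
    try:
        yield
    finally:
        set_governor("ondemand", dry_run)  # back to the default
```

For example, wrapping a large transfer in `with performance_mode(): ...` restores `ondemand` even if the transfer raises. The governor is a host-wide setting, so every VM on the host gets the higher clocks while the block runs.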

@nmym The Lenovo BIOS may be better tuned for optimal performance. After tuning the system by hand, I also asked Lenovo for the optimal BIOS settings and got the following answer, which gave good results:

"the current recommendation is to set the UEFI Operating Mode to "Maximum Performance".

Please check if the "EfficiencyModeEn" parameter is set to "Auto" and not to "Enabled".

There was a FW bug, which should be fixed by the end of September. (UEFI CFE105D for pre-GA HW, UEFI CFE106D for GA HW)"

Our test system is already running VMware again for a few more tests. We will send the system back to Lenovo next week.

However, we have ordered 4 systems, so I can contribute more tests in the coming weeks, this time with an optimal memory configuration (8x 64GB @ 3200MHz) and the new Storwize V5030E shared storage.

I have sized the storage as follows:

4 hosts with 2x 16Gb HBAs each, a redundant FC SAN, and storage with 8x 16Gb HBA ports distributed across both fabrics. Single-initiator zoning maps each server HBA to both storage controllers.
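To make the zoning scheme concrete, here is a minimal Python sketch that enumerates the zones for this layout. The naming (host1..host4, ctrlA/ctrlB, port labels) and the split of the 8 storage ports into 2 per controller per fabric are assumptions for illustration, not the actual configuration. Each host HBA gets its own zone (single initiator) containing the storage ports on its fabric from both controllers.

```python
from itertools import product
from typing import Dict, List, Tuple

HOSTS = [f"host{i}" for i in range(1, 5)]  # 4 hosts
FABRICS = ["A", "B"]                       # redundant FC SAN
CONTROLLERS = ["ctrlA", "ctrlB"]           # two storage controllers
PORTS_PER_CTRL_PER_FABRIC = 2              # 2 ctrl x 2 fabrics x 2 = 8 storage ports


def storage_ports(fabric: str) -> List[str]:
    """Storage target ports cabled to one fabric, from both controllers."""
    return [
        f"{ctrl}_fab{fabric}_p{n}"
        for ctrl, n in product(CONTROLLERS, range(1, PORTS_PER_CTRL_PER_FABRIC + 1))
    ]


def single_initiator_zones() -> Dict[str, Tuple[str, List[str]]]:
    """One zone per host HBA: that initiator plus the storage ports on its fabric."""
    zones = {}
    for host in HOSTS:
        # HBA 0 goes to fabric A, HBA 1 to fabric B.
        for hba_index, fabric in enumerate(FABRICS):
            initiator = f"{host}_hba{hba_index}"
            zones[f"z_{initiator}"] = (fabric, [initiator] + storage_ports(fabric))
    return zones


if __name__ == "__main__":
    for name, (fabric, members) in single_initiator_zones().items():
        print(f"fabric {fabric}: {name}: {' '.join(members)}")
```

This yields 8 zones (4 hosts x 2 HBAs), each with one initiator and four targets. Losing either a fabric or a controller still leaves every host with a path to the storage.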

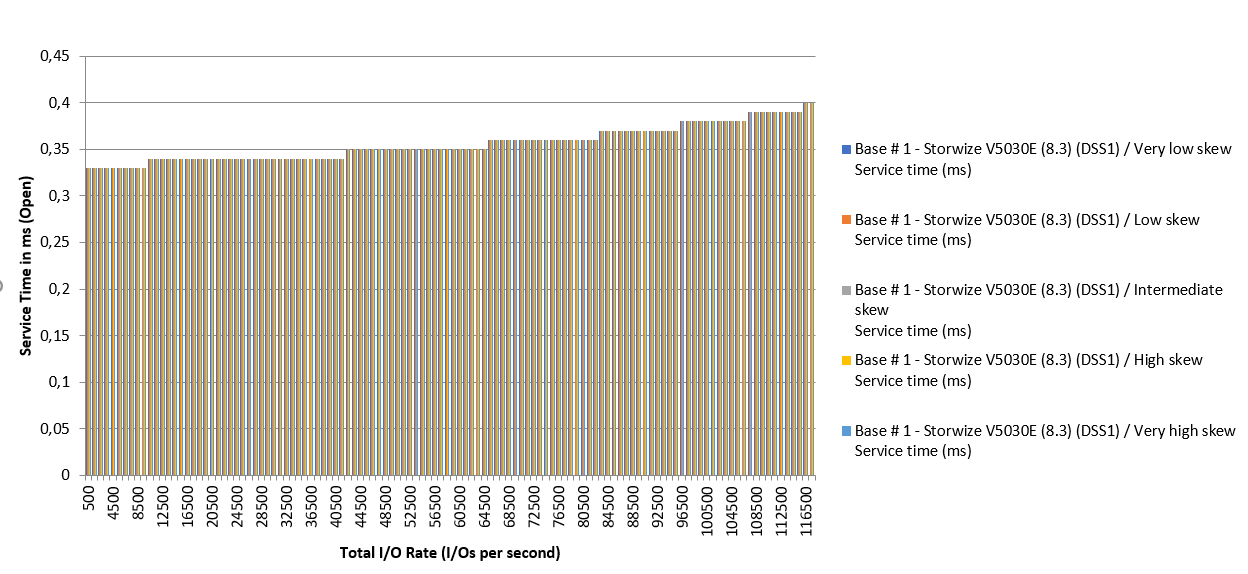

According to the sizing tools (Capacity Magic / Disk Magic), the storage system then reliably delivers 90 TB and approx. 120,000 IOPS at <0.4 ms. This meets our requirements.
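As a rough sanity check on those figures (an addition here, not output from Capacity Magic or Disk Magic), Little's law links throughput λ, mean latency W, and the average number of I/Os in flight N:

$$
N = \lambda W = 120\,000\ \mathrm{s^{-1}} \times 0.4 \times 10^{-3}\ \mathrm{s} = 48
$$

So sustaining 120,000 IOPS at a 0.4 ms mean latency means the hosts together keep about 48 I/Os outstanding; at lower latency, proportionally fewer are needed for the same rate.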

-

Before installing Xen, the first thing I change is the BIOS power profile settings. In my experience, both "Performance" and "OS Control" work pretty well. For production, I choose the latter because it hands core frequency scaling management (boost) over to Xen and saves energy when demand is low after hours.

@nmym said in AMD EPYC 7402P?:

When I set this to ondemand, my network speed drops. It's somehow ironic as I have the demand for this speed, but the process does not demand, resulting in slower network transfer

For benchmark purposes, try reducing the number of dom0 vCPUs (4 is a good value for the tests, IMHO). Since it's a high-core-count processor, 8+ dom0 cores are good for hosting 100+ VMs, but they might distort benchmark results because packet processing gets spread across all dom0 cores. With the CPU governor set to `ondemand`, the more cores you have, the less each core needs to boost. This behavior doesn't occur with `performance` because the cores never run below the base clock frequency.

-

Does your statement explicitly refer to AMD CPUs?

I still have to investigate whether this KB article also reflects the optimum for Rome CPUs.

-

No, my systems are Intel-based, but the same settings might also work for AMD processors. The only caveat is whether AMD fixed the C-state/MWAIT bug that caused spontaneous reboots on the first-generation architecture. Since Rome is a new architecture, maybe it's not affected. The only way to be sure is to enable C-states and keep an eye on it.

That Citrix KB is my go-to reference about Xen power management. Very good article.
