Ubuntu Live iso Hangs on XCP-ng 8.1.0; Guest Reports: 'watchdog: BUG: soft lockup' #371

-

I do have some other hardware that I could try later. I did notice something that I missed earlier. When I boot into the ubuntu ISO, I see a couple outputs in the console before it gets to the setup area.

[0.887324] vbd vbd-5696: 19 xenbus_dev_probe on device/vbd/5696

[1.172167] piix4_smbus 0000:00:01.3 SMBus Host Controller not enabled!Maybe this is telling for what could be going on here?

-

@wsciaroni just double checked, I see that too very early on and not for long, but then it boots.

-

Okay, so maybe that's not the problem.

It does seem very strange that I can boot the Debian 10 ISO, and a CentOS iso, but not an Ubuntu Server ISO... -

Just checked uefi too, that also works.

-

I just tested the http://releases.ubuntu.com/18.04.4/ubuntu-18.04.4-live-server-amd64.iso there was no issue from my side FUJITSU, (,PRIMERGY RX2540 M4,)

latest version xcp-ng / xen orchestra -

Hi all,

We are experimenting the same error but with XCP-ng version 8.0.

Our configuration:- XCP-ng version 8.0

- VM with Ubuntu server 18.04.1

- Server Dell PowerEdge R440 with Intel Xeon Silver 4110 (2,1GHz, 8C/16T, cache 11Mo, 9,6GT/s, 85W, Turbo, HT), mémoire DDR4 2400MHz

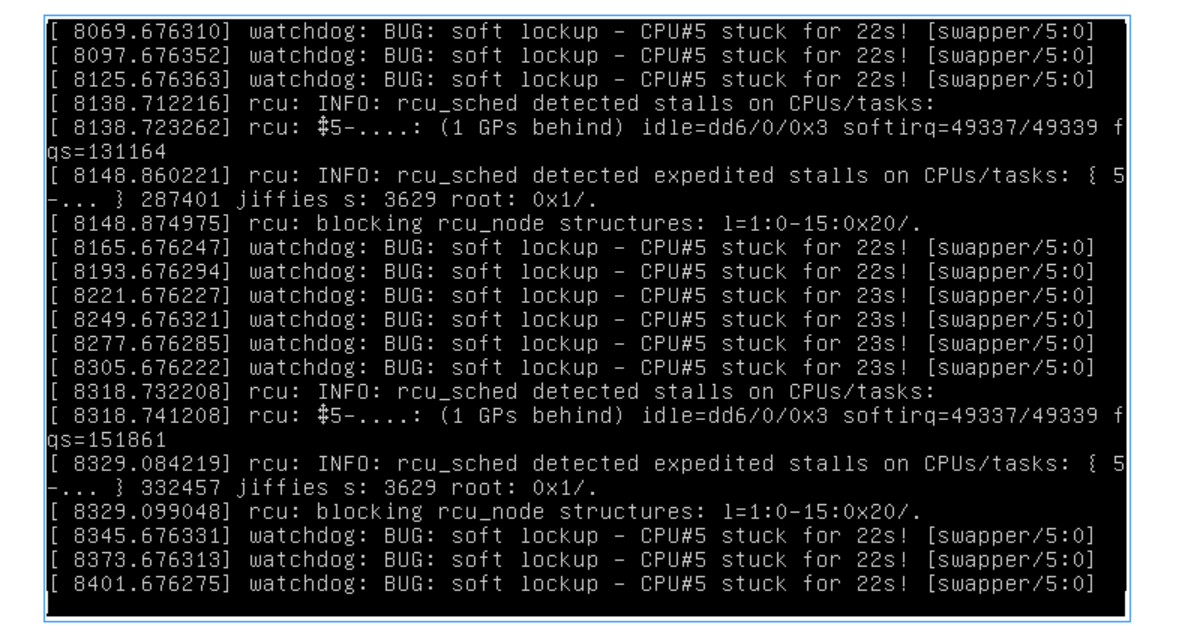

We don't have any problem deploying our VM image with Ubuntu server 18.04.1, but randomly when the VM executes we have the following error:

watchdog: BUG: soft lockup - CPU#1 stuck for ...

Then no other choice than restarting the VM.Note that we have the following warnings after the error:

rcu: INFO: rcu_sched detected expedited stalls on CPUs/tasks: ...

rcu: blocking rcu_nodes structure: ...As we are getting ready to go to production in the coming month, we will have to change hypervisor if a fix cannot be made. I'm sad to see that the error is still present in v8.1

-

As we are getting ready to go to production in the coming month, we will have to change hypervisor if a fix cannot be made. I'm sad to see that the error is still present in v8.1

If you don't report it with details, there's no way we fix a problem we can't reproduce nor spot outside one previous user here.

Obviously, we'll need more details and logs to be able to help.

edit: in other words, we aren't aware of a general problem of this sort. 8.0 (and 8.1) are used intensively by thousands of users around the world. If everyone had issues like this, we would have known. Also it would have been even spotted by Citrix before us.

So the hard task here is to understand why this problem is happening on your side. It can be a bug on XCP-ng code somewhere, due to a specific combo, but it's not widespread.

-

@olivierlambert

You are absolutely right for the reporting part.

The problem happens randomly and we haven't identified a pattern that would trigger it in a deterministic way.

Now it is reassuring that the problem seems very specific. On our side it occurs on two servers with the exact same configuration.I would be happy to report details next time it happens. As to be efficient, I would appreciate some specifics on what to report.

Regards

-

Actually I don't see the Xeon Silver 4110 in Citrix HCL for Hypervisor 8.0.0/8.0.1.

So I don't know if it is worth looking for the root cause.

However I note that wsciaroni reported CPU is listed (Xeon(R) E5-2640 v3) -

I don't think it's a problem on this specific model. Have you run a memtest on this machine?

-

Regarding logs to bring: https://xcp-ng.org/docs/troubleshooting.html

-

Hi Olivier,

I experienced the problem at 14:39:09 today (23/05/2020).

Below some data, hoping it can help.Context:

- 1 single physical machine: Server Dell PowerEdge R440 with Intel Xeon Silver 4110 (2,1GHz, 8C/16T, cache 11Mo, 9,6GT/s, 85W, Turbo, HT), mémoire DDR4 2400MHz

- XCP-ng version 8.0

- 2 imported VMs with Ubuntu server 18.04.1

- Only one VM sometimes freezes (6 CPU). The other one never does (1 CPU).

- The problem occurred when deploying a Kubernetes cluster from the other VM (using Rancher) to the one that froze. Thus, network and CPU were actively used at the time.

Note that I did that same operation around 5 times before on the exact same 2 VMs (deployment tests) with no problem. - See attached screenshot of the frozen VM console output.

Server installation notes

- Boot from a USB Key - XCP-ng 8.0. Choose "install" menu entry,

- Select a preconfigured RAID10 array to install XCP-ng.

- Select the preconfigured RAID10 array for the storage repository (keep the "thick provisioning" option),

- Network static configuration (no DNS server).

- ip: 192.168.1.10

- mask: 255.255.255.0

- gateway: 192.168.1.1

- Put a random ip for the DNS server (e.g. 172.17.1.4),

- No NTP server.

- No additional pack installed.

Frozen VM installation notes

- CPU=6,

- Memory=20GB

- "Place all imported virtual disks on this target SR:"

- Map the VM network interface to the active server interface.

- BIOS Boot

- Don't use Operating System Fixup

- Network static configuration.

- k8s-node01: 192.168.1.110

- mask: 255.255.255.0

- gateway: 192.168.1.1

- Autostart on server boot

- Storage 80GB + 2 more partitions added

XCP-ng server log files

- The logs below (all from /var/log/) were active during/after the problem occurred.

audit.log

May 23 14:36:09 xcp-ng-yqzvwsgr xapi: [20200523T12:36:09.118Z|audit|xcp-ng-yqzvwsgr|1377881 INET :::80|handler:http/rrd_updates D:64870ad12cf9|audit] ('trackid=4cb8b11fe9c6adddea3b4e09e5503449' 'LOCAL_SUPERUSER' '' 'ALLOWED' 'OK' 'HTTP' 'http/rrd_updates' ())

May 23 14:39:51 xcp-ng-yqzvwsgr xapi: [20200523T12:39:51.967Z|audit|xcp-ng-yqzvwsgr|1377931 INET :::80|handler:http/connect_console D:69721c42e847|audit] ('trackid=ebde3ebf745f529e150f1d1e5657ce01' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'HTTP' 'http/connect_console' (('ref' '' 'ea88f58c-fc4b-844b-e868-7493d84a5345' 'OpaqueRef:eff0510e-22fb-4a41-aeda-503d5f2922eb')))

May 23 14:39:51 xcp-ng-yqzvwsgr xapi: [20200523T12:39:51.967Z|audit|xcp-ng-yqzvwsgr|1377931 INET :::80|check:http/connect_console/host_console D:014fa9e6b374|audit] ('trackid=ebde3ebf745f529e150f1d1e5657ce01' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'HTTP' 'http/connect_console/host_console' (('ref' '' 'ea88f58c-fc4b-844b-e868-7493d84a5345' 'OpaqueRef:eff0510e-22fb-4a41-aeda-503d5f2922eb')))

May 23 14:40:00 xcp-ng-yqzvwsgr xapi: [20200523T12:40:00.597Z|audit|xcp-ng-yqzvwsgr|1377932 INET :::80|handler:http/connect_console D:63be5cf43224|audit] ('trackid=ebde3ebf745f529e150f1d1e5657ce01' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'HTTP' 'http/connect_console' ())

May 23 14:41:12 xcp-ng-yqzvwsgr xapi: [20200523T12:41:12.293Z|audit|xcp-ng-yqzvwsgr|1377948 INET :::80|handler:http/rrd_updates D:d00eb5d5099a|audit] ('trackid=4cb8b11fe9c6adddea3b4e09e5503449' 'LOCAL_SUPERUSER' '' 'ALLOWED' 'OK' 'HTTP' 'http/rrd_updates' ())xenstored-access.log

May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A15 rm /local/domain/22/data/cant_suspend_reason

May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A15 rm /local/domain/22/data/cant_suspend_reason

May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A1886 write /local/domain/22/data/set_clipboard [omitted]

May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A1886 w event /local/domain/22/data/set_clipboard set_clipboarddaemon.log

May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Started Session c23294 of user root.

May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Starting Session c23294 of user root.

May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Started Session c23295 of user root.

May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Starting Session c23295 of user root.cron

May 23 14:40:01 xcp-ng-yqzvwsgr CROND[11920]: (root) CMD (/usr/lib64/sa/sa1 1 1)

May 23 14:40:01 xcp-ng-yqzvwsgr CROND[11921]: (root) CMD (/opt/xensource/libexec/xapi-logrotate.sh)

May 23 14:45:01 xcp-ng-yqzvwsgr CROND[13202]: (root) CMD (python -c 'import imp; vmss = imp.load_source("vmss", "/etc/xapi.d/plugins/vmss"); vmss.trigger_schedule_snapshots();')secure

May 23 14:39:40 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1386

May 23 14:39:40 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: connect_blocking: connected 127.0.0.1:80

May 23 14:39:40 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: Service [xapi] connected remote server from 127.0.0.1:36266

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215650048]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1387

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215650048]: connect_blocking: connected 127.0.0.1:80

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215650048]: Service [xapi] connected remote server from 127.0.0.1:36268

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215871232]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1388

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215871232]: connect_blocking: connected 127.0.0.1:80

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215871232]: Service [xapi] connected remote server from 127.0.0.1:36270

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1389

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: connect_blocking: connected 127.0.0.1:80

May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: Service [xapi] connected remote server from 127.0.0.1:36272

May 23 14:39:51 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1393

May 23 14:39:51 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: connect_blocking: connected 127.0.0.1:80

May 23 14:39:51 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: Service [xapi] connected remote server from 127.0.0.1:36274

May 23 14:40:00 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216162048]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1394

May 23 14:40:00 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216162048]: connect_blocking: connected 127.0.0.1:80

May 23 14:40:00 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216162048]: Service [xapi] connected remote server from 127.0.0.1:36278

May 23 14:40:18 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: Connection closed: 1231524 byte(s) sent to SSL, 574 byte(s) sent to socket

May 23 14:41:29 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: Connection closed: 1926969 byte(s) sent to SSL, 3880 byte(s) sent to socket

May 23 14:41:29 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: Connection closed: 259 byte(s) sent to SSL, 318 byte(s) sent to socketxensource.log

May 23 14:38:43 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377916 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:89574dcb797d created by task D:6cdc379c15f1

May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377917 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:c011a082589a created by task D:6cdc379c15f1

May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377917 UNIX /var/lib/xcp/xapi|session.logout D:76826a82de67|xapi] Session.destroy trackid=8ad18d114c73b897cffa86b75b72bb2c

May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377918 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:edd9206267d2 created by task D:6cdc379c15f1

May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377918 UNIX /var/lib/xcp/xapi|session.slave_login D:75241f399147|xapi] Session.create trackid=02d0588ff3334647fb2a39319b04244f pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377919 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:c6c40733d126 created by task D:75241f399147

May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377920 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:2ceaee79a5d2 created by task D:6cdc379c15f1

May 23 14:39:28 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|308 |xapi events D:3647b44d7f38|dummytaskhelper] task timeboxed_rpc D:dececb4b9201 created by task D:3647b44d7f38

May 23 14:39:28 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377921 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:e96e0716c10a created by task D:3647b44d7f38

May 23 14:39:41 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377922 INET :::80|session.login_with_password D:57713482620e|xapi] Success: local auth, user root from HTTP request from Internet with User-Agent: XenCenter/8.0.0

May 23 14:39:41 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377922 INET :::80|session.login_with_password D:57713482620e|xapi] Session.create trackid=ebde3ebf745f529e150f1d1e5657ce01 pool=false uname=root originator=XenCenter/8.0.0 is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

May 23 14:39:41 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377923 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:4d57f00d74bb created by task D:57713482620e

May 23 14:39:43 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377922 INET :::80|event.from D:dc53f54f253f|xapi] get_since_for_events: last_in_memory (6979) >= since (0): Using slow message lookup

May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377927 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:a145e5e8112f created by task D:6cdc379c15f1

May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377927 UNIX /var/lib/xcp/xapi|session.logout D:a503679fa7d8|xapi] Session.destroy trackid=02d0588ff3334647fb2a39319b04244f

May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377928 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:a8cd0095ecc3 created by task D:6cdc379c15f1

May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377928 UNIX /var/lib/xcp/xapi|session.slave_login D:8ac76e7704d5|xapi] Session.create trackid=cc258e716a3c1a3f08cb8aa3ed55684c pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377929 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:a6b429562b56 created by task D:8ac76e7704d5

...- The logs below (all from /var/log/) were not active during/after the problem occurred. I tail them because they are mentioned in the troubleshooting page.

SMlog

May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13215] lock: opening lock file /var/lock/sm/aafae74b-fcaf-494c-bf1a-c482603db26b/vdi

May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13215] blktap2.deactivate

May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13215] lock: acquired /var/lock/sm/aafae74b-fcaf-494c-bf1a-c482603db26b/vdi

May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13232] pread SUCCESS

May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13224] pread SUCCESS

May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13232] vdi_deactivate {'sr_uuid': 'ffbb72f2-abd2-818a-937c-2cde97929bf0', 'subtask_of': 'DummyRef:|c059df81-120b-4b97-af44-51f6c7228ede|VDI.deactivate', 'vdi_ref': 'OpaqueRef:34a8263a-80f6-4435-99b8-381490aa87de', 'vdi_on_boot': 'persist', 'args': [], 'vdi_location': 'c3c1bf34-a6cd-4919-b7e5-80e47da03f88', 'host_ref': 'OpaqueRef:37b319c9-5b88-407d-8325-a40f957a81d5', 'session_ref': 'OpaqueRef:ae70fd5a-c67e-4686-b712-0a928ce34898', 'device_config': {'device': '/dev/disk/by-id/scsi-364cd98f0622a1100250a58db11b8c2ae-part3', 'SRmaster': 'true'}, 'command': 'vdi_deactivate', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:9ddadcdc-5bda-4b25-ab6f-0543e9295214', 'vdi_uuid': 'c3c1bf34-a6cd-4919-b7e5-80e47da03f88'}kern.log

May 23 12:42:18 xcp-ng-yqzvwsgr kernel: [6904165.419855] device tap21.0 left promiscuous mode

May 23 12:42:19 xcp-ng-yqzvwsgr kernel: [6904167.073971] device vif21.0 left promiscuous mode

May 23 12:42:24 xcp-ng-yqzvwsgr kernel: [6904171.425112] block tda: sector-size: 512/512 capacity: 209715200

May 23 12:42:24 xcp-ng-yqzvwsgr kernel: [6904171.657529] block tdb: sector-size: 512/512 capacity: 419430400

May 23 12:42:24 xcp-ng-yqzvwsgr kernel: [6904171.876633] block tdc: sector-size: 512/512 capacity: 167780352

May 23 12:42:25 xcp-ng-yqzvwsgr kernel: [6904172.463214] device vif22.0 entered promiscuous mode

May 23 12:42:25 xcp-ng-yqzvwsgr kernel: [6904172.786716] device tap22.0 entered promiscuous mode$ ll -rt /var/log/installer/

...

-rw-r--r-- 1 root root 524684 Nov 18 2019 install-log$ ll -rt /var/crash/

total 0xen-bugtool --yestoall

/var/opt/xen/bug-report/bug-report-20200523145728.tar.bz2

I'll send you this file upon request. -

Hi!

Thanks for the feedback. However, please edit your post to use Markdown syntax to get better visibility on logs files/code blocks.

Then, you should always have a NTP server configured in your hosts, it's very important.

-

Hi Olivier,

I understand the importance of an NTP server. In the industry I am in, there is usually no Internet access when we deploy on site (for security reasons).

I'll ask but I don't think these networks provide a NTP server.

Do you think this could be somehow linked to the problem we are experiencing?Below are the logs with better formatting. Let me know if you expect a specific one.

XCP-NG SERVER LOG FILES

The logs below (all from /var/log/) were active during/after the problem occurred.

audit.log

May 23 14:36:09 xcp-ng-yqzvwsgr xapi: [20200523T12:36:09.118Z|audit|xcp-ng-yqzvwsgr|1377881 INET :::80|handler:http/rrd_updates D:64870ad12cf9|audit] ('trackid=4cb8b11fe9c6adddea3b4e09e5503449' 'LOCAL_SUPERUSER' '' 'ALLOWED' 'OK' 'HTTP' 'http/rrd_updates' ()) May 23 14:39:51 xcp-ng-yqzvwsgr xapi: [20200523T12:39:51.967Z|audit|xcp-ng-yqzvwsgr|1377931 INET :::80|handler:http/connect_console D:69721c42e847|audit] ('trackid=ebde3ebf745f529e150f1d1e5657ce01' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'HTTP' 'http/connect_console' (('ref' '' 'ea88f58c-fc4b-844b-e868-7493d84a5345' 'OpaqueRef:eff0510e-22fb-4a41-aeda-503d5f2922eb'))) May 23 14:39:51 xcp-ng-yqzvwsgr xapi: [20200523T12:39:51.967Z|audit|xcp-ng-yqzvwsgr|1377931 INET :::80|check:http/connect_console/host_console D:014fa9e6b374|audit] ('trackid=ebde3ebf745f529e150f1d1e5657ce01' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'HTTP' 'http/connect_console/host_console' (('ref' '' 'ea88f58c-fc4b-844b-e868-7493d84a5345' 'OpaqueRef:eff0510e-22fb-4a41-aeda-503d5f2922eb'))) May 23 14:40:00 xcp-ng-yqzvwsgr xapi: [20200523T12:40:00.597Z|audit|xcp-ng-yqzvwsgr|1377932 INET :::80|handler:http/connect_console D:63be5cf43224|audit] ('trackid=ebde3ebf745f529e150f1d1e5657ce01' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'HTTP' 'http/connect_console' ()) May 23 14:41:12 xcp-ng-yqzvwsgr xapi: [20200523T12:41:12.293Z|audit|xcp-ng-yqzvwsgr|1377948 INET :::80|handler:http/rrd_updates D:d00eb5d5099a|audit] ('trackid=4cb8b11fe9c6adddea3b4e09e5503449' 'LOCAL_SUPERUSER' '' 'ALLOWED' 'OK' 'HTTP' 'http/rrd_updates' ())xenstored-access.log

May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A15 rm /local/domain/22/data/cant_suspend_reason May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A15 rm /local/domain/22/data/cant_suspend_reason May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A1886 write /local/domain/22/data/set_clipboard [omitted] May 23 14:40:00 xcp-ng-yqzvwsgr oxenstored: A1886 w event /local/domain/22/data/set_clipboard set_clipboarddaemon.log

May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Started Session c23294 of user root. May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Starting Session c23294 of user root. May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Started Session c23295 of user root. May 23 14:40:01 xcp-ng-yqzvwsgr systemd[1]: Starting Session c23295 of user root.cron

May 23 14:40:01 xcp-ng-yqzvwsgr CROND[11920]: (root) CMD (/usr/lib64/sa/sa1 1 1) May 23 14:40:01 xcp-ng-yqzvwsgr CROND[11921]: (root) CMD (/opt/xensource/libexec/xapi-logrotate.sh) May 23 14:45:01 xcp-ng-yqzvwsgr CROND[13202]: (root) CMD (python -c 'import imp; vmss = imp.load_source("vmss", "/etc/xapi.d/plugins/vmss"); vmss.trigger_schedule_snapshots();')secure

May 23 14:39:40 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1386 May 23 14:39:40 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: connect_blocking: connected 127.0.0.1:80 May 23 14:39:40 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: Service [xapi] connected remote server from 127.0.0.1:36266 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215650048]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1387 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215650048]: connect_blocking: connected 127.0.0.1:80 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215650048]: Service [xapi] connected remote server from 127.0.0.1:36268 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215871232]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1388 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215871232]: connect_blocking: connected 127.0.0.1:80 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215871232]: Service [xapi] connected remote server from 127.0.0.1:36270 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1389 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: connect_blocking: connected 127.0.0.1:80 May 23 14:39:44 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: Service [xapi] connected remote server from 127.0.0.1:36272 May 23 14:39:51 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1393 May 23 14:39:51 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: connect_blocking: connected 127.0.0.1:80 May 23 14:39:51 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: Service [xapi] connected remote server from 127.0.0.1:36274 May 23 14:40:00 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216162048]: Service [xapi] accepted connection from ::ffff:172.17.1.2:1394 May 23 14:40:00 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216162048]: connect_blocking: connected 127.0.0.1:80 May 23 14:40:00 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216162048]: Service [xapi] connected remote server from 127.0.0.1:36278 May 23 14:40:18 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215981824]: Connection closed: 1231524 byte(s) sent to SSL, 574 byte(s) sent to socket May 23 14:41:29 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148215760640]: Connection closed: 1926969 byte(s) sent to SSL, 3880 byte(s) sent to socket May 23 14:41:29 xcp-ng-yqzvwsgr stunnel: LOG5[3142:140148216092416]: Connection closed: 259 byte(s) sent to SSL, 318 byte(s) sent to socketxensource.log

May 23 14:38:43 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377916 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:89574dcb797d created by task D:6cdc379c15f1 May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377917 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:c011a082589a created by task D:6cdc379c15f1 May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377917 UNIX /var/lib/xcp/xapi|session.logout D:76826a82de67|xapi] Session.destroy trackid=8ad18d114c73b897cffa86b75b72bb2c May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377918 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:edd9206267d2 created by task D:6cdc379c15f1 May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377918 UNIX /var/lib/xcp/xapi|session.slave_login D:75241f399147|xapi] Session.create trackid=02d0588ff3334647fb2a39319b04244f pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377919 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:c6c40733d126 created by task D:75241f399147 May 23 14:39:14 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377920 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:2ceaee79a5d2 created by task D:6cdc379c15f1 May 23 14:39:28 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|308 |xapi events D:3647b44d7f38|dummytaskhelper] task timeboxed_rpc D:dececb4b9201 created by task D:3647b44d7f38 May 23 14:39:28 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377921 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:e96e0716c10a created by task D:3647b44d7f38 May 23 14:39:41 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377922 INET :::80|session.login_with_password D:57713482620e|xapi] Success: local auth, user root from HTTP request from Internet with User-Agent: XenCenter/8.0.0 May 23 14:39:41 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377922 INET :::80|session.login_with_password D:57713482620e|xapi] Session.create trackid=ebde3ebf745f529e150f1d1e5657ce01 pool=false uname=root originator=XenCenter/8.0.0 is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 May 23 14:39:41 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377923 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:4d57f00d74bb created by task D:57713482620e May 23 14:39:43 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377922 INET :::80|event.from D:dc53f54f253f|xapi] get_since_for_events: last_in_memory (6979) >= since (0): Using slow message lookup May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377927 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:a145e5e8112f created by task D:6cdc379c15f1 May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377927 UNIX /var/lib/xcp/xapi|session.logout D:a503679fa7d8|xapi] Session.destroy trackid=02d0588ff3334647fb2a39319b04244f May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377928 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:a8cd0095ecc3 created by task D:6cdc379c15f1 May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [ info|xcp-ng-yqzvwsgr|1377928 UNIX /var/lib/xcp/xapi|session.slave_login D:8ac76e7704d5|xapi] Session.create trackid=cc258e716a3c1a3f08cb8aa3ed55684c pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 May 23 14:39:45 xcp-ng-yqzvwsgr xapi: [debug|xcp-ng-yqzvwsgr|1377929 UNIX /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:a6b429562b56 created by task D:8ac76e7704d5 ...The logs below (all from /var/log/) were not active during/after the problem occurred. I tail them because they are mentioned in the troubleshooting page.

SMlogMay 23 12:42:18 xcp-ng-yqzvwsgr SM: [13215] lock: opening lock file /var/lock/sm/aafae74b-fcaf-494c-bf1a-c482603db26b/vdi May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13215] blktap2.deactivate May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13215] lock: acquired /var/lock/sm/aafae74b-fcaf-494c-bf1a-c482603db26b/vdi May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13232] pread SUCCESS May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13224] pread SUCCESS May 23 12:42:18 xcp-ng-yqzvwsgr SM: [13232] vdi_deactivate {'sr_uuid': 'ffbb72f2-abd2-818a-937c-2cde97929bf0', 'subtask_of': 'DummyRef:|c059df81-120b-4b97-af44-51f6c7228ede|VDI.deactivate', 'vdi_ref': 'OpaqueRef:34a8263a-80f6-4435-99b8-381490aa87de', 'vdi_on_boot': 'persist', 'args': [], 'vdi_location': 'c3c1bf34-a6cd-4919-b7e5-80e47da03f88', 'host_ref': 'OpaqueRef:37b319c9-5b88-407d-8325-a40f957a81d5', 'session_ref': 'OpaqueRef:ae70fd5a-c67e-4686-b712-0a928ce34898', 'device_config': {'device': '/dev/disk/by-id/scsi-364cd98f0622a1100250a58db11b8c2ae-part3', 'SRmaster': 'true'}, 'command': 'vdi_deactivate', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:9ddadcdc-5bda-4b25-ab6f-0543e9295214', 'vdi_uuid': 'c3c1bf34-a6cd-4919-b7e5-80e47da03f88'}kern.log

May 23 12:42:18 xcp-ng-yqzvwsgr kernel: [6904165.419855] device tap21.0 left promiscuous mode May 23 12:42:19 xcp-ng-yqzvwsgr kernel: [6904167.073971] device vif21.0 left promiscuous mode May 23 12:42:24 xcp-ng-yqzvwsgr kernel: [6904171.425112] block tda: sector-size: 512/512 capacity: 209715200 May 23 12:42:24 xcp-ng-yqzvwsgr kernel: [6904171.657529] block tdb: sector-size: 512/512 capacity: 419430400 May 23 12:42:24 xcp-ng-yqzvwsgr kernel: [6904171.876633] block tdc: sector-size: 512/512 capacity: 167780352 May 23 12:42:25 xcp-ng-yqzvwsgr kernel: [6904172.463214] device vif22.0 entered promiscuous mode May 23 12:42:25 xcp-ng-yqzvwsgr kernel: [6904172.786716] device tap22.0 entered promiscuous mode$ ll -rt /var/crash/ total 0I'll send you the below report file or the install-log one upon request.

xen-bugtool --yestoall /var/opt/xen/bug-report/bug-report-20200523145728.tar.bz2 -

If you don't want to rely on an external NTP, you can have a local NTP machine, to at least sync all hosts internally.

It's more important to have the same time on the same site for every machine than having the "right universal time" only partially (not on all machines)

-

The "CPU stuck for 22s" message reminds me of another user how had a similar message, not in a VM but in dom0 logs themselves. This was workarounded by disabling C-STATES.

-

Hi stormi and thank you for the information.

I'll check the C-STATES status and will disable it if enable next time I will be on site (home working for now).

I'll let you know the output, especially if the problem stops occurring after a few weeks.

Any other suggestion is of course welcome. -

So on our servers, the C-STATES was already disabled.

We are following another lead: to upgrade the linux Kernel to 5.4 (see https://bugzilla.kernel.org/show_bug.cgi?id=204071).

We won't be able to test before sometimes but I'll let you know. If this is the problem then this is not related with XCP-ng.

Sorry for the late feedback. -

The final note on our problem:

- It was not linked to the Linux kernel nor XCP-ng

- The VM image we were using was built from an Azure VM and contained some Hyper-V libraries. They were the cause of the instability.

Thus again, not a XCP-ng problem. Sorry for the inconvenience and thank you for your support.

-

@nbaudet thanks a lot for your feedback!