CentOS 8 VM reboots under IO load

-

Hello everyone,

I've got a strange issue on my XCP-ng installation at home.

Short story:

A CentOS 8 VM of mine keeps crashing whenever I perform updates or put it under IO load (more on this later). I seem to have the same issue with a Windows Server 2019 VM, which also crashes sometimes while performing updates (though I am unable to reproduce this consistently). A brand new CO8 VM does not have this issue, nor do my older CO VMs (5 and 6) on the same host.

Symptoms:

When I say crashing, from the XO console for the CO8 VM it simply looks like a reboot. I lose SSH and I see the VM rebooting, with no error messages or anything. There is nothing in the VM's logs following the crash either. I tried finding logs in XCP-ng, and either they're empty or I'm not looking in the right place.

The VM is not running any services/workload either. The crash is reproducible whenever I run a yum update or an IO benchmark (such as fio).

Troubleshooting that I did:

At first, I suspected RAM issues. I ran memtest86 overnight with no errors reported. I even swapped in the RAM from another working computer, but the same issues happened. So I do not suspect bad RAM.

The XCP-ng install is running in software RAID1 (configured by the XCP-ng installer) over a pair of RAID edition SATA disks. I do not suspect the disks are bad, as other VMs have no issues, nor are there any errors reported in the logs.

I then thought perhaps this was a bug with XCP-ng 8.1, so I upgraded to 8.2 recently. Same issue persists with no difference.

I also doubt that it's a hardware problem in general as my other CentOS 5 and CentOS 6 VMs run rock solid, no matter how hard I hit the IO. A brand new CO8 VM was also able to complete IO benchmarks without crashing.

Nothing crazy was done on the crashing CO8 VM either; it's running the stock CO kernel. I even uninstalled the Xen guest tools in case the issue was with them.

My question is as follows:

What could I do to troubleshoot this further? Does XCP-ng have a log that could give me clues?

I'm happy that this is happening in my home testing environment and not production, but I'd like to resolve this issue and gain insight into how I could troubleshoot this if I'm ever faced with such a thing in prod.

Thanks!

-

Hi!

Have you checked https://xcp-ng.org/docs/troubleshooting.html ?

-

I am experiencing the same problem with a CentOS 8 Stream VM. Did you find a solution?

-

Anything in the logs? E.g. xl dmesg or dmesg when the VM crashes?

-

@olivierlambert I was experiencing the same problem on a centos8 host. I could always reproduce the crash by triggering an rsync of a 10GB folder. I was also getting these lines in /var/log/xensource.log:

Nov 16 17:46:30 bm-ve-srv02 xenopsd-xc: [debug|bm-ve-srv02|39 |Async.VM.clean_shutdown R:2f7f9c937513|xenops] Device.Generic.hard_shutdown_request frontend (domid=37 | kind=vif | devid=1); backend (domid=0 | kind=vif | devid=1)

I could work around it by setting a fixed value for memory as suggested in post https://xcp-ng.org/forum/topic/4176/vm-keep-rebooting

In the "Advanced" tab for the VM I had "Memory Limits >> Dynamic 2GB/16GB"

I have changed it to "Memory Limits >> Dynamic 16GB/16GB" and the machine doesn't crash anymore when I trigger the rsync.
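The same change can probably be made from the host's command line instead of the "Advanced" tab. Here is a minimal sketch, assuming the `xe vm-memory-dynamic-range-set` command is available on your XCP-ng version and that the VM's static maximum is at least the size you pick. The helper name `fixed_memory_cmd` and the UUID placeholder are made up for illustration; the function only prints the command so you can review it before running it.

```shell
#!/bin/sh
# Print (do not run) an xe command that pins a VM's dynamic memory range
# to a single value, i.e. dynamic min == dynamic max.
# ASSUMPTION: fixed_memory_cmd is a hypothetical helper, not part of xe.
fixed_memory_cmd() {
    vm_uuid=$1
    gib=$2
    # xe accepts memory sizes in bytes, so convert GiB to bytes.
    bytes=$(( gib * 1024 * 1024 * 1024 ))
    printf 'xe vm-memory-dynamic-range-set uuid=%s min=%s max=%s\n' \
        "$vm_uuid" "$bytes" "$bytes"
}

# Example: show the command for a 16 GiB fixed allocation.
# fixed_memory_cmd <vm-uuid> 16
```

Printing instead of executing is deliberate: memory limits are easy to get wrong, and the printed line can be pasted into a dom0 shell once it looks right.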

-

Probably a problem with dynamic memory allocation and free memory space available/used by something else. Did you have anything in xl dmesg?

-

@olivierlambert indeed, I had useful information in xl dmesg:

(XEN) [4940621.304592] p2m_pod_demand_populate: Dom34 out of PoD memory! (tot=2097181 ents=2097120 dom0)
(XEN) [4940621.304599] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4940846.207706] p2m_pod_demand_populate: Dom35 out of PoD memory! (tot=2097182 ents=2097120 dom35)
(XEN) [4940846.207716] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4940846.207718] Domain 35 (vcpu#3) crashed on cpu#12:
(XEN) [4940846.207721] ----[ Xen-4.7.6-6.9.xcpng x86_64 debug=n Not tainted ]----
(XEN) [4940846.207723] CPU: 12
(XEN) [4940846.207725] RIP: 0010:[<ffffffff91f6a639>]
(XEN) [4940846.207726] RFLAGS: 0000000000010206 CONTEXT: hvm guest (d35v3)
(XEN) [4940846.207729] rax: 0000000000000400 rbx: 0000000001933000 rcx: 0000000000000c00
(XEN) [4940846.207731] rdx: 0000000000000c00 rsi: 0000000000000000 rdi: ffff950686600400
(XEN) [4940846.207733] rbp: 0000000000000400 rsp: ffffabd642883b98 r8: 0000000000001000
(XEN) [4940846.207734] r9: ffff950686600400 r10: 0000000000000000 r11: 0000000000001000
(XEN) [4940846.207736] r12: ffffabd642883cd0 r13: ffff950630d5d1f0 r14: 0000000000000000
(XEN) [4940846.207737] r15: ffffeb95cc198000 cr0: 0000000080050033 cr4: 00000000007706e0
(XEN) [4940846.207739] cr3: 000000028fa52002 cr2: 0000560144fb4fa0
(XEN) [4940846.207740] fsb: 00007f58e207fb80 gsb: ffff95077d6c0000 gss: 0000000000000000
(XEN) [4940846.207742] ds: 0000 es: 0000 fs: 0000 gs: 0000 ss: 0018 cs: 0010
(XEN) [4940846.208059] p2m_pod_demand_populate: Dom35 out of PoD memory! (tot=2097182 ents=2097120 dom35)
(XEN) [4940846.208067] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4940846.208380] p2m_pod_demand_populate: Dom35 out of PoD memory! (tot=2097182 ents=2097120 dom35)
(XEN) [4940846.208383] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4940846.208689] p2m_pod_demand_populate: Dom35 out of PoD memory! (tot=2097182 ents=2097120 dom35)
(XEN) [4940846.208691] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941014.518002] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097182 ents=2097120 dom36)
(XEN) [4941014.518009] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941014.518011] Domain 36 (vcpu#1) crashed on cpu#8:
(XEN) [4941014.518014] ----[ Xen-4.7.6-6.9.xcpng x86_64 debug=n Not tainted ]----
(XEN) [4941014.518016] CPU: 8
(XEN) [4941014.518018] RIP: 0010:[<ffffffffae56a639>]
(XEN) [4941014.518019] RFLAGS: 0000000000010206 CONTEXT: hvm guest (d36v1)
(XEN) [4941014.518022] rax: 0000000000000400 rbx: 0000000000833000 rcx: 0000000000000c00
(XEN) [4941014.518024] rdx: 0000000000000c00 rsi: 0000000000000000 rdi: ffff9309f3efe400
(XEN) [4941014.518026] rbp: 0000000000000400 rsp: ffffa9cc42ec7b98 r8: 0000000000001000
(XEN) [4941014.518027] r9: ffff9309f3efe400 r10: 0000000000000000 r11: 0000000000001000
(XEN) [4941014.518029] r12: ffffa9cc42ec7cd0 r13: ffff9309c48073f0 r14: 0000000000000000
(XEN) [4941014.518031] r15: ffffe851cccfbf80 cr0: 0000000080050033 cr4: 00000000007706e0
(XEN) [4941014.518032] cr3: 000000010a57e001 cr2: 0000558ea0189328
(XEN) [4941014.518034] fsb: 00007fd2b9c1cb80 gsb: ffff930abd640000 gss: 0000000000000000
(XEN) [4941014.518035] ds: 0000 es: 0000 fs: 0000 gs: 0000 ss: 0018 cs: 0010
(XEN) [4941014.518373] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097182 ents=2097120 dom36)
(XEN) [4941014.518376] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941014.518703] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097182 ents=2097120 dom36)
(XEN) [4941014.518705] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941015.091088] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097181 ents=2097120 dom0)
(XEN) [4941015.091098] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941015.091453] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097181 ents=2097120 dom0)
(XEN) [4941015.091456] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941019.236252] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097181 ents=2097120 dom0)
(XEN) [4941019.236262] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941019.237006] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097181 ents=2097120 dom0)
(XEN) [4941019.237013] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02
(XEN) [4941019.237301] p2m_pod_demand_populate: Dom36 out of PoD memory! (tot=2097181 ents=2097120 dom0)
(XEN) [4941019.237303] domain_crash called from p2m_pod_demand_populate+0x76a/0xb02

Thanks for your help.

-

That's pretty clear. Your host didn't have enough "populate on demand" (PoD) memory, which is used for dynamic memory. So the domain crashed when it tried to get more memory on the fly.
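If the Xen console log is long, it can help to boil it down to one line per affected domain. A rough sketch, assuming the message format shown earlier in this thread ("DomNN out of PoD memory"); the function name `pod_crash_summary` is made up for this example:

```shell
#!/bin/sh
# Count "out of PoD memory" events per domain in Xen console output.
# Reads log text on stdin, so it works with `xl dmesg` or a saved file.
# ASSUMPTION: pod_crash_summary is a hypothetical helper name.
pod_crash_summary() {
    # -o prints each match on its own line, even if the log was flattened.
    grep -o 'Dom[0-9]* out of PoD memory' \
        | awk '{ count[$1]++ } END { for (d in count) print d, count[d] }' \
        | sort
}

# Example (run in dom0):
# xl dmesg | pod_crash_summary
```

Each output line is a domain ID followed by the number of PoD failures logged for it, which makes it easier to match crashes to VMs by their domid.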

-

@olivierlambert that's weird, because if I go to XOA >> hosts to check the information for this hypervisor it says:

RAM: 178 GiB used on 256 GiB (78 GiB free)

I am running XCP-ng 7.6.0 (GPLv2)

-

There might be an issue somewhere in the way dynamic memory is handled, but I'm afraid it would be a lot of work to debug and we're not likely to do it for XCP-ng 7.6.

Alternatively, maybe at some point the host used all the available RAM and released it since?
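One way to check the "used it all and released it since" theory would be to sample the host's free memory over time and see whether it ever dips near zero. A small sketch, assuming `xl info` prints a `free_memory : <MiB>` line as it does on stock Xen; `host_free_mib` is a made-up helper name:

```shell
#!/bin/sh
# Extract the host's free memory (in MiB) from `xl info` output on stdin.
# ASSUMPTION: host_free_mib is a hypothetical helper name.
host_free_mib() {
    awk -F: '$1 ~ /^free_memory[ \t]*$/ { gsub(/[ \t]/, "", $2); print $2 }'
}

# Example loop (commented out; run in dom0): one timestamped sample a minute.
# while true; do
#     printf '%s %s\n' "$(date +%FT%T)" "$(xl info | host_free_mib)"
#     sleep 60
# done
```

Redirecting the loop's output to a file and comparing timestamps against the crash times in xl dmesg should show whether the host was actually short on memory at those moments.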

-

@stormi indeed, I don't think it's worth the time debugging the issue in such an old version of XCP-ng, especially when there is a workaround: setting a fixed amount of RAM.

We should upgrade this host anyway, and I will report back if we still experience a similar issue with the latest stable version.

-

Hello guys,

Glad to hear that my thread got traction and that others helped with troubleshooting.

My issue keeps happening. I have simply left that Windows Server 2019 VM as it is, and it keeps crashing nightly when it tries to auto-apply Windows updates.

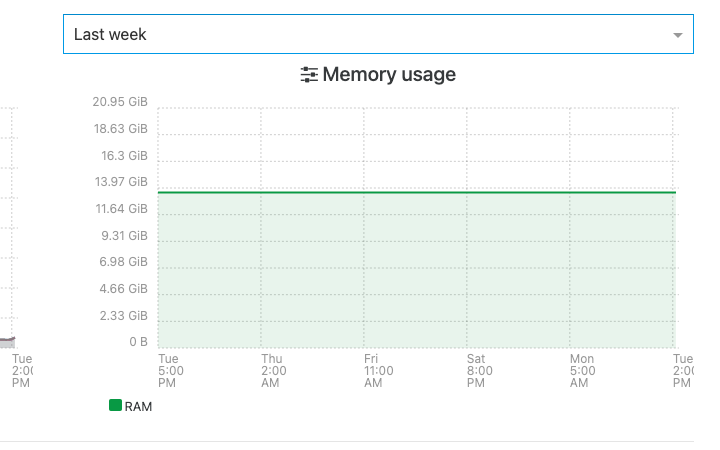

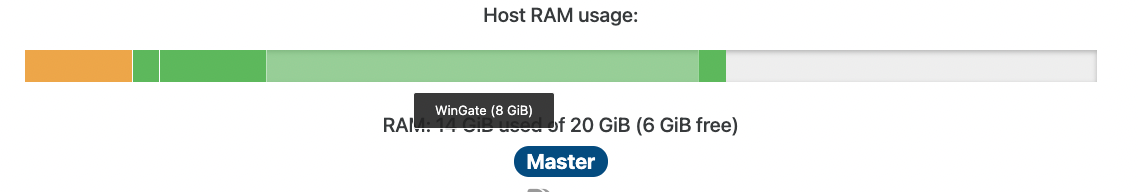

xl dmesg shows that it's out of memory:[14:59 xenhome ~]# xl dmesg m_pod_demand_populate: Dom18 out of PoD memory! (tot=2097695 ents=524256 dom0) (XEN) [4145112.313876] domain_crash called from p2m_pod_demand_populate+0x751/0xa40 (XEN) [4145112.317876] p2m_pod_demand_populate: Dom18 out of PoD memory! (tot=2097695 ents=524256 dom0) (XEN) [4145112.317879] domain_crash called from p2m_pod_demand_populate+0x751/0xa40 (XEN) [4145112.320228] p2m_pod_demand_populate: Dom18 out of PoD memory! (tot=2097695 ents=524256 dom0)However, this host should have more than enough RAM. Here is a screenshot of the RAM graph from XO for the last week:

The windows VM in question has a 2GB/8GB dynamic allocation, but the graph shows the 8GB always in use:

And unlike @pescobar, I am running the latest version of XCP-NG here:

[15:03 xenhome ~]# cat /etc/redhat-release XCP-ng release 8.2.0 (xenenterprise)I'm glad to hear that not doing dynamic solved the issue for pescobar, but now I want to get to the bottom of this because maybe this bug might impact someone in prod.

Let me know what other info I could provide so that we can troubleshoot this further.

Thanks!
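For anyone trying to read the numbers in those Dom18 lines: a quick conversion sketch, under the assumption (not confirmed anywhere in this thread) that the tot= and ents= fields are counts of 4 KiB pages. The function name `pages_to_mib` is made up for this example.

```shell
#!/bin/sh
# Convert a count of 4 KiB pages to whole MiB (rounded down).
# ASSUMPTION: tot= and ents= in the PoD message are 4 KiB page counts.
pages_to_mib() {
    echo $(( $1 * 4 / 1024 ))
}

# Values copied from the Dom18 lines in this post:
# pages_to_mib 2097695   # tot
# pages_to_mib 524256    # ents
```

If that assumption holds, tot works out to roughly 8 GiB and ents to roughly 2 GiB, which at least looks consistent with the 2GB/8GB dynamic range and the 8GB shown as in use on the graph.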

-

There's not enough memory for the ballooning driver to grow, and this causes a domain crash.

Getting to the bottom of this is not simple, I'm afraid.