Nvidia Quadro P400 not working on Ubuntu server via GPU/PCIe passthrough

-

Well, I just tested the Quadro M4000, and no luck with this one either... This GPU is a lot more powerful than the P400, though, so I will keep it anyway.

EDIT: Well, I complained too quickly. Apparently a host reboot resolved it, and I now see the GPU in the Plex VM.

-

@thefrisianclause Hello, not sure if I missed something from the above thread, but did any of you try to turn off the CPUID "hypervisor present" bit on an Intel-based XCP-ng host VM using this technique from the thread referenced by @warriorcookie above? https://xcp-ng.org/forum/topic/4643/nested-virtualization-of-windows-hyper-v-on-xcp-ng/26

It is the equivalent of the ESXi Hypervisor.CPUID.v0="FALSE" vmx file configuration tweak. It configures the XCP-ng VM to, in effect, lie to the guest OS by saying, "you are not running on a hypervisor."

-

@xcp-ng-justgreat Does this also work for Linux? I currently run Linux with this, not Windows. But I will have a look into it.

Also, I am running this on an AMD Ryzen 3800XT.

EDIT: I also had the Quadro M4000 running this afternoon, but had to redo the VM. Now I can't get it to work anymore, even though I did the same steps. Sometimes Xen is like a 'lady': sometimes it works and sometimes it doesn't.

-

@thefrisianclause Yes, it should work for a Linux guest too. It alters the VM's apparent CPUID as presented to the guest OS--whatever that happens to be. I'm not one-hundred percent sure about AMD, but try the same technique. The same bit probably has the same purpose on an AMD CPU.
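One way to check from inside a Linux guest whether the bit is actually hidden: on x86, the kernel lists a `hypervisor` flag in `/proc/cpuinfo` when CPUID leaf 1 reports the "hypervisor present" bit. The sketch below only looks for that flag. The function name is made up for illustration, and the NVIDIA driver may well use other detection methods, so a missing flag does not prove the driver is fooled.

```python
def hypervisor_flag_visible(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo text lists 'hypervisor'."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only inspect the per-CPU "flags" lines, not e.g. "model name".
        if sep and key.strip() == "flags":
            if "hypervisor" in value.split():
                return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        print("could not read /proc/cpuinfo")
    else:
        print("hypervisor flag visible:", hypervisor_flag_visible(text))
```

Running this in the guest before and after applying the tweak should show whether the guest kernel still sees the bit.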

-

How exactly would you hide this? For Linux, the "best solution" for now is to get a modified Nvidia driver without the virt check. On Windows, it already works with recent drivers (they removed the check).

-

@xcp-ng-justgreat Unfortunately this did not work on Ubuntu 20.04.3 LTS ..... Even my recently bought Quadro M4000 won't work...

I am now getting this error:

    [ 50.123425] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x25:0x40:1250)
    [ 50.123475] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 51.102646] NVRM: GPU 0000:00:06.0: GPU has fallen off the bus.
    [ 51.304888] NVRM: A GPU crash dump has been created. If possible, please run
    NVRM: nvidia-bug-report.sh as root to collect this data before
    NVRM: the NVIDIA kernel module is unloaded.
    [ 51.507717] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x24:0xffff:1220)
    [ 51.507755] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0

-

@thefrisianclause Sorry to hear that. NVIDIA does jealously guard its secret sauce from the world. Altering the CPUID hypervisor bit falls within the realm of unnatural acts. While the technique has proven useful in other use cases and is a good thing to know about, it may be that countermeasures have been added to the GPU drivers to expose the lie. Hard to know . . .

-

It is strange, as I had it working this afternoon on a different VM... But shouldn't Quadro cards be free of these particular issues (code 43), since they are 'Quadro'?

-

@thefrisianclause That's a good point. However, it's not here http://hcl.xenserver.org/gpus/?gpusupport__version=20&vendor=50 so NVIDIA and Citrix have no obligation to support it for their commercial customers. If you do get it to work, it's by the grace of Vates and/or other XCP-ng users here. Best of luck!

-

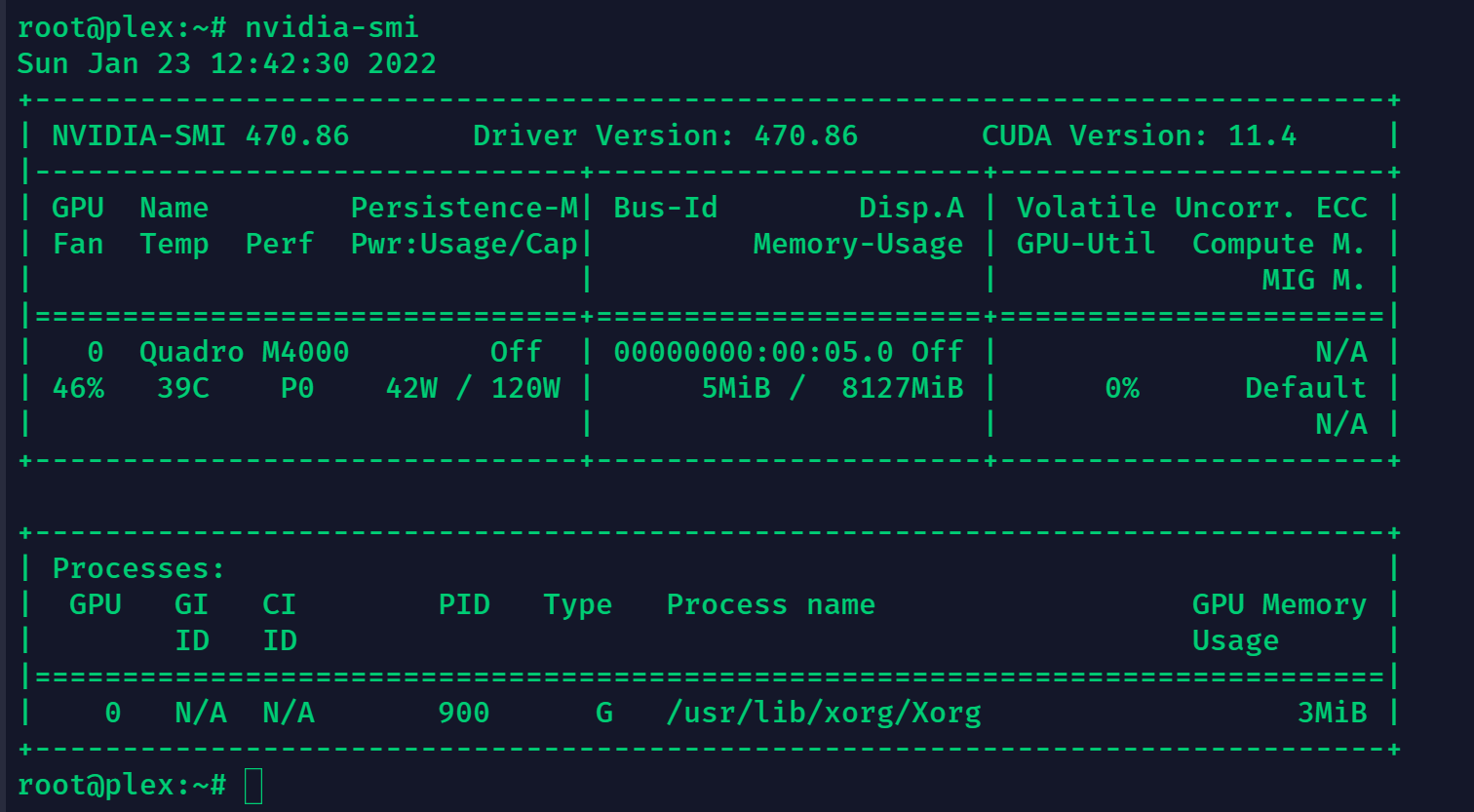

@olivierlambert @XCP-ng-JustGreat For some reason I got the M4000 working again in XCP-ng.

Let's hope it does not leave me again.

EDIT:

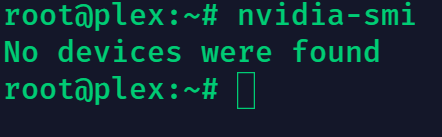

Well, I tested an emergency-reboot scenario in which I rebooted the whole host. The card has fallen off the bus and nvidia-smi does not work anymore.

    [ 19.111279] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x25:0x40:1250)
    [ 19.111325] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 20.089528] NVRM: GPU 0000:00:05.0: GPU has fallen off the bus.
    [ 20.292170] NVRM: A GPU crash dump has been created. If possible, please run
    NVRM: nvidia-bug-report.sh as root to collect this data before
    NVRM: the NVIDIA kernel module is unloaded.
    [ 20.495046] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0xffff:1220)
    [ 20.495093] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 21.468090] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 21.468109] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 22.077205] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 22.077257] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 22.686023] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 22.686061] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 23.294321] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 23.294381] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 24.479743] rfkill: input handler disabled
    [ 30.633389] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 30.633444] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0
    [ 31.242314] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)
    [ 31.242353] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0

I believe this has nothing to do with code 43?

And it makes my whole XCP-ng host crash when I try to reboot the Plex VM again...

So I assume the card works, but falls off the bus for some reason and I have no idea why?
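To compare boots where the card works against boots where it does not, it can help to condense the dmesg output above into a short summary. The following is a minimal sketch that counts the `RmInitAdapter failed!` codes and lists devices reported as fallen off the bus. The function name and regexes are illustrative and only cover the message formats seen in this thread; it makes no attempt to interpret what the codes mean.

```python
import re
from collections import Counter

# Patterns based only on the NVRM lines quoted in this thread.
RM_INIT_RE = re.compile(r"NVRM: GPU (\S+): RmInitAdapter failed! \(([^)]+)\)")
OFF_BUS_RE = re.compile(r"NVRM: GPU (\S+): GPU has fallen off the bus")


def summarize_nvrm(log_text):
    """Return (Counter of RmInitAdapter failure codes, sorted list of off-bus devices)."""
    codes = Counter(m.group(2) for m in RM_INIT_RE.finditer(log_text))
    off_bus = sorted({m.group(1) for m in OFF_BUS_RE.finditer(log_text)})
    return codes, off_bus


if __name__ == "__main__":
    sample = (
        "[ 19.111279] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x25:0x40:1250)\n"
        "[ 20.089528] NVRM: GPU 0000:00:05.0: GPU has fallen off the bus.\n"
        "[ 20.495046] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0xffff:1220)\n"
        "[ 21.468090] NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x22:0x56:667)\n"
    )
    codes, off_bus = summarize_nvrm(sample)
    for code, count in codes.items():
        print(code, count)
    print("fallen off the bus:", off_bus)
```

In practice the input would be the saved output of `dmesg` from each boot rather than the inline sample.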

EDIT 2:

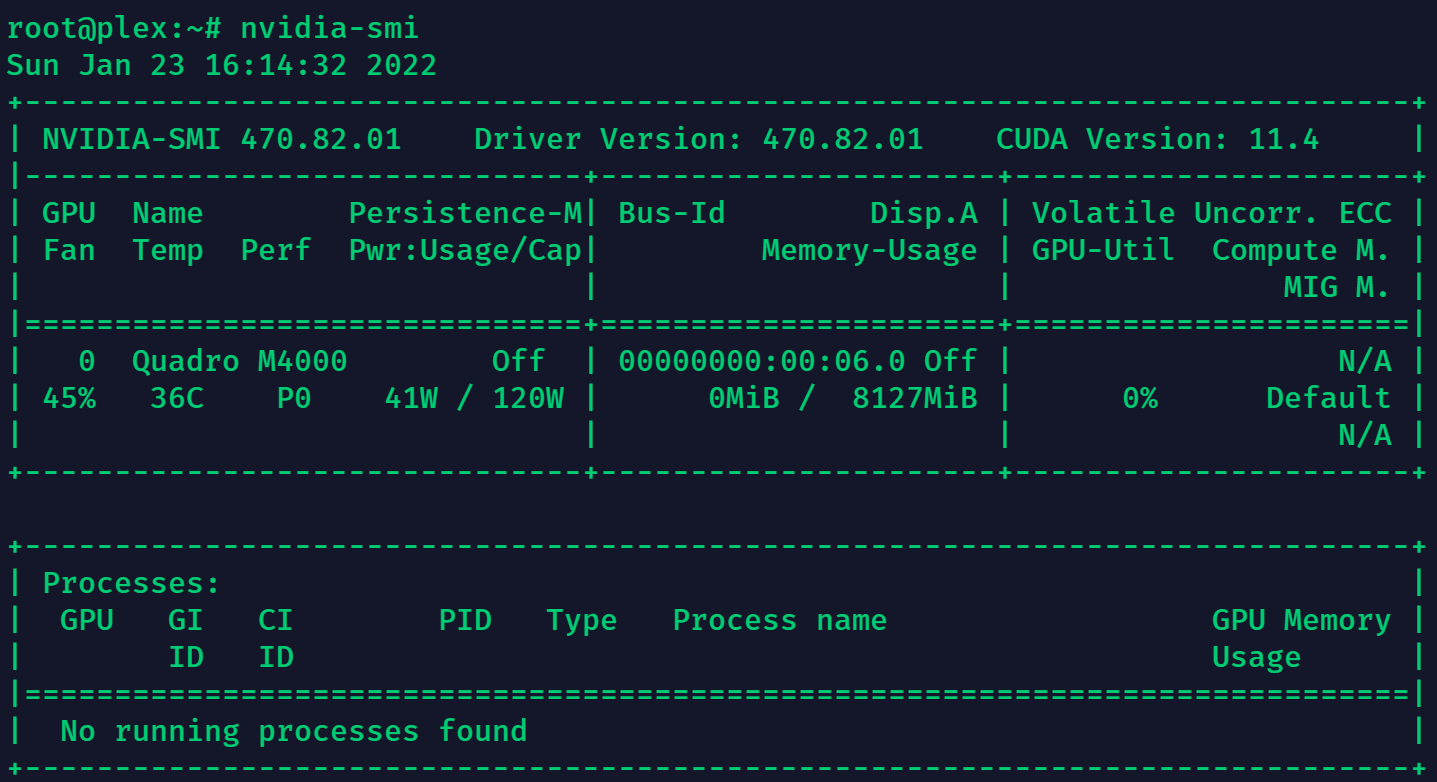

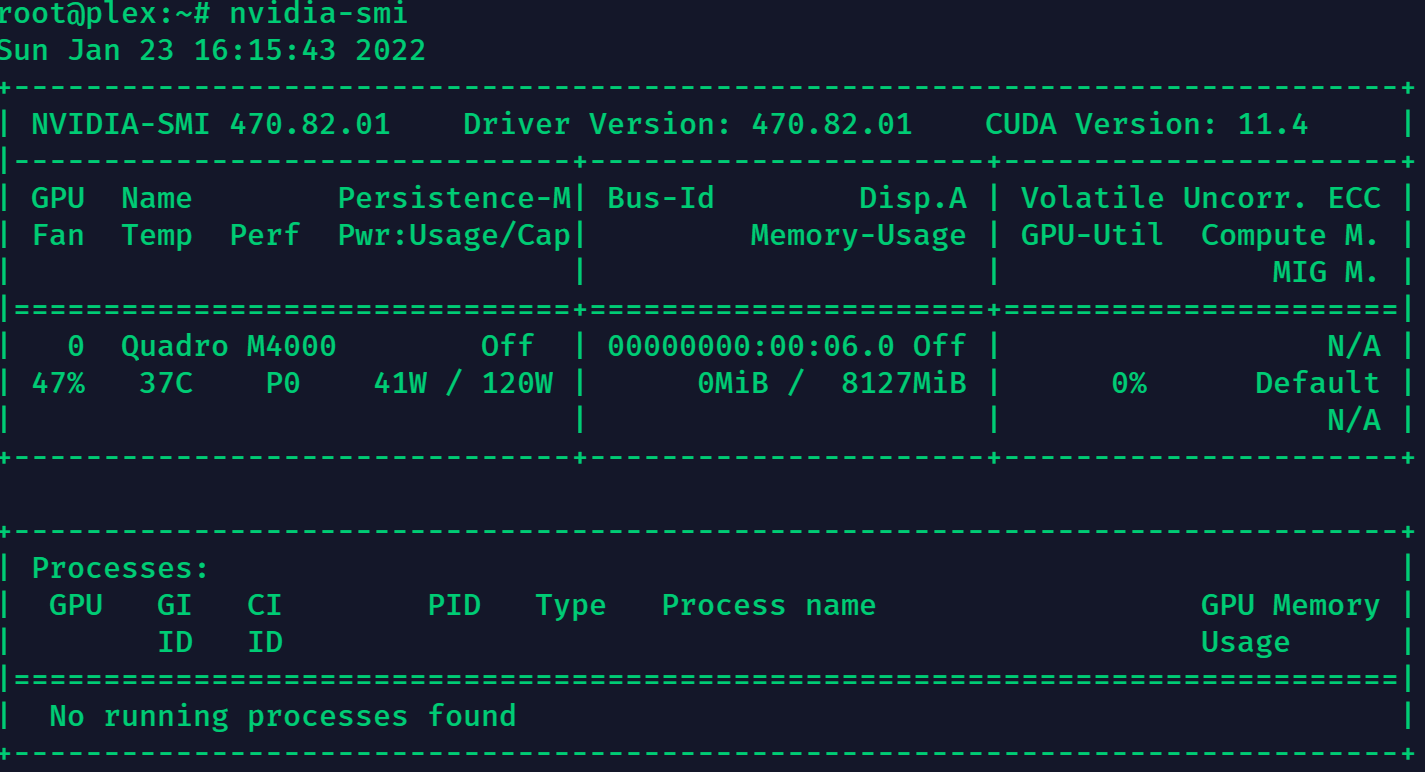

Just reinstalled a new Ubuntu 21.10, and there it works as well.

Also after reboot of the Ubuntu 21.10, this keeps working:

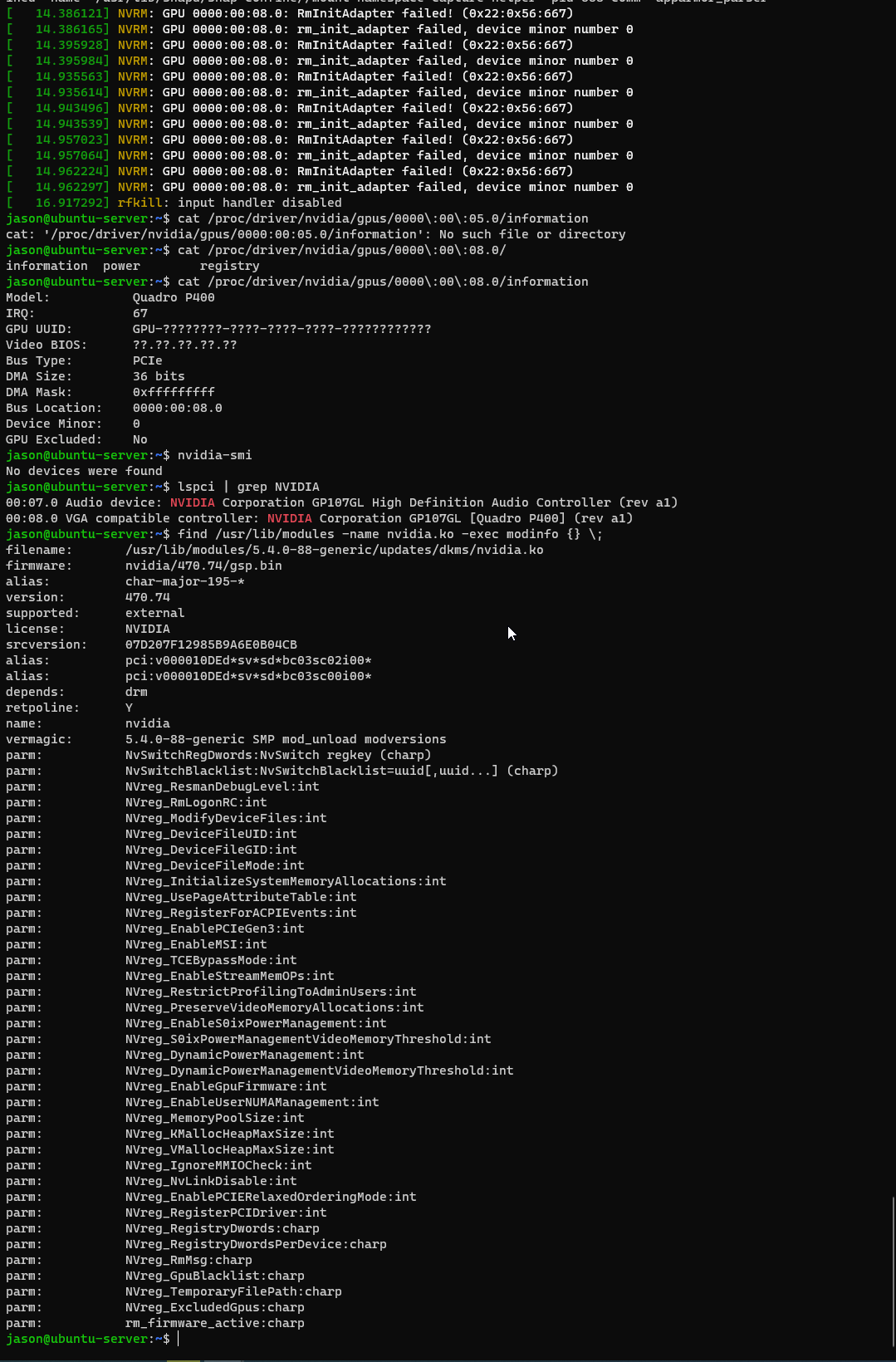

but when I reboot the host itself, I get this:

And the GPU falls off the bus, resulting in this error:

    [ 24.428255] loop3: detected capacity change from 0 to 8
    [ 42.582836] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x25:0x40:1250)
    [ 42.582888] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0
    [ 43.562201] NVRM: GPU 0000:00:06.0: GPU has fallen off the bus.
    [ 43.764639] NVRM: A GPU crash dump has been created. If possible, please run
    NVRM: nvidia-bug-report.sh as root to collect this data before
    NVRM: the NVIDIA kernel module is unloaded.
    [ 43.968873] NVRM: GPU 0000:00:06.0: RmInitAdapter failed! (0x24:0xffff:1220)
    [ 43.968930] NVRM: GPU 0000:00:06.0: rm_init_adapter failed, device minor number 0

So I believe something happens on the XCP-ng side here?
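A rough way to see whether the device still answers on the PCI bus is to read its vendor ID from config space through sysfs. A device that no longer responds typically reads back as all ones (0xFFFF), while NVIDIA's vendor ID is 0x10DE. The sketch below assumes the address `0000:00:06.0` from the log above, and the helper names are made up. In a passthrough guest the hypervisor may emulate parts of config space, so the result may be more telling on the host than in the VM; that is an assumption, not something verified on XCP-ng.

```python
import os
import struct

NVIDIA_VENDOR_ID = 0x10DE


def read_vendor_id(bdf, sysfs_root="/sys/bus/pci/devices"):
    """Read the 16-bit little-endian vendor ID from a device's PCI config space."""
    path = os.path.join(sysfs_root, bdf, "config")
    with open(path, "rb") as f:
        raw = f.read(2)
    if len(raw) < 2:
        raise ValueError("short read from " + path)
    return struct.unpack("<H", raw)[0]


def describe(vendor_id):
    """Turn a vendor ID into a short human-readable status."""
    if vendor_id == 0xFFFF:
        return "not responding (config reads return all ones)"
    if vendor_id == NVIDIA_VENDOR_ID:
        return "responding as NVIDIA"
    return "responding with vendor 0x%04x" % vendor_id


if __name__ == "__main__":
    bdf = "0000:00:06.0"  # address taken from the dmesg output; adjust as needed
    try:
        print(bdf, describe(read_vendor_id(bdf)))
    except (OSError, ValueError) as exc:
        print("could not read config space:", exc)
```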

EDIT 3:

Looking further in the logs I find this:

    [ 10.315135] [drm:nv_drm_load [nvidia_drm]] ERROR [nvidia-drm] [GPU ID 0x00000006] Failed to allocate NvKmsKapiDevice
    [ 10.315943] [drm:nv_drm_probe_devices [nvidia_drm]] ERROR [nvidia-drm] [GPU ID 0x00000006] Failed to register device

EDIT 4: So sometimes it works and sometimes it doesn't; it is very sporadic. So now I have the feeling that it could be an error on XCP-ng's side, but I am not 100% sure, as the card itself apparently works. I also have the feeling this could be a power consumption issue, as I use a 2x Molex to 6-pin PCIe converter for this graphics card.

(Sorry for the long message)...

-

@thefrisianclause Not all Quadro cards. Datacenter-class cards will pass through fine. Workstation cards do not on Linux guests. They will pass through on Windows guests, as Nvidia removed the check in the driver.

-

@xcp-ng-justgreat said in Nvidia Quadro P400 not working on Ubuntu server via GPU/PCIe passthrough:

@thefrisianclause Hello, not sure if I missed something from the above thread, but did any of you try to turn off the CPUID "hypervisor present" bit on an Intel-based XCP-ng host VM using this technique from the thread referenced by @warriorcookie above? https://xcp-ng.org/forum/topic/4643/nested-virtualization-of-windows-hyper-v-on-xcp-ng/26

It is the equivalent of the ESXi Hypervisor.CPUID.v0="FALSE" vmx file configuration tweak. It configures the XCP-ng VM to, in effect, lie to the guest OS by saying, "you are not running on a hypervisor."

Can you clarify? I thought this thread had been left as "close but no cigar"? Seems to have gotten the attention of some xen devs though...

-

@warriorcookie I believe they still have a heavy heart from when Linus said 'F You, Nvidia'.

Also, I think the Quadro M4000 should not have any problems with passthrough, as it uses different drivers than the Quadro P400 I also have. Otherwise all Quadro cards would be unusable with Xen, and the Xen footprint would be a lot smaller, I believe...

The driver does seem to work, as I sometimes get information from nvidia-smi (when freshly installed or on occasion).

The GPU on the other hand just falls off the bus for some strange reason (also see message above about this).

-

@warriorcookie Your characterization is basically correct, but perhaps it should be "closer but no cigar." Masking the hypervisor's presence from the guest is required in all of the other hypervisors to successfully run a Windows guest with nested virtualization enabled. Prior to the discovery of the cited technique, nobody in the community knew how to do it on XenServer/XCP-ng using the xe API. However, the upstream Xen code itself and likely the guest drivers need more work in order for nested virtualization of a Windows guest to work reliably the way it does on ESXi, Hyper-V, etc. With the advent of Windows 11 and Server 2022, a virtualized TPM is also a required feature for full Windows compliance, so Xen has quite a bit on its "to do" list with respect to nested virtualization of Windows.

-

Well, I just installed Windows Server 2022 with the Quadro P400 and everything works fine now... So for the time being I will keep Plex on Windows, even if this has a larger footprint than Linux...

-

Now you get Linus's middle finger toward Nvidia on Linux.

-

@olivierlambert Haha yes yes and I thank him for it

But I will be back on Linux once all of this has been resolved on Nvidia's side.

At least I can migrate everything to XCP-ng now, so I will be entering the Xen ecosystem.

-

Yeah at least it's better than nothing

I would love NV to remove this limitation on Linux too

-

Yeah, closed-source drivers on Linux have always felt so... dirty...

I'd use AMD if I could, but unfortunately I need NVENC and NVDEC.

-

Yeah I know

That's the issue when there's a greedy leader on a market.