Unable to migrate VMs

-

@fred974 Yes, from the VM host the basic CLI is something like:

xe vm-migrate uuid=<VMuuid> host-uuid=<Hostuuid>

-

[15:37 srv-tri-xcptmp ~]# xe vm-migrate uuid=8be08afd-f7c9-f044-661e-631b41b110b7 host-uuid=9eebc91d-3277-4f13-948e-f964ecf548d2
Error: Host 9eebc91d-3277-4f13-948e-f964ecf548d2 not found

This is strange, as the host is visible in XO.
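A plain `host-uuid=` migration only works when the destination host belongs to the same pool, because the local XAPI resolves that UUID against its own pool database. A host from another pool can be visible in XO without being known to the source pool's XAPI, which would fit the `Host ... not found` error above. A minimal sketch for checking this from the source host, assuming that `xe host-list` with a `uuid=` filter prints an empty result when no local host matches; `host_in_local_pool` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the given host UUID is known to the pool
# this host belongs to. Assumes `xe host-list uuid=<uuid> --minimal` prints
# the UUID when the host exists locally and an empty line otherwise.
host_in_local_pool() {
  local host_uuid="$1"
  local found
  found="$(xe host-list uuid="$host_uuid" --minimal)"
  [ -n "$found" ]
}

# Usage with the destination UUID from this thread:
# if host_in_local_pool 9eebc91d-3277-4f13-948e-f964ecf548d2; then
#   echo "same pool: host-uuid= migration should be accepted"
# else
#   echo "not in this pool: use the remote-master= form instead"
# fi
```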

From the host the VM is on, I can telnet to the destination host:

[15:47 srv-tri-xcptmp ~]# telnet 85.75.xxx.xx 443
Trying 85.75.xxx.xx...
Connected to 85.75.xxx.xx.
Escape character is '^]'.
^]

-

@fred974 Are you trying to migrate to the same pool or to another pool?

-

@darkbeldin said in Unable to migrate VMs:

@fred974 Are you trying to migrate to the same pool or to another pool?

I am migrating to another pool in another DC. I am using ZeroTier to link the hosts, but the issue also exists when I expose the destination host's IP publicly.

-

When I add the host using its public IP, I have the same issue. It is added to XO and I can list the VMs on the destination host, but I cannot access its console. I tried via the official XOA and got the same error.

-

@fred974 Sorry, not sure I understand: from which host to which host are you trying to migrate? Are they in the same pool?

-

@darkbeldin said in Unable to migrate VMs:

@fred974 Sorry, not sure I understand: from which host to which host are you trying to migrate? Are they in the same pool?

I am trying to migrate 1 VM located in datacentre 1 to a host located in another datacentre, in a completely different pool. There are 2 pools, each made up of a single host.

Currently I am using the official XOA running on the host where the VM resides. I added the destination host to that XOA via its public IP and I can see it in XOA, but for some reason I cannot access the remote host's console from XOA.

The command-line error says that it cannot find the remote host, even though it exists in XOA. Was I supposed to run the following command from the XOA server and not from the host?

xe vm-migrate uuid=<VMuuid> host-uuid=<Hostuuid>

-

@fred974 No. In the case of a remote pool, you have to use other parameters:

xe vm-migrate vm=<vmuuid> remote-master=<remote pool master IP> remote-username=root remote-password=<password> vif:<uuid of the migration VIF>

-

@darkbeldin Do I need to specify the remote storage uuid?
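For cross-pool migrations, `xe vm-migrate` also accepts explicit mappings of the form `vif:<source VIF UUID>=<destination network UUID>` and `vdi:<source VDI UUID>=<destination SR UUID>`. When no `vdi:` mapping is given, xe appears to choose a destination SR itself, as the xe output later in this thread shows (`VDI dd2cc891-... -> SR 66eb4d5b-...`). The sketch below only assembles and prints the command unless `RUN=1` is set; `cross_pool_migrate` is a hypothetical helper and every argument is a placeholder:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a cross-pool `xe vm-migrate` call with explicit
# VIF->network and VDI->SR mappings. Prints the command (dry run) by default;
# set RUN=1 to execute it. Note the printed line includes the password.
cross_pool_migrate() {
  local vm="$1" master="$2" password="$3" vif="$4" net="$5" vdi="$6" sr="$7"
  local -a cmd=(
    xe vm-migrate
    vm="$vm"
    remote-master="$master"
    remote-username=root
    remote-password="$password"
    "vif:${vif}=${net}"   # source VIF UUID -> destination network UUID
    "vdi:${vdi}=${sr}"    # source VDI UUID -> destination SR UUID
  )
  if [ "${RUN:-0}" = "1" ]; then
    "${cmd[@]}"
  else
    printf '%s\n' "${cmd[*]}"
  fi
}
```

For example, passing `dd2cc891-c44f-4dfe-9bfd-5887e73b5bf3` and `66eb4d5b-3f8b-4370-2cb6-f2ac42e69257` as the last two arguments would pin the VDI from the thread to that SR explicitly instead of relying on xe's choice.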

-

@fred974 Here is the error from the command line:

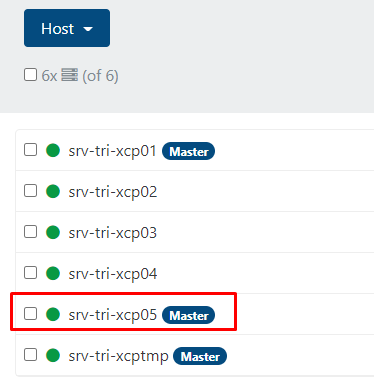

Performing a Storage XenMotion migration. Your VM's VDIs will be migrated with the VM.
Will migrate to remote host: srv-tri-xcp05, using remote network: MGNT_LAN. Here is the VDI mapping:
VDI dd2cc891-c44f-4dfe-9bfd-5887e73b5bf3 -> SR 66eb4d5b-3f8b-4370-2cb6-f2ac42e69257
The server failed to handle your request, due to an internal error. The given message may give details useful for debugging the problem.
message: Xmlrpc_client.Connection_reset

-

Your connection is being reset. Check the XAPI logs on source and destination, and also check that you have enough memory and disk space in dom0.
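To spot the relevant XAPI errors quickly on each side, the matching lines can be filtered out of `/var/log/xensource.log`, the log file used in this thread. A minimal sketch; the patterns are simply the error strings that appear in this thread, and `migration_errors` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the log lines that typically explain a
# failed migration. Defaults to the XAPI log path used in this thread.
migration_errors() {
  local log="${1:-/var/log/xensource.log}"
  grep -E 'Connection_reset|TIMEOUTconnect|failed with exception' "$log"
}
```

Run it on both hosts right after a failed attempt, for example `migration_errors | tail -n 20`.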

-

@olivierlambert said in Unable to migrate VMs:

also check you have enough memory and disk space in the dom0

On source or destination?

-

Check both; it won't hurt.

-

@olivierlambert said in Unable to migrate VMs:

Your connection is reset. Check XAPI logs on source and destination, also check you have enough memory and disk space in the dom0.

dom0 memory is fine on the host sending the VM. On the receiving host it is low, but from what I can see in the XAPI log on the sending host, the migration doesn't even get as far as the receiving host.

tail -f /var/log/xensource.log
Jan 5 09:01:21 srv-tri-xcptmp xapi: [ info||46324 HTTPS 194.12.14.36->|Async.VM.migrate_send R:7e6621769ab0|dispatcher] spawning a new thread to handle the current task (trackid=4434341f9e2235fa38c17085de8161a3)
Jan 5 09:01:21 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|Async.VM.migrate_send R:7e6621769ab0|audit] VM.migrate_send: VM = '8be08afd-f7c9-f044-661e-631b41b110b7 (UNMS-Controller)'
Jan 5 09:01:21 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|Async.VM.migrate_send R:7e6621769ab0|dummytaskhelper] task VM.assert_can_migrate D:cd498f64732f created (trackid=4434341f9e2235fa38c17085de8161a3) by task R:7e6621769ab0
Jan 5 09:01:21 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|audit] VM.assert_can_migrate: VM = '8be08afd-f7c9-f044-661e-631b41b110b7 (UNMS-Controller)'
Jan 5 09:01:21 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|xapi_vm_migrate] This is a cross-pool migration
Jan 5 09:01:21 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] stunnel start
Jan 5 09:01:21 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|xmlrpc_client] stunnel pid: 18502 (cached = false) connected to 192.168.15.13:443
Jan 5 09:01:21 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|xmlrpc_client] with_recorded_stunnelpid task_opt=None s_pid=18502
Jan 5 09:01:31 srv-tri-xcptmp xapi: [ warn||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|xmlrpc_client] stunnel pid: 18502 caught Xmlrpc_client.Connection_reset
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[ui]: stunnel 5.56 on x86_64-koji-linux-gnu platform
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[ui]: Compiled/running with OpenSSL 1.1.1c FIPS 28 May 2019
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[ui]: Threading:PTHREAD Sockets:POLL,IPv6 TLS:ENGINE,FIPS,OCSP,SNI Auth:LIBWRAP
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[ui]: Reading configuration from descriptor 7
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[ui]: UTF-8 byte order mark not detected
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[ui]: FIPS mode disabled
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG4[ui]: Service [stunnel] needs authentication to prevent MITM attacks
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[ui]: Configuration successful
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:21 LOG5[0]: Service [stunnel] accepted connection from unnamed socket
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:31 LOG3[0]: s_connect: s_poll_wait 192.168.15.13:443: TIMEOUTconnect exceeded
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:31 LOG3[0]: No more addresses to connect
Jan 5 09:01:31 srv-tri-xcptmp xapi: [debug||46324 HTTPS 194.12.14.36->|VM.assert_can_migrate D:cd498f64732f|stunnel] 2022.01.05 09:01:31 LOG5[0]: Connection reset: 0 byte(s) sent to TLS, 0 byte(s) sent to socket
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] VM.assert_can_migrate D:cd498f64732f failed with exception Xmlrpc_client.Connection_reset
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] Raised Xmlrpc_client.Connection_reset
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 1/20 xapi Raised at file http-svr/xmlrpc_client.ml, line 307
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 2/20 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 3/20 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 4/20 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 5/20 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 6/20 xapi Called from file stunnel/stunnel.ml, line 270
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 7/20 xapi Called from file ocaml/xapi-client/client.ml, line 14
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 8/20 xapi Called from file ocaml/xapi-client/client.ml, line 7924
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 9/20 xapi Called from file ocaml/xapi/helpers.ml, line 778
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 10/20 xapi Called from file ocaml/xapi/helpers.ml, line 786
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 11/20 xapi Called from file ocaml/xapi/helpers.ml, line 793
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 12/20 xapi Called from file ocaml/xapi/helpers.ml, line 794
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 13/20 xapi Called from file ocaml/xapi/helpers.ml, line 824
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 14/20 xapi Called from file ocaml/xapi/xapi_vm_migrate.ml, line 1546
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 15/20 xapi Called from file ocaml/xapi/message_forwarding.ml, line 2087
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 16/20 xapi Called from file ocaml/xapi/server_helpers.ml, line 100
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 17/20 xapi Called from file ocaml/xapi/server_helpers.ml, line 121
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 18/20 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 19/20 xapi Called from file map.ml, line 135
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 20/20 xapi Called from file sexp_conv.ml, line 147
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace]
Jan 5 09:01:31 srv-tri-xcptmp xapi: [ warn||46324 ||rbac_audit] cannot marshall arguments for the action VM.migrate_send: name and value list lengths don't match. str_names=[session_id,vm,dest,live,vdi_map,vif_map,options,vgpu_map,], xml_values=[S(OpaqueRef:9485c91a-4469-495f-b818-0aa0049bc743),S(OpaqueRef:74b4df43-4a23-4b21-becf-4190f183ef9c),{SM:S(http://192.168.15.13/services/SM?session_id=OpaqueRef:5e864347-868f-4238-bfe4-0c1232201dd1);host:S(OpaqueRef:aa99f3e4-befa-43b3-a5f7-40b4e34d1161);xenops:S(http://192.168.15.13/services/xenops?session_id=OpaqueRef:5e864347-868f-4238-bfe4-0c1232201dd1);session_id:S(OpaqueRef:5e864347-868f-4238-bfe4-0c1232201dd1);master:S(http://192.168.15.13/)},B(true),{OpaqueRef:909eadf8-f664-454a-869d-c446698fc322:S(OpaqueRef:2070a8fe-7a3a-4ddf-8427-cba22fd25986)},{OpaqueRef:07ccf8d9-c7dd-4bf9-8ead-fec3ff80eeb9:S(OpaqueRef:588e7e79-c91e-40de-95a6-2c6bdd62fb5f)},{force:S(false)},]
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] Async.VM.migrate_send R:7e6621769ab0 failed with exception Xmlrpc_client.Connection_reset
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] Raised Xmlrpc_client.Connection_reset
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace] 1/1 xapi Raised at file (Thread 46324 has no backtrace table. Was with_backtraces called?, line 0
Jan 5 09:01:31 srv-tri-xcptmp xapi: [error||46324 ||backtrace]
Jan 5 09:01:32 srv-tri-xcptmp xapi: [debug||46380 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:9e9cac5e28e3 created by task D:6aa2dbb701ac
Jan 5 09:01:32 srv-tri-xcptmp xapi: [ info||46380 /var/lib/xcp/xapi|session.logout D:7c663a75d701|xapi_session] Session.destroy trackid=250842705355fbcbb9f563a08a45189d
Jan 5 09:01:32 srv-tri-xcptmp xapi: [debug||46381 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:8a1a48773968 created by task D:6aa2dbb701ac
Jan 5 09:01:32 srv-tri-xcptmp xapi: [ info||46381 /var/lib/xcp/xapi|session.slave_login D:0c9ecbe677ce|xapi_session] Session.create trackid=a4cb57489d9587c4458b76c9b1501ed7 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49
Jan 5 09:01:32 srv-tri-xcptmp xapi: [debug||46382 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:357747db6aca created by task D:0c9ecbe677ce
Jan 5 09:01:32 srv-tri-xcptmp xapi: [debug||46383 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:c051555ce754 created by task D:6aa2dbb701ac
Jan 5 09:01:34 srv-tri-xcptmp xcp-rrdd: [ info||9 ||rrdd_main] memfree has changed to 701172 in domain 3

As you can see, it cannot connect to the receiving host:

192.168.15.13:443: TIMEOUTconnect exceeded

-

@fred974 said in Unable to migrate VMs:

_connect: s_poll_wait 192.168.15.13:443: TIMEOUTconnect exceeded

Indeed, that's weird; it looks as if something is blocking the connection. I would check your network, firewall and so on.
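One detail worth checking in the log above: the source's stunnel connects to `192.168.15.13:443`, and the `dest` map passed to `VM.migrate_send` (the `SM`, `xenops` and `master` URLs) also points at `http://192.168.15.13/`, presumably the destination's address on the `MGNT_LAN` network named in the xe output. The earlier telnet test, however, targeted the public address 85.75.xxx.xx, so it does not prove the source can reach the address XAPI actually uses. A minimal sketch for testing that exact address from the source host, assuming bash with `/dev/tcp` support and coreutils `timeout`; `tcp_reachable` is a hypothetical helper, and the 10 s default only mirrors the gap between `stunnel start` (09:01:21) and `TIMEOUTconnect exceeded` (09:01:31) in the log:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host:port opens within
# the timeout (default 10 s). Uses bash's /dev/tcp redirection in a child
# shell so `timeout` can kill it if the connect hangs.
tcp_reachable() {
  local host="$1" port="$2" secs="${3:-10}"
  timeout "$secs" bash -c ': > "/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Usage from the source host, against the address seen in the log:
# for port in 80 443; do
#   if tcp_reachable 192.168.15.13 "$port"; then
#     echo "192.168.15.13:$port reachable"
#   else
#     echo "192.168.15.13:$port NOT reachable"
#   fi
# done
```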

-

@olivierlambert Ports 80 and 443 are all that is needed, correct?

-

Yes, but there's something problematic somewhere in your case.

Have you restarted the toolstack on the destination?
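On XCP-ng the toolstack is restarted from the host's console with `xe-toolstack-restart`. A small sketch that restarts it and then waits until `xe` responds again before the migration is retried; the retry count and 2 s interval are arbitrary, and `restart_toolstack_and_wait` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: restart XAPI, then poll `xe host-list` until it
# answers or the retry budget (default 30 tries, 2 s apart) runs out.
restart_toolstack_and_wait() {
  local tries="${1:-30}"
  xe-toolstack-restart || return 1
  local i
  for ((i = 0; i < tries; i++)); do
    if xe host-list --minimal >/dev/null 2>&1; then
      return 0   # XAPI is answering again
    fi
    sleep 2
  done
  return 1       # XAPI did not come back within the budget
}
```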

-

@olivierlambert said in Unable to migrate VMs:

Have you restarted the toolstack on the destination?

Yes I have, and I also rebooted. I just installed XOA on the destination server and added the sending server to it. It connects and I can see all the VMs. This time I can also access both hosts' consoles in XOA, but the transfer still fails:

vm.migrate
{
  "vm": "8be08afd-f7c9-f044-661e-631b41b110b7",
  "mapVifsNetworks": {
    "ccba90a8-0e93-f0fe-80d7-816363036c97": "24c00a36-b0ee-2f9b-832e-6e3a26d53bc3"
  },
  "migrationNetwork": "aec8aa3f-712f-0346-3165-03c5b08083d0",
  "sr": "66eb4d5b-3f8b-4370-2cb6-f2ac42e69257",
  "targetHost": "9eebc91d-3277-4f13-948e-f964ecf548d2"
}
{
  "code": 21,
  "data": {
    "objectId": "8be08afd-f7c9-f044-661e-631b41b110b7",
    "code": "INTERNAL_ERROR"
  },
  "message": "operation failed",
  "name": "XoError",
  "stack": "XoError: operation failed at operationFailed (/usr/local/lib/node_modules/xo-server/node_modules/xo-common/src/api-errors.js:21:32) at file:///usr/local/lib/node_modules/xo-server/src/api/vm.mjs:483:15 at Object.migrate (file:///usr/local/lib/node_modules/xo-server/src/api/vm.mjs:470:3) at Api.callApiMethod (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/api.mjs:307:20)"
}

-

It's not a failure of XO but of your hosts: as you can see, the same issue persists whether you use xe, XCP-ng Center or XO.

Have you checked the disk space? Also, raise the dom0 memory.
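A quick way to gather those numbers on both hosts, as a sketch: it reports the `df -P` usage of `/` and `/var/log` and the `MemAvailable` value from `/proc/meminfo`. The helper names are hypothetical, and the script only reports; changing the dom0 memory allocation is a separate step not shown here.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: report dom0 disk usage and available memory.

disk_use_percent() {
  # Print the Use% column of `df -P` for the filesystem holding $1, without "%".
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

mem_available_mib() {
  # MemAvailable is reported in kB in /proc/meminfo (Linux only).
  awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024 }' /proc/meminfo
}

dom0_report() {
  local path
  for path in / /var/log; do
    if [ -d "$path" ]; then
      echo "disk ${path}: $(disk_use_percent "$path")% used"
    fi
  done
  echo "memory available: $(mem_available_mib) MiB"
}
```

Running `dom0_report` on the source and on the destination makes it easy to compare them, which matters here since the receiving host was already reported as low on dom0 memory.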