Epyc VM to VM networking slow

-

@john-c License moved. All fine.

Backup Intel host running on 1G NICs (for the time being), bonded with LACP.

Already faster than before.

I have an XO instance running on a Proxmox host to be able to manage the pools when the main XOA is down (updates etc), so I'm good there and don't need another (2nd) backup host (would be crazy overkill).

-

@manilx said in Epyc VM to VM networking slow:

@john-c License moved. All fine.

Backup Intel host running on 1G NICs (for the time being), bonded with LACP.

Already faster than before.

I have an XO instance running on a Proxmox host to be able to manage the pools when the main XOA is down (updates etc), so I'm good there and don't need another (2nd) backup host (would be crazy overkill).

I mean, make the Proxmox host an XCP-ng host and have it join the XO/XOA pool, preferably with the same hardware and components. That way, when the HPE ProLiant DL360 Gen10 is down for updates, the XO/XOA VM can live-migrate between the two as required, and you can use RPU on the dedicated Intel-based XO/XOA hosts.

-

@john-c Proxmox host is a Protectli. All good. XOA will be on the single Intel host pool, no need for redundancy here.

XO on Proxmox for emergencies... Remember: this is ALL a WORKAROUND for the stupid AMD EPYC bug!!!!!!

Not in the least the final solution. The final one is XOA running on our EPYC production pool, as it was.

-

@manilx said in Epyc VM to VM networking slow:

@john-c Proxmox host is a Protectli. All good. XOA will be on the single Intel host pool, no need for redundancy here.

XO on Proxmox for emergencies... Remember: this is ALL a WORKAROUND for the stupid AMD EPYC bug!!!!!!

Not in the least the final solution. The final one is XOA running on our EPYC production pool, as it was.

Alright, in that case just the HPE ProLiant DL360 Gen10 as the dedicated XO/XOA host. But bear in mind that while XCP-ng is being updated on it, the host, and thus that instance of XO/XOA, will be unavailable until the reboot is complete.

-

@john-c Yes, obviously. For that I have XO on a mini-pc

-

@john-c @olivierlambert

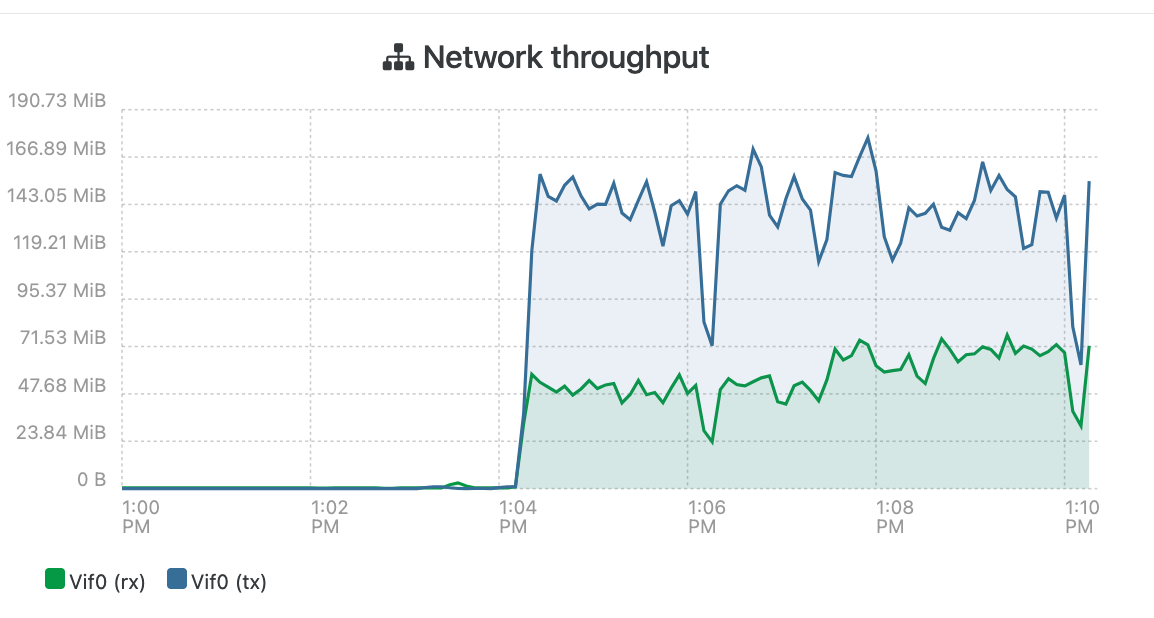

One of our standard backup jobs. This is a 100% increase!!! On a 1G LACP bond, instead of 10G on the EPYC host!

1.5 years battling with this, and in the end it's all due to the same issue we now see.

-

@manilx it is deffo interesting to see more proof that this bug may be wider than expected.

-

@manilx said in Epyc VM to VM networking slow:

@john-c @olivierlambert

One of our standard backup jobs. This is a 100% increase!!! On a 1G LACP bond, instead of 10G on the EPYC host!

1.5 years battling with this, and in the end it's all due to the same issue we now see.

Don't forget to also post the comparison and screenshot when you have fitted the 10G 4 Port NIC with the 2 lacp bonds on the Intel HPE!

-

@john-c WILL DO! I've told purchasing to order the card you recommended. Let's see how long that'll take....

EDIT: ordered on Amazon. Expect to be here 1st week Nov.

Will report back then pinging you.

-

@manilx said in Epyc VM to VM networking slow:

@john-c WILL DO! I've told purchasing to order the card you recommended. Let's see how long that'll take....

EDIT: ordered on Amazon. Expect to be here 1st week Nov.

Will report back then pinging you.

The Friday (1st November 2024) after this month's update to Xen Orchestra, or is it the week after?

-

@manilx I've been waiting for your ping with the report. You said the first week of November 2024, and we're now at the beginning of the second week.

I'm wondering how it's going. Is anything holding it up?

-

@john-c Hi. Ordered from Amazon that day and after more than 2 weeks order was cancelled without notice from supplier. Reordered from another one and I'm still waiting....

Not easy to get one.

-

@manilx said in Epyc VM to VM networking slow:

@john-c Hi. Ordered from Amazon that day and after more than 2 weeks order was cancelled without notice from supplier. Reordered from another one and I'm still waiting....

Not easy to get one.

Thanks for your reply. I hope it goes well this time. If it still proves difficult, you can go for another quad-port 10GbE NIC that is compatible with the two-bond LACP setup.

If the selected quad port 10Gbe NIC is available on general sale, then you can get it through the supplier who provided you with your HPE Care Packs.

-

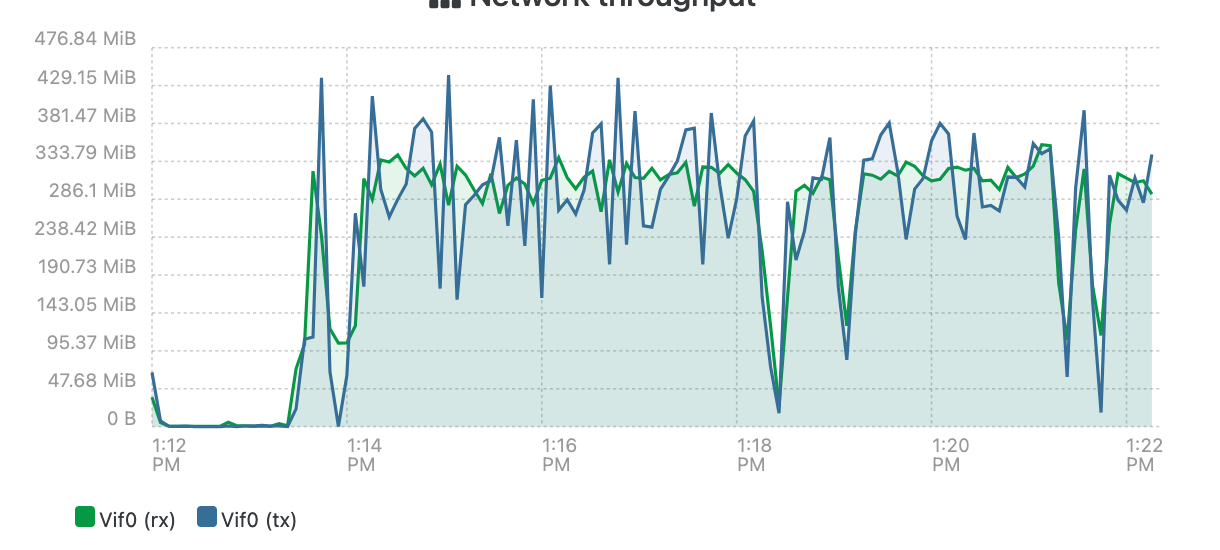

@john-c @olivierlambert Now we're talking!!!

Here are the results of a 2 VM Delta/NBD backup (initial one) using two 10G NICs in a bond:

WHAT a difference, when we run XOA on an Intel host instead of an EPYC one, with backups.

I've said from the beginning that the slow backup speeds were due to the EPYC issue (as I got 200+ MB/s @home with measly Protectlis on 10G).

Looking at what the Synology receives: I get up to 500+ MB/s during the backup!

-

It's a good discovery that having XOA outside the pool can make the backup performance much better.

How is the problem solving going for the root cause? We too have quite poor network performance and would really like to see the end of this. Can we get a summary of the actions taken so far and what the prognosis is for a solution?

Did anyone try plain Xen on a new 6.x kernel to see if the networking is the same there?

-

Is there a difference when running `iperf3` with the `-C bbr` flag on the client side?
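For anyone who wants to repeat this comparison in a scripted way, here is a minimal Python sketch. It assumes `iperf3` is installed on both VMs, that the other VM is already running `iperf3 -s`, and that the `bbr` congestion control is available on the client kernel. The function names and the example server address are hypothetical. The sketch relies only on iperf3's `-c`, `-P`, `-t`, `-C` and `-J` options and on the `end.sum_received.bits_per_second` field of its JSON report.

```python
import json
import subprocess


def iperf3_cmd(server, algo, streams=4, seconds=10):
    """Build an iperf3 client command line for one congestion control algorithm."""
    return [
        "iperf3", "-c", server,   # client mode, target server
        "-P", str(streams),       # number of parallel streams
        "-t", str(seconds),       # test duration in seconds
        "-C", algo,               # TCP congestion control (e.g. cubic, bbr)
        "-J",                     # JSON output for easy parsing
    ]


def gbps_from_json(report):
    """Extract the receiver-side throughput in Gbit/s from an iperf3 JSON report."""
    data = json.loads(report)
    return data["end"]["sum_received"]["bits_per_second"] / 1e9


def compare(server, algos=("cubic", "bbr")):
    """Run one test per algorithm and return a {algorithm: Gbit/s} mapping."""
    results = {}
    for algo in algos:
        out = subprocess.run(
            iperf3_cmd(server, algo),
            capture_output=True, text=True, check=True,
        ).stdout
        results[algo] = gbps_from_json(out)
    return results
```

Calling `compare("10.0.0.2")` on the client VM (with the address replaced by the real server VM) would run both tests back to back. Since results vary a lot between runs, repeating the call several times is probably more informative than a single pass.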

In my testing with AMD EPYC and some other CPUs, results are more consistent overall with BBR, and better on the AMD EPYC side (but no miracle, it's still far from perfect).

AMD EPYC 7262

vm to vm, 4 threads, iperf3

Without BBR : 4.5-6 Gbps (sometimes more; varies a lot)

With BBR : 7-8 Gbps

-

@TeddyAstie That is interesting. I had a look. The default seems to be `cubic`, but `bbr` is available using `modprobe tcp_bbr`. I also wonder if different queuing disciplines (tc qdisc) can help. For example, mqprio, which spreads packets across the available NIC HW queues?

-

@Forza the default one seems to be `cubic`, which in my testing causes chaotic (either good or bad) network performance on XCP-ng (even on non-EPYC platforms), whereas BBR is more consistent (and also better on AMD EPYC).

I also wonder if different queuing disciplines (tc qdisc) can help. For example, mqprio, which spreads packets across the available NIC HW queues?

Regarding PV network, I don't think queue management will change anything as netfront/netback is single-queue. Correction: it's multi-queue, so maybe it changes something.

-

For those who have AMD EPYC 7003 (Zen 3 EPYCs), you may find in Processor settings in firmware

- Enhanced REP MOVSB/STOSB (ERMS)

- Fast Short REP MOVSB (FSRM)

These are apparently disabled by default.

It could be interesting to enable them and see if it changes anything performance-wise. I am not sure if it's just for showing a flag, or if it changes anything in the CPU behavior though.

You can also try REP-MOV/STOS Streaming to see if it changes anything too.
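To see whether the firmware change is visible from Linux, one option is to look for the `erms` and `fsrm` flags in `/proc/cpuinfo`. Below is a minimal Python sketch of that check; the function names are hypothetical. One caveat, stated as an assumption: inside a Xen guest or dom0 the hypervisor may filter what CPUID exposes, so a missing flag there does not necessarily mean the firmware option is off.

```python
def cpu_flags(cpuinfo_text):
    """Return the set of CPU flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def rep_mov_features(cpuinfo_text):
    """Report whether the 'erms' and 'fsrm' flags are visible to the kernel."""
    flags = cpu_flags(cpuinfo_text)
    return {name: name in flags for name in ("erms", "fsrm")}
```

On a Linux system this could be used as `rep_mov_features(open("/proc/cpuinfo").read())`, once before and once after changing the firmware settings.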

-

I'm attaching results with Epyc 9004 / AS-1015CS-TNR-EU / MB: H13SSW

| user | cpu | family | market | v2v 1T | v2v 4T | h2v 1T | h2v 4T | notes |
|---|---|---|---|---|---|---|---|---|
| dknight-bg | EPYC 9354P | Zen4 | server | 5.10 G (130/150/250) | 6.24 G (131/254/348) | 11.1 G (0/131/216) | 11.1 G (0/187/302) | Disabled: Enhanced REP MOVSB/STOSB (ERMS), Fast Short REP MOVSB (FSRM) |
| dknight-bg | EPYC 9354P | Zen4 | server | 6.71 G (112/223/269) | 7.11 G (122/261/342) | 11.3 G (0/145/190) | 11.5 G (0/179/282) | Enabled: Enhanced REP MOVSB/STOSB (ERMS), Fast Short REP MOVSB (FSRM) |

I couldn't find a setting for REP-MOV/STOS Streaming in the BIOS, nor in the MB manual.
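To put the two result rows side by side, here is a small Python sketch that computes the relative change per test between the "Disabled" and "Enabled" runs. It assumes the "G" figures are Gbit/s and ignores the numbers in parentheses. These are single runs, so the percentages are only a rough indication.

```python
def relative_gain(before, after):
    """Percentage change going from `before` to `after`."""
    return (after - before) / before * 100.0


# Throughput figures copied from the results above (assumed to be Gbit/s).
disabled = {"v2v 1T": 5.10, "v2v 4T": 6.24, "h2v 1T": 11.1, "h2v 4T": 11.1}
enabled = {"v2v 1T": 6.71, "v2v 4T": 7.11, "h2v 1T": 11.3, "h2v 4T": 11.5}

gains = {test: round(relative_gain(disabled[test], enabled[test]), 1)
         for test in disabled}

for test, gain in gains.items():
    print(f"{test}: {gain:+.1f}%")
```

With these numbers, the VM-to-VM tests move by roughly 32% (1 thread) and 14% (4 threads), while the host-to-VM tests change by only a few percent.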