@Forza said in Xen Orchestra Lite:

> I tried this, but I just get a 403 Forbidden when trying to access the pool master from the browser. Do I need to start a web server or something to get this going?

Use `https://`.

I struggled with the same thing.

@infodavid I don't think I ever managed to make it work. It's been 4 years, and after many ups and downs with USB in VMs, I decided to let it go and no longer attach USB devices to VMs.

@julien-f Unfortunately, it did not help. I removed all snapshots of this VM from its Snapshots tab in XO. This triggered some coalescing, visible in the SR > Advanced tab of the SR where the VM is located. I waited until it finished and ran the backup again, but got the same error.

I hope I'm not hijacking the thread, but I have had the same problem for over a week: a delta backup job with 4 VMs, one of which constantly fails with the same error. The remote is an NFS share.

Xen Orchestra, commit 395d8

```json
{
  "data": {
    "mode": "delta",
    "reportWhen": "failure"
  },
  "id": "1656280800006",
  "jobId": "974bff41-5d64-49a9-bdcf-1638ea45802f",
  "jobName": "Daily Delta backup",
  "message": "backup",
  "scheduleId": "df3701cc-ca3e-4a7c-894c-5899a0d3c3e7",
  "start": 1656280800006,
  "status": "failure",
  "infos": [
    {
      "data": {
        "vms": [
          "c07d9c18-065c-be11-2a6d-a6c3988b0538",
          "15d248ea-f95c-332f-8aa8-851a49a29494",
          "93f1634d-ecaa-e4b7-b9b5-2c2fc4a76695",
          "d71b5012-b13d-eae5-71fc-5028fa44611e"
        ]
      },
      "message": "vms"
    }
  ],
  "tasks": [
    {
      "data": {
        "type": "VM",
        "id": "c07d9c18-065c-be11-2a6d-a6c3988b0538"
      },
      "id": "1656280802155",
      "message": "backup VM",
      "start": 1656280802155,
      "status": "failure",
      "tasks": [
        {
          "id": "1656280802805",
          "message": "clean-vm",
          "start": 1656280802805,
          "status": "success",
          "end": 1656280802835,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1656280802874",
          "message": "snapshot",
          "start": 1656280802874,
          "status": "success",
          "end": 1656280809980,
          "result": "b6daf2e9-bbed-97fc-ed59-a6070a948cf9"
        },
        {
          "data": {
            "id": "e0bd1fee-ca05-4f0e-98bf-595c41be9924",
            "isFull": true,
            "type": "remote"
          },
          "id": "1656280809981",
          "message": "export",
          "start": 1656280809981,
          "status": "failure",
          "tasks": [
            {
              "id": "1656280810039",
              "message": "transfer",
              "start": 1656280810039,
              "status": "failure",
              "end": 1656281025733,
              "result": {
                "generatedMessage": false,
                "code": "ERR_ASSERTION",
                "actual": false,
                "expected": true,
                "operator": "==",
                "message": "footer1 !== footer2",
                "name": "AssertionError",
                "stack": "AssertionError [ERR_ASSERTION]: footer1 !== footer2\n at VhdFile.readHeaderAndFooter (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/Vhd/VhdFile.js:189:7)\n at async Function.open (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/Vhd/VhdFile.js:93:5)\n at async openVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/openVhd.js:10:12)\n at async Promise.all (index 0)\n at async checkVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/writers/_checkVhd.js:7:3)\n at async NfsHandler._outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/fs/dist/abstract.js:604:9)\n at async NfsHandler.outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/fs/dist/abstract.js:245:5)\n at async RemoteAdapter.outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/RemoteAdapter.js:594:5)\n at async RemoteAdapter.writeVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/RemoteAdapter.js:589:7)\n at async /opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/writers/DeltaBackupWriter.js:233:11"
              }
            }
          ],
          "end": 1656281025734,
          "result": {
            "generatedMessage": false,
            "code": "ERR_ASSERTION",
            "actual": false,
            "expected": true,
            "operator": "==",
            "message": "footer1 !== footer2",
            "name": "AssertionError",
            "stack": "AssertionError [ERR_ASSERTION]: footer1 !== footer2\n at VhdFile.readHeaderAndFooter (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/Vhd/VhdFile.js:189:7)\n at async Function.open (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/Vhd/VhdFile.js:93:5)\n at async openVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/openVhd.js:10:12)\n at async Promise.all (index 0)\n at async checkVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/writers/_checkVhd.js:7:3)\n at async NfsHandler._outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/fs/dist/abstract.js:604:9)\n at async NfsHandler.outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/fs/dist/abstract.js:245:5)\n at async RemoteAdapter.outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/RemoteAdapter.js:594:5)\n at async RemoteAdapter.writeVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/RemoteAdapter.js:589:7)\n at async /opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/writers/DeltaBackupWriter.js:233:11"
          }
        }
      ],
      "end": 1656281026510,
      "result": {
        "generatedMessage": false,
        "code": "ERR_ASSERTION",
        "actual": false,
        "expected": true,
        "operator": "==",
        "message": "footer1 !== footer2",
        "name": "AssertionError",
        "stack": "AssertionError [ERR_ASSERTION]: footer1 !== footer2\n at VhdFile.readHeaderAndFooter (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/Vhd/VhdFile.js:189:7)\n at async Function.open (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/Vhd/VhdFile.js:93:5)\n at async openVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/packages/vhd-lib/openVhd.js:10:12)\n at async Promise.all (index 0)\n at async checkVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/writers/_checkVhd.js:7:3)\n at async NfsHandler._outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/fs/dist/abstract.js:604:9)\n at async NfsHandler.outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/fs/dist/abstract.js:245:5)\n at async RemoteAdapter.outputStream (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/RemoteAdapter.js:594:5)\n at async RemoteAdapter.writeVhd (/opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/RemoteAdapter.js:589:7)\n at async /opt/xo/xo-builds/xen-orchestra-202206241101/@xen-orchestra/backups/writers/DeltaBackupWriter.js:233:11"
      }
    },
    {
      "data": {
        "type": "VM",
        "id": "15d248ea-f95c-332f-8aa8-851a49a29494"
      },
      "id": "1656280802194",
      "message": "backup VM",
      "start": 1656280802194,
      "status": "success",
      "tasks": [
        {
          "id": "1656280802804",
          "message": "clean-vm",
          "start": 1656280802804,
          "status": "success",
          "end": 1656280802921,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1656280803262",
          "message": "snapshot",
          "start": 1656280803262,
          "status": "success",
          "end": 1656280821804,
          "result": "6e02fc2a-9fbc-6e3b-f895-a4dd079105f8"
        },
        {
          "data": {
            "id": "e0bd1fee-ca05-4f0e-98bf-595c41be9924",
            "isFull": false,
            "type": "remote"
          },
          "id": "1656280821805",
          "message": "export",
          "start": 1656280821805,
          "status": "success",
          "tasks": [
            {
              "id": "1656280822234",
              "message": "transfer",
              "start": 1656280822234,
              "status": "success",
              "end": 1656281410464,
              "result": {
                "size": 12277793792
              }
            },
            {
              "id": "1656281411017",
              "message": "clean-vm",
              "start": 1656281411017,
              "status": "success",
              "end": 1656281411097,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1656281411101
        }
      ],
      "end": 1656281411102
    },
    {
      "data": {
        "type": "VM",
        "id": "93f1634d-ecaa-e4b7-b9b5-2c2fc4a76695"
      },
      "id": "1656281026511",
      "message": "backup VM",
      "start": 1656281026511,
      "status": "success",
      "tasks": [
        {
          "id": "1656281026565",
          "message": "clean-vm",
          "start": 1656281026565,
          "status": "success",
          "end": 1656281026660,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1656281026800",
          "message": "snapshot",
          "start": 1656281026800,
          "status": "success",
          "end": 1656281028768,
          "result": "d59b9d10-69c1-d55f-664b-f1320d12edf5"
        },
        {
          "data": {
            "id": "e0bd1fee-ca05-4f0e-98bf-595c41be9924",
            "isFull": false,
            "type": "remote"
          },
          "id": "1656281028769",
          "message": "export",
          "start": 1656281028769,
          "status": "success",
          "tasks": [
            {
              "id": "1656281028808",
              "message": "transfer",
              "start": 1656281028808,
              "status": "success",
              "end": 1656281035797,
              "result": {
                "size": 121699840
              }
            },
            {
              "id": "1656281036348",
              "message": "clean-vm",
              "start": 1656281036348,
              "status": "success",
              "end": 1656281037475,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1656281037657
        }
      ],
      "end": 1656281037658
    },
    {
      "data": {
        "type": "VM",
        "id": "d71b5012-b13d-eae5-71fc-5028fa44611e"
      },
      "id": "1656281037658:0",
      "message": "backup VM",
      "start": 1656281037658,
      "status": "success",
      "tasks": [
        {
          "id": "1656281037766",
          "message": "clean-vm",
          "start": 1656281037766,
          "status": "success",
          "end": 1656281037817,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1656281037940",
          "message": "snapshot",
          "start": 1656281037940,
          "status": "success",
          "end": 1656281039700,
          "result": "cb25e9c2-d870-2760-2ce5-5a0ee6c141f3"
        },
        {
          "data": {
            "id": "e0bd1fee-ca05-4f0e-98bf-595c41be9924",
            "isFull": false,
            "type": "remote"
          },
          "id": "1656281039700:0",
          "message": "export",
          "start": 1656281039700,
          "status": "success",
          "tasks": [
            {
              "id": "1656281039771",
              "message": "transfer",
              "start": 1656281039771,
              "status": "success",
              "end": 1656281064244,
              "result": {
                "size": 669190144
              }
            },
            {
              "id": "1656281065362",
              "message": "clean-vm",
              "start": 1656281065362,
              "status": "success",
              "end": 1656281066689,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1656281066938
        }
      ],
      "end": 1656281066939
    }
  ],
  "end": 1656281411102
}
```
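The stack trace shows the assertion being raised by `VhdFile.readHeaderAndFooter` in vhd-lib, reached through `checkVhd` while the VHD is being written to the NFS remote. A dynamic or differencing VHD keeps a copy of its 512-byte footer at the start of the file and the footer itself in the last 512 bytes, so `footer1 !== footer2` presumably means those two copies did not match. The Python sketch below is a hypothetical stand-alone check of that idea, not vhd-lib's code: the function `footers_match` and the script name `check_vhd_footers.py` are made up, and it assumes the files are dynamic or differencing VHDs (fixed VHDs have no leading footer copy).

```python
import sys
from pathlib import Path

FOOTER_SIZE = 512            # a VHD footer is 512 bytes (VHD specification)
FOOTER_COOKIE = b"conectix"  # every VHD footer begins with this cookie


def footers_match(path):
    """Compare the footer copy at offset 0 of a dynamic/differencing VHD with
    the footer stored in the file's last 512 bytes.

    Hypothetical helper illustrating the idea behind vhd-lib's
    'footer1 !== footer2' assertion; it is NOT vhd-lib's implementation.
    Returns a (match, reason) tuple.
    """
    size = Path(path).stat().st_size
    if size < 2 * FOOTER_SIZE:
        return False, "file too small to hold two footers"
    with open(path, "rb") as f:
        head = f.read(FOOTER_SIZE)   # footer copy at the start of the file
        f.seek(size - FOOTER_SIZE)
        tail = f.read(FOOTER_SIZE)   # footer in the last 512 bytes
    if not head.startswith(FOOTER_COOKIE):
        return False, "no footer copy at offset 0"
    if not tail.startswith(FOOTER_COOKIE):
        return False, "no footer in the last 512 bytes (truncated or incomplete file?)"
    if head != tail:
        return False, "footer copies differ"
    return True, "ok"


if __name__ == "__main__":
    # Usage: python3 check_vhd_footers.py FILE.vhd [FILE.vhd ...]
    for vhd in sys.argv[1:]:
        ok, reason = footers_match(vhd)
        print(f"{'OK  ' if ok else 'FAIL'} {vhd}: {reason}")
```

Pointed at `.vhd` files on the remote, a "no footer in the last 512 bytes" result would hint at a truncated or incomplete write on the NFS side rather than a problem with the source VM, though that is an interpretation, not something the log itself proves.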

@olivierlambert I want to reorganize them on NFS so that I can later back them up from NFS to S3 in a more granular way.

To be clear, this is only about XO and NFS; the S3 part would happen outside of XO.

I tried to reply in the old topic, but it was suggested that I open a new one.

TL;DR

How do I move the existing backups of a backup job to a new remote?

I have a couple of XO backup jobs (daily deltas, weekly deltas, monthly full), all of which unfortunately land in the same NFS remote on TrueNAS.

Now I would like to clean things up before backing these backups up to the cloud, so that I can, for example, define S3 storage classes more precisely. The idea is simply to keep daily backups in a daily share/remote, weekly backups in a weekly one, and so on.

I know I can edit the jobs and change their remotes. But can I easily move the existing backups to their respective new remotes? If not, do I need to keep the original remote until all deltas have been deleted according to their retention?

I would like to avoid digging through the `xo-vm-backups` folder by hand to figure out what to move and where.
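For the "what and where" part, a read-only Python sketch could at least list which metadata files on the remote belong to which job. It rests on assumptions I have not verified against XO's code: that each backup has a JSON metadata file directly under `xo-vm-backups/<vm-uuid>/` and that this file carries a `jobId` field. The function `inventory_by_job` and the script name are hypothetical; check the layout on your own remote before relying on the output.

```python
import json
import sys
from collections import defaultdict
from pathlib import Path


def inventory_by_job(remote_root):
    """Map each backup job ID to the metadata files it left on a remote.

    ASSUMPTION (verify on your own remote first): backups live under
    <remote_root>/xo-vm-backups/<vm-uuid>/ and each backup has a JSON
    metadata file in that folder with a "jobId" field.
    Read-only: nothing is moved or deleted.
    """
    backups_dir = Path(remote_root) / "xo-vm-backups"
    jobs = defaultdict(list)
    for meta in sorted(backups_dir.glob("*/*.json")):
        try:
            info = json.loads(meta.read_text())
        except (OSError, UnicodeDecodeError, json.JSONDecodeError):
            continue  # skip unreadable or non-JSON files
        if not isinstance(info, dict):
            continue  # not a metadata object
        job_id = info.get("jobId", "<no jobId>")
        jobs[job_id].append(meta.relative_to(backups_dir).as_posix())
    return dict(jobs)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical): python3 list_backups_by_job.py /path/to/mounted/remote
    for job_id, files in inventory_by_job(sys.argv[1]).items():
        print(f"{job_id}: {len(files)} backup(s)")
        for name in files:
            print(f"  {name}")
```

The job IDs can then be matched against the jobs listed in XO. Actually moving the files is a separate matter: delta backups depend on their parent VHDs, so moving only part of a chain to a new remote would likely leave it unusable.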

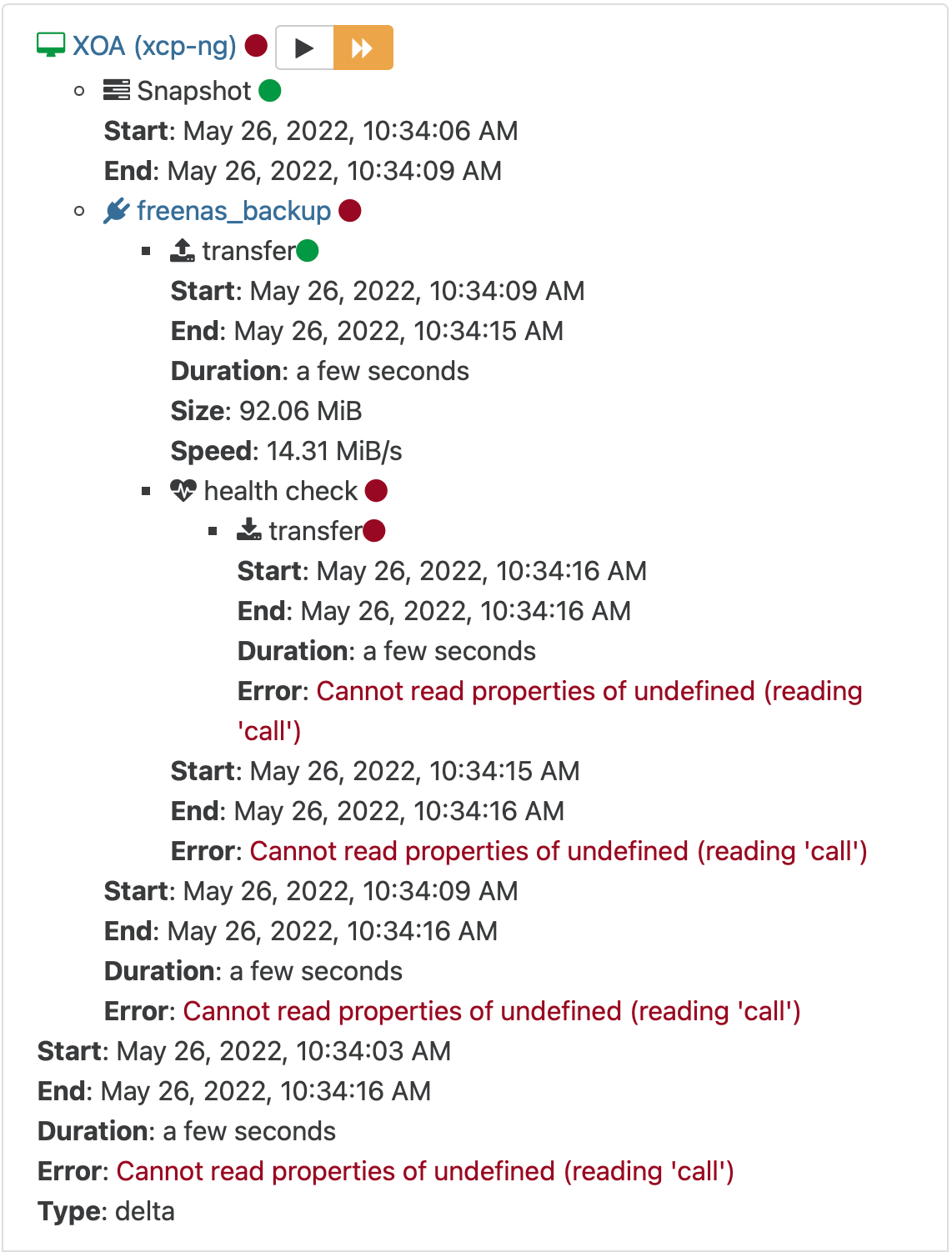

Hello,

Yesterday I updated XO to:

Xen Orchestra, commit b6cff

xo-server 5.93.1

xo-web 5.96.0

This morning I noticed that the nightly backup jobs failed with the following error.

- XOA (xcp-ng)
  - Snapshot
    - Start: May 26, 2022, 10:34:06 AM
    - End: May 26, 2022, 10:34:09 AM
  - freenas_backup
    - transfer
      - Start: May 26, 2022, 10:34:09 AM
      - End: May 26, 2022, 10:34:15 AM
      - Duration: a few seconds
      - Size: 92.06 MiB
      - Speed: 14.31 MiB/s
    - health check
      - transfer
        - Start: May 26, 2022, 10:34:16 AM
        - End: May 26, 2022, 10:34:16 AM
        - Duration: a few seconds
        - Error: Cannot read properties of undefined (reading 'call')
      - Start: May 26, 2022, 10:34:15 AM
      - End: May 26, 2022, 10:34:16 AM
      - Error: Cannot read properties of undefined (reading 'call')
    - Start: May 26, 2022, 10:34:09 AM
    - End: May 26, 2022, 10:34:16 AM
    - Duration: a few seconds
    - Error: Cannot read properties of undefined (reading 'call')
  - Start: May 26, 2022, 10:34:03 AM
  - End: May 26, 2022, 10:34:16 AM
  - Duration: a few seconds
  - Error: Cannot read properties of undefined (reading 'call')
  - Type: delta

What could be the reason for this error? Does it also mean that the backup itself actually finished, and only some post-backup step failed?

Edit: To add, I am backing up to an NFS remote.

@abatex Why are you looking up the SR ID with `vm-list`? Use `xe sr-list` instead.

@olivierlambert Do you plan a similar timeline for 8.2 as for 8.1, i.e., roughly 2 months between beta and final release?

@johanb7 I had the same problem in the past with an FTDI-based USB device. I could see it on the Xen host, but not in the VM. I spent many evenings trying to make it visible in the VM, but with no luck.

I don't know why, but it seems that some USB devices just won't attach to a VM.