I'm having a similar issue with an A400 on XCP-ng 8.3.

The proprietary driver fails with the following messages when running `nvidia-smi`:

NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0x72:1568)

[ 44.619030] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0

[ 45.095040] nvidia_uvm: module uses symbols nvUvmInterfaceDisableAccessCntr from proprietary module nvidia, inheriting taint.

[ 45.144703] nvidia-uvm: Loaded the UVM driver, major device number 241.
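For anyone comparing logs, here is a minimal Python sketch that pulls the `RmInitAdapter failed!` lines out of a kernel log and splits the parenthesised error triple. The function name `find_rm_init_failures` is my own (hypothetical), and it assumes the message format shown above. It does not try to interpret what the codes mean.

```python
import re

# Matches lines such as:
#   NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0x72:1568)
# An optional "[   44.619030] " timestamp prefix is tolerated because search() is used.
RM_FAIL = re.compile(
    r"NVRM: GPU (?P<bdf>[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]): "
    r"RmInitAdapter failed! \((?P<code>[^)]*)\)"
)


def find_rm_init_failures(log_text):
    """Return (pci_address, error_fields) for each RmInitAdapter failure line.

    error_fields is the parenthesised triple split on ':' and left uninterpreted.
    """
    failures = []
    for line in log_text.splitlines():
        m = RM_FAIL.search(line)
        if m:
            failures.append((m.group("bdf"), m.group("code").split(":")))
    return failures


if __name__ == "__main__":
    sample = (
        "NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0x72:1568)\n"
        "[ 44.619030] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0\n"
        "[ 45.144703] nvidia-uvm: Loaded the UVM driver, major device number 241.\n"
    )
    print(find_rm_init_failures(sample))
```

In the guest you could feed it the output of `dmesg` (captured with `subprocess.run(["dmesg"], capture_output=True, text=True).stdout`, which may need root) instead of the hard-coded sample.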

The system does load the driver, though:

[ 6.026970] xen: --> pirq=88 -> irq=36 (gsi=36)

[ 6.027485] nvidia 0000:00:05.0: vgaarb: changed VGA decodes: olddecodes=io+mem,decodes=none:owns=io+mem

[ 6.029010] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 550.144.03 Mon Dec 30 17:44:08 UTC 2024

[ 6.063945] nvidia-modeset: Loading NVIDIA Kernel Mode Setting Driver for UNIX platforms 550.144.03 Mon Dec 30 17:10:10 UTC 2024

[ 6.118261] [drm] [nvidia-drm] [GPU ID 0x00000005] Loading driver

[ 6.118265] [drm] Initialized nvidia-drm 0.0.0 20160202 for 0000:00:05.0 on minor 1

`xl pci-assignable-list` gives:

0000:43:00.0

0000:43:00.1

and the GPU is assigned for passthrough, but when I list the devices of the test VM, the list is empty:

[23:06 epycrep ~]# xl pci-list Avideo-nvidia

[23:35 epycrep ~]#
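To double-check the mismatch between the two commands, here is a small sketch that reports devices which are assignable on the host but missing from the VM's `xl pci-list` output. The helper names are hypothetical, and it assumes both commands print PCI addresses in the `0000:43:00.0` form; any header line is simply ignored because only addresses are extracted.

```python
import re

# A PCI address in domain:bus:device.function form, e.g. 0000:43:00.0
BDF = re.compile(r"\b[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]\b")


def pci_addresses(text):
    """Collect every PCI address that appears anywhere in a command's output."""
    return set(BDF.findall(text))


def assignable_but_not_attached(assignable_out, vm_pci_list_out):
    """Host devices listed as assignable that do not show up in the VM's pci-list."""
    return sorted(pci_addresses(assignable_out) - pci_addresses(vm_pci_list_out))


if __name__ == "__main__":
    assignable = "0000:43:00.0\n0000:43:00.1\n"
    vm_list = ""  # what `xl pci-list Avideo-nvidia` printed above
    print(assignable_but_not_attached(assignable, vm_list))
```

On the host you would pass in the captured stdout of both commands rather than the hard-coded strings.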

I'm not sure I want to try much more before switching to a different GPU. Any hints on where to look?

The server is a Gigabyte G292-Z20 with an EPYC 7402P and a single GPU for testing. IOMMU is enabled.

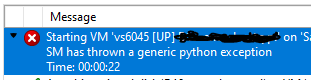

This shows up when trying to start a VM that I updated (`apt-get dist-upgrade`).