Nvidia Quadro P400 not working on Ubuntu server via GPU/PCIe passthrough

-

@olivierlambert But still, if Nvidia has no plans to do for Linux what it did for Windows, how will the XCP-NG/Xen project react to this? Could there be a kernel parameter for Xen to just 'hide' the hypervisor in some way?

-

It's not that easy, sadly. It's not just a parameter; it's a modification that needs to be made directly inside Xen. And after that, the guest won't be able to detect Xen (by definition), so say goodbye to PV drivers too.

Maybe there are more elegant solutions, but after discussing with a core Xen dev, there's no "shortcut": this might take a decent amount of resources. I'm afraid it's not a big priority for us at the moment.

-

@olivierlambert Ahh, that's a real bummer indeed... Let's hope Nvidia does the same for Linux as it did for Windows. Actually, they should; otherwise they're showing an OS preference, so to speak.

-

I believe we have something here... In May 2022 Nvidia announced their open source Linux drivers.

As far as I can tell, this is not much, but it's a start. I found this article: https://developer.nvidia.com/blog/nvidia-releases-open-source-gpu-kernel-modules/

Maybe this gives us (the consumers) and the XCP-NG team more opportunities to make use of Nvidia GPUs?

-

@TheFrisianClause I ended up selling my P400 and buying a P2000 which worked straight away. It's possible the P400 may work in future but I didn't want to wait until then.

Unfortunate waste of money, but I'm pretty happy with the setup now: 2 XCP-NG hosts, with Plex using the P2000 for transcoding, plus game servers and other utilities.

-

@Pyroteq Currently I am running my Plex server on TrueNAS SCALE with HW transcoding, so I don't need it with XCP-NG anymore...

But for the people who do need it, this can be useful to them.

-

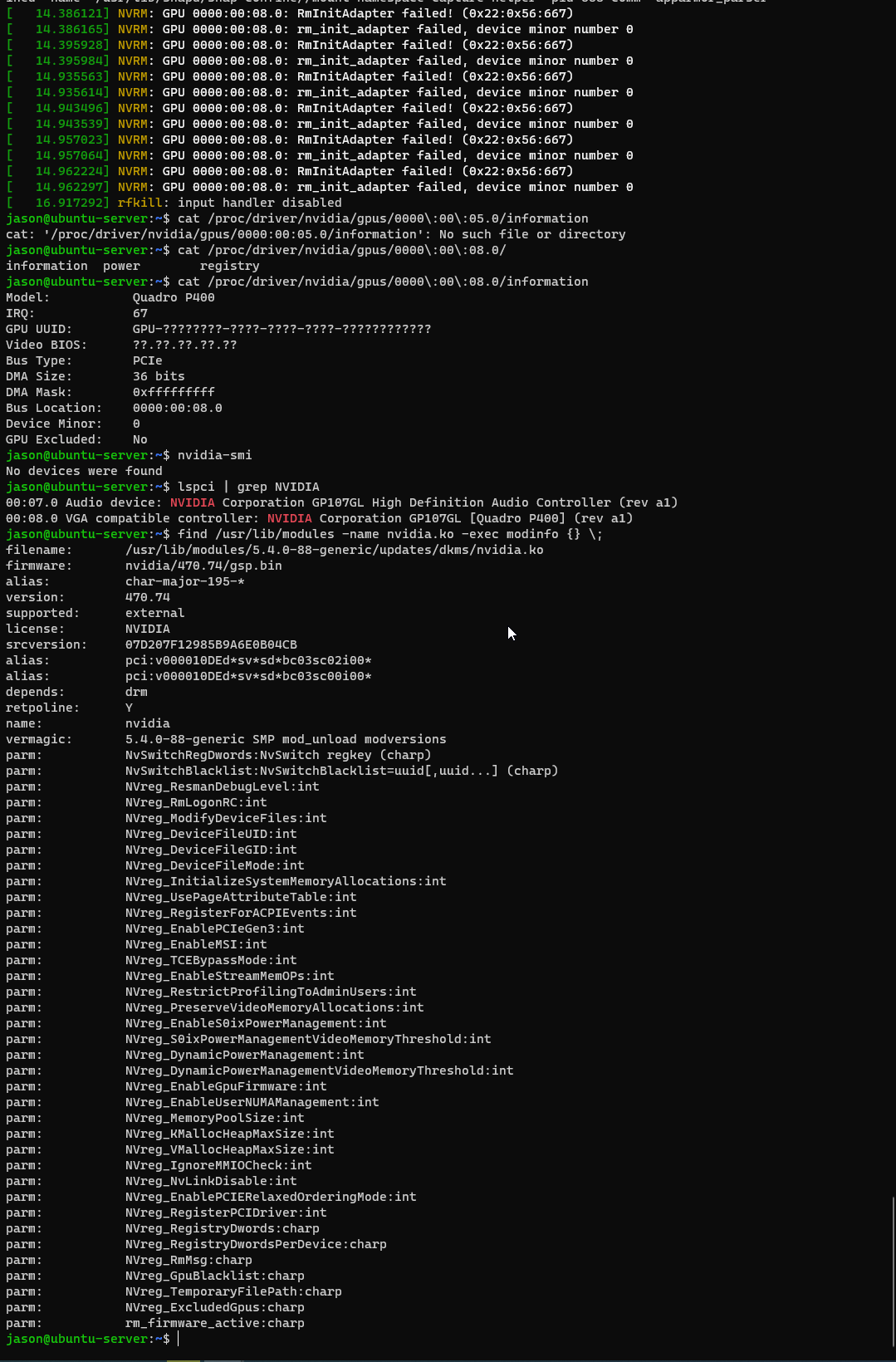

@olivierlambert Hi Olivier, I'm getting exactly the same issue -

Real server: DL380p Gen8 with Nvidia Tesla K80

cat /proc/driver/nvidia/gpus/*/information

Model: Tesla K80

IRQ: 93

GPU UUID: GPU-????????-????-????-????-????????????

Video BIOS: ??.??.??.??.??

Bus Type: PCIe

DMA Size: 40 bits

DMA Mask: 0xffffffffff

Bus Location: 0000:00:05.0

Device Minor: 0

GPU Excluded: No

Jun 08 11:53:48 gpu-1 nvidia-persistenced[1499]: Started (1499)

Jun 08 11:53:49 gpu-1 kernel: resource sanity check: requesting [mem 0xf3700000-0xf46fffff], which spans more than 0000:00:05.0 [mem 0xf3000000-0xf3ffffff]

Jun 08 11:53:49 gpu-1 kernel: caller _nv033206rm+0x39/0xb0 [nvidia] mapping multiple BARs

Jun 08 11:53:49 gpu-1 kernel: NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0xffff:1211)

Jun 08 11:53:49 gpu-1 kernel: NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0

Jun 08 11:53:49 gpu-1 kernel: resource sanity check: requesting [mem 0xf3700000-0xf46fffff], which spans more than 0000:00:05.0 [mem 0xf3000000-0xf3ffffff]

Jun 08 11:53:49 gpu-1 kernel: caller _nv033206rm+0x39/0xb0 [nvidia] mapping multiple BARs

Jun 08 11:53:49 gpu-1 kernel: NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0xffff:1211)

Jun 08 11:53:49 gpu-1 kernel: NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0

Jun 08 11:53:49 gpu-1 nvidia-persistenced[1499]: device 0000:00:05.0 - failed to open.

-

Fixed it!

The hidden BIOS menu (Ctrl-A) on the DL380 seems to have sorted it: in there I enabled PCI Express 64Bit BAR Support.

(Playing with building a gpu enabled kubernetes cluster on XCP-NG.)
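For anyone hitting the same "resource sanity check" lines, here is a minimal Python sketch that parses such a line and reports how far the requested range runs past the BAR the guest sees. The function name `parse_sanity_check` and the returned field names are made up for illustration, and the regex only assumes the message format shown in the log above.

```python
import re

# Matches the "resource sanity check" message format seen in the log above.
_SANITY_RE = re.compile(
    r"requesting \[mem (0x[0-9a-fA-F]+)-(0x[0-9a-fA-F]+)\], "
    r"which spans more than (\S+) \[mem (0x[0-9a-fA-F]+)-(0x[0-9a-fA-F]+)\]"
)


def parse_sanity_check(line):
    """Return the requested range, the BAR range and the overflow in bytes.

    Returns None if the line is not a 'resource sanity check' message.
    (Hypothetical helper, not part of any NVIDIA or Xen tool.)
    """
    m = _SANITY_RE.search(line)
    if m is None:
        return None
    req_start, req_end = int(m.group(1), 16), int(m.group(2), 16)
    bar_start, bar_end = int(m.group(4), 16), int(m.group(5), 16)
    # Bytes requested below the BAR start plus bytes requested past its end.
    overflow = max(0, bar_start - req_start) + max(0, req_end - bar_end)
    return {
        "device": m.group(3),
        "requested_size": req_end - req_start + 1,
        "bar_size": bar_end - bar_start + 1,
        "overflow_bytes": overflow,
    }


if __name__ == "__main__":
    sample = (
        "Jun 08 11:53:49 gpu-1 kernel: resource sanity check: requesting "
        "[mem 0xf3700000-0xf46fffff], which spans more than 0000:00:05.0 "
        "[mem 0xf3000000-0xf3ffffff]"
    )
    print(parse_sanity_check(sample))
```

On the line from my log this shows a 16 MiB request starting partway into a 16 MiB BAR, so it runs past the end of it. That at least fits with the BAR setting in the BIOS being what made the difference.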

-

Yaaay!!! Nice catch!

-

I'm having a similar issue with an A400 on XCP-ng 8.3.

The proprietary driver fails with the following message when running nvidia-smi:

NVRM: GPU 0000:00:05.0: RmInitAdapter failed! (0x24:0x72:1568)

[ 44.619030] NVRM: GPU 0000:00:05.0: rm_init_adapter failed, device minor number 0

[ 45.095040] nvidia_uvm: module uses symbols nvUvmInterfaceDisableAccessCntr from proprietary module nvidia, inheriting taint.

[ 45.144703] nvidia-uvm: Loaded the UVM driver, major device number 241.

The system is actually loading the driver:

[ 6.026970] xen: --> pirq=88 -> irq=36 (gsi=36)

[ 6.027485] nvidia 0000:00:05.0: vgaarb: changed VGA decodes: olddecodes=io+mem,decodes=none:owns=io+mem

[ 6.029010] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 550.144.03 Mon Dec 30 17:44:08 UTC 2024

[ 6.063945] nvidia-modeset: Loading NVIDIA Kernel Mode Setting Driver for UNIX platforms 550.144.03 Mon Dec 30 17:10:10 UTC 2024

[ 6.118261] [drm] [nvidia-drm] [GPU ID 0x00000005] Loading driver

[ 6.118265] [drm] Initialized nvidia-drm 0.0.0 20160202 for 0000:00:05.0 on minor 1

xl pci-assignable-list gives:

0000:43:00.0

0000:43:00.1

and the GPU is assigned as passthrough, but when I list the test VM's devices I get an empty list:

[23:06 epycrep ~]# xl pci-list Avideo-nvidia

[23:35 epycrep ~]#

Not sure if I want to try more before switching the GPU to something else. Any hints on where to look?

Server is a Gigabyte G292-Z20 with an EPYC 7402P and a single GPU for testing. IOMMU is enabled.
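To make the comparison between the two `xl` outputs above repeatable, here is a rough Python sketch that pulls PCI addresses (BDFs) out of pasted command output and lists the ones that are assignable but do not show up for the guest. The helper names are hypothetical, it works purely on text you paste in, and it makes no assumption about the exact column layout `xl pci-list` prints.

```python
import re

# A PCI address in domain:bus:device.function form, e.g. 0000:43:00.0
BDF_RE = re.compile(r"\b[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]\b")


def extract_bdfs(text):
    """Collect every PCI address found in a blob of command output."""
    return {m.group(0).lower() for m in BDF_RE.finditer(text)}


def assignable_but_not_attached(assignable_output, guest_output):
    """Addresses seen in the assignable list but absent from the guest list.

    Both arguments are plain text, e.g. the pasted output of
    `xl pci-assignable-list` and `xl pci-list <domain>`.
    """
    return sorted(extract_bdfs(assignable_output) - extract_bdfs(guest_output))


if __name__ == "__main__":
    assignable = "0000:43:00.0\n0000:43:00.1\n"
    guest = ""  # `xl pci-list Avideo-nvidia` printed nothing
    print(assignable_but_not_attached(assignable, guest))
```

With the outputs from this post it reports both functions of the card as not attached, which is just a restatement of what I see by eye, but it is handy when checking several VMs.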
