Network bonds in XCP-ng

Today we're going to talk a little bit about bonds in XCP-ng, the easiest way to achieve network redundancy for your hosts. There are many different types of bonds and each type has its own specific requirements, so we'll outline the major types that most users find themselves using. The main types of bonds you can choose from in XCP-ng are:

- Active / Active (Balance-SLB) - This bond type usually does not require the switch to be "aware" of the bond, so it can be used with switches that do not support LACP or similar protocols. It does its best to balance traffic across all bond members, and if a bond member fails, traffic moves over to the remaining bond member(s). Note that some low-end switches may have issues with this bond type, since it periodically moves VM MAC addresses between bond members to rebalance traffic.

- Active / Backup - This bond type does not require the switch to be "aware" of the bond, so it can be used with any type of switch. It utilizes one bond member for all traffic in normal situations, and only during a link failure will it move traffic over to the backup port.

- LACP - LACP is an active link aggregation protocol that requires the switch to be LACP-aware and configured for LACP on the associated ports. The upside is that it can detect path failures even if the physical ports do not drop, and it can fail over very quickly. It balances traffic across all bond members during normal use.
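When you create a bond from the CLI (covered later in this article), these bond types correspond to the mode= values accepted by xe bond-create: balance-slb, active-backup and lacp. As a small sketch, the shell function below translates the names used above into those values and rejects anything else; bond_mode_for is a hypothetical name, not an XCP-ng command.

```shell
#!/bin/sh
# Hypothetical helper (not an XCP-ng command): map the bond type names used in
# this article to the mode= values accepted by xe bond-create.
bond_mode_for() {
  case "$1" in
    active-active | balance-slb) echo "balance-slb" ;;
    active-backup) echo "active-backup" ;;
    lacp) echo "lacp" ;;
    *)
      echo "unknown bond type: $1" >&2
      return 1
      ;;
  esac
}

# Example: bond_mode_for active-active   (prints balance-slb)
```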

For this article, I'll walk through creating an LACP bond. This is what we use in production, and it's a very popular choice: it has minimal failover time and can detect mid-span link failures that don't drop the physical link from the host's perspective (e.g. failures on the other side of a media converter).

🌐 Creating an LACP bond in Xen Orchestra

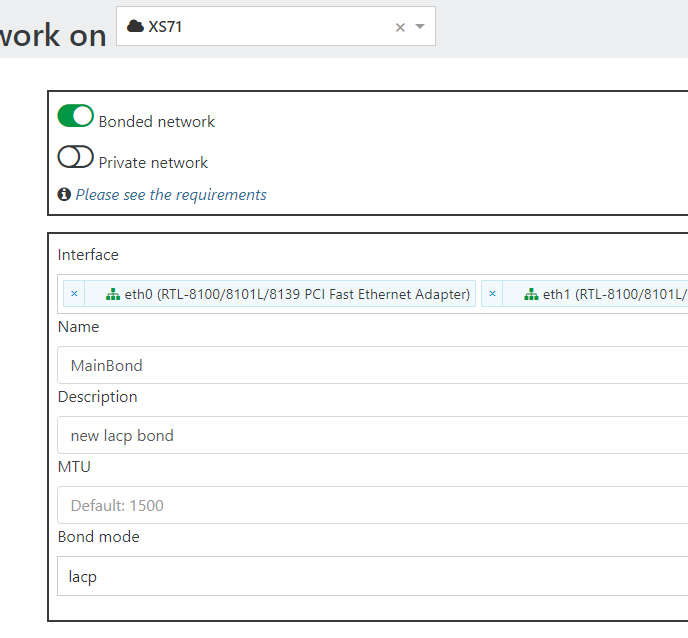

In Xen Orchestra, this is quite simple. Navigate to New > Network to open the Create Network page. Choose the pool you'd like to create the bond on, then be sure to activate the "Bonded network" toggle. Next, in the Interface dropdown, select two or more interfaces you'd like to turn into an LACP bond.

Give the network a name and description, and of course set the "Bond mode" to LACP. In 99% of cases you should leave the MTU blank (default). Click "Create network" and the new bond will be created on your host. If this is a pool, the bond is created on all pool members, so make sure every pool member is cabled and configured for LACP on the switch side!

Keep in mind that if your host(s) had their management interface on any of the interfaces you just added to the bond, XCP-ng will automatically move the management interface on top of the new bond. For example, if your management interface was on eth0 and you create a bond from eth0 and eth1, the management interface will automatically be moved to the new bond network.

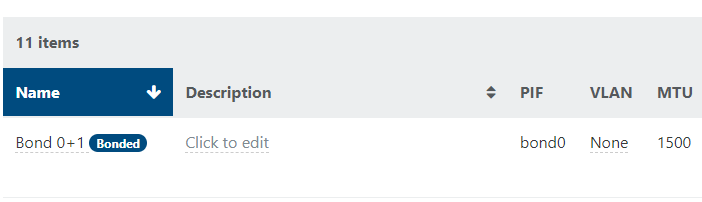

Your pool now has a new network (bond0) that you can assign to any VMs that should use this bond:

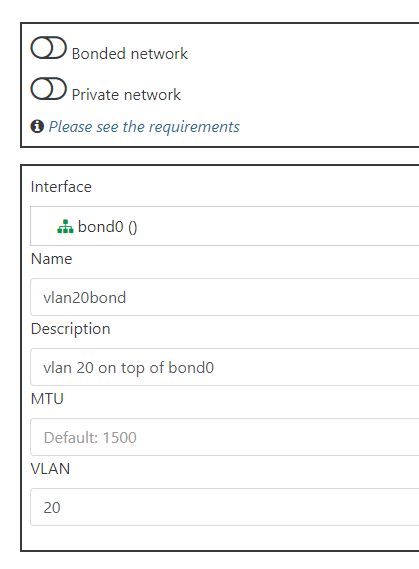

That's it! If you'd like to create VLAN networks on top of this new bond, create a new network from the New > Network page as before, but select the new bond0 interface and fill in the VLAN field. For instance, for a VLAN 20 network on this bond, enter 20 in the VLAN field.

You'll then have a new network, with the name you provided, that you can assign to VMs; it will use VLAN 20 on top of your new bond. The whole process is the same if you'd rather use the Active/Active or Active/Backup bond modes instead of LACP: just select the appropriate mode when creating the bond on the network creation page.
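If you only have CLI access, the same VLAN-on-bond setup can be sketched with xe: create an empty network, then attach a tagged VLAN PIF to it on top of the untagged bond PIF with xe vlan-create. The function name create_vlan_on_bond and its arguments are hypothetical; the sketch assumes you run it in dom0 and already know the bond PIF's UUID (the bond0 entry with VLAN -1 in xe pif-list). Unlike Xen Orchestra, xe vlan-create acts on the single host that owns that PIF; for pools, xe also offers a pool-vlan-create command, which is worth checking with xe help pool-vlan-create on your version.

```shell
#!/bin/sh
# Sketch: create a VLAN network on top of an existing bond with xe.
# Assumptions: run in dom0 on the host that owns the bond PIF;
# create_vlan_on_bond is a hypothetical helper name.
# Usage: create_vlan_on_bond <bond-pif-uuid> 20 "VLAN 20"
create_vlan_on_bond() (
  bond_pif_uuid="$1"   # untagged bond PIF (device bond0, VLAN -1)
  vlan_tag="$2"        # VLAN ID, e.g. 20
  name="$3"            # name-label for the new network
  # Step 1: create an empty network; xe prints its UUID.
  net_uuid=$(xe network-create name-label="$name") || exit 1
  # Step 2: add a tagged VLAN PIF on top of the bond, attached to that network.
  xe vlan-create network-uuid="$net_uuid" pif-uuid="$bond_pif_uuid" vlan="$vlan_tag"
)
```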

🖥️ Creating a bond from the CLI (no network access)

Occasionally users don't have access to a management client like Xen Orchestra, or have no working networking on the host and only CLI access remaining. In either case, you can also create a bond from the XCP-ng host CLI using xe.

First, create a new network (named bond1 in this example):

```shell
xe network-create name-label="bond1"
```

That command should return the UUID of the new network it created; save it somewhere, as you'll need it later. Next, get the UUIDs of the physical ports you'd like to use in the bond:

```shell
xe pif-list
```
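On a host with many NICs, scanning the full xe pif-list output by eye gets tedious. Below is a minimal sketch of a filtered lookup; pif_uuid_for is a hypothetical helper name, and it assumes you pass in the host UUID (from xe host-list), since on a pool every host has its own eth0, eth1 and so on. params=uuid restricts the output to the UUID field, and --minimal prints just the bare value.

```shell
#!/bin/sh
# Sketch: print the UUID of one NIC's PIF (e.g. eth0) on one host.
# Assumptions: pif_uuid_for is a hypothetical helper; the host UUID comes
# from xe host-list.
# Usage: pif_uuid_for <host-uuid> eth0
pif_uuid_for() (
  host_uuid="$1"
  device="$2"
  # Filter by host and device name; params=uuid + --minimal prints just the UUID.
  xe pif-list host-uuid="$host_uuid" device="$device" params=uuid --minimal
)
```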

Note down the UUIDs of the physical ports (PIFs) you'd like to bond together. Then use all the UUIDs you gathered in the following command: replace <network-uuid> with the UUID returned by the first xe network-create command, and replace UUID#1 and UUID#2 with the UUIDs of the Ethernet ports you'd like to use in the bond. You can use tab completion to avoid typing out each UUID in full in these commands:

```shell
xe bond-create mode=lacp network-uuid=<network-uuid> pif-uuids=<UUID#1>,<UUID#2>
```

As an example, here's what my command looked like:

```shell
xe bond-create mode=lacp network-uuid=20b66f78-1d7f-c831-5d7b-3fa83251d379 pif-uuids=a3d4bd42-6736-67b9-7aed-622d285dd7dc,893d1488-0188-0e7e-829d-895cefb7a4cf
```

It will return a new UUID, which is the UUID of the new bond itself. That's it! You now have a new bond network you can assign to VMs.
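The two CLI steps above can be wrapped into a single helper. This is a sketch under a few assumptions: create_bond is a hypothetical name, it runs in dom0 on the host that owns the PIFs, and you pass it the PIF UUIDs gathered with xe pif-list. Because PIF UUIDs belong to one host, it only bonds ports on that host; on a pool you would presumably repeat it per host with that host's PIF UUIDs.

```shell
#!/bin/sh
# Sketch: network-create + bond-create in one step.
# Assumptions: create_bond is a hypothetical helper; run in dom0 on the host
# that owns the PIFs; PIF UUIDs come from xe pif-list.
# Usage: create_bond lacp bond1 <UUID#1> <UUID#2>
create_bond() (
  mode="$1"   # balance-slb, active-backup or lacp
  name="$2"   # name-label for the new bond network
  shift 2
  [ "$#" -ge 2 ] || { echo "need at least two PIF UUIDs" >&2; exit 1; }
  # Join the remaining arguments with commas, as pif-uuids= expects.
  pifs=$(IFS=,; echo "$*")
  # network-create prints the UUID of the new network.
  net_uuid=$(xe network-create name-label="$name") || exit 1
  # bond-create prints the UUID of the new bond.
  xe bond-create mode="$mode" network-uuid="$net_uuid" pif-uuids="$pifs"
)
```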

🛠️ Creating a new management interface on your bond via CLI

If you have lost your management interface (and that's why you're stuck using the CLI), you can now configure a management interface on top of this new bond manually. First, find the UUID of the bond PIF by running xe pif-list: look for the entry whose device is bond0 and whose VLAN value is -1 (meaning no VLAN). For instance, on my machine:

```text
uuid ( RO) : f7baee9b-67b5-860f-8bad-69f39088d4a4
device ( RO): bond0
currently-attached ( RO): true
VLAN ( RO): -1
network-uuid ( RO): 6e93da18-f159-4801-f67a-eccb78cc0b62
```

Use that uuid (the first line) in the following command, along with the network settings you'd like to assign to this host:

```shell
xe pif-reconfigure-ip uuid=f7baee9b-67b5-860f-8bad-69f39088d4a4 netmask=255.255.255.0 gateway=192.168.1.1 IP=192.168.1.5 mode=static
```

Now we just need to tell XCP-ng to use this interface as the management interface (use the same top UUID from before):

```shell
xe host-management-reconfigure pif-uuid=f7baee9b-67b5-860f-8bad-69f39088d4a4
```

That's it! Assuming LACP is properly configured on the switch side, you should now be able to access the host via the IP you assigned.
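For the no-network scenario above, the lookup and both reconfiguration commands can be combined. This is a hedged sketch: set_mgmt_on_bond is a hypothetical helper, the bond0 device and static addressing mirror the example above, and it assumes xe pif-list accepts host-uuid, device and VLAN as filters (the field names shown in the pif-list output). Run it from the host console, since changing the management interface drops any existing network session.

```shell
#!/bin/sh
# Sketch: give the untagged bond PIF a static IP and make it the management
# interface. Assumptions: set_mgmt_on_bond is a hypothetical helper; run from
# the host console in dom0; the bond device is bond0 as in the example above.
# Usage: set_mgmt_on_bond <host-uuid> 192.168.1.5 255.255.255.0 192.168.1.1
set_mgmt_on_bond() (
  host_uuid="$1" ip="$2" netmask="$3" gateway="$4"
  # Find the untagged (VLAN -1) bond0 PIF on this host.
  pif=$(xe pif-list host-uuid="$host_uuid" device=bond0 VLAN=-1 params=uuid --minimal)
  [ -n "$pif" ] || { echo "no untagged bond0 PIF found" >&2; exit 1; }
  # Same as the pif-reconfigure-ip command above.
  xe pif-reconfigure-ip uuid="$pif" mode=static IP="$ip" netmask="$netmask" gateway="$gateway" || exit 1
  # Same as the host-management-reconfigure command above.
  xe host-management-reconfigure pif-uuid="$pif"
)
```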

💪 Advanced tips

If you're an experienced user, there are a few further options you can toggle. If you don't know what these are, you can safely ignore them!

⏲️ Set the LACP rate for the bond

Like most LACP implementations, XCP-ng defaults to the slow LACP rate. Note that this only refers to how often LACP PDU packets are exchanged, not to the link speed. The rate does not have to match what is configured on the switch side.

To change the LACP rate to fast, so that PDUs are exchanged every second instead of every 30 seconds, first find the UUID of your bond:

```shell
xe bond-list
```

Copy the resulting UUID of the bond, and use it in the following command:

```shell
xe bond-param-set uuid=<bond-uuid> properties:lacp-time=fast
```

You'll need to reboot for this to take effect.
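Each host in a pool has its own bond object, so the rate has to be set on each one. The sketch below applies the setting to one or more bonds; set_lacp_time is a hypothetical helper, and when no UUIDs are passed it assumes xe bond-list can be filtered with mode=lacp to select only the LACP bonds. The reboot requirement above still applies.

```shell
#!/bin/sh
# Sketch: set the LACP rate on one or more bonds.
# Assumptions: set_lacp_time is a hypothetical helper; with no UUIDs given,
# it selects every LACP bond reported by xe bond-list (all pool members).
# Usage: set_lacp_time fast [<bond-uuid> ...]
set_lacp_time() (
  rate="$1"
  case "$rate" in
    fast | slow) ;;
    *) echo "rate must be fast or slow" >&2; exit 1 ;;
  esac
  shift
  if [ "$#" -eq 0 ]; then
    # --minimal prints a comma-separated list; turn it into positional args.
    set -- $(xe bond-list mode=lacp params=uuid --minimal | tr ',' ' ')
  fi
  for bond in "$@"; do
    xe bond-param-set uuid="$bond" properties:lacp-time="$rate" || exit 1
  done
)
```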

The LACP rate setting is a common point of misunderstanding. It refers to how often LACP PDU packets are exchanged with the LACP partner (your switch, in this case). See below for more details.

🤿 A quick dive into LACP rate/PDU

LACP PDU packets serve a couple of purposes, one of which is to act as a keep-alive. Each side advertises its configured rate in the PDUs it sends, and its partner then transmits PDUs at that requested interval. If no PDU is received within 3x that interval (3x 1s = 3s for fast, 3x 30s = 90s for slow), the link is treated as dead, and LACP disables it and moves traffic over to the remaining links. This is why the rate does not need to match on both sides of an LACP link: each side only tells its partner how often it wants to hear from it. If your host is set to fast but the switch is set to slow, the switch will still send PDUs to the host every second, so no links will mistakenly be marked as dead; the switch side will simply take longer (up to 90 seconds) to notice a failure. For optimal failover speed (assuming your network is stable enough), both sides should of course be set to fast.
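The keep-alive timing above boils down to one multiplication: the dead interval is 3x the PDU interval. As a trivial sketch (lacp_timeout is a hypothetical helper, not part of XCP-ng or Open vSwitch), the function below returns that timeout in seconds for each rate: lacp_timeout fast prints 3 and lacp_timeout slow prints 90.

```shell
#!/bin/sh
# Sketch of the keep-alive arithmetic: a link is treated as dead when no PDU
# arrives within 3x the PDU interval (1 s for fast, 30 s for slow).
# lacp_timeout is a hypothetical helper, not part of XCP-ng or Open vSwitch.
lacp_timeout() (
  case "$1" in
    fast) interval=1 ;;
    slow) interval=30 ;;
    *) echo "unknown rate: $1" >&2; exit 1 ;;
  esac
  echo "$((3 * interval))"
)
```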

Another common point of misunderstanding is when these PDU keep-alives are actually relied upon to fail over traffic. Given the timing above, you might think it could take up to 3 seconds (or a full 90 seconds with the slow rate!) for a link to be marked dead and for traffic to move to working links, but this is not the case. When a port physically changes state (for example, when a cable is unplugged, fails, or bounces), the link is marked dead and traffic is moved almost instantly (within milliseconds). PDU packets, and by extension the PDU rate you've set, do not come into play when a link is lost at either end.

They do come into play when a link fails in the middle of a more complex path, where neither end (the host or the switch) loses its link status. For example, a host might connect to an active fiber media converter, which runs over a few miles of fiber to another media converter connected to the switch. If the fiber in the middle is cut, the host's and switch's physical Ethernet ports stay up because they're plugged into active media converters. This is where the PDUs come in handy: when the expected PDUs stop arriving, LACP fails the link over. If your hosts are connected directly to your switches, you don't need to worry about this.

🔢 Advanced monitoring

If you'd like to see more detailed status information about your bond(s), you can use some Open vSwitch (OVS) commands on the host itself.

ovs-appctl lacp/show will show the overall current LACP status, along with a lot of details of each bond member:

```text
[03:58 XEN-HOME-02 ~]# ovs-appctl lacp/show
---- bond0 ----
status: active negotiated
sys_id: 00:02:c9:3a:7e:c0
sys_priority: 65534
aggregation key: 1
lacp_time: fast
slave: eth0: current attached
port_id: 2
port_priority: 65535
may_enable: true
actor sys_id: 00:02:c9:3a:7e:c0
actor sys_priority: 65534
actor port_id: 2
actor port_priority: 65535
actor key: 1
actor state: activity timeout aggregation synchronized collecting distributing
partner sys_id: cc:4e:24:b8:d9:d0
partner sys_priority: 1
partner port_id: 136
partner port_priority: 1
partner key: 20003
partner state: activity timeout aggregation synchronized collecting distributing
----trimmed----
```
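To check all members at a glance (for example from a monitoring script), you can filter this output. The sketch below rests on assumptions: lacp_member_states is a hypothetical helper that reads ovs-appctl lacp/show output on stdin, expects member lines shaped like "slave: eth0: current attached" as in the sample above, and also accepts a "member:" prefix in case your Open vSwitch release uses that wording. It prints each member with its state and returns non-zero if any member is not "current attached".

```shell
#!/bin/sh
# Sketch: list LACP bond members and their state from ovs-appctl lacp/show
# output on stdin; exits non-zero if any member is not "current attached".
# Assumptions: lacp_member_states is a hypothetical helper; member lines look
# like "slave: eth0: current attached" (or "member: ..." on some OVS releases).
# Usage: ovs-appctl lacp/show | lacp_member_states
lacp_member_states() {
  awk '
    BEGIN { bad = 0 }
    /^[ \t]*(slave|member):/ {
      line = $0
      # Drop the "slave:" / "member:" prefix, leaving "eth0: current attached".
      sub(/^[ \t]*(slave|member):[ \t]*/, "", line)
      split(line, parts, ":")
      state = parts[2]
      sub(/^[ \t]+/, "", state)
      print parts[1], state
      if (state != "current attached") bad = 1
    }
    END { exit bad }
  '
}
```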

ovs-appctl bond/show will show overall bond details for any type of bond, not just LACP bonds:

```text
[04:09 XEN-HOME-02 ~]# ovs-appctl bond/show
---- bond0 ----
bond_mode: balance-tcp
bond may use recirculation: yes, Recirc-ID : 1
bond-hash-basis: 0
updelay: 31000 ms
downdelay: 200 ms
next rebalance: 8704 ms
lacp_status: negotiated
active slave mac: 00:02:c9:3a:7e:c0(eth0)
slave eth0: enabled
active slave
may_enable: true
hash 108: 2 kB load
hash 128: 13583 kB load
hash 142: 1319 kB load
hash 151: 3 kB load
hash 157: 1 kB load
hash 175: 54 kB load
hash 223: 1 kB load
hash 247: 1 kB load
----trimmed----
```
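Similarly, a hypothetical bond_disabled_members helper can scan ovs-appctl bond/show output for members that are not enabled. It assumes member lines look like "slave eth0: enabled" as in the sample above (or start with "member" on newer Open vSwitch releases) and that a failed member is reported as disabled; it prints the names of disabled members and returns non-zero if there are any.

```shell
#!/bin/sh
# Sketch: print bond members that ovs-appctl bond/show reports as disabled
# (read on stdin); exits non-zero if there are any.
# Assumptions: bond_disabled_members is a hypothetical helper; member lines
# look like "slave eth0: enabled" (or "member eth0: ..." on some OVS releases).
# Usage: ovs-appctl bond/show | bond_disabled_members
bond_disabled_members() {
  awk '
    BEGIN { bad = 0 }
    /^[ \t]*(slave|member) [^:]+: (enabled|disabled)/ {
      line = $0
      # Drop the "slave " / "member " prefix, leaving "eth0: enabled".
      sub(/^[ \t]*(slave|member) /, "", line)
      split(line, parts, ":")
      if (parts[2] ~ /disabled/) { print parts[1]; bad = 1 }
    }
    END { exit bad }
  '
}
```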

ovs-vsctl list port bond0 will show details about the bond interface itself:

```text
[04:10 XEN-HOME-02 ~]# ovs-vsctl list port bond0
_uuid : 16d25e3e-d8e9-4fb0-9713-930bbf033918
bond_active_slave : "00:02:c9:3a:7e:c0"
bond_downdelay : 200
bond_fake_iface : false
bond_mode : balance-tcp
bond_updelay : 31000
external_ids : {}
fake_bridge : false
interfaces : [3dd372d2-a2b3-49f3-ae7a-5d96d1cb1944, e21cf8f8-04bd-410e-8278-2012f835b45c]
lacp : active
mac : "00:02:c9:3a:7e:c0"
name : "bond0"
other_config : {bond-detect-mode=miimon, bond-miimon-interval="100", lacp-fallback-ab="true", lacp-time=fast}
---trimmed---
```
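If you only want one value, such as the LACP rate discussed earlier, ovs-vsctl get can read a single key from the Port record's other_config map instead of listing the whole port. The sketch below wraps that in a hypothetical check_lacp_time helper; the OVS_VSCTL variable is an assumption added only so the command can be swapped for a stub in a dry run.

```shell
#!/bin/sh
# Sketch: confirm the lacp-time key in a bond port's other_config.
# Assumptions: check_lacp_time is a hypothetical helper; OVS_VSCTL can be
# overridden (e.g. with a stub) for a dry run.
# Usage: check_lacp_time bond0 fast
check_lacp_time() (
  port="$1"
  want="$2"
  vsctl="${OVS_VSCTL:-ovs-vsctl}"
  # "get <table> <record> <column>:<key>" reads a single map entry.
  have=$("$vsctl" get Port "$port" other_config:lacp-time 2>/dev/null) || have=""
  # String values may be printed in double quotes; strip them before comparing.
  have=$(printf '%s' "$have" | tr -d '"')
  if [ "$have" = "$want" ]; then
    echo "$port: lacp-time is $have"
  else
    echo "$port: lacp-time is ${have:-unset}, expected $want" >&2
    exit 1
  fi
)
```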

That's it for today! If you have any questions or comments, feel free to open a thread in the Network category of our forums!