I'd also love to understand this more fully, and I'm happy to help write the documentation on it.

Latest posts made by DigitalPlumber


RE: Attempting to understand backup scheduling


RE: MTU change

Hey @stevewest15,

I'm going to echo Olivier here. I stumbled across this thread (sorry for being a couple of months late) while looking for the right procedure to change my MTU to 9k, based on a recommendation from iXsystems (we have a TrueNAS Core X10 from iX). My two XCP-ng hosts have 10G NICs, and the X10 has a dual-port 10G NIC in an LACP bond.

I was seeing, frankly, disappointing numbers in the port statistics on the 10G switch: I never really got above ~2 Gbit/s, even on the LACP bond, while running a backup from the pool to the pool's backup SR.

After reading Olivier's response to you, I thought I'd run iperf without changing the MTU. Olivier and his team are a bunch of REALLY smart people, and I'm inclined to believe what they tell you... But I'm a strong believer in "trust but verify". Isn't that so, @olivierlambert?

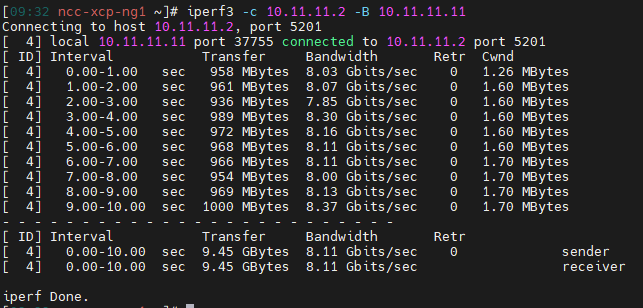

Anyway, this is what I got just with straight 1500 MTU using iperf3 between one of my XCP-NG hosts and the X10. As you can see, I didn't do any modifications such as paralleling or MSS settings. Just a raw test:
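For anyone who wants to repeat this kind of raw test and compare runs before and after an MTU change, here is a minimal Python sketch. It runs a plain single-stream iperf3 client with JSON output (`-J`) and converts the receiver-side total into Gbit/s and a percentage of a 10G link. It assumes `iperf3 -s` is already listening on the storage side; the function names and the 10 Gbit/s constant are illustrative, not part of any tool.

```python
import json
import subprocess

LINK_GBPS = 10.0  # assumed nominal line rate of a single 10G link


def run_iperf3(server: str, seconds: int = 10) -> dict:
    """Run a plain single-stream iperf3 client test and return the parsed JSON report."""
    # -c: client mode, -t: test duration in seconds, -J: emit the report as JSON
    result = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def summarize(report: dict, link_gbps: float = LINK_GBPS) -> tuple[float, float]:
    """Return (receiver throughput in Gbit/s, percent of nominal line rate)."""
    # For TCP tests, iperf3 reports totals under end.sum_received / end.sum_sent
    bps = report["end"]["sum_received"]["bits_per_second"]
    gbps = bps / 1e9
    return gbps, 100.0 * gbps / link_gbps
```

Usage would be `gbps, pct = summarize(run_iperf3(server))`, with `server` set to the storage host's address on the SAN network.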

The 10.11.11.0/24 network is our non-routed SAN/NAS network. While moving to a 9k MTU (don't forget to look at MSS as well) might improve on these ~80% utilization numbers, the real issue in my mind is the gap between the ~2 Gbit/s I was seeing during backups and the raw numbers above. That tells me something else is afoot here. A few thoughts:

- TrueNAS encryption. If the target is encrypting/decrypting data on write/read, you're going to see significantly lower numbers.

- My initial, disappointing observations were made during backups. Again, there's a lot going on under the hood that could be affecting performance before the data even gets to the wire (or glass, as in my case).

- Perhaps I need to look at what I can do to tune NFS itself.

- Changing the MTU (and probably MSS) is absolutely a valid thing to do, but every device in the path needs to be adjusted as well. And 5 years from now, when you go to replace the switch, or a NIC, or the storage array... Are you going to remember?

I've been in that situation... and I didn't. Hopefully you're smarter than me!
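On the "every device in the path" point: one quick way to confirm that jumbo frames really make it end to end is a don't-fragment ping sized to the full MTU. With a 9000-byte MTU that leaves 9000 - 20 (IPv4 header) - 8 (ICMP header) = 8972 bytes of payload. The sketch below assumes it runs on a Linux host with iputils `ping` (`-M do` forbids fragmentation, `-s` sets the payload size); the FreeBSD-based TrueNAS Core uses different ping flags, and the helper names are hypothetical.

```python
import subprocess

IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes


def df_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet at this MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def ping_df(host: str, mtu: int = 9000, count: int = 3) -> bool:
    """Send full-size don't-fragment pings using Linux iputils ping syntax.

    Returns True if ping exits with status 0, i.e. at least one full-size reply came back.
    """
    cmd = ["ping", "-M", "do", "-s", str(df_ping_payload(mtu)), "-c", str(count), host]
    return subprocess.run(cmd, capture_output=True).returncode == 0
```

If `ping_df(host)` returns False while a normal ping works, some NIC, bond, or switch port in the path is probably still at a smaller MTU. Switches generally drop oversized frames silently rather than reporting an error, so this kind of test is often the only visible symptom.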

iperf3 suggests there's roughly 20% overhead on my SAN link, which is worth looking into, but at this point it's not an outrageous number, particularly since I don't come anywhere close to that ceiling during normal operations. It's probably worth a look in Wireshark, though.
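To put that ~20% in perspective, it helps to work out how much of a 10G link TCP can use at all once Ethernet framing and protocol headers are counted. This sketch assumes 38 bytes of per-frame wire overhead (preamble/SFD, MAC header, FCS, inter-frame gap), 40 bytes of IPv4 and TCP headers, and optionally the 12-byte TCP timestamp option; the function names are illustrative.

```python
# Assumed per-frame costs, in bytes
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # preamble+SFD, MAC header, FCS, inter-frame gap
IPV4_TCP_HEADERS = 20 + 20           # IPv4 and TCP headers without options


def tcp_efficiency(mtu: int, tcp_options: int = 0) -> float:
    """Fraction of raw line rate left for TCP payload when every frame is full-size."""
    payload = mtu - IPV4_TCP_HEADERS - tcp_options  # this is the MSS
    return payload / (mtu + ETH_WIRE_OVERHEAD)


def max_goodput_gbps(link_gbps: float, mtu: int, tcp_options: int = 0) -> float:
    """Theoretical TCP goodput ceiling for a link of the given raw speed."""
    return link_gbps * tcp_efficiency(mtu, tcp_options)


for mtu in (1500, 9000):
    # 12 bytes of options corresponds to the TCP timestamp option (10 bytes plus padding)
    for opts in (0, 12):
        eff = tcp_efficiency(mtu, opts)
        print(f"MTU {mtu}, {opts:2d} B TCP options: {eff:.1%} -> "
              f"{max_goodput_gbps(10.0, mtu, opts):.2f} Gbit/s")
```

Under those assumptions, a 1500-byte MTU tops out around 9.4 to 9.5 Gbit/s of goodput and a 9000-byte MTU around 9.9 Gbit/s. Framing would then account for only about 5 to 6 points of a 20% gap, so the rest likely comes from elsewhere in the stack, which is where a packet capture could help.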