XCP-ng 8.0.0 Beta now available!

-

In fact, it was true until we released 0.8 in XCP-ng, but yeah, now we should update the doc.

-

Works great for me, both with LVM SR and ZFS file SR!

Love it!

Just one minor issue: I can't get an old Windows XP VM to boot with the Citrix legacy PV drivers. It was working with XCP-ng 7.6, but no longer does with 8.0 beta. The VM does not have internet access; it is needed to control old hardware for which no driver for newer Windows OSes exists.

-

Testing 8.0 beta on a Dell R710, clean install. Everything I've tried so far works flawlessly. Installed XOA from the welcome page and a few Linux VMs. Also installed W2008R2 (using a Dell ROK licence I found on the server, with BIOS settings copied) and it also works fine. I used the Windows Update setting in XOA to get the PV drivers, and they installed fine without disabling driver signing. The only thing missing is a management agent/tools. What would be the preferred method to install that on 8.0?

Edit: I've extracted managementagentx64.msi from Citrix Hypervisor 8.0 install ISO and installed it on W2008R2 VM. Worked like a charm.

-

Planning on testing soon. Haven't had much time at home lately to mess with the lab.

Thanks for all the hard work!

-

Sharing the results of some tests I have been doing lately; maybe they can give ideas to other testers. These are cross-pool live migration tests. The goal was to evaluate whether creating a new pool and then live migrating the VMs from the old pool would avoid downtime in every case.

Summary of the results: when it comes to migrating VMs from older versions of XenServer, the situation is better in 8.0 than it was in 7.6, because Citrix fixed a few bugs (that we reported). Some failing cases remain and will not be fixed, because they would require patching unsupported versions of XenServer.

Tests done using nested VMs as the sender pool, and a baremetal XCP-ng 8.0 as receiving pool.

I have 4 test VMs to migrate. They are small VMs, with small disks and little RAM, and little load too. So if you have bigger VMs and can test, you know what to do!

- HVM Centos 7 64 bit from the "Centos 7" template (this is important). No guest tools.

- HVM Centos 7 64 bit from the "Other" template (this is important). No guest tools.

- PV Centos 6 64 bit from the Centos 6 template. No guest tools.

- HVM Windows 7 32 bit with PV drivers installed.

I have tested migration from XenServer 6.5, 7.0, 7.1 and 7.2 (all fully patched).

- From XenServer 6.5 to XCP-ng 8.0

  - HVM Centos 7 from Centos 7 template: fails! The VDIs are transferred, but when it comes to migrating the RAM and completing the migration, it fails, reboots the VM and creates a duplicate VM in the target pool (same UUID)! This is a bug that Citrix won't fix in older versions: https://bugs.xenserver.org/browse/XSO-932

  - Other VMs: OK

- From XenServer 7.0 to XCP-ng 8.0

  - HVM Centos 7 from Centos 7 template: same failure

  - Other VMs: OK

- From XenServer 7.1 or 7.2 to XCP-ng 8.0: all good.
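For anyone who wants to track their own runs against these, here is a minimal sketch that encodes the results above as a lookup table. The VM keys and the function name are hypothetical labels made up for this example, not part of any XCP-ng tooling. The outcomes are only the ones reported in this post, for small, lightly loaded VMs.

```python
# Outcomes copied from the test report above. The identifiers (VM keys,
# function name) are hypothetical labels chosen for this sketch.
TESTED_SOURCES = ("6.5", "7.0", "7.1", "7.2")

TEST_VMS = (
    "hvm-centos7-centos7-template",
    "hvm-centos7-other-template",
    "pv-centos6",
    "hvm-win7-32-pv-drivers",
)

# VMs whose live migration to XCP-ng 8.0 failed, per source XenServer version.
FAILING = {
    "6.5": {"hvm-centos7-centos7-template"},
    "7.0": {"hvm-centos7-centos7-template"},
    "7.1": set(),
    "7.2": set(),
}


def migration_succeeded(source_version: str, vm: str) -> bool:
    """Return the reported outcome for one (source version, VM) pair."""
    if source_version not in FAILING:
        raise ValueError(f"untested source version: {source_version}")
    if vm not in TEST_VMS:
        raise ValueError(f"unknown test VM: {vm}")
    return vm not in FAILING[source_version]


if __name__ == "__main__":
    for version in TESTED_SOURCES:
        for vm in TEST_VMS:
            status = "OK" if migration_succeeded(version, vm) else "FAILS"
            print(f"XenServer {version} -> XCP-ng 8.0, {vm}: {status}")
```

Extending `FAILING` with your own findings (bigger VMs, other templates) would make it easy to compare results across testers.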

I have also tested storage migration during a pool upgrade (for VMs that are on a local storage repository and need to be live migrated to another host of the pool), to check whether this fix we are trying to contribute upstream works as intended: https://github.com/xapi-project/xen-api/pull/3800 (it wouldn't work in XCP-ng 7.6 because of additional bugs that Citrix has since fixed in CH 8.0). Good news: it worked (in my tests), so I'm considering leaving it enabled (it isn't in CH 8.0). Feel free to try it and report! It's still recommended to use shared storage for easier live migrations, but being able to do the migration when the VM is on local storage can still be handy. Reference: https://github.com/xcp-ng/xcp/issues/90

-

@cg You should be careful when recommending dedup on ZFS. I think (but my knowledge might be outdated) there are still at least some caveats people should be aware of.

A quick search reveals you typically need 1-5 GB of RAM per TB of storage.

-

@Ultra2D said in XCP-ng 8.0.0 Beta now available!:

@cg You should be careful when recommending dedup on ZFS. I think (but my knowledge might be outdated) there are still at least some caveats people should be aware of.

A quick search reveals you typically need 1-5 GB of RAM per TB of storage.

As a rule of thumb I have 1 GB per TB of storage in mind, and I'm not aware of any problems with dedup or compression on ZFS(oL).

I wouldn't touch that (and RAID besides 0/1/10) on btrfs though. That's probably why many people are interested in ZFSoL, although the licenses are somewhat tricky.

I don't have much experience with dedup on ZFS though. I just did some tests with about 100 of storage on another system and it worked perfectly. It was just not the result I hoped to get, but that's data-dependent.

But hey: prove me wrong. Don't forget sources/links, please, and keep the versions in mind!

-

Unless necessary, deduplication should NOT be enabled on a system. See Deduplication

And continue from there. For instance:

Deduplicating data is a very resource-intensive operation. It is generally recommended that you have at least 1.25 GiB of RAM per 1 TiB of storage when you enable deduplication. Calculating the exact requirement depends heavily on the type of data stored in the pool.

-

@Ultra2D said in XCP-ng 8.0.0 Beta now available!:

Unless necessary, deduplication should NOT be enabled on a system. See Deduplication

I don't really see big arguments here. As I wrote: CONSIDER. I'm sure you know what that means.

If I know I'm running a bunch of similar VMs, I'm perfectly fine with throwing in a bit of RAM to multiply the effective space of my SSD array.

It could diminish the need for an expensive vendor dedup array.

And continue from there. For instance:

Deduplicating data is a very resource-intensive operation. It is generally recommended that you have at least 1.25 GiB of RAM per 1 TiB of storage when you enable deduplication. Calculating the exact requirement depends heavily on the type of data stored in the pool.

So what's wrong with that? I said that as a rule of thumb I have 1 GB per TB in mind. A rule of thumb is not a law, and it roughly fits. Also: ZFS should always have some RAM for caching, so you need a bit anyway, and RAM is pretty cheap these days.

Since XCP-ng is a server OS: don't forget to use ECC RAM on production systems with important data. RAM corruption can be pretty bad for ZFS.
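To put numbers on the figures thrown around in this thread (1 GB per TB, 1.25 GiB per TiB, and the 1-5 GB per TB range), here is a rough back-of-the-envelope sketch. The function name and the 8 TiB pool size are made up for illustration, and GB/TB are treated loosely as GiB/TiB. As the quoted documentation says, the real requirement depends heavily on the data, so take the output as an order of magnitude at best.

```python
def dedup_ram_gib(pool_tib: float, gib_per_tib: float = 1.25) -> float:
    """Estimate extra RAM (GiB) for dedup from a per-TiB rule of thumb.

    The default ratio is the 1.25 GiB per TiB figure quoted in this thread.
    This is a simple linear estimate, not a measurement of a real pool.
    """
    if pool_tib < 0:
        raise ValueError("pool size must not be negative")
    if gib_per_tib <= 0:
        raise ValueError("ratio must be positive")
    return pool_tib * gib_per_tib


if __name__ == "__main__":
    pool_tib = 8.0  # hypothetical pool size, pick your own
    for ratio in (1.0, 1.25, 5.0):  # the ratios mentioned in this thread
        estimate = dedup_ram_gib(pool_tib, ratio)
        print(f"{pool_tib} TiB at {ratio} GiB/TiB: about {estimate} GiB of RAM")
```

The spread between the low and high ratio is the whole argument in a nutshell: the same pool can look cheap or expensive to dedup depending on which rule of thumb you trust.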

-

@cg Yes, I can read, thanks. It says "heavily consider".

I honestly don't care if you run ZFS with dedup, and if it keeps on working for you that's great, but it did sound like a recommendation for running dedup without adding a big fat warning. I felt it was necessary to add that warning.

Some more info about the rule of thumb:

https://github.com/zfsonlinux/zfs/issues/2829

https://news.ycombinator.com/item?id=8437921

-

If it makes you sleep tighter: Heavily consider using ZFS. As consider means: See if it makes sense for you and estimate if you have data that could benefit from it.

It doesn't make sense to enable deduplication for AVC video archives etc. pp. It would just add complexity and overhead.

Consider always means: Read about it, see what it takes and if it makes sense - but it's a good thing and you could benefit from that. Not: Enable it, no matter what it takes.

I'm done nitpicking about ZFS; the show is yours...

-

I now tried to install XCP-ng 8.0.0 Beta on my new Ryzen test machine, with no success.

Installing 7.6 from a USB stick works perfectly.

Installing 8.0.0 Beta works neither from a USB stick nor from a USB DVD drive.

I also tested CH 8.0 from a USB stick: same result (so it's not an XCP-ng-specific problem).

Since Citrix is unlikely to care about booting on a Ryzen PC (their support for unsupported, unpaid servers is well known), I'm reporting it here.

What happens is:

I can't see much on the screen when booting to "install", in either UEFI or "normal boot" mode.

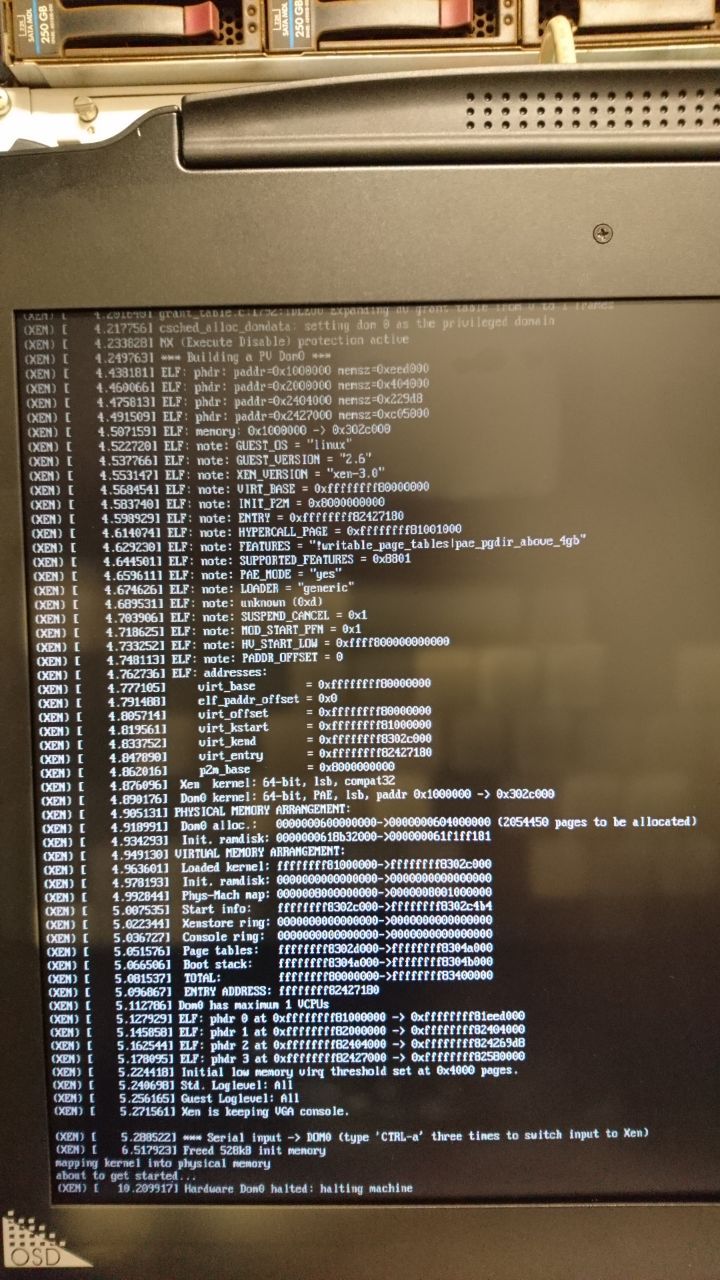

When I boot to safe (both methods), it ends up like that:

mapping kernel into physical memory

about to get started...

Hardware Dom0 halted: halting machine

I don't get it. Everything seems good: no errors, nothing. Only the reset button brings it back to life.

CPU: Ryzen 2200G

Mainboard: Asus Prime A320M A

Firmware Version: Version 4801 2019/05/10

PRIME A320M-A BIOS 4801

Update AM4 ComboPI 0.0.7.2A for next-gen processors and to improve CPU compatibility

Something must have changed between 7.6 and 8.0 that makes it "halt".

-

Dom0 can't boot here. Likely related to a more recent kernel than in 7.6.

Note that I don't have any issue on a Ryzen 7 (2xxx) or on EPYC.

Anyone else with a 2200G?

-

Additionally: I installed XS 7.6 before and 'upgraded' it via USB-stick to XCP-ng 7.6.

Worked without any problems and VMs were fine.

Also regarding boot: I had a problem like that with OPSI, as their boot image had some changes to the kernel that made it stop.

What helped was to limit the memory to 2G (2048M). I don't know if/how to add that to the USB-stick, to test if that helps here, too.

(Tried noapic/noacpi on OPSI before... maxmem helped!)

-

In UEFI, you can edit the Grub menu before starting the install. Try changing the RAM value there.

-

I've taken a look at the USB stick and found the grub config files. What I found was a maxmem of 8192 MB already configured.

I changed it to 2048M (I don't see any reason why the setup should need more than a few hundred MB) aaaand it worked.

As it affected 2 different systems (opsi bootimage for PXE install and CH/XCP) I'm very sure it's a kernel bug!

Though I can't say which kernels are affected, except that old kernels work.

When I have time, I may test it on our Epyc server, but I guess that it's more about the embedded Vega GPU and the shared memory.

https://forum.opsi.org/viewtopic.php?f=8&t=10611 et al.

I'm not the first one having trouble with a Ryzen/Vega APU and the Linux kernel.

tl;dr:

As a workaround, I recommend generally setting the memory limit for the install stick to 2048M, as there shouldn't be any benefit to more anyway and it improves compatibility.

I have yet to upgrade the system and see if XCP-ng 8 Beta boots properly (I still had it on CH 8.0 for the test).
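If you'd rather patch the stick's grub config with a script than by hand, here is a minimal sketch of the edit described above. It ASSUMES the limit shows up in the config as a token of the form `dom0_mem=max:8192M`. The post only says "a maxmem of 8192 MB", so check the exact spelling in your own file first. The sample boot line and the function name are hypothetical.

```python
import re

# ASSUMPTION: the installer's memory limit is written as "dom0_mem=max:<N>M".
# Adjust the pattern if the grub config on your stick spells it differently.
MEM_TOKEN = re.compile(r"(dom0_mem=max:)\d+M")


def lower_mem_limit(grub_cfg_text: str, new_limit_mib: int = 2048) -> str:
    """Return the config text with every matching memory limit replaced."""
    if new_limit_mib <= 0:
        raise ValueError("memory limit must be positive")
    # A function is used as the replacement so the digits of the new limit
    # cannot be misread as part of a group reference.
    return MEM_TOKEN.sub(lambda m: f"{m.group(1)}{new_limit_mib}M", grub_cfg_text)


if __name__ == "__main__":
    # Hypothetical boot line, only here to show the substitution.
    sample = "multiboot2 /boot/xen.gz dom0_mem=max:8192M console=vga"
    print(lower_mem_limit(sample))
```

To apply it for real, read the config file from the stick, pass its contents through `lower_mem_limit`, and write the result back, after keeping a copy of the original. Lines without the token are left untouched.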

-

Indeed, I suppose it's related to Ryzen+Vega APU, because I can't reproduce on EPYC nor Ryzen without APUs.

Regarding making the change upstream, I'm not entirely confident about making the modification, because it's hard to evaluate the impact (our RC is pretty close now). But it's up to @stormi to decide.

-

At this stage, I'd rather document the issue to help people work around it than change the default values inherited from Citrix (who tested the installer on a lot of hardware). I also suggest opening an issue at https://github.com/xcp-ng/xcp/issues so that other users who run into the same issue can share their experience and let us estimate whether it's a widespread issue or something very specific to some hardware.

-

Looks like the best way to me

-

@cg said in XCP-ng 8.0.0 Beta now available!:

I created an issue. IMHO it would be good if the problem itself made it upstream, to either nudge someone to fix it or get feedback that the problem is known/fixed in version X.Y.Z.

For reference:

https://github.com/xcp-ng/xcp/issues/206