Delta Backups & Continuous Replication of Empty Drives

-

Hi,

I'm running into an issue where the backup jobs are taking longer than 24 hrs and failing. I understand this is a limitation with xenserver (per this post).

However after further investigation I'm seeing that the initial Delta & Replication are copying the entire drive even though they are on thin provisioning.

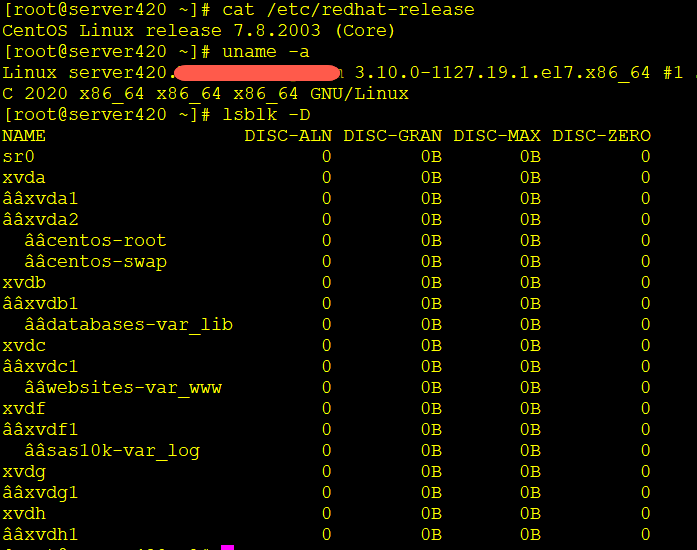

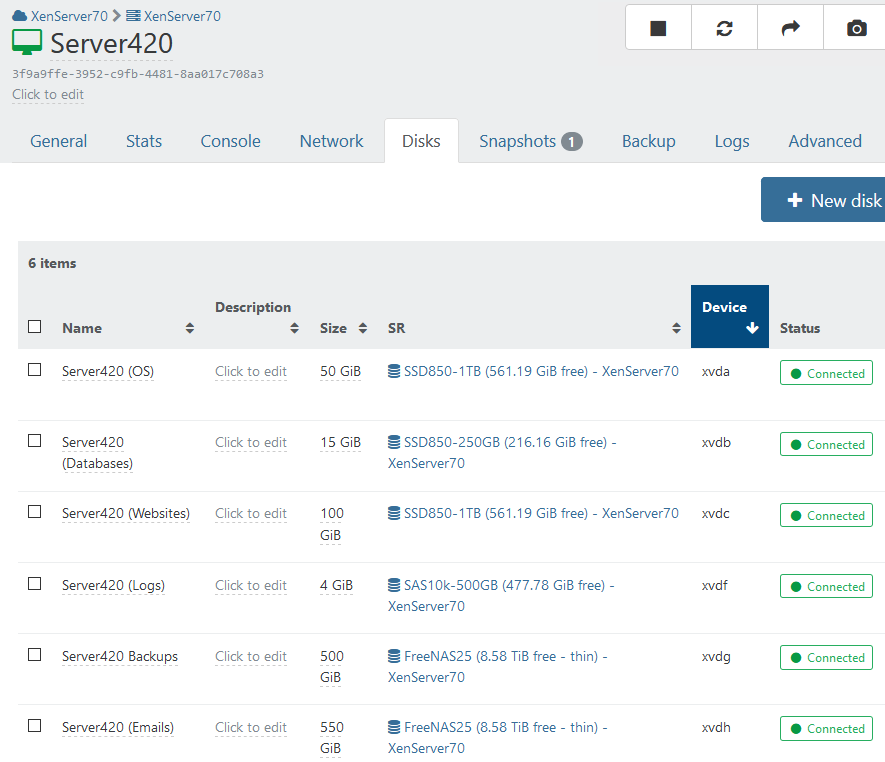

For example, on a VM, I have a 500GB drive that is barely used (1.3 GB):

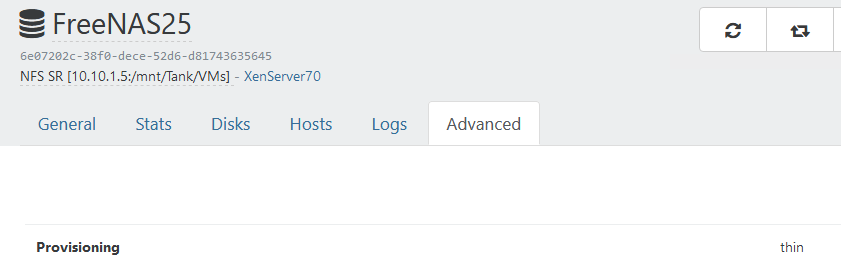

    /dev/xvdg1 500G 1.3G 499G 1% /Backups

The above drive is provisioned from a FreeNAS which is provisioned as "thin":

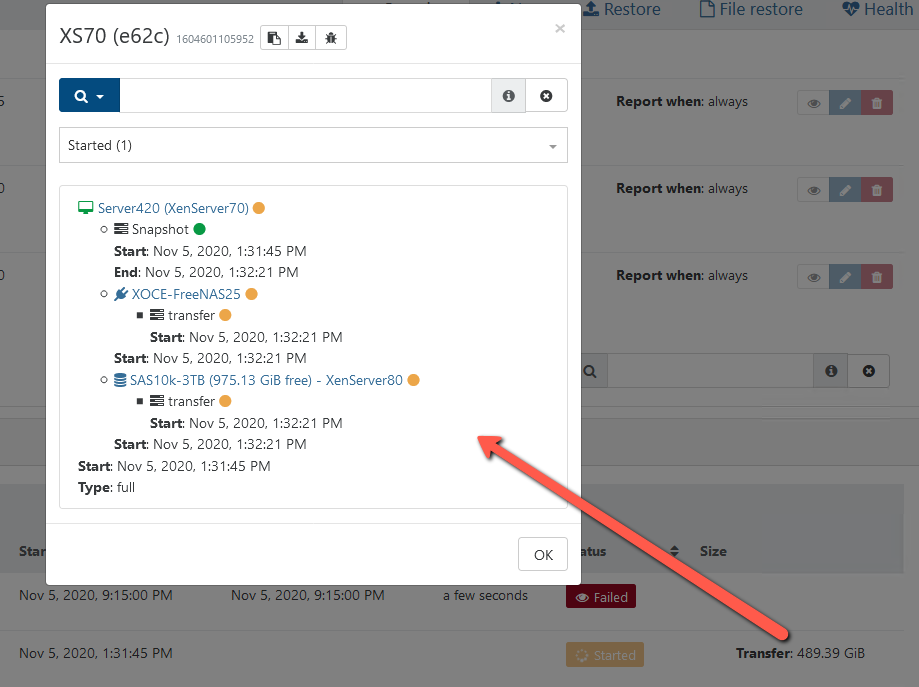

But when XO runs the backup job (Delta & Continuous), it moves 500 GB of data across the network and stores the entire 500 GB for both the Delta Backup and the Continuous Replication as part of the initial full backup.

I thought that with thin provisioning, the backups would contain only the data actually in use, which in my example above would be 1.3 GB instead of 500 GB of mostly empty space.

Am I doing something wrong?

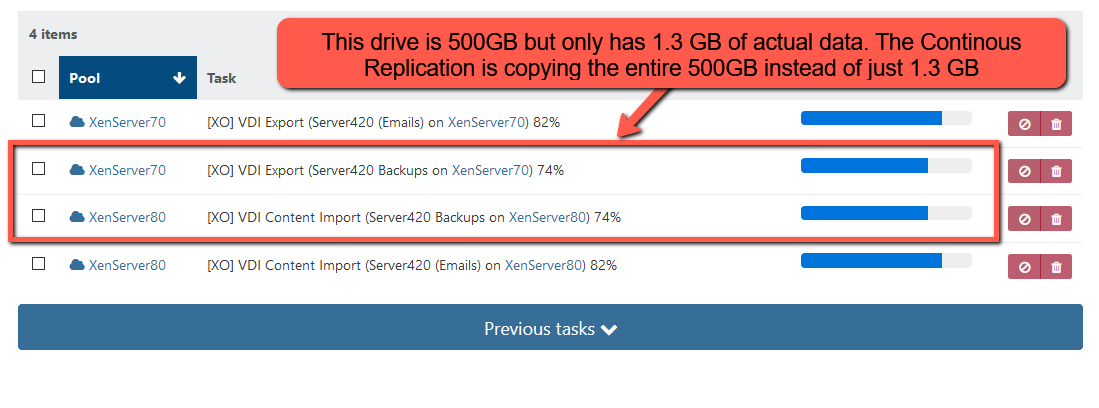

The backup job has been running for almost 22 hrs and appears to be copying the entire 500GB drive even though it's mostly blank:

On the target remote for the Delta Backup, I see it still copying data:

    root@freenas25[~]# ls -alh /mnt/Tank/VMs/Backups/xo-vm-backups/3f9a9ffe-3952-c9fb-4481-8aa017c708a3/vdis/ef24e62c-8829-4956-9aa6-10ebb3dd0e8f/55a93b7d-1e2e-4903-961b-c3df8b73fee3
    total 325168553
    drwxr-xr-x 2 4294967294 wheel 3B Nov 5 13:32 .
    drwxr-xr-x 8 4294967294 wheel 8B Nov 5 13:32 ..
    -rw-r--r-- 1 4294967294 wheel 310G Nov 6 10:41 .20201105T183221Z.vhd
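For a rough sense of whether this job can finish inside the 24-hour window, the timestamps in the listing above can be turned into an estimate. This is only a sketch: it assumes the 310G was written at a steady rate since the directory was created (Nov 5 13:32 to Nov 6 10:41, about 21 h 09 min) and that the full 500 GB will be transferred. The `estimate` function name is made up for illustration.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope estimate; assumes a constant transfer rate.

elapsed_s=$(( 21 * 3600 + 9 * 60 ))   # Nov 5 13:32 -> Nov 6 10:41
copied_gib=310                        # size of the in-progress .vhd so far
total_gib=500                         # provisioned size of the disk

# estimate <elapsed seconds> <GiB copied so far> <GiB total>
estimate() {
  awk -v s="$1" -v c="$2" -v t="$3" 'BEGIN {
    printf "rate_mib_s=%.2f\n", c * 1024 / s          # average MiB per second
    printf "total_hours=%.1f\n", (t / c) * s / 3600   # projected full run time
  }'
}

estimate "$elapsed_s" "$copied_gib" "$total_gib"
```

Under those assumptions the job averages a little over 4 MiB/s and would need roughly 34 hours in total, which is consistent with it failing at the 24-hour mark.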

-

We can't help without knowing:

- XO version you are using

- XCP-ng or XenServer version you are using

Also, the drive can look blank from the filesystem's perspective but not at the block level.

-

@olivierlambert Thanks for your help:

- xo-server 5.70.0

- xo-web 5.74.0

- XCP-ng 8.0

-

Check the size of the VHD on the SR itself. I think it might be because the VHD hasn't freed blocks on the filesystem.
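One way to do that comparison is to look at the file's apparent size next to the space it actually occupies. The sketch below assumes a Linux shell with GNU coreutils (`du --apparent-size`, `truncate`); on FreeNAS/FreeBSD, `du` uses `-A` for the apparent size instead. The `file_sizes_kib` helper is a made-up name, and the demo runs on a throwaway sparse file rather than a real VHD.

```shell
#!/usr/bin/env bash
# Print apparent size vs. space actually allocated for a file, in KiB.
# Assumption: GNU du (for --apparent-size).
file_sizes_kib() {
  local f="$1"
  local apparent allocated
  apparent=$(du -k --apparent-size "$f" | cut -f1)
  allocated=$(du -k "$f" | cut -f1)
  echo "apparent_kib=$apparent allocated_kib=$allocated"
}

# Demo: a sparse ("thin") file allocates space only for blocks that were written.
demo=$(mktemp)
truncate -s 100M "$demo"                 # 100 MiB apparent size, nothing written yet
file_sizes_kib "$demo"
dd if=/dev/urandom of="$demo" bs=1M count=10 conv=notrunc status=none
file_sizes_kib "$demo"                   # allocated space grows once blocks are written
rm -f "$demo"
```

Pointing `file_sizes_kib` at the `.vhd` file on the SR should show how many blocks the VHD has ever had written, regardless of what the guest filesystem currently reports as used.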

-

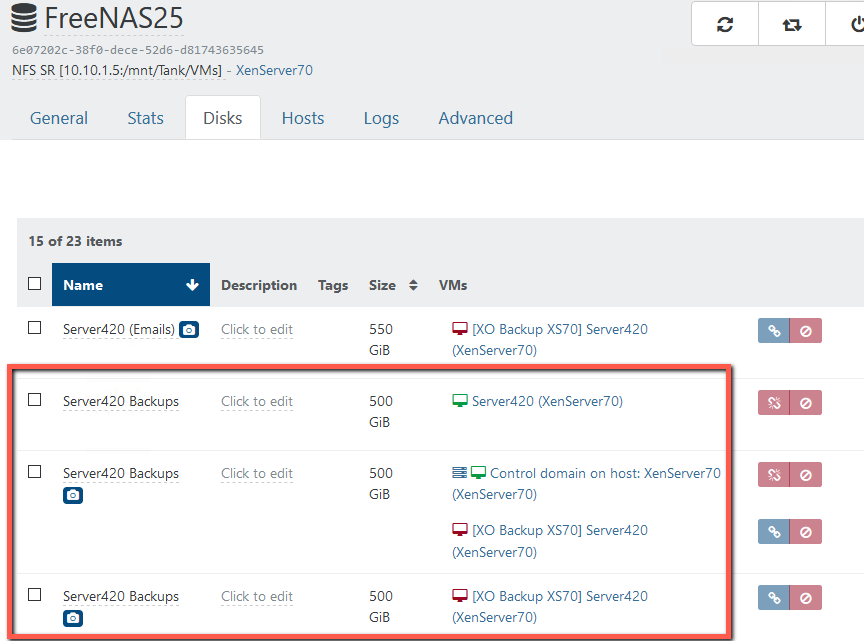

EDIT: @olivierlambert Thank you, I checked the SR and it's showing a much larger size (421 GB) while the VM OS shows only 1.3 GB:

    root@freenas25[/mnt/Tank/VMs]# du -hs 6e07202c-38f0-dece-52d6-d81743635645/55a93b7d-1e2e-4903-961b-c3df8b73fee3.vhd
    421G 6e07202c-38f0-dece-52d6-d81743635645/55a93b7d-1e2e-4903-961b-c3df8b73fee3.vhd

VM OS:

    [root@server420 ~]# du -hs /Backups/
    1.3G /Backups/

-

Ping @julien-f

-

Hi @olivierlambert , sorry the VHD path I provided above was wrong.

It appears the SR is showing it's using 421 GB while the VM OS shows 1.4 GB.

How long does it take for "VHD to free blocks on the filesystem"? This is an NFS SR.

Thx,

SW

-

So it's logical then. XOA is just pulling all the VHD blocks exposed by the host.

What you can do: try to reclaim on the VM FS (eg trim? I don't remember, maybe @nraynaud knows more).

Alternatively, you can use xe vdi-copy to create a new, cleaner VHD with only what's used.

-

@stevewest15 Hello, can you tell us the list of partitions and what FS are inside the VHD please?

thanks,

Nicolas.

-

Here are a few of the operations I have in mind:

https://unix.stackexchange.com/questions/44234/clear-unused-space-with-zeros-ext3-ext4

-

@nraynaud Hi, Thank you for your assistance. Here is /etc/fstab from the VM which is CentOS 7.x:

    UUID=ef4eef1c-dea6-452e-9fb4-20948465f484 /boot xfs defaults 0 0
    /dev/mapper/centos-root / xfs defaults 0 0
    /dev/xvdh1 /emails xfs defaults 0 0
    /dev/mapper/databases-var_lib /var/lib xfs defaults 0 0
    /dev/mapper/sas10k-var_log /var/log xfs defaults 0 0
    /dev/mapper/websites-var_www /var/www xfs defaults 0 0
    /dev/mapper/centos-swap swap swap defaults 0 0
    UUID=20706a6e-bda3-4277-abf1-c74c4619a1d8 /Backups/ xfs defaults 0 0

And this is from XO:

-

@stevewest15 Thanks. I'm not really familiar with XFS, but there might be a way to reclaim (trim) or zero the unused space on the disk.
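As a starting point for the trim route, the XFS mount points inside the VM can be listed and a trim command prepared for each. This is a sketch, not a tested procedure for this setup: `xfs_mounts` is a made-up helper, the loop only prints the `fstrim` commands instead of running them, and `fstrim` itself needs root and a virtual disk that accepts discard requests.

```shell
#!/usr/bin/env bash
# List mount points whose filesystem type is xfs, reading a mounts table
# in /proc/mounts format: device mountpoint fstype options ...
xfs_mounts() {
  awk '$3 == "xfs" { print $2 }' "${1:-/proc/mounts}"
}

# Print (do not run) one fstrim command per XFS mount point.
if [ -r /proc/mounts ]; then
  xfs_mounts | while read -r mp; do
    echo "fstrim -v $mp"
  done
fi
```

Printing first makes it easy to review which mount points would be touched before running anything as root.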

-

It should: xfs.org/index.php/FITRIM/discard

A fstrim /mountpoint should work.

-

@olivierlambert Thanks, I tried running fstrim but it returned "the discard operation is not supported" on this NFS SR mount. I also tried running on SSD mounts which do support TRIM and they all returned the same message about "discard...not supported."

The VM OS (CentOS 7.8) does support TRIM in the kernel, so I'm not sure whether XCP-ng or XenServer is the reason the VM can't issue fstrim on the NFS SR mount or the SSD SR mounts.
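To narrow down where the "not supported" message comes from, it may help to check what the guest kernel reports for the virtual disk itself. On Linux, a block device that does not advertise discard shows `0` in its sysfs attribute `queue/discard_max_bytes`. The sketch below reads that attribute; `discard_supported` is a made-up helper, and its optional second argument exists only so it can be pointed at a fake sysfs tree. It shows what the guest sees, not why the storage stack behaves that way.

```shell
#!/usr/bin/env bash
# Report whether a block device advertises discard support, based on
# the sysfs attribute queue/discard_max_bytes (0 means unsupported).
# Usage: discard_supported <device name> [sysfs block root]
discard_supported() {
  local dev="$1" root="${2:-/sys/block}"
  local f="$root/$dev/queue/discard_max_bytes"
  [ -r "$f" ] || { echo "unknown"; return 2; }
  if [ "$(cat "$f")" -gt 0 ]; then
    echo "yes"
  else
    echo "no"
    return 1
  fi
}

# Example: the disk behind /Backups is /dev/xvdg1, so check xvdg.
discard_supported xvdg || true
```

`lsblk --discard` gives a similar view for all devices at once. If the virtual disk reports no discard support, `fstrim` will fail in the guest no matter which filesystem is mounted on it.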