XCP 8.2 VCPUs-max=80 but VM shows 64 CPU only, NUMA nodes and Threads per core not matching

-

@sapcode said in XCP 8.2 VCPUs-max=80 but VM shows 64 CPU only, NUMA nodes and Threads per core not matching:

@s-pam The defaults on my Ubuntu server look like this:

CONFIG_NUMA=y

CONFIG_AMD_NUMA=y

CONFIG_X86_64_ACPI_NUMA=y

CONFIG_NODES_SPAN_OTHER_NODES=y

CONFIG_NUMA_EMU=y

CONFIG_NODES_SHIFT=10

CONFIG_NR_CPUS=8192

I found this page, https://docs.citrix.com/en-us/citrix-hypervisor/system-requirements/configuration-limits.html, which seems to indicate an upper limit on vCPUs per guest.
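Of the kernel options quoted above, the one that bounds the CPU count is CONFIG_NR_CPUS, the maximum number of CPUs the kernel is built to handle; 8192 is far above 80. A minimal sketch to check this, assuming you have the guest kernel's config as text (on Ubuntu usually /boot/config-<kernel version>). The helpers parse_kconfig, nr_cpus_limit and supports_vcpus are hypothetical, written only for illustration.

```python
from __future__ import annotations


def parse_kconfig(text: str) -> dict[str, str]:
    """Parse 'CONFIG_FOO=value' lines of a kernel config into a dict (illustrative helper)."""
    options: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments such as "# CONFIG_FOO is not set".
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            # String options are quoted in the config file; drop the quotes.
            options[key] = value.strip('"')
    return options


def nr_cpus_limit(options: dict[str, str]) -> int | None:
    """Return CONFIG_NR_CPUS as an int, or None if the option is absent."""
    value = options.get("CONFIG_NR_CPUS")
    return int(value) if value is not None else None


def supports_vcpus(options: dict[str, str], requested: int) -> bool:
    """True if the kernel was built for at least `requested` CPUs."""
    limit = nr_cpus_limit(options)
    return limit is not None and requested <= limit


config = "CONFIG_NUMA=y\nCONFIG_NODES_SHIFT=10\nCONFIG_NR_CPUS=8192\n"
opts = parse_kconfig(config)
print(nr_cpus_limit(opts), supports_vcpus(opts, 80))  # 8192 True
```

With the values posted above, the check passes comfortably for 80 vCPUs.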

-

This is an "officially supported scope" limit, not a technical one. However, things could get weird with more CPUs. For Linux guests, it should be OK in theory, but I've never tried with more than 64.

-

@olivierlambert said in XCP 8.2 VCPUs-max=80 but VM shows 64 CPU only, NUMA nodes and Threads per core not matching:

This is an "officially supported scope" limit, not a technical one. However, things could get weird with more CPUs. For Linux guests, it should be OK in theory, but I've never tried with more than 64.

Hi Olivier,

Does the "officially supported scope" limit mean that XCP also follows the Citrix 32 vCPU limit? And does "not a technical one" mean that some hack is possible to overcome the 32 vCPU limit? I would be glad to do beta testing here and go up to 40 vCPUs if you tell me where to apply the hack.

The machine runs multiple SAP HANA 2.0 SP4 instances and serves purely as a development and demo box, so we would have no problem testing any hacky workaround for that 32 vCPU limit.

Let me know your thoughts; we can also schedule a remote Teams session if needed.

Best regards

Operator from hell

-

@sapcode I've used 48 cores on my EPYC servers, so I know this works. Didn't have a chance to test more than 64 cores, though.

-

The 32 vCPU limit is only implemented in XenCenter/XCP-ng Center, not in Xen Orchestra or XAPI.

So we do not enforce anything, and there's no "hack".

I discussed that with Xen devs back in the day, and they told me that Windows guests could show funky behaviour with more than 32 vCPUs; for Linux the threshold is higher. It's due to complex interactions between VMs and Xen. I have no idea about FreeBSD, for example.

As @S-Pam said, I've already seen people with 48 cores or so, but more than 64 is a bit unusual, so I know almost nothing about how it might behave there.

-

@s-pam 48 cores on a dual-socket or a single-socket host?

We have 40 cores x 2 sockets, so it looks like the Citrix limit is 32 vCPUs per socket...

-

@sapcode said in XCP 8.2 VCPUs-max=80 but VM shows 64 CPU only, NUMA nodes and Threads per core not matching:

@s-pam 48 cores on a dual-socket or a single-socket host?

We have 40 cores x 2 sockets, so it looks like the Citrix limit is 32 vCPUs per socket...

Single-socket EPYC servers.
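The "32 vCPUs per socket" theory above can be written down as simple arithmetic: VCPUs-max=80 with 40 cores per socket gives 2 virtual sockets, and a cap of 32 per socket would leave 2 x 32 = 64 usable vCPUs, which is what the guest reports. The sketch below only models that hypothesis; the per-socket cap is not a documented XCP-ng or Citrix rule, and sockets_for / visible_under_cap are hypothetical names.

```python
def sockets_for(vcpus_max: int, cores_per_socket: int) -> int:
    """Number of virtual sockets for a given VCPUs-max and cores-per-socket."""
    # Assumption: the topology must be a whole number of sockets.
    if cores_per_socket <= 0 or vcpus_max % cores_per_socket:
        raise ValueError("VCPUs-max is not a whole number of sockets")
    return vcpus_max // cores_per_socket


def visible_under_cap(vcpus_max: int, cores_per_socket: int, cap: int = 32) -> int:
    """vCPUs a guest would see IF each socket were capped at `cap` (hypothesis only)."""
    sockets = sockets_for(vcpus_max, cores_per_socket)
    return sockets * min(cores_per_socket, cap)


print(sockets_for(80, 40), visible_under_cap(80, 40))  # 2 64
```

Under the same hypothesis, 80 vCPUs laid out as 4 sockets of 20 would predict all 80 visible, so comparing such a layout against what lscpu reports is one way to test the theory.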

-

@olivierlambert said in XCP 8.2 VCPUs-max=80 but VM shows 64 CPU only, NUMA nodes and Threads per core not matching:

I hav

Hi Olivier,

We only have SUSE and Red Hat Linux guest VMs, so I have a good feeling that 2 x 40 vCPUs should be no issue.

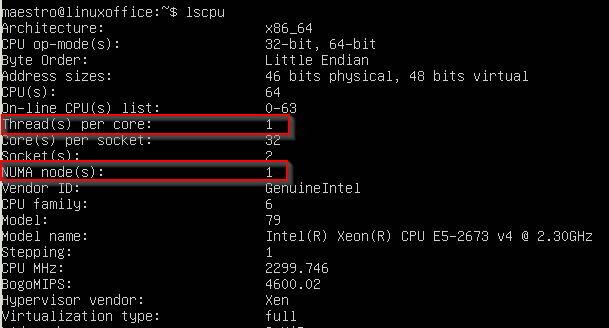

We tried Xen Orchestra: assigning 80 CPUs (2 sockets x 40) works just as in XCP-ng Center, but after booting the Ubuntu 20 server, lscpu again shows 64 vCPUs and only 1 NUMA node.

Let me know if you want to check the settings in our landscape; we can do a remote screen-sharing session via Microsoft Teams or Zoom.

Best regards
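To compare what the guest sees with what was assigned, the relevant lscpu fields are "CPU(s)" and "NUMA node(s)". A minimal sketch, assuming plain lscpu output in its usual "Key: value" form (for example captured with subprocess.run(["lscpu"], capture_output=True, text=True).stdout); parse_lscpu and check_guest are hypothetical helpers, the expected values 80 and 2 are the ones the poster is aiming for, and the sample text is illustrative rather than the actual output from this VM.

```python
from __future__ import annotations


def parse_lscpu(text: str) -> dict[str, str]:
    """Turn lscpu's 'Key:   value' lines into a dict (illustrative helper)."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        # Split on the first colon only; values such as model names may contain more.
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def check_guest(fields: dict[str, str], expected_cpus: int, expected_nodes: int) -> list[str]:
    """Return human-readable mismatches between lscpu and the assigned topology."""
    problems = []
    cpus = int(fields.get("CPU(s)", "0"))
    nodes = int(fields.get("NUMA node(s)", "0"))
    if cpus != expected_cpus:
        problems.append(f"CPU(s): got {cpus}, expected {expected_cpus}")
    if nodes != expected_nodes:
        problems.append(f"NUMA node(s): got {nodes}, expected {expected_nodes}")
    return problems


sample = "CPU(s):              64\nOn-line CPU(s) list: 0-63\nNUMA node(s):        1\n"
for problem in check_guest(parse_lscpu(sample), expected_cpus=80, expected_nodes=2):
    print(problem)
# CPU(s): got 64, expected 80
# NUMA node(s): got 1, expected 2
```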

-

@olivierlambert The strange thing is the following: all vCPUs and NUMA nodes are shown correctly in xl info:

    xl info -n
    host                   : xcpng82
    release                : 4.19.0+1
    version                : #1 SMP Tue Mar 30 22:34:15 CEST 2021
    machine                : x86_64
    nr_cpus                : 80
    max_cpu_id             : 79
    nr_nodes               : 2
    cores_per_socket       : 20
    threads_per_core       : 2
    cpu_mhz                : 2300.006
    hw_caps                : bfebfbff:77fef3ff:2c100800:00000121:00000001:001cbfbb:00000000:00000100
    virt_caps              : pv hvm hvm_directio pv_directio hap shadow iommu_hap_pt_share
    total_memory           : 786321
    free_memory            : 14403
    sharing_freed_memory   : 0
    sharing_used_memory    : 0
    outstanding_claims     : 0
    free_cpus              : 0
    cpu_topology           :
    cpu:    core   socket     node
      0:       0        0        0
      1:       0        0        0
      2:       1        0        0
      3:       1        0        0
      4:       2        0        0
      5:       2        0        0
      6:       3        0        0
      7:       3        0        0
      8:       4        0        0
      9:       4        0        0
     10:       8        0        0
     11:       8        0        0
     12:       9        0        0
     13:       9        0        0
     14:      10        0        0
     15:      10        0        0
     16:      11        0        0
     17:      11        0        0
     18:      12        0        0
     19:      12        0        0
     20:      16        0        0
     21:      16        0        0
     22:      17        0        0
     23:      17        0        0
     24:      18        0        0
     25:      18        0        0
     26:      19        0        0
     27:      19        0        0
     28:      20        0        0
     29:      20        0        0
     30:      24        0        0
     31:      24        0        0
     32:      25        0        0
     33:      25        0        0
     34:      26        0        0
     35:      26        0        0
     36:      27        0        0
     37:      27        0        0
     38:      28        0        0
     39:      28        0        0
     40:       0        1        1
     41:       0        1        1
     42:       1        1        1
     43:       1        1        1
     44:       2        1        1
     45:       2        1        1
     46:       3        1        1
     47:       3        1        1
     48:       4        1        1
     49:       4        1        1
     50:       8        1        1
     51:       8        1        1
     52:       9        1        1
     53:       9        1        1
     54:      10        1        1
     55:      10        1        1
     56:      11        1        1
     57:      11        1        1
     58:      12        1        1
     59:      12        1        1
     60:      16        1        1
     61:      16        1        1
     62:      17        1        1
     63:      17        1        1
     64:      18        1        1
     65:      18        1        1
     66:      19        1        1
     67:      19        1        1
     68:      20        1        1
     69:      20        1        1
     70:      24        1        1
     71:      24        1        1
     72:      25        1        1
     73:      25        1        1
     74:      26        1        1
     75:      26        1        1
     76:      27        1        1
     77:      27        1        1
     78:      28        1        1
     79:      28        1        1
    device topology        :
    device          node
    0000:03:00.0       0
    0000:83:04.0       1
    0000:00:1f.2       0
    0000:00:1c.0       0
    0000:09:00.0       0
    0000:84:00.0       1
    0000:00:04.4       0
    0000:80:05.2       1
    0000:80:02.0       1
    0000:00:1f.0       0
    0000:83:0c.0       1
    0000:02:00.0       0
    0000:00:04.2       0
    0000:00:01.0       0
    0000:80:05.0       1
    0000:80:04.6       1
    0000:08:00.0       0
    0000:83:00.0       1
    0000:00:04.0       0
    0000:80:04.4       1
    0000:00:16.0       0
    0000:01:00.0       0
    0000:82:00.0       1
    0000:80:04.2       1
    0000:80:01.0       1
    0000:00:05.1       0
    0000:04:08.0       0
    0000:00:04.7       0
    0000:00:1f.3       0
    0000:00:00.0       0
    0000:80:04.0       1
    0000:04:10.0       0
    0000:00:04.5       0
    0000:06:00.0       0
    0000:81:00.0       1
    0000:00:03.0       0
    0000:87:00.0       1
    0000:02:00.1       0
    0000:00:04.3       0
    0000:80:05.1       1
    0000:00:1a.0       0
    0000:00:11.4       0
    0000:80:04.7       1
    0000:05:00.0       0
    0000:00:04.1       0
    0000:00:1d.0       0
    0000:80:04.5       1
    0000:86:00.0       1
    0000:00:05.4       0
    0000:00:16.1       0
    0000:01:00.1       0
    0000:83:08.0       1
    0000:00:11.0       0
    0000:80:04.3       1
    0000:00:05.2       0
    0000:00:02.0       0
    0000:85:00.0       1
    0000:00:14.0       0
    0000:80:04.1       1
    0000:00:1c.2       0
    0000:00:05.0       0
    0000:00:04.6       0
    0000:80:05.4       1
    numa_info              :
    node:    memsize    memfree    distances
       0:     395264       9023        10,21
       1:     393216       5379        21,10
    xen_major              : 4
    xen_minor              : 13
    xen_extra              : .1-9.9.1
    xen_version            : 4.13.1-9.9.1
    xen_caps               : xen-3.0-x86_64 xen-3.0-x86_32p hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64
    xen_scheduler          : credit
    xen_pagesize           : 4096
    platform_params        : virt_start=0xffff800000000000
    xen_changeset          : 6278553325a9, pq 70d4b5941e4f
    xen_commandline        : dom0_mem=8192M,max:8192M watchdog ucode=scan crashkernel=256M,below=4G console=vga vga=mode-0x0311 cpuid=no-ibrsb,no-ibpb,no-stibp bti=thunk=jmp,rsb_native=no,rsb_vmexit=no xpti=no cpufreq=xen:performance max_cstate=0 nospec_store_bypass_disable noibrs noibpb nopti l1tf=off mitigations=off spectre_v2_user=off spectre_v2=off nospectre_v2 nospectre_v1 no_stf_barrier mds=off spec-ctrl=no dom0_max_vcpus=1-1
    cc_compiler            : gcc (GCC) 4.8.5 20150623 (Red Hat 4.8.5-28)
    cc_compile_by          : mockbuild
    cc_compile_domain      : [unknown]
    cc_compile_date        : Thu Feb 4 18:23:36 CET 2021
    build_id               : a76c6ee84d87600fa0d520cd8ecb8113b1105af4
    xend_config_format     : 4

But in xl vcpu-list, the state of vCPUs 64-79 of the VM "0__SAP S4H S4HANA 2.0 1709 SP4" looks strange:

    [10:35 xcpng82 ~]# xl vcpu-list
    Name                             ID  VCPU   CPU  State  Time(s)  Affinity (Hard / Soft)
    Domain-0                          0     0    79  r--      257.2  all / all
    0__Confluence & Jira & Gitlab     1     0    40  -b-      142.2  all / all
    0__Confluence & Jira & Gitlab     1     1    19  -b-      142.8  all / all
    0__Confluence & Jira & Gitlab     1     2    65  -b-      141.5  all / all
    0__Confluence & Jira & Gitlab     1     3    70  -b-      136.8  all / all
    0__Confluence & Jira & Gitlab     1     4    34  -b-      138.2  all / all
    0__Confluence & Jira & Gitlab     1     5     3  -b-      141.9  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     0    76  -b-       47.3  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     1    64  -b-       25.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     2    23  -b-       25.9  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     3    28  -b-       22.8  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     4    32  -b-       34.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     5    53  -b-       53.9  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     6    11  -b-       36.1  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     7     7  -b-       35.4  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     8    12  -b-       39.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2     9    55  -b-       26.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    10    49  -b-       26.1  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    11    20  -b-       28.1  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    12    60  -b-       41.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    13    43  -b-       22.1  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    14    69  -b-       29.8  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    15    36  -b-       24.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    16    62  -b-       28.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    17    37  -b-       28.1  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    18    66  -b-       26.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    19    10  -b-       22.3  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    20    68  -b-       30.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    21    13  -b-       28.6  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    22    38  -b-       21.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    23    27  -b-       24.3  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    24    46  -b-       28.3  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    25    74  -b-       24.8  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    26    52  -b-       23.6  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    27    56  -b-       23.8  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    28    35  -b-       29.9  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    29    77  -b-       25.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    30    57  -b-       19.4  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    31    47  -b-       26.6  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    32    51  -b-       27.6  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    33    54  -b-       43.9  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    34    71  -b-       23.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    35    67  -b-       22.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    36    22  -b-       25.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    37    15  -b-       21.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    38    75  -b-       24.6  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    39     2  -b-       23.4  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    40    58  -b-       28.9  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    41    73  -b-       23.3  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    42    16  -b-       24.6  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    43    17  -b-       21.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    44    26  -b-       31.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    45    78  -b-       21.3  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    46     9  -b-       20.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    47    18  -b-       21.1  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    48    42  -b-       28.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    49    50  -b-       22.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    50    45  -b-       23.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    51    61  -b-       23.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    52    44  -b-       28.2  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    53    30  -b-       24.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    54     5  -b-       24.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    55    24  -b-       27.6  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    56    29  -b-       30.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    57    14  -b-       26.9  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    58    33  -b-       21.4  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    59     4  -b-       24.4  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    60    45  -b-       29.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    61    72  -b-       28.1  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    62     0  -b-       24.5  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    63    41  -b-       22.7  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    64     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    65     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    66     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    67     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    68     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    69     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    70     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    71     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    72     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    73     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    74     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    75     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    76     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    77     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    78     -  --p        0.0  all / all
    0__SAP S4H S4HANA 2.0 1709 SP4    2    79     -  --p        0.0  all / all

As you can see, the State flag is "-b-" for vCPUs 0-63, which seems OK, but for vCPUs 64-79 it is "--p". What does that mean?
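A listing this long is easier to read when grouped by state. A minimal sketch, assuming the column layout shown (name, ID, VCPU, CPU, State, Time(s), then a "hard / soft" affinity pair with no spaces inside each cpumap). Because domain names such as "0__SAP S4H S4HANA 2.0 1709 SP4" contain spaces, each row is split from the right. parse_vcpu_list and vcpus_by_state are hypothetical helpers, and the excerpt is three rows copied from the listing.

```python
from __future__ import annotations

from collections import defaultdict


def parse_vcpu_list(text: str) -> list[dict]:
    """Parse `xl vcpu-list` rows into dicts (illustrative helper)."""
    rows = []
    for line in text.splitlines():
        # Split from the right so the (possibly multi-word) domain name stays whole:
        # name, ID, VCPU, CPU, State, Time(s), hard affinity, "/", soft affinity.
        parts = line.rsplit(None, 8)
        # The header row and the shell prompt line fail these checks and are skipped.
        if len(parts) != 9 or not (parts[1].isdigit() and parts[2].isdigit()):
            continue
        name, domid, vcpu, cpu, state, seconds, hard, _, soft = parts
        rows.append({
            "name": name.strip(),
            "domid": int(domid),
            "vcpu": int(vcpu),
            "cpu": None if cpu == "-" else int(cpu),  # "-": not on any physical CPU
            "state": state,
            "time_s": float(seconds),
            "affinity": (hard, soft),
        })
    return rows


def vcpus_by_state(rows: list[dict], domain: str) -> dict[str, list[int]]:
    """Group one domain's vCPU numbers by their State string."""
    groups: dict[str, list[int]] = defaultdict(list)
    for row in rows:
        if row["name"] == domain:
            groups[row["state"]].append(row["vcpu"])
    return dict(groups)


excerpt = """\
Name                             ID  VCPU   CPU  State  Time(s)  Affinity (Hard / Soft)
0__SAP S4H S4HANA 2.0 1709 SP4    2     0    76  -b-       47.3  all / all
0__SAP S4H S4HANA 2.0 1709 SP4    2    63    41  -b-       22.7  all / all
0__SAP S4H S4HANA 2.0 1709 SP4    2    64     -  --p        0.0  all / all
"""
print(vcpus_by_state(parse_vcpu_list(excerpt), "0__SAP S4H S4HANA 2.0 1709 SP4"))
# {'-b-': [0, 63], '--p': [64]}
```

Run on the full listing, this would show vCPUs 0-63 under "-b-" and 64-79 under "--p", with no physical CPU assigned to the latter.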

Best regards
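The host side of the xl info output above is self-consistent: nr_cpus = sockets x cores_per_socket x threads_per_core, so 80 = 2 x 20 x 2. If I read the output correctly, each socket therefore has 20 physical cores with two hardware threads each, i.e. 40 logical CPUs per socket. A minimal sketch that derives this from the text, assuming the "key : value" layout shown above; parse_xl_info and host_topology are hypothetical helpers, and the excerpt is copied from the posted output.

```python
from __future__ import annotations

import re

# Top-level "key : value" lines. Indented table rows and PCI addresses start with
# whitespace or a digit and are skipped; table headers such as "cpu: core ..." also
# match but are harmless here.
FIELD = re.compile(r"^([a-z_][a-z0-9_ ]*?)\s*:\s*(.*)$")


def parse_xl_info(text: str) -> dict[str, str]:
    fields: dict[str, str] = {}
    for line in text.splitlines():
        match = FIELD.match(line)
        if match:
            fields[match.group(1)] = match.group(2).strip()
    return fields


def host_topology(fields: dict[str, str]) -> dict[str, int]:
    nr_cpus = int(fields["nr_cpus"])
    cores = int(fields["cores_per_socket"])
    threads = int(fields["threads_per_core"])
    return {
        "logical_cpus": nr_cpus,
        "sockets": nr_cpus // (cores * threads),
        "physical_cores": nr_cpus // threads,
        "numa_nodes": int(fields["nr_nodes"]),
    }


excerpt = """\
nr_cpus                : 80
max_cpu_id             : 79
nr_nodes               : 2
cores_per_socket       : 20
threads_per_core       : 2
cpu_topology           :
cpu:    core   socket     node
  0:       0        0        0
"""
print(host_topology(parse_xl_info(excerpt)))
# {'logical_cpus': 80, 'sockets': 2, 'physical_cores': 40, 'numa_nodes': 2}
```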

-

@sapcode

Found a description here: https://linux.die.net/man/1/xm

But I have no idea why some vCPUs start in the paused state...

Will do some testing with C-states and the performance governor...

STATES

The State field lists 6 states for a Xen Domain, and which ones the current Domain is in.

r - running The domain is currently running on a CPU

b - blocked The domain is blocked, and not running or runnable. This can be caused because the domain is waiting on IO (a traditional wait state) or has gone to sleep because there was nothing else for it to do.

p - paused The domain has been paused, usually occurring through the administrator running xm pause. When in a paused state the domain will still consume allocated resources like memory, but will not be eligible for scheduling by the Xen hypervisor.

s - shutdown The guest has requested to be shutdown, rebooted or suspended, and the domain is in the process of being destroyed in response.

c - crashed The domain has crashed, which is always a violent ending. Usually this state can only occur if the domain has been configured not to restart on crash. See xmdomain.cfg for more info.

d - dying The domain is in process of dying, but hasn't completely shutdown or crashed.
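The State strings in the xl output above use letters from this list, one position per flag and "-" when the flag is not set. A small decoder built only from the letters quoted here; decode_state is a hypothetical helper. Note that the quoted page describes whole domains, so applying it to the per-vCPU rows of xl vcpu-list is an assumption. As far as I can tell from the xl sources, a per-vCPU row prints "--p" with CPU "-" when that vCPU is not online rather than when the domain is paused, which would fit a guest that brought up only 64 of its 80 vCPUs; please verify this against the xl documentation for your Xen version.

```python
from __future__ import annotations

# Letters from the quoted "STATES" section of the xm man page.
STATE_FLAGS = {
    "r": "running",
    "b": "blocked",
    # Assumption: in xl vcpu-list rows, "p" may mean "vCPU offline" instead (unverified).
    "p": "paused",
    "s": "shutdown",
    "c": "crashed",
    "d": "dying",
}


def decode_state(state: str) -> list[str]:
    """Map a state string like '-b-' or '--p' to the names of the flags that are set."""
    return [STATE_FLAGS.get(ch, f"unknown({ch})") for ch in state if ch != "-"]


for example in ("r--", "-b-", "--p"):
    print(example, decode_state(example))
# r-- ['running']
# -b- ['blocked']
# --p ['paused']
```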