SR_BACKEND_FAILURE_109

-

So what would be the best solution to get this resolved?

-

Do you have a lot of snapshots in your VMs?

Having XOA deployed would also help with the diagnosis.

-

So we had every VM scheduled to take a snapshot every night. I noticed that errors were accumulating this week. How would I deploy XOA to help diagnose the issue?

-

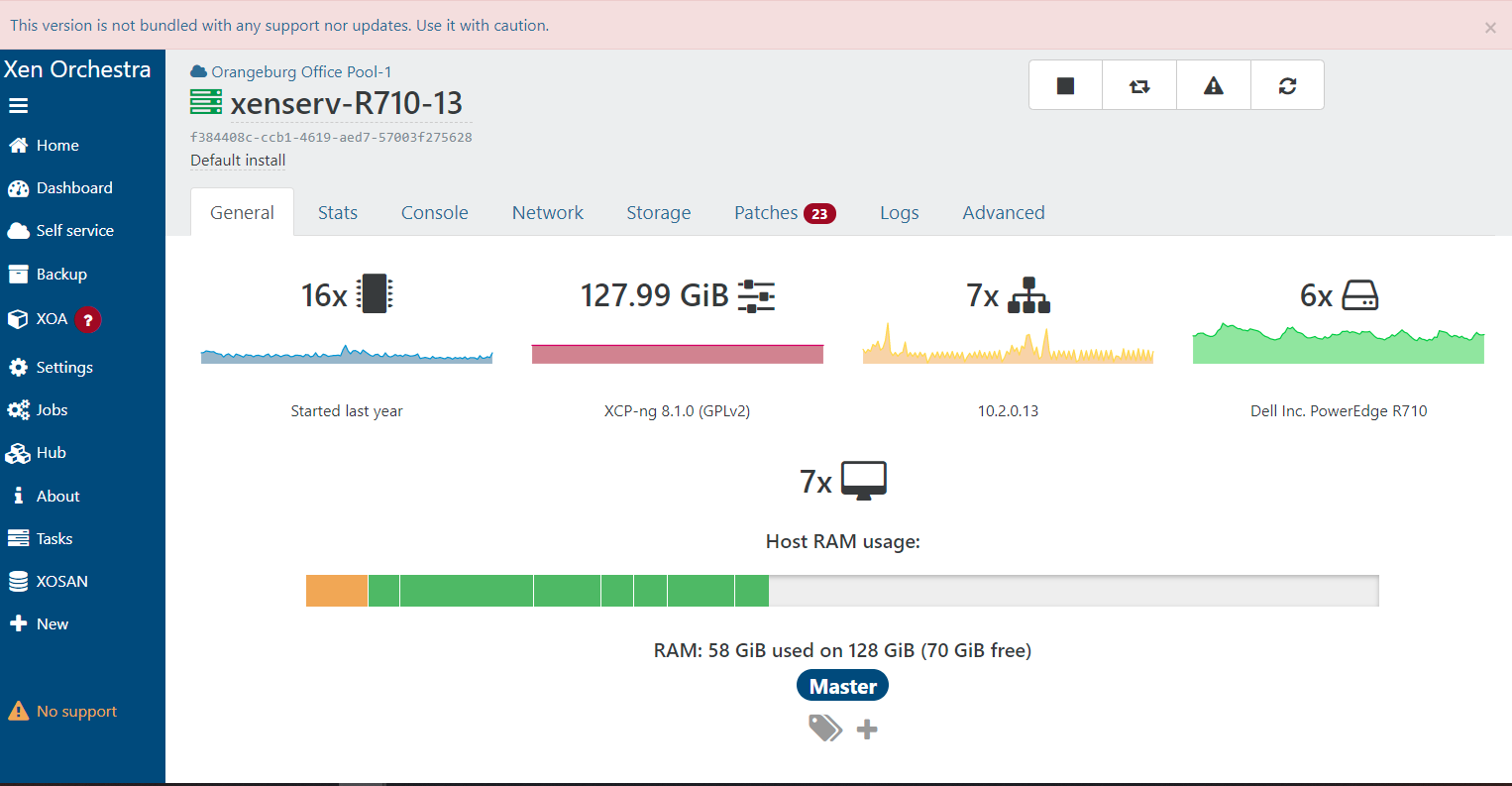

In the XCP-ng Center GUI, the snapshot section currently shows 16 VMs with 41 snapshots.

-

Are you using XCP-ng Center's scheduled snapshots? That's a poorly crafted feature compared to XO's backup capabilities (unlike XO, it doesn't take the chain into account).

I strongly suggest getting rid of XCP-ng Center at some point.

Regarding deploying XOA: https://help.vates.fr/kb/en-us/9-xen-orchestra-appliance-xoa/18-deploy-your-xoa

To fix your chain issues, please start removing snapshots.

-

One question: does Xen Orchestra have to run on XCP-ng, or can we get rid of XCP-ng altogether?

-

I'm also getting this error in the Xen Orchestra web interface (attached log: 0_1629315103732_2021-08-18T19_31_00.542Z - XO.log):

SR_BACKEND_FAILURE_47(, The SR is not available [opterr=directory not mounted: /var/run/sr-mount/4e765024-1b4d-fca2-bc51-1a09dfb669b6], )
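This error reports that the SR's mount directory is not mounted. One way to confirm that symptom directly on the host is util-linux's `mountpoint` command. The sketch below assumes you run it in dom0 on the host that reported the error; `sr_mount_status` is a hypothetical helper name, and the UUID is copied from the error message. It only checks the mount; it does not try to fix anything.

```sh
#!/bin/sh
# Sketch: report whether an SR's mount directory is currently a mount point.
# Assumes util-linux's `mountpoint` is installed (it is a standard Linux tool).
# Hypothetical helper; the UUID below is taken from the error message.
SR_UUID=4e765024-1b4d-fca2-bc51-1a09dfb669b6

sr_mount_status() {
  dir="/var/run/sr-mount/$1"
  # mountpoint -q prints nothing and exits 0 only if $dir is a mount point
  if mountpoint -q "$dir"; then
    echo "mounted: $dir"
  else
    echo "not mounted: $dir"
  fi
}

sr_mount_status "$SR_UUID"
```

If it prints `not mounted`, the SR really is unavailable on that host, which matches what XAPI is reporting.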

-

- You can run Xen Orchestra anywhere you like, as long as it can reach your pool master.

- Please do as I said earlier: go to the SR view, click on the SR in question, then open the Advanced tab. Do you have uncoalesced disks in there?

-

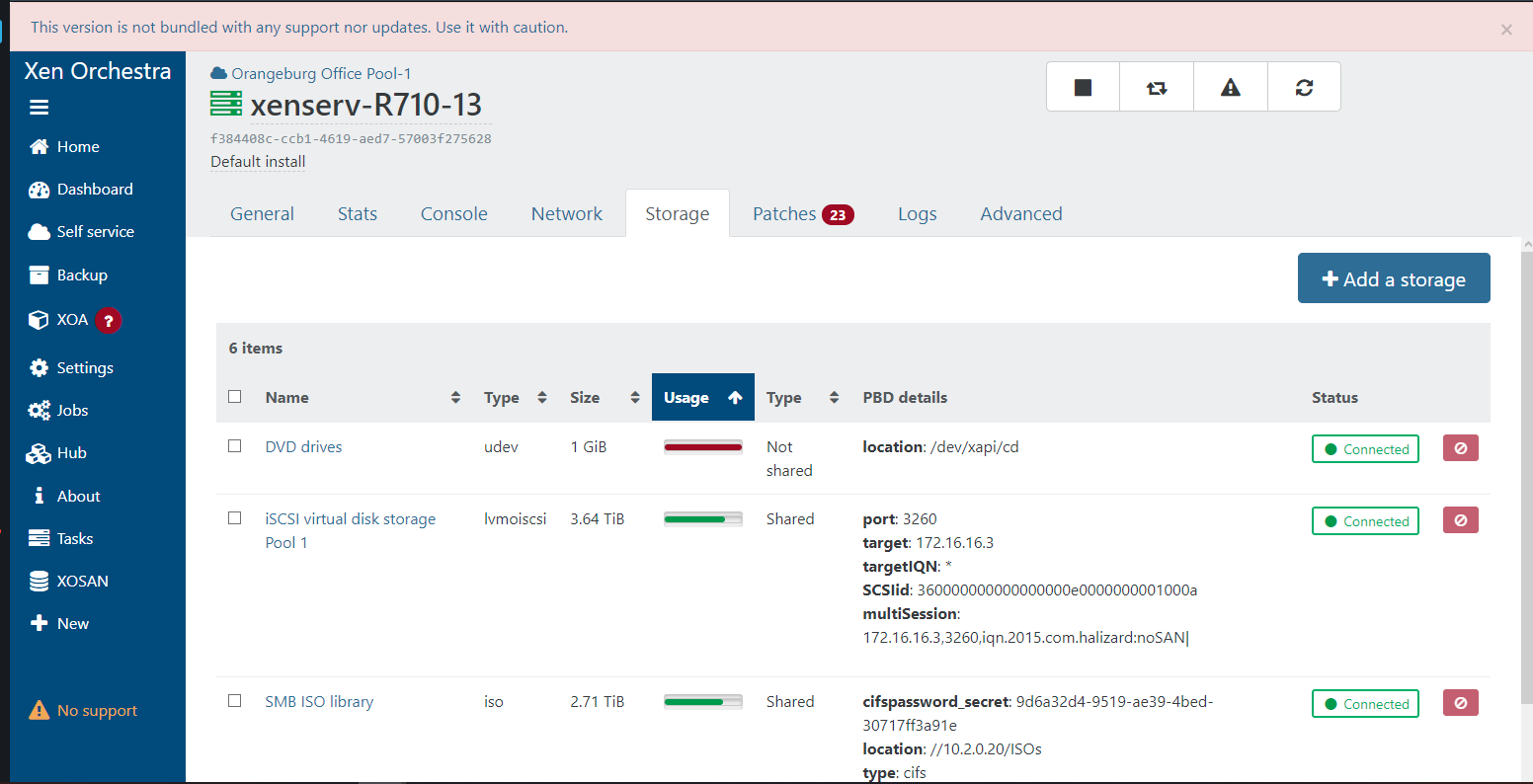

So in the ISCSI virtual disk storage pool 1 there are 606 items, but I don't see any coalesce option at all. Here are some screenshots.

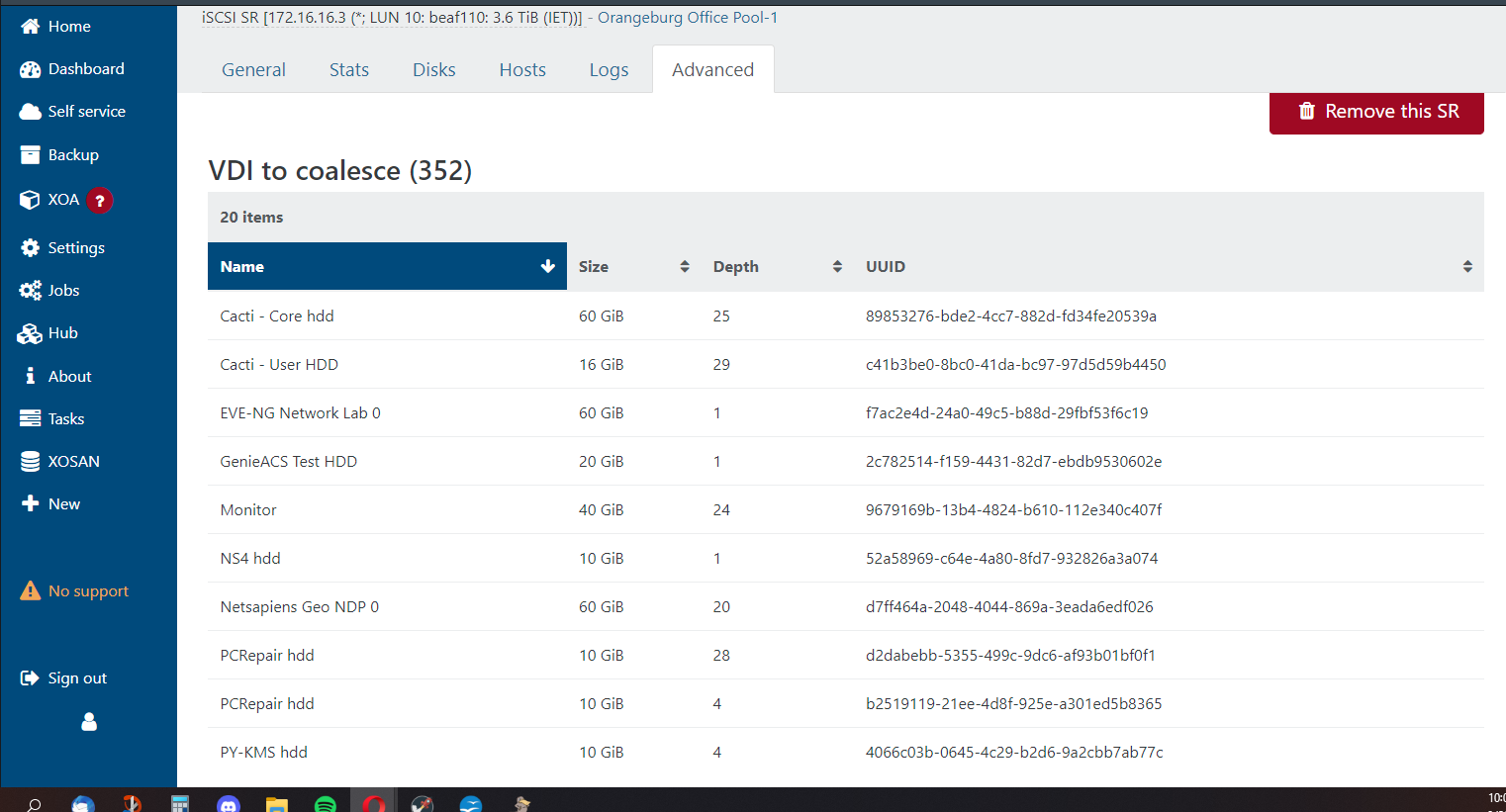

Also, after researching, I found that there are 352 items that need to be coalesced.

On the VDI to Coalesce page, what does Depth mean?

-

@ntithomas Hi,

Each time you remove a snapshot, the main disk has to coalesce the change (merge the difference) to integrate the removed snapshot. The depth is the number of removed snapshots that still have to be merged into the main disk. 29 is a lot; it will take a long time.
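To make the idea of depth more concrete, here is a toy model in shell. It assumes the depth is simply the number of parent links between the active disk and its base image, with each removed snapshot leaving one node that still has to be merged. The node names and the `chain_depth` helper are hypothetical; on a real SR, the VDI to Coalesce page in XOA reports the actual depth.

```sh
#!/bin/sh
# Toy model of a disk chain: each line is "child parent".
# Node names are hypothetical; "removedN" stands for a removed snapshot
# whose data has not been merged yet.
CHAIN='active removed3
removed3 removed2
removed2 removed1
removed1 base'

# Print the parent of node $1 (empty if it has none).
parent_of() {
  printf '%s\n' "$CHAIN" | awk -v c="$1" '$1 == c { print $2 }'
}

# Follow parent links from node $1 and print how many links there are.
chain_depth() {
  node=$1
  depth=0
  while p=$(parent_of "$node") && [ -n "$p" ]; do
    node=$p
    depth=$((depth + 1))
  done
  echo "$depth"
}

chain_depth active   # prints 4
```

In this model, each link is one merge the host still has to perform, which is why a depth of 29 takes much longer than a short chain.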

-

So is there a way to speed this up or do I just have to wait for it?

-

And by "a lot", do you mean 1 day? 2 days? 1 week?

-

I'm trying to migrate these servers off this pool, since the pool is having some sort of issue with the VDI chain being overloaded, but when I try to migrate them, it says the snapshot chain is too long. What does migrating have to do with snapshots when they're two completely different actions?

-

@ntithomas No, you will have to wait for it to finish. You can grep for the coalesce process on your host to make sure it's running:

`ps axf | grep coalesce`

-

@ntithomas It really depends on your host's resources, so I can't give you an estimate, but you can follow the progress on the coalesce page.

The thing is, the greater the depth, the longer it takes, because the merge has to happen on all the disks at the same time, and that's a lot of operations.

-

@ntithomas When you migrate a VM, you migrate its disks, so the coalesce has to happen before the disks can be migrated.

-

So when I grep, this is what comes back:

`24347 pts/4 S+ 0:00 \_ grep --color=auto coalesce`

And if I check the VDI to Coalesce page in XOA, I will see the progress?

-

@ntithomas You should see at least two lines (the grep itself plus the coalesce process). Since you only see the grep, coalesce apparently isn't happening there. You should rescan all disks in XOA.
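A plain `grep coalesce` always matches its own entry in the `ps` listing, which is the only line in the output posted above. A common trick is to put one letter of the pattern in brackets: `[c]oalesce` still matches the word "coalesce" but not its own literal text. The sketch below is a hypothetical helper (`coalesce_count` is a made-up name) and assumes, as suggested in this thread, that a running coalesce shows up with "coalesce" in its command line.

```sh
#!/bin/sh
# Sketch: count lines mentioning "coalesce" in `ps axf` output read from stdin.
# The bracket pattern '[c]oalesce' matches "coalesce" but not the literal text
# "[c]oalesce", so grep's own line in the ps listing is never counted.
coalesce_count() {
  # grep -c prints 0 but exits 1 when nothing matches; keep the exit status 0
  grep -c '[c]oalesce' || true
}

# On the host:
#   ps axf | coalesce_count
# 0 means nothing with "coalesce" in its command line is running.
```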

-

Okay, this is the error I get when I rescan all disks:

SR_BACKEND_FAILURE_47(, The SR is not available [opterr=directory not mounted: /var/run/sr-mount/4e765024-1b4d-fca2-bc51-1a09dfb669b6], )

-

We did recently have a failed drive that finished rebuilding a few days ago.