Vm.migrate Operation blocked

-

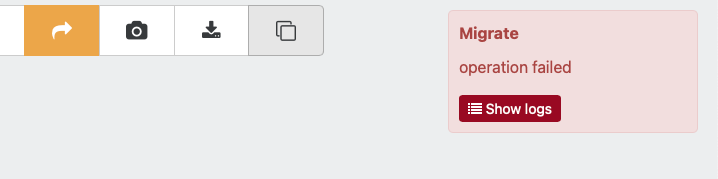

vm.migrate { "vm": "44db041e-8855-d142-e619-2e2819245a82", "migrationNetwork": "359e8975-0c69-e837-58ba-f6e8cd2e1140", "sr": "9290c72b-fdcd-cb03-9408-970ade25fcd0", "targetHost": "8adfc9f3-823d-4ce8-9971-237c785481a9" } { "code": 21, "data": { "objectId": "44db041e-8855-d142-e619-2e2819245a82", "code": "OPERATION_BLOCKED" }, "message": "operation failed", "name": "XoError", "stack": "XoError: operation failed at operationFailed (/home/node/xen-orchestra/packages/xo-common/src/api-errors.js:21:32) at file:///home/node/xen-orchestra/packages/xo-server/src/api/vm.mjs:482:15 at Object.migrate (file:///home/node/xen-orchestra/packages/xo-server/src/api/vm.mjs:469:3) at Api.callApiMethod (file:///home/node/xen-orchestra/packages/xo-server/src/xo-mixins/api.mjs:304:20)" }

After updating XCP-ng with the latest patches, I can't migrate my virtual machines anymore. They are all in the same pool on the same storage. I tried in both XOA and XCP-ng Center, with the same result.

We are using XCP-NG 8.2

Anyone have a clue where to look?

-

Can you post the output of the following?

xe vm-param-get uuid=xxxxxx param-name=blocked-operations

-

Thanks for answering!

xe vm-param-list uuid=46ca24f2-f699-6d33-657d-bbb74b495c97 | grep blocked-operations
blocked-operations (MRW): migrate_send: VM_CREATED_BY_XENDESKTOP

So I deleted the blocked operations.
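For anyone who wants to run the same check across a whole pool rather than one VM at a time, here is a rough sketch. The function names `parse_blocked_ops` and `list_blocked_vms` are made up for this example, and it assumes that `xe vm-param-get ... param-name=blocked-operations` prints the map as `operation: reason` pairs separated by `; `, as in the output above.

```shell
#!/bin/sh
# Split a "key: value; key: value" map (read from stdin) into one
# "operation<TAB>reason" line per entry. Pure text processing, no xe needed.
parse_blocked_ops() {
    tr ';' '\n' |
    sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' |
    awk '{
        i = index($0, ": ")
        if (i) printf "%s\t%s\n", substr($0, 1, i - 1), substr($0, i + 2)
    }'
}

# Hypothetical helper: print "<vm-uuid><TAB>operation<TAB>reason" for every
# non-control-domain VM that has at least one blocked operation.
# Must be run on an XCP-ng host where the xe CLI is available.
list_blocked_vms() {
    for uuid in $(xe vm-list is-control-domain=false params=uuid --minimal | tr ',' ' '); do
        ops=$(xe vm-param-get uuid="$uuid" param-name=blocked-operations)
        [ -n "$ops" ] || continue
        printf '%s\n' "$ops" | parse_blocked_ops | awk -v u="$uuid" '{ print u "\t" $0 }'
    done
}

# Usage on a host: list_blocked_vms
```

This only reads parameters and changes nothing on the pool.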

xe vm-param-clear uuid=46ca24f2-f699-6d33-657d-bbb74b495c97 param-name=blocked-operations

I then rebooted the machine and checked the allowed operations.

xe vm-param-list uuid=46ca24f2-f699-6d33-657d-bbb74b495c97 | grep allowed-operations
allowed-operations (SRO): changing_dynamic_range; migrate_send; pool_migrate; changing_VCPUs_live; suspend; hard_reboot; hard_shutdown; clean_reboot; clean_shutdown; pause; checkpoint; snapshot

I can now see that migrate_send is back. But when I tried to migrate the VM again, a new error message popped up.

vm.migrate { "vm": "46ca24f2-f699-6d33-657d-bbb74b495c97", "migrationNetwork": "359e8975-0c69-e837-58ba-f6e8cd2e1140", "sr": "9290c72b-fdcd-cb03-9408-970ade25fcd0", "targetHost": "6a310c4f-d3b6-414e-8ca8-2b05b27b45d9" } { "code": 21, "data": { "objectId": "46ca24f2-f699-6d33-657d-bbb74b495c97", "code": "VDI_ON_BOOT_MODE_INCOMPATIBLE_WITH_OPERATION" }, "message": "operation failed", "name": "XoError", "stack": "XoError: operation failed at operationFailed (/home/node/xen-orchestra/packages/xo-common/src/api-errors.js:21:32) at file:///home/node/xen-orchestra/packages/xo-server/src/api/vm.mjs:482:15 at Object.migrate (file:///home/node/xen-orchestra/packages/xo-server/src/api/vm.mjs:469:3) at Api.callApiMethod (file:///home/node/xen-orchestra/packages/xo-server/src/xo-mixins/api.mjs:304:20)" }

Do you have any idea where I can poke around next?

Thanks

-

I think you shouldn't do that. If operations are blocked, there's a reason.

The reason here is probably Citrix Desktop or Citrix App (XenDesktop).
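If the block has already been cleared, it can be put back by hand. A minimal sketch, assuming the original entry was the `migrate_send: VM_CREATED_BY_XENDESKTOP` pair shown earlier in the thread; `restore_migrate_block` is a hypothetical helper name, and whether XenDesktop re-applies the block on its own is not something this thread confirms.

```shell
#!/bin/sh
# Hypothetical helper: re-add the migrate_send entry to a VM's
# blocked-operations map. The reason defaults to the value seen in this
# thread; pass a second argument to use a different one.
restore_migrate_block() {
    vm=$1
    reason=${2:-VM_CREATED_BY_XENDESKTOP}
    xe vm-param-set uuid="$vm" "blocked-operations:migrate_send=$reason"
}

# Usage on a host:
#   restore_migrate_block 46ca24f2-f699-6d33-657d-bbb74b495c97
```

Afterwards, `xe vm-param-get uuid=<vm> param-name=blocked-operations` should list the entry again.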

Also, for desktop virtualization, XenDesktop uses a specific disk mode that discards whatever was written once the VM shuts down. So it's not possible to migrate those VMs.
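To see which disks are in that mode, the `on-boot` parameter of each VDI can be read with `xe`. The sketch below is only an illustration: `vm_on_boot_modes` is a made-up name, and it assumes the mode in question is `on-boot=reset` (as opposed to `persist`), which is what the VDI_ON_BOOT_MODE_INCOMPATIBLE_WITH_OPERATION error appears to refer to.

```shell
#!/bin/sh
# Hypothetical helper: print "<vdi-uuid><TAB><on-boot mode>" for every disk
# attached to the given VM. Read-only; needs the xe CLI on an XCP-ng host.
vm_on_boot_modes() {
    vm=$1
    # type=Disk skips CD drives, which may have no VDI attached
    for vdi in $(xe vbd-list vm-uuid="$vm" type=Disk params=vdi-uuid --minimal | tr ',' ' '); do
        mode=$(xe vdi-param-get uuid="$vdi" param-name=on-boot)
        printf '%s\t%s\n' "$vdi" "$mode"
    done
}

# Usage on a host:
#   vm_on_boot_modes 46ca24f2-f699-6d33-657d-bbb74b495c97
```

A disk reported as `reset` would be the one to ask Citrix about before attempting anything that moves storage.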

You should ask Citrix if what you want to do is possible with XenDesktop. In any case, this is not related to an XCP-ng problem but the way XenDesktop is interacting with your VMs.

Also, please tell us what they think about having XenDesktop on top of XCP-ng.

We'd like to have official support, but I think they won't care.

-

I have now concluded that the problem is related to MCS (Machine Creation Services). MCS does not support Storage XenMotion. When you move VMs with regular XenMotion, it all works as expected. We are pretty new to MCS, which is why this problem showed up.

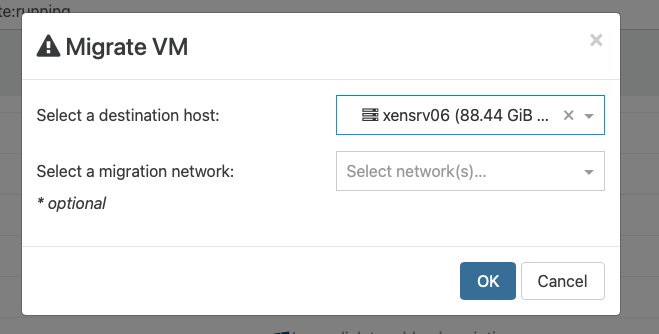

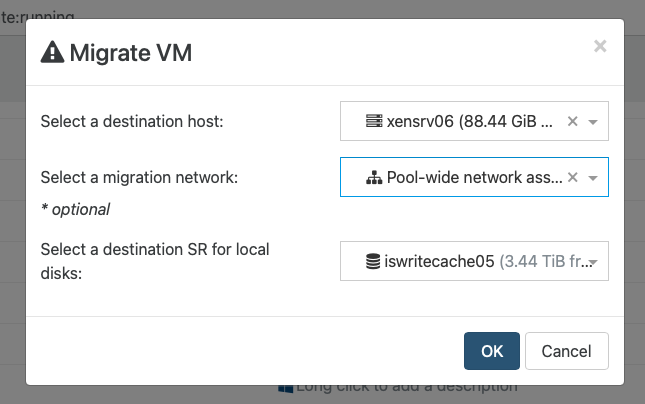

If you migrate VMs in Xen Orchestra with the default settings, without touching the migration network, it works fine because it's using XenMotion.

If you choose a migration network, as in the screenshot, it won't work because it's using Storage XenMotion.

Even if XenDesktop is not officially supported on XCP-ng, it has been working fine for us. We have 80 terminal servers on 18 XCP-ng hosts and have been running it for years with no big problems.

Anyway thanks for your response.

-

Great to know

So it works as "expected". That would be wonderful to have your XCP-ng hosts covered by our support so you get the entire stack supported!

-

@fanuelsen I was having a similar problem just now with XCP-NG 8.3 LTS and the latest XO. I was unable to migrate an MCS-created VM using XO (I was doing a Host Migrate within the pool only; no storage migration). Oddly, I was able to do the Host Migration using XCP-NG Center.

This was in a production Pool that I had recently set up, and this time I had been given a dedicated 10Gbps team with VLANs for migration and host management, and two separate 10Gbps teams for storage and for VMs, respectively.

To get live migration of my MCS-created VMs to work, I had to delete the Default Migration Network on the Pool's Advanced tab. I don't see a downside to doing this as all my NICs are 10Gbps, so all NICs should operate at roughly the same speed.

ETA: With the Default Migration Network deleted, I have confirmed that migration traffic defaults to going over the management NICs where it is desired rather than going over the storage or VM NICs.
