-

@ronan-a said in XOSTOR hyperconvergence preview:

Yes, you can. Please take a look to: https://linbit.com/drbd-user-guide/linstor-guide-1_0-en/#s-managing_network_interface_cards

To get the storage pool name, execute this command in your pool:OK - so to set up this XOSTOR SR I would first

xe sr-create type=linstor name-label=XOSTOR01 host-uuid=xxx device-config:hosts=<XCPNG Host Names> ...etc...to create the Linstor storage-pool. Then

linstor storage-pool listto get the name the pool. Then on each node found in

device-config:hosts=<XCPNG Host Names>run the following command:linstor storage-pool set-property <host/node_name> <pool_name> PrefNic <nic_name>where

nic_nameis the name of the Linstor interface created for the specific NIC. -

As promised I have done some more "organised" testing, with a brand new cluster set up to test XOSTOR. Early simple tests seemed to be OK and I can create, restart, snapshot, move and delete VMs with no problem.

But then after putting a VM under load for an hour and then restarting it I am seeing the same weird behaviour I saw previously with XOSTOR.

Firstly, the VM took far longer than expected to shutdown. Then trying to restart the VM fails.

Let me know if you want me to provide logs or any specific testing.

vm.start { "id": "ade699f2-42f0-8629-35ea-6fcc69de99d7", "bypassMacAddressesCheck": false, "force": false } { "code": "SR_BACKEND_FAILURE_1200", "params": [ "", "No such Tapdisk(minor=12)", "" ], "call": { "method": "VM.start", "params": [ "OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8", false, false ] }, "message": "SR_BACKEND_FAILURE_1200(, No such Tapdisk(minor=12), )", "name": "XapiError", "stack": "XapiError: SR_BACKEND_FAILURE_1200(, No such Tapdisk(minor=12), ) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/_XapiError.js:16:12) at /opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/transports/json-rpc.js:37:27 at AsyncResource.runInAsyncScope (async_hooks.js:197:9) at cb (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (internal/timers.js:464:21) at process.callbackTrampoline (internal/async_hooks.js:130:17)" }The linstore volume for this VM appears to be OK (by the way I hope eventually we have an easy way to match up VMs to Linstore volumes)

[16:53 XCPNG31 ~]# linstor volume list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG30 | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | xcp-sr-linstor_group | 0 | 1001 | /dev/drbd1001 | 40.10 GiB | InUse | UpToDate | | XCPNG31 | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | xcp-sr-linstor_group | 0 | 1001 | /dev/drbd1001 | 40.10 GiB | Unused | UpToDate | | XCPNG32 | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | DfltDisklessStorPool | 0 | 1001 | /dev/drbd1001 | | Unused | Diskless | [16:59 XCPNG31 ~]# linstor node list ╭─────────────────────────────────────────────────────────╮ ┊ Node ┊ NodeType ┊ Addresses ┊ State ┊ ╞═════════════════════════════════════════════════════════╡ ┊ XCPNG30 ┊ COMBINED ┊ 192.168.1.30:3366 (PLAIN) ┊ Online ┊ ┊ XCPNG31 ┊ COMBINED ┊ 192.168.1.31:3366 (PLAIN) ┊ Online ┊ ┊ XCPNG32 ┊ COMBINED ┊ 192.168.1.32:3366 (PLAIN) ┊ Online ┊ ╰─────────────────────────────────────────────────────────╯ [17:13 XCPNG31 ~]# linstor storage-pool list ╭──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮ ┊ StoragePool ┊ Node ┊ Driver ┊ PoolName ┊ FreeCapacity ┊ TotalCapacity ┊ CanSnapshots ┊ State ┊ SharedName ┊ ╞══════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════╡ ┊ DfltDisklessStorPool ┊ XCPNG30 ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊ ┊ DfltDisklessStorPool ┊ XCPNG31 ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊ ┊ DfltDisklessStorPool ┊ XCPNG32 ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊ ┊ xcp-sr-linstor_group ┊ XCPNG30 ┊ LVM ┊ linstor_group ┊ 3.50 TiB ┊ 3.64 TiB ┊ False ┊ Ok ┊ ┊ ┊ xcp-sr-linstor_group ┊ XCPNG31 ┊ LVM ┊ linstor_group ┊ 3.49 TiB ┊ 3.64 TiB ┊ False ┊ Ok ┊ ┊ ┊ xcp-sr-linstor_group ┊ XCPNG32 ┊ LVM ┊ linstor_group ┊ 3.50 TiB ┊ 3.64 TiB ┊ False ┊ Ok ┊ ┊ ╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯I am seeing the following errors in the SMLog (bb027c9a-5655-4f93-9090-e76b34b2c90d is the disk for this VM)

Jul 23 16:48:30 XCPNG30 SM: [22961] lock: opening lock file /var/lock/sm/bb027c9a-5655-4f93-9090-e76b34b2c90d/vdi Jul 23 16:48:30 XCPNG30 SM: [22961] blktap2.deactivate Jul 23 16:48:30 XCPNG30 SM: [22961] lock: acquired /var/lock/sm/bb027c9a-5655-4f93-9090-e76b34b2c90d/vdi Jul 23 16:48:30 XCPNG30 SM: [22961] ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30'] Jul 23 16:49:00 XCPNG30 SM: [22961] = 5 Jul 23 16:49:00 XCPNG30 SM: [22961] ***** BLKTAP2:<function _deactivate_locked at 0x7f6fb33208c0>: EXCEPTION <class 'blktap2.CommandFailure'>, ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30'] failed: status=5, pid=22983, errmsg=Input/output error Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 85, in wrapper Jul 23 16:49:00 XCPNG30 SM: [22961] ret = op(self, *args) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1744, in _deactivate_locked Jul 23 16:49:00 XCPNG30 SM: [22961] self._deactivate(sr_uuid, vdi_uuid, caching_params) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1785, in _deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] self._tap_deactivate(minor) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1368, in _tap_deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] tapdisk.shutdown() Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 880, in shutdown Jul 23 16:49:00 XCPNG30 SM: [22961] TapCtl.close(self.pid, self.minor, force) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 433, in close Jul 23 16:49:00 XCPNG30 SM: [22961] cls._pread(args) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 296, in _pread Jul 23 16:49:00 XCPNG30 SM: [22961] tapctl._wait(quiet) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 285, in _wait Jul 23 16:49:00 XCPNG30 SM: [22961] raise self.CommandFailure(self.cmd, **info) Jul 23 16:49:00 XCPNG30 SM: [22961] Jul 23 16:49:00 XCPNG30 SM: [22961] lock: released /var/lock/sm/bb027c9a-5655-4f93-9090-e76b34b2c90d/vdi Jul 23 16:49:00 XCPNG30 SM: [22961] call-plugin on 7aaaf4a5-0e43-442e-a9b1-38620c87fd69 (linstor-manager:lockVdi with {'groupName': 'linstor_group', 'srUuid': '141d63f6-d3ed-4a2f-588a-1835f0cea588', 'vdiUuid': 'bb027c9a-5655-4f93-9090-e76b34b2c90d', 'locked': 'False'}) returned: True Jul 23 16:49:00 XCPNG30 SM: [22961] ***** generic exception: vdi_deactivate: EXCEPTION <class 'blktap2.CommandFailure'>, ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30'] failed: status=5, pid=22983, errmsg=Input/output error Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 110, in run Jul 23 16:49:00 XCPNG30 SM: [22961] return self._run_locked(sr) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked Jul 23 16:49:00 XCPNG30 SM: [22961] rv = self._run(sr, target) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 274, in _run Jul 23 16:49:00 XCPNG30 SM: [22961] caching_params) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1729, in deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] if self._deactivate_locked(sr_uuid, vdi_uuid, caching_params): Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 85, in wrapper Jul 23 16:49:00 XCPNG30 SM: [22961] ret = op(self, *args) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1744, in _deactivate_locked Jul 23 16:49:00 XCPNG30 SM: [22961] self._deactivate(sr_uuid, vdi_uuid, caching_params) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1785, in _deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] self._tap_deactivate(minor) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1368, in _tap_deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] tapdisk.shutdown() Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 880, in shutdown Jul 23 16:49:00 XCPNG30 SM: [22961] TapCtl.close(self.pid, self.minor, force) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 433, in close Jul 23 16:49:00 XCPNG30 SM: [22961] cls._pread(args) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 296, in _pread Jul 23 16:49:00 XCPNG30 SM: [22961] tapctl._wait(quiet) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 285, in _wait Jul 23 16:49:00 XCPNG30 SM: [22961] raise self.CommandFailure(self.cmd, **info) Jul 23 16:49:00 XCPNG30 SM: [22961] Jul 23 16:49:00 XCPNG30 SM: [22961] ***** LINSTOR resources on XCP-ng: EXCEPTION <class 'blktap2.CommandFailure'>, ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30'] failed: status=5, pid=22983, errmsg=Input/output error Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 378, in run Jul 23 16:49:00 XCPNG30 SM: [22961] ret = cmd.run(sr) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 110, in run Jul 23 16:49:00 XCPNG30 SM: [22961] return self._run_locked(sr) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked Jul 23 16:49:00 XCPNG30 SM: [22961] rv = self._run(sr, target) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 274, in _run Jul 23 16:49:00 XCPNG30 SM: [22961] caching_params) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1729, in deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] if self._deactivate_locked(sr_uuid, vdi_uuid, caching_params): Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 85, in wrapper Jul 23 16:49:00 XCPNG30 SM: [22961] ret = op(self, *args) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1744, in _deactivate_locked Jul 23 16:49:00 XCPNG30 SM: [22961] self._deactivate(sr_uuid, vdi_uuid, caching_params) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1785, in _deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] self._tap_deactivate(minor) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1368, in _tap_deactivate Jul 23 16:49:00 XCPNG30 SM: [22961] tapdisk.shutdown() Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 880, in shutdown Jul 23 16:49:00 XCPNG30 SM: [22961] TapCtl.close(self.pid, self.minor, force) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 433, in close Jul 23 16:49:00 XCPNG30 SM: [22961] cls._pread(args) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 296, in _pread Jul 23 16:49:00 XCPNG30 SM: [22961] tapctl._wait(quiet) Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 285, in _wait Jul 23 16:49:00 XCPNG30 SM: [22961] raise self.CommandFailure(self.cmd, **info) Jul 23 16:49:00 XCPNG30 SM: [22961]If I try to start this VM after the above issue I get this error:

vm.start { "id": "ade699f2-42f0-8629-35ea-6fcc69de99d7", "bypassMacAddressesCheck": false, "force": false } { "code": "FAILED_TO_START_EMULATOR", "params": [ "OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8", "domid 12", "QMP failure at File \"xc/device.ml\", line 3366, characters 71-78" ], "call": { "method": "VM.start", "params": [ "OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8", false, false ] }, "message": "FAILED_TO_START_EMULATOR(OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8, domid 12, QMP failure at File \"xc/device.ml\", line 3366, characters 71-78)", "name": "XapiError", "stack": "XapiError: FAILED_TO_START_EMULATOR(OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8, domid 12, QMP failure at File \"xc/device.ml\", line 3366, characters 71-78) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/_XapiError.js:16:12) at /opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/transports/json-rpc.js:37:27 at AsyncResource.runInAsyncScope (async_hooks.js:197:9) at cb (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (internal/timers.js:464:21) at process.callbackTrampoline (internal/async_hooks.js:130:17)" }This VM is set up the same way as several other VMs I have running on another cluster but using SRs on NFS mounts.

This is with latest XCP-ng version, all servers patched and up-to-date.

yum update ... No packages marked for update -

@geoffbland Thank you for your tests.

Could you send me the other logs (/var/log/SMlog + kern.log + drbd-kern.log please)? Also check if the LVM volumes are reachable with

linstor resource list. Also, you can check withlvscommand on each host.EIOerror is not a nice error to observe. -

@ronan-a The volumes are reachable on all nodes:

[16:13 XCPNG30 ~]# linstor --controllers=192.168.1.30,192.168.1.31,192.168.1.32 resource list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG30 | 7001 | InUse | Ok | UpToDate | 2022-07-15 20:03:53 | | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG31 | 7001 | Unused | Ok | UpToDate | 2022-07-15 20:03:59 | | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG32 | 7001 | Unused | Ok | Diskless | 2022-07-15 20:03:51 | [16:12 XCPNG31 ~]# linstor --controllers=192.168.1.30,192.168.1.31,192.168.1.32 resource list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG30 | 7001 | InUse | Ok | UpToDate | 2022-07-15 20:03:53 | | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG31 | 7001 | Unused | Ok | UpToDate | 2022-07-15 20:03:59 | | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG32 | 7001 | Unused | Ok | Diskless | 2022-07-15 20:03:51 | [16:14 XCPNG32 ~]# linstor --controllers=192.168.1.30,192.168.1.31,192.168.1.32 resource list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG30 | 7001 | InUse | Ok | UpToDate | 2022-07-15 20:03:53 | | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG31 | 7001 | Unused | Ok | UpToDate | 2022-07-15 20:03:59 | | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG32 | 7001 | Unused | Ok | Diskless | 2022-07-15 20:03:51 |Volumes appear to be OK on 2 hosts and not present on the third - although with rep set as 2 I think that is expected?

[16:14 XCPNG30 ~]# lvs | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f_00000 linstor_group -wi-ao---- <40.10g [16:15 XCPNG31 ~]# lvs | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f_00000 linstor_group -wi-ao---- <40.10g [16:19 XCPNG32 ~]# lvs | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f No linesAlthough when running the lvs command on each host I am getting a lot of warnings about DRDB volumes - these seem to be volumes that were previously deleted but not cleaned up fully:

/dev/drbd1024: open failed: Wrong medium type /dev/drbd1026: open failed: Wrong medium type /dev/drbd1028: open failed: Wrong medium type /dev/drbd1000: open failed: Wrong medium type /dev/drbd1002: open failed: Wrong medium type /dev/drbd1012: open failed: Wrong medium type /dev/drbd1014: open failed: Wrong medium type /dev/drbd1016: open failed: Wrong medium type /dev/drbd1018: open failed: Wrong medium type /dev/drbd1020: open failed: Wrong medium type /dev/drbd1022: open failed: Wrong medium type -

We've been trying to re-setup linstor on our new pool, and it seems to be working this time. However, when attempting to enable pool HA, it's not working correctly with this error :

[16:36 ovbh-pprod-xen10 ~]# xe pool-ha-enable heartbeat-sr-uuids=a8b860a9-5246-0dd2-8b7f-4806604f219a This operation cannot be performed because this VDI could not be properly attached to the VM. vdi: 5ca46fd4-c315-46c9-8272-5192118e33a9 (Statefile for HA)SMLog

Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] lock: opening lock file /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] lock: acquired /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] Synchronize metadata... Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] VDI 5ca46fd4-c315-46c9-8272-5192118e33a9 loaded! (path=/dev/drbd/by-res/xcp-persistent-ha-statefile/0, hidden=0) Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] lock: released /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] vdi_generate_config {'sr_uuid': 'a8b860a9-5246-0dd2-8b7f-4806604f219a', 'subtask_of': 'OpaqueRef:29c37d59-5cb3-4cfe-aa3f-097ae089480d', 'vdi_ref': 'OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070', 'vdi_on_boot': 'persist', 'args': [], 'vdi_location': '5ca46fd4-c315-46c9-8272-5192118e33a9', 'host_ref': 'OpaqueRef:b5e3bf1f-2c0e-4272-85f5-7cde69a8d98e', 'session_ref': 'OpaqueRef:a1883dc6-76bf-4b2c-9829-6ed610791896', 'device_config': {'group-name': 'linstor_group/thin_device', 'redundancy': '3', 'hosts': 'ovbh-pprod-xen10,ovbh-pprod-xen11,ovbh-pprod-xen12', 'SRmaster': 'true', 'provisioning': 'thin'}, 'command': 'vdi_generate_config', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283', 'local_cache_sr': '539b8459-d081-b16e-607c-34a51e0e21d3', 'vdi_uuid': '5ca46fd4-c315-46c9-8272-5192118e33a9'} Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] LinstorVDI.generate_config for 5ca46fd4-c315-46c9-8272-5192118e33a9 Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] lock: opening lock file /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] lock: acquired /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] VDI 5ca46fd4-c315-46c9-8272-5192118e33a9 loaded! (path=/dev/http-nbd/xcp-persistent-ha-statefile, hidden=False) Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] lock: released /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] vdi_attach_from_config {'sr_uuid': 'a8b860a9-5246-0dd2-8b7f-4806604f219a', 'sr_sm_config': {}, 'vdi_path': '/dev/http-nbd/xcp-persistent-ha-statefile', 'device_config': {'group-name': 'linstor_group/thin_device', 'redundancy': '3', 'hosts': 'ovbh-pprod-xen10,ovbh-pprod-xen11,ovbh-pprod-xen12', 'SRmaster': 'true', 'provisioning': 'thin'}, 'command': 'vdi_attach_from_config', 'vdi_uuid': '5ca46fd4-c315-46c9-8272-5192118e33a9'} Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] LinstorVDI.attach_from_config for 5ca46fd4-c315-46c9-8272-5192118e33a9 Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] LinstorVDI.attach for 5ca46fd4-c315-46c9-8272-5192118e33a9 Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] Kill http server /run/http-server-xcp-persistent-ha-statefile.pid (pid=10814) Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] Starting http-disk-server on port 8076... Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 0 Jul 26 16:37:11 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 1 Jul 26 16:37:12 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 2 Jul 26 16:37:13 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 3 Jul 26 16:37:14 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 4 Jul 26 16:37:15 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 5 Jul 26 16:37:16 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 6 Jul 26 16:37:17 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 7 Jul 26 16:37:18 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 8 Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 9 Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Raising exception [46, The VDI is not available [opterr=Failed to start nbd-server: Cannot start persistent NBD server: no XAPI session, nor XHA config file]] Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ***** LinstorVDI.attach_from_config: EXCEPTION <class 'SR.SROSError'>, The VDI is not available [opterr=Failed to start nbd-server: Cannot start persistent NBD server: no XAPI session, nor XHA config file] Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2027, in attach_from_config Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self.attach(sr_uuid, vdi_uuid) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 1827, in attach Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self._attach_using_http_nbd() Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2764, in _attach_using_http_nbd Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] self._start_persistent_nbd_server(volume_name) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2647, in _start_persistent_nbd_server Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] opterr='Failed to start nbd-server: {}'.format(e) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Raising exception [47, The SR is not available [opterr=Unable to attach from config]] Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ***** generic exception: vdi_attach_from_config: EXCEPTION <class 'SR.SROSError'>, The SR is not available [opterr=Unable to attach from config] Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 110, in run Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self._run_locked(sr) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] rv = self._run(sr, target) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 294, in _run Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ret = target.attach_from_config(self.params['sr_uuid'], self.vdi_uuid) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2032, in attach_from_config Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] opterr='Unable to attach from config' Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ***** LINSTOR resources on XCP-ng: EXCEPTION <class 'SR.SROSError'>, The SR is not available [opterr=Unable to attach from config] Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 378, in run Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ret = cmd.run(sr) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 110, in run Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self._run_locked(sr) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] rv = self._run(sr, target) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 294, in _run Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ret = target.attach_from_config(self.params['sr_uuid'], self.vdi_uuid) Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2032, in attach_from_config Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] opterr='Unable to attach from config' Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522]xensource

Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi||cli] xe pool-ha-enable heartbeat-sr-uuids=a8b860a9-5246-0dd2-8b7f-4806604f219a username=root password=(omitted) Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|session.login_with_password D:28e65110d9c7|xapi_session] Session.create trackid=aaadbd98bb0d2da814837704c59da59e pool=false uname=root originator=cli is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12894 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:d4e1d279b97d created by task D:28e65110d9c7 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|audit] Pool.enable_ha: pool = '630d94cc-d026-b478-2a9e-ce3bc6759fc6 (OVBH-PROD-XENPOOL03)'; heartbeat_srs = [ OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283 ]; configuration = [ ] Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Enabling HA on the Pool. Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ warn||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Warning: A possible network anomaly was found. The following hosts possibly have storage PIFs that can be unplugged: A possible network anomaly was found. The following hosts possibly have storage PIFs that are not dedicated:, ovbh-pprod-xen10: bond1 (uuid: 92e5951a-a007-5fcd-cd6e-583e808d3fc4) Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_message] Message.create IP_CONFIGURED_PIF_CAN_UNPLUG 3 Pool 630d94cc-d026-b478-2a9e-ce3bc6759fc6 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|thread_queue] push(email message queue, IP_CONFIGURED_PIF_CAN_UNPLUG): queue = [ IP_CONFIGURED_PIF_CAN_UNPLUG ](1) Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xha_statefile] re-using existing statefile: 5ca46fd4-c315-46c9-8272-5192118e33a9 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xha_metadata_vdi] re-using existing metadata VDI: 3eea65a8-3905-406d-bfdd-8c774bb43ee0 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Protected VMs: [ ] Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||4145 ||thread_queue] pop(email message queue) = IP_CONFIGURED_PIF_CAN_UNPLUG Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Restart plan for non-agile offline VMs: [ ] Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Planning configuration for offline agile VMs = { total_hosts = 3; num_failures = 0; hosts = [ 8a459076 (ovbh-pprod-xen12), 257938591744; 438137c7 (ovbh-pprod-xen11), 262271307776; b5e3bf1f (ovbh-pprod-xen10), 262271311872 ]; vms = [ ]; placement = [ ] } Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Computing a specific plan for the failure of VMs: [ ] Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Restart plan for agile offline VMs: [ ] Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Planning configuration for future failures = { total_hosts = 3; num_failures = 0; hosts = [ 8a459076 (ovbh-pprod-xen12), 257938591744; 438137c7 (ovbh-pprod-xen11), 262271307776; b5e3bf1f (ovbh-pprod-xen10), 262271311872 ]; vms = [ ]; placement = [ ] } Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] plan_for_n_failures config = { total_hosts = 3; num_failures = 0; hosts = [ 8a459076 (ovbh-pprod-xen12), 257938591744; 438137c7 (ovbh-pprod-xen11), 262271307776; b5e3bf1f (ovbh-pprod-xen10), 262271311872 ]; vms = [ ]; placement = [ ] } Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] HA failover plan exists for all protected VMs Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] to_tolerate = 0 planned_for = 0 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Detaching any existing statefiles: these are not needed any more Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Attempting to permanently attach statefile VDI: OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|static_vdis] permanent_vdi_attach: vdi = OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070; sr = OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xapi_session] Session.create trackid=2cb32dffdfaa305684431f44c5873f23 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12896 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:d74318d22de3 created by task D:40d083416923 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xmlrpc_client] stunnel pid: 4604 (cached = true) connected to 10.2.0.21:443 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xmlrpc_client] with_recorded_stunnelpid task_opt=None s_pid=4604 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xmlrpc_client] stunnel pid: 4604 (cached = true) returned stunnel to cache Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xapi_session] Session.destroy trackid=2cb32dffdfaa305684431f44c5873f23 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12897 HTTPS 10.2.0.5->:::80|task.create D:5ee8faf4a9fa|taskhelper] task Xapi#getResource /rrd_updates R:58b4da0ce9ec (uuid:0be27ff2-b458-8a76-0a11-f069fdc7f186) created (trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88) by task D:5ee8faf4a9fa Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12898 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:27670cf1c9d3 created by task D:65bd950f3466 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12898 /var/lib/xcp/xapi|session.logout D:6a026daeda09|xapi_session] Session.destroy trackid=cf6600bb9537bf503e328d9387afff9c Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12899 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:baa574e97b12 created by task D:65bd950f3466 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12899 /var/lib/xcp/xapi|session.slave_login D:8c52ad05a8de|xapi_session] Session.create trackid=dd82c958f9ec6e2e5126f98706f39fdf pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12900 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:d1dfaed156c9 created by task D:8c52ad05a8de Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12901 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:45591c3f6bf7 created by task D:65bd950f3466 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12902 /var/lib/xcp/xapi|session.login_with_password D:8a2fe7639b51|xapi_session] Session.create trackid=8ddfe1f61f1f00dc119fa7d8f95c8f60 pool=false uname=root originator=xen-api-scripts-static-vdis is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12904 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:0065eb435b07 created by task D:8a2fe7639b51 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12903 /var/lib/xcp/xapi|session.login_with_password D:5fc61c47cb61|xapi_session] Session.create trackid=6198b4d0eec3bea720a5489b7d9993c8 pool=false uname=__dom0__mail_alarm originator=xen-api-scripts-mail-alarm is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12905 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:66ebbe281c12 created by task D:5fc61c47cb61 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12922 /var/lib/xcp/xapi|session.logout D:bf2abbbb44ab|xapi_session] Session.destroy trackid=6198b4d0eec3bea720a5489b7d9993c8 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [error||4145 ||xapi_message] Unexpected exception in message hook /opt/xensource/libexec/mail-alarm: INTERNAL_ERROR: [ Subprocess exited with unexpected code 1; stdout = [ ]; stderr = [ pool:other-config:mail-destination not specified\x0A ] ] Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||4145 ||thread_queue] email message queue: completed processing 1 items: queue = [ ](0) Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12934 /var/lib/xcp/xapi|VDI.generate_config R:29c37d595cb3|audit] VDI.generate_config: VDI = '5ca46fd4-c315-46c9-8272-5192118e33a9'; host = 'ff631fff-1947-4631-a35d-9352204f98d9 (ovbh-pprod-xen10)' Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12934 /var/lib/xcp/xapi|VDI.generate_config R:29c37d595cb3|sm] SM linstor vdi_generate_config sr=OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283 vdi=OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12934 /var/lib/xcp/xapi|sm_exec D:e67ad7661ab5|xapi_session] Session.create trackid=5278325068f2d56e7a6449ad96d024c8 pool=false uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12935 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:88e51aa2b828 created by task D:e67ad7661ab5 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12936 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:host.get_other_config D:54ab4886bd6b created by task R:29c37d595cb3 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12937 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_sm_config D:097aa2e98f20 created by task R:29c37d595cb3 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12940 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.get_by_uuid D:75ce02824999 created by task R:29c37d595cb3 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12941 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.get_sm_config D:06b72052f6e1 created by task R:29c37d595cb3 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12934 /var/lib/xcp/xapi|sm_exec D:e67ad7661ab5|xapi_session] Session.destroy trackid=5278325068f2d56e7a6449ad96d024c8 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12943 /var/lib/xcp/xapi|session.logout D:f00dc0e4dc46|xapi_session] Session.destroy trackid=8ddfe1f61f1f00dc119fa7d8f95c8f60 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis add 5ca46fd4-c315-46c9-8272-5192118e33a9 HA statefile succeeded [ output = '' ] Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12946 /var/lib/xcp/xapi|session.login_with_password D:add1819f775b|xapi_session] Session.create trackid=1e6292226a91eaacb7b5776974b6de3b pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12947 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:c9ab7c15ce3a created by task D:add1819f775b Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12954 /var/lib/xcp/xapi|session.login_with_password D:eeb1f9d34c85|xapi_session] Session.create trackid=f06081966e03b8ea81e41bb411fc3576 pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12955 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:334fc8bfbd81 created by task D:eeb1f9d34c85 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|audit] Host.call_plugin host = '28ae0fc6-f725-4ebc-bf61-1816f1f60e72 (ovbh-pprod-xen12)'; plugin = 'updater.py'; fn = 'check_update' args = [ 'hidden' ] Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [ info||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xapi_session] Session.create trackid=fa887265fb5b0a83289b5f3bb40d3983 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12962 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:e886ff38b224 created by task R:ccb65336b597 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xmlrpc_client] stunnel pid: 4621 (cached = true) connected to 10.2.0.21:443 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xmlrpc_client] with_recorded_stunnelpid task_opt=OpaqueRef:ccb65336-b597-49c1-9545-e278e9a59518 s_pid=4621 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xmlrpc_client] stunnel pid: 4621 (cached = true) returned stunnel to cache Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [ info||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xapi_session] Session.destroy trackid=fa887265fb5b0a83289b5f3bb40d3983 Jul 26 16:37:13 ovbh-pprod-xen10 xapi: [debug||818 :::80||dummytaskhelper] task dispatch:event.from D:375adb402468 created by task D:095c828033c5 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [ info||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xapi_session] Session.create trackid=7846fad60d9b0bef8c545406123f6ab1 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12966 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:e0ba66373f7f created by task D:1ead2d4f30f6 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xmlrpc_client] stunnel pid: 4648 (cached = true) connected to 10.2.0.21:443 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xmlrpc_client] with_recorded_stunnelpid task_opt=None s_pid=4648 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xmlrpc_client] stunnel pid: 4648 (cached = true) returned stunnel to cache Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [ info||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xapi_session] Session.destroy trackid=7846fad60d9b0bef8c545406123f6ab1 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [ info||12967 HTTPS 10.2.0.5->:::80|task.create D:f7b8730a55b6|taskhelper] task Xapi#getResource /rrd_updates R:051d27e09482 (uuid:17cac94d-85fd-f9e4-adeb-b129582ebd88) created (trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88) by task D:f7b8730a55b6 Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis attach 5ca46fd4-c315-46c9-8272-5192118e33a9 exited with code 1 [stdout = ''; stderr = 'Traceback (most recent call last):\x0A File "/opt/xensource/bin/static-vdis", line 377, in <module>\x0A path = attach(sys.argv[2])\x0A File "/opt/xensource/bin/static-vdis", line 288, in attach\x0A path = call_backend_attach(driver, config)\x0A File "/opt/xensource/bin/static-vdis", line 226, in call_backend_attach\x0A xmlrpc = xmlrpclib.loads(xml[1])\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 1138, in loads\x0A return u.close(), u.getmethodname()\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 794, in close\x0A raise Fault(**self._stack[0])\x0Axmlrpclib.Fault: <Fault 47: 'The SR is not available [opterr=Unable to attach from config]'>\x0A'] Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Caught exception attaching statefile: INTERNAL_ERROR: [ Subprocess exited with unexpected code 1; stdout = [ ]; stderr = [ Traceback (most recent call last):\x0A File "/opt/xensource/bin/static-vdis", line 377, in <module>\x0A path = attach(sys.argv[2])\x0A File "/opt/xensource/bin/static-vdis", line 288, in attach\x0A path = call_backend_attach(driver, config)\x0A File "/opt/xensource/bin/static-vdis", line 226, in call_backend_attach\x0A xmlrpc = xmlrpclib.loads(xml[1])\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 1138, in loads\x0A return u.close(), u.getmethodname()\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 794, in close\x0A raise Fault(**self._stack[0])\x0Axmlrpclib.Fault: <Fault 47: 'The SR is not available [opterr=Unable to attach from config]'>\x0A ] ] Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|static_vdis] permanent_vdi_detach: vdi = OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070; sr = OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283 Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis detach 5ca46fd4-c315-46c9-8272-5192118e33a9 succeeded [ output = '' ] Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis del 5ca46fd4-c315-46c9-8272-5192118e33a9 succeeded [ output = '' ] Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Caught exception while enabling HA: VDI_NOT_AVAILABLE: [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ] Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] pool.enable_ha R:91a5f9c321fc failed with exception Server_error(VDI_NOT_AVAILABLE, [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ]) Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] Raised Server_error(VDI_NOT_AVAILABLE, [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ]) Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 1/12 xapi Raised at file ocaml/xapi/xapi_ha.ml, line 1196 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 2/12 xapi Called from file ocaml/xapi/xapi_ha.ml, line 1811 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 3/12 xapi Called from file ocaml/xapi/xapi_ha.ml, line 1929 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 4/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 5/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 6/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 7/12 xapi Called from file ocaml/xapi/rbac.ml, line 231 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 8/12 xapi Called from file ocaml/xapi/server_helpers.ml, line 103 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 9/12 xapi Called from file ocaml/xapi/server_helpers.ml, line 121 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 10/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 11/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 12/12 xapi Called from file lib/backtrace.ml, line 177 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|session.logout D:0869db223ebc|xapi_session] Session.destroy trackid=aaadbd98bb0d2da814837704c59da59e Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||cli] Converting exception VDI_NOT_AVAILABLE: [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ] into a CLI response Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] Raised Server_error(VDI_NOT_AVAILABLE, [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ]) Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 1/1 xapi Raised at file (Thread 12893 has no backtrace table. Was with_backtraces called?, line 0 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] -

@Maelstrom96 said in XOSTOR hyperconvergence preview:

However, when attempting to enable pool HA, it's not working correctly with this error :

After reviewing the code, it seems like there's a critical file

/etc/xensource/xhad.confthat is not created when setting up HA and LinstorSR.py expects it. Hopefully that information can help -

Hello,

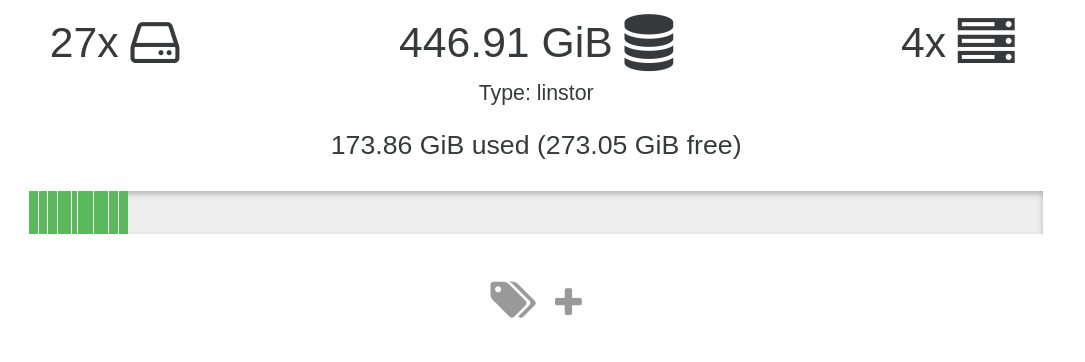

Have been using XOSTOR for a week. On Xen Orchestra the free space and used space calculations are off.

173.86/446.91 = 39%, the green lines at the bottom do not look like they are filling up 39%Also the free/used space in xen are not accurate, when I do

linstor storage-pool listi see a 40GiB used space while Xen Orchestra says 173.86GiB. Note I'm running delta backups on the vms so not sure if this is the cause.╭─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮ ┊ StoragePool ┊ Node ┊ Driver ┊ PoolName ┊ FreeCapacity ┊ TotalCapacity ┊ CanSnapshots ┊ State ┊ SharedName ┊ ╞═════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════╡ ┊ DfltDisklessStorPool ┊ eva ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊ ┊ DfltDisklessStorPool ┊ mike ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊ ┊ DfltDisklessStorPool ┊ ozly ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊ ┊ DfltDisklessStorPool ┊ phoebe ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊ ┊ xcp-sr-linstor_group_thin_device ┊ eva ┊ LVM_THIN ┊ linstor_group/thin_device ┊ 403.47 GiB ┊ 446.91 GiB ┊ True ┊ Ok ┊ ┊ ┊ xcp-sr-linstor_group_thin_device ┊ mike ┊ LVM_THIN ┊ linstor_group/thin_device ┊ 403.47 GiB ┊ 446.91 GiB ┊ True ┊ Ok ┊ ┊ ┊ xcp-sr-linstor_group_thin_device ┊ ozly ┊ LVM_THIN ┊ linstor_group/thin_device ┊ 403.47 GiB ┊ 446.91 GiB ┊ True ┊ Ok ┊ ┊ ┊ xcp-sr-linstor_group_thin_device ┊ phoebe ┊ LVM_THIN ┊ linstor_group/thin_device ┊ 403.38 GiB ┊ 446.91 GiB ┊ True ┊ Ok ┊ ┊ ╰─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯should I only focus on

linstor storage-pool listand ignore the numbers on Xen orchestra?Thank you,

-

@geoffbland said in XOSTOR hyperconvergence preview:

Volumes appear to be OK on 2 hosts and not present on the third - although with rep set as 2 I think that is expected?

Yes, this volume is a diskless, you can have a diskless DRBD in /dev, but no LVM volume is used.

Although when running the lvs command on each host I am getting a lot of warnings about DRDB volumes - these seem to be volumes that were previously deleted but not cleaned up fully:

I will confirm that.

-

@AudleyElwine said in XOSTOR hyperconvergence preview:

Also the free/used space in xen are not accurate, when I do linstor storage-pool list i see a 40GiB used space while Xen Orchestra says 173.86GiB. Note I'm running delta backups on the vms so not sure if this is the cause.

Yes the computation is incorrect. I fixed our method for the next beta update, the replication count was used in the formula, it's completely wrong. You should have (446.91 - 403.47) GiB instead of 173.86 GiB (so (446.91 - 403.47) * 4).

-

@Maelstrom96 said in XOSTOR hyperconvergence preview:

After reviewing the code, it seems like there's a critical file /etc/xensource/xhad.conf that is not created when setting up HA and LinstorSR.py expects it. Hopefully that information can help

Not exactly the problem. I fixed the issue but not released yet.

You can edit/opt/xensource/sm/LinstorSR.pyand replaceget_host_addressfunction definition on each host:def get_host_addresses(session): addresses = [] hosts = session.xenapi.host.get_all_records() # Instead of: # for record in hosts: # use the new line below: for record in hosts.itervalues(): addresses.append(record['address']) return addressesMust be fixed after that.

I think I will release a new RPM in ~1-2 weeks.

I think I will release a new RPM in ~1-2 weeks. -

Hi,

I restarted one of my vms but I couldint get it up due to this error.

SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Plugin linstor-manager failed], )I really dont know why, I tried my best to debug it but I dont know from where to start.

I did a

grep -r {VID-UUID} /var/log, hopefully there is something here we can debug.Note it was working normally before August 1 3pm in the logs, I cant migrate the data from this XOSTOR to local SR due to another error but I have a backup of the vms.

I'm on the latest version of the xostor;

./xostor --update-onlysays im on the latest version.Thank you

-

I exported a snapshot for the vm so the data is safe. It is just now I'm worried about restarting other nodes on the cluster.

I'm happy to debug it I just need some gudiance as I havent done anything special to the VM since it is creation, so not sure why the VDI error appeared.

-

@ronan-a In my latest test I created a new VM with multiple disks on XOSTOR. This worked OK and I was able to run and access all the disks.

However I then tried to remove this VM. After a long period of nothing happening (other than the spinning icon on the remove button) I get a "operation timed out" error and the VM is now shown as paused again.

vm.delete { "id": "90613dbb-bd40-8082-c227-a318cbdbd01d" } { "call": { "method": "VM.hard_shutdown", "params": [ "OpaqueRef:8aa8abb0-d204-43fd-897f-04425b790e68" ] }, "message": "operation timed out", "name": "TimeoutError", "stack": "TimeoutError: operation timed out at Promise.call (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/timeout.js:11:16) at Xapi.apply (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/index.js:693:37) at Xapi._call (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/limit-concurrency-decorator/src/index.js:85:24) at /opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/index.js:771:21 at loopResolver (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/retry.js:83:46) at Promise._execute (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/debuggability.js:384:9) at Promise._resolveFromExecutor (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:518:18) at new Promise (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:103:10) at loop (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/retry.js:85:22) at retry (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/retry.js:87:10) at Xapi._sessionCall (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/index.js:762:20) at Xapi.call (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/index.js:273:14) at loopResolver (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/retry.js:83:46) at Promise._execute (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/debuggability.js:384:9) at Promise._resolveFromExecutor (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:518:18) at new Promise (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:103:10) at loop (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/retry.js:85:22) at Xapi.retry (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/retry.js:87:10) at Xapi.call (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/promise-toolbox/retry.js:103:18) at Xapi.destroy (/opt/xo/xo-builds/xen-orchestra-202206111352/@xen-orchestra/xapi/vm.js:361:18) at Api.callApiMethod (file:///opt/xo/xo-builds/xen-orchestra-202206111352/packages/xo-server/src/xo-mixins/api.mjs:310:20)" }If I try to delete again, the same thing happens.

All the volumes used by this VM still exist on linstor and linstor shows no errors.

Now when I try to create any new VM, this now also fails with the following error:

vm.create { "clone": true, "existingDisks": {}, "installation": { "method": "cdrom", "repository": "16ead07f-2f23-438f-9010-6f1e6c847e2c" }, "name_label": "testx", "template": "d276dc0c-3870-2b7e-64c2-b612bb856227-2cf37285-57bc-4633-a24f-0c6c825dda66", "VDIs": [ { "bootable": true, "device": "0", "size": 23622320128, "type": "system", "SR": "141d63f6-d3ed-4a2f-588a-1835f0cea588", "name_description": "testx_vdi", "name_label": "testx_xostor_vdi" } ], "VIFs": [ { "network": "965db545-28a2-5daf-1c90-0ae9a7882bc1", "allowedIpv4Addresses": [], "allowedIpv6Addresses": [] } ], "CPUs": "4", "cpusMax": 4, "cpuWeight": null, "cpuCap": null, "name_description": "testx", "memory": 4294967296, "bootAfterCreate": true, "copyHostBiosStrings": false, "secureBoot": false, "share": false, "coreOs": false, "tags": [], "hvmBootFirmware": "bios" } { "code": "SR_BACKEND_FAILURE_78", "params": [ "", "VDI Creation failed [opterr=error Invalid path, current=/dev/drbd1031, expected=/dev/drbd/by-res/xcp-volume-cc55faf8-84a0-431c-a2dc-a618d70e2c49/0 (realpath=/dev/drbd/by-res/xcp-volume-cc55faf8-84a0-431c-a2dc-a618d70e2c49/0)]", "" ], "call": { "method": "VDI.create", "params": [ { "name_description": "testx_vdi", "name_label": "testx_xostor_vdi", "other_config": {}, "read_only": false, "sharable": false, "SR": "OpaqueRef:7709e595-7889-4cf1-8980-c04bd145d296", "type": "user", "virtual_size": 23622320128 } ] }, "message": "SR_BACKEND_FAILURE_78(, VDI Creation failed [opterr=error Invalid path, current=/dev/drbd1031, expected=/dev/drbd/by-res/xcp-volume-cc55faf8-84a0-431c-a2dc-a618d70e2c49/0 (realpath=/dev/drbd/by-res/xcp-volume-cc55faf8-84a0-431c-a2dc-a618d70e2c49/0)], )", "name": "XapiError", "stack": "XapiError: SR_BACKEND_FAILURE_78(, VDI Creation failed [opterr=error Invalid path, current=/dev/drbd1031, expected=/dev/drbd/by-res/xcp-volume-cc55faf8-84a0-431c-a2dc-a618d70e2c49/0 (realpath=/dev/drbd/by-res/xcp-volume-cc55faf8-84a0-431c-a2dc-a618d70e2c49/0)], ) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/_XapiError.js:16:12) at /opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/transports/json-rpc.js:37:27 at AsyncResource.runInAsyncScope (async_hooks.js:197:9) at cb (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (internal/timers.js:464:21) at process.callbackTrampoline (internal/async_hooks.js:130:17)" }Note

/dev/drbd1031does not exist in/dev/drdbor as a volume.How do I remove the test VM? How to fix the issue with creating new VMs?

-

I have been attempting to use xostore with k8s linstor csi for storage class

on the host on port 3370, it returns the following via via http

API Documentation

As LINBIT SDS subscriber you can install the UI by running apt install linstor-gui or dnf install linstor-gui.Using this guide https://linbit.com/blog/linstor-csi-plugin-for-kubernetes/

This is what it is outputting. The port is accessible with telnet from inside the k8s cluster.I0802 17:56:53.196698 1 csi-provisioner.go:121] Version: v2.0.2-0-g0c6347dbf I0802 17:56:53.196816 1 csi-provisioner.go:135] Building kube configs for running in cluster... I0802 17:56:53.221618 1 connection.go:153] Connecting to unix://10.2.0.19:3370 W0802 17:57:03.221861 1 connection.go:172] Still connecting to unix://10.2.0.19:3370 W0802 17:57:13.221702 1 connection.go:172] Still connecting to unix://10.2.0.19:3370 W0802 17:57:23.221915 1 connection.go:172] Still connecting to unix://10.2.0.19:3370 W0802 17:57:33.221860 1 connection.go:172] Still connecting to unix://10.2.0.19:3370 W0802 17:57:43.221871 1 connection.go:172] Still connecting to unix://10.2.0.19:3370 W0802 17:57:53.221866 1 connection.go:172] Still connecting to unix://10.2.0.19:3370 W0802 17:58:03.221822 1 connection.go:172] Still connecting to unix://10.2.0.19:3370 W0802 17:58:13.221863 1 connection.go:172] Still connecting to unix://10.2.0.19:3370Thoughts?

-

Ah, I had the configuration wrong.

What I am currently debugging is

I0802 20:13:51.419681 1 node_register.go:55] Starting Registration Server at: /registration/linstor.csi.linbit.com-reg.sock

I0802 20:13:51.419859 1 node_register.go:64] Registration Server started at: /registration/linstor.csi.linbit.com-reg.sock

I0802 20:13:51.419991 1 node_register.go:86] Skipping healthz server because port set to: 0

I0802 20:13:52.764092 1 main.go:79] Received GetInfo call: &InfoRequest{}

I0802 20:13:52.787102 1 main.go:89] Received NotifyRegistrationStatus call: &RegistrationStatus{PluginRegistered:false,Error:RegisterPlugin error -- plugin registration failed with err: rpc error: code = Unknown desc = failed to retrieve node topology: failed to get storage pools for node: 404 Not Found,}

E0802 20:13:52.787170 1 main.go:91] Registration process failed with error: RegisterPlugin error -- plugin registration failed with err: rpc error: code = Unknown desc = failed to retrieve node topology: failed to get storage pools for node: 404 Not Found, restarting registration container. -

@ronan-a is off this week, but he'll come back next week!

-

@olivierlambert

Not a problem! I am 99% it is an issue with the CSI.I am making progress, the CSI is trying to use k8s nodename, and has no idea what the hostname which is running the VM.

-

OK I figured it out! I made an init container that gets a manually created node label for the node the pod is running on. This value is the bare metal host for that k8s node. The init contianer then takes that value and makes a script wrapper and then calls linstor-csi with the correct values. After making these changes all the linstor csi containers are running with no errors.

Current problem comes from deploying and using storage class. Started with a basic one that failed, and noticed I did not know what the correct

storage_pool_namename was, so went to http://IP:3370/v1/nodes/NODE/storage-pools and http://IP:3370/v1/nodes/NODE to get information.Still troubleshooting, but wanted to provide info.

-

@AudleyElwine It seems a volume is still open but should be removed by the driver. You can check if a process has a fd on it using (on each host!):

cat /sys/kernel/debug/drbd/resources/xcp-volume-38e34dc1-8947-4b3d-af49-fbc5393c7069/volumes/0/openers.Maybe a tapdisk instance or another process. Also can you send me the related SMlog file please?

-

@ronan-a said in XOSTOR hyperconvergence preview:

@geoffbland Thank you for your tests.

Could you send me the other logs (/var/log/SMlog + kern.log + drbd-kern.log please)? Also check if the LVM volumes are reachable with linstor resource list. Also, you can check with lvs command on each host. EIO error is not a nice error to observe.So after analysis, I will patch the driver to log using DRBD

openersfile instead oflsofbecause there is probably a process that prevents tapdisk from opening the volume.@geoffbland said in XOSTOR hyperconvergence preview:

Note /dev/drbd1031 does not exist in /dev/drdb or as a volume.

Regarding this specific issue if you have this resource in the LINSTOR DB, it's really weird.

So can you upload the logs please? (SMlog + linstor files).

So can you upload the logs please? (SMlog + linstor files).How do I remove the test VM? How to fix the issue with creating new VMs?

You can forget the VDI to remove the VM. If you can't remove properly it, you can use

drbdsetup(detach/del-minor) to force destroy the DRBD volume.

Do you always have this issue when you create new VMs?