SMAPIv3: results and analysis

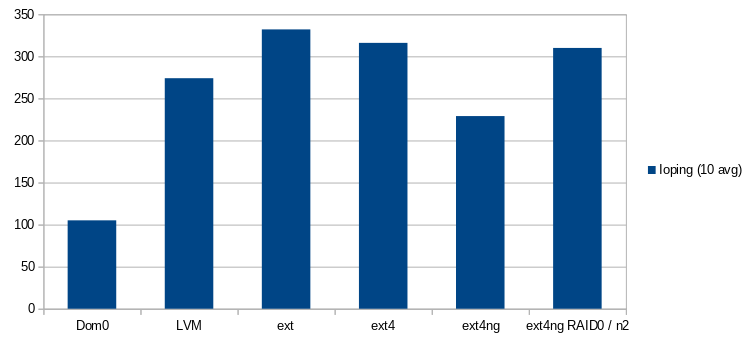

After some investigation, we found that SMAPIv3 is not THE perfect storage interface. Here are some charts to analyze:

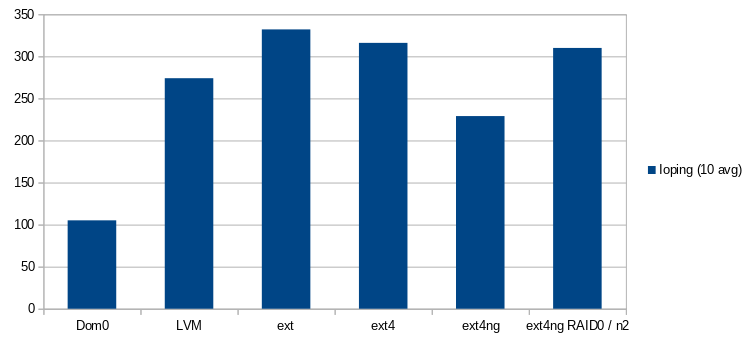

Yeah, there are many storage types:

- lvm, ext (well known)

- ext4 (storage type added to SMAPIv1)

- ext4-ng (a new storage type added to SMAPIv3 for this benchmark, which should be available in the future)

- xfs-ng (same idea but for XFS)

You may notice the use of RAID0 with ext4-ng, but it's not important for the moment.

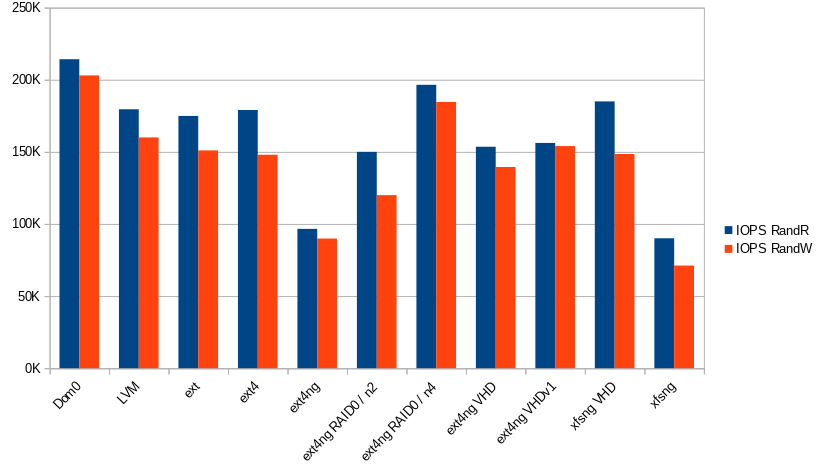

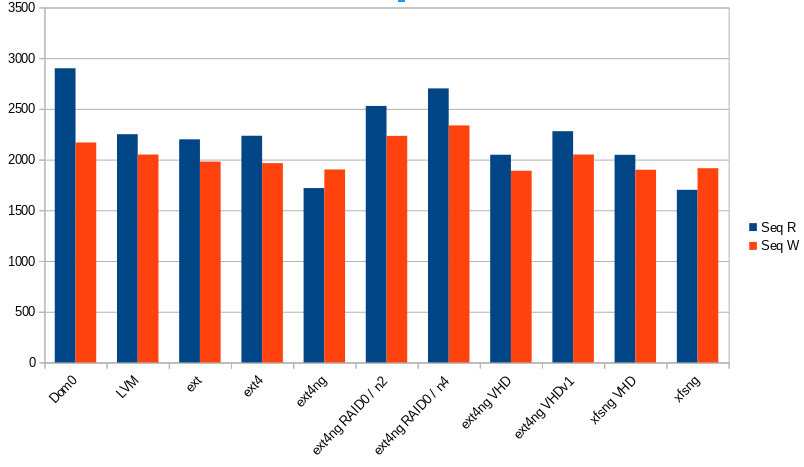

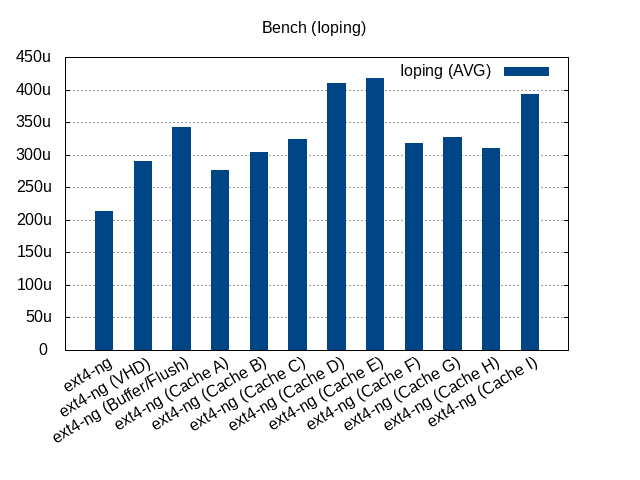

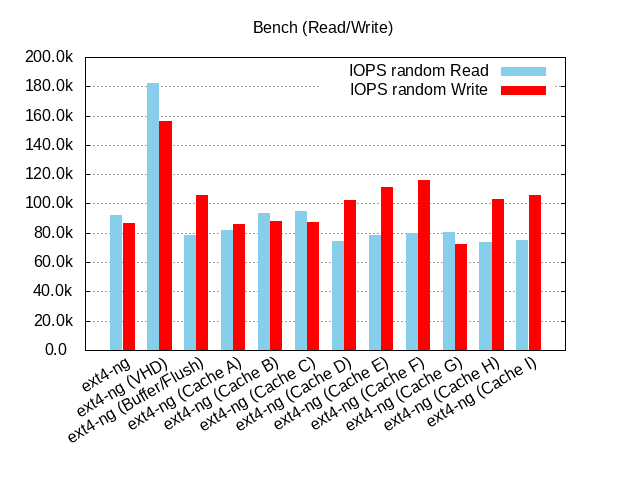

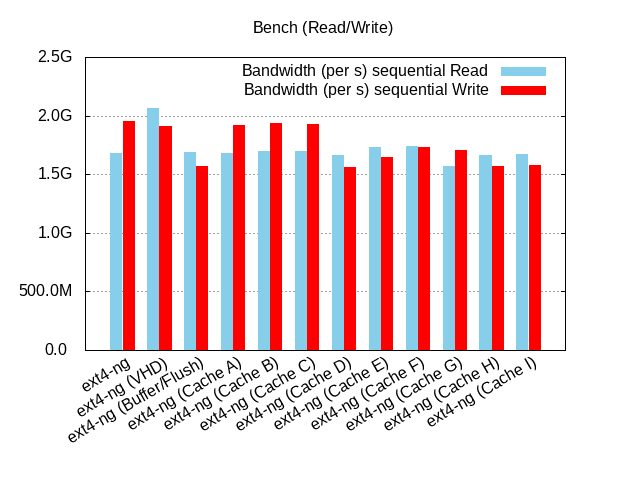

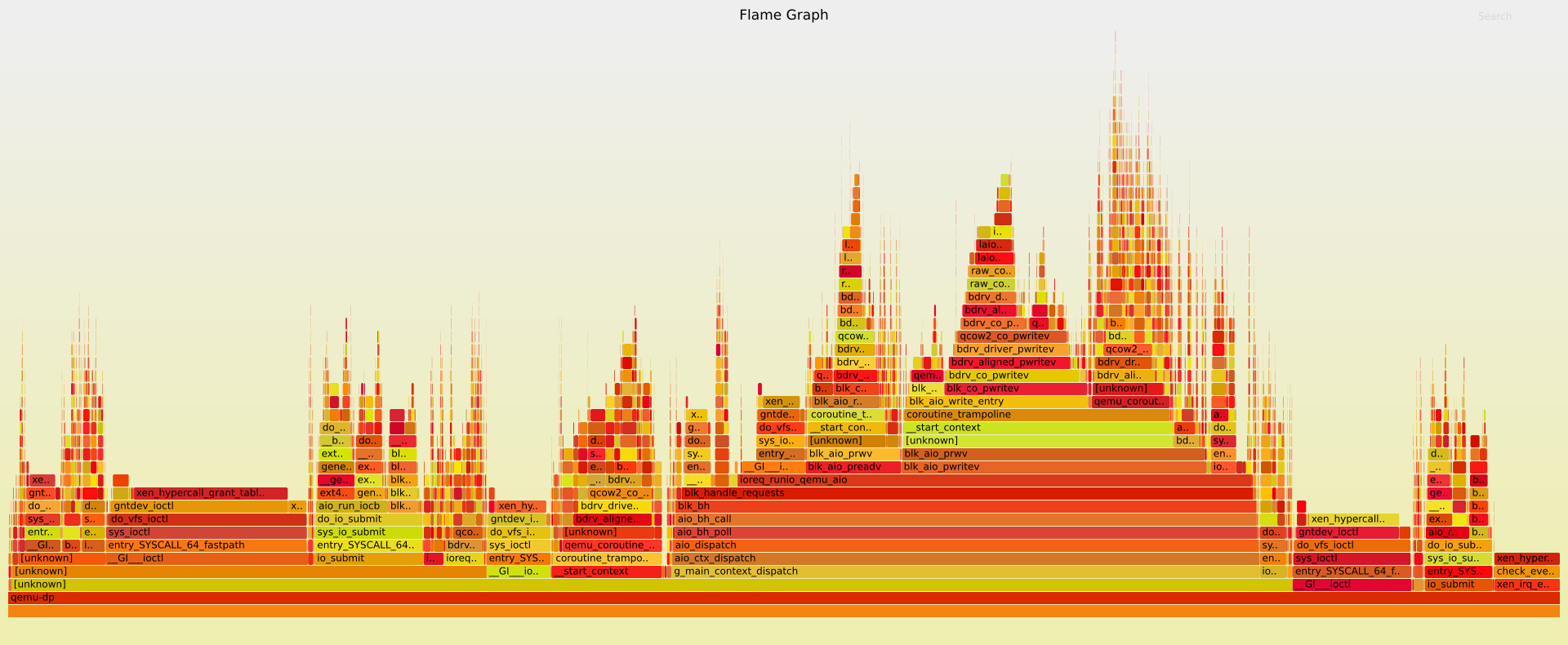

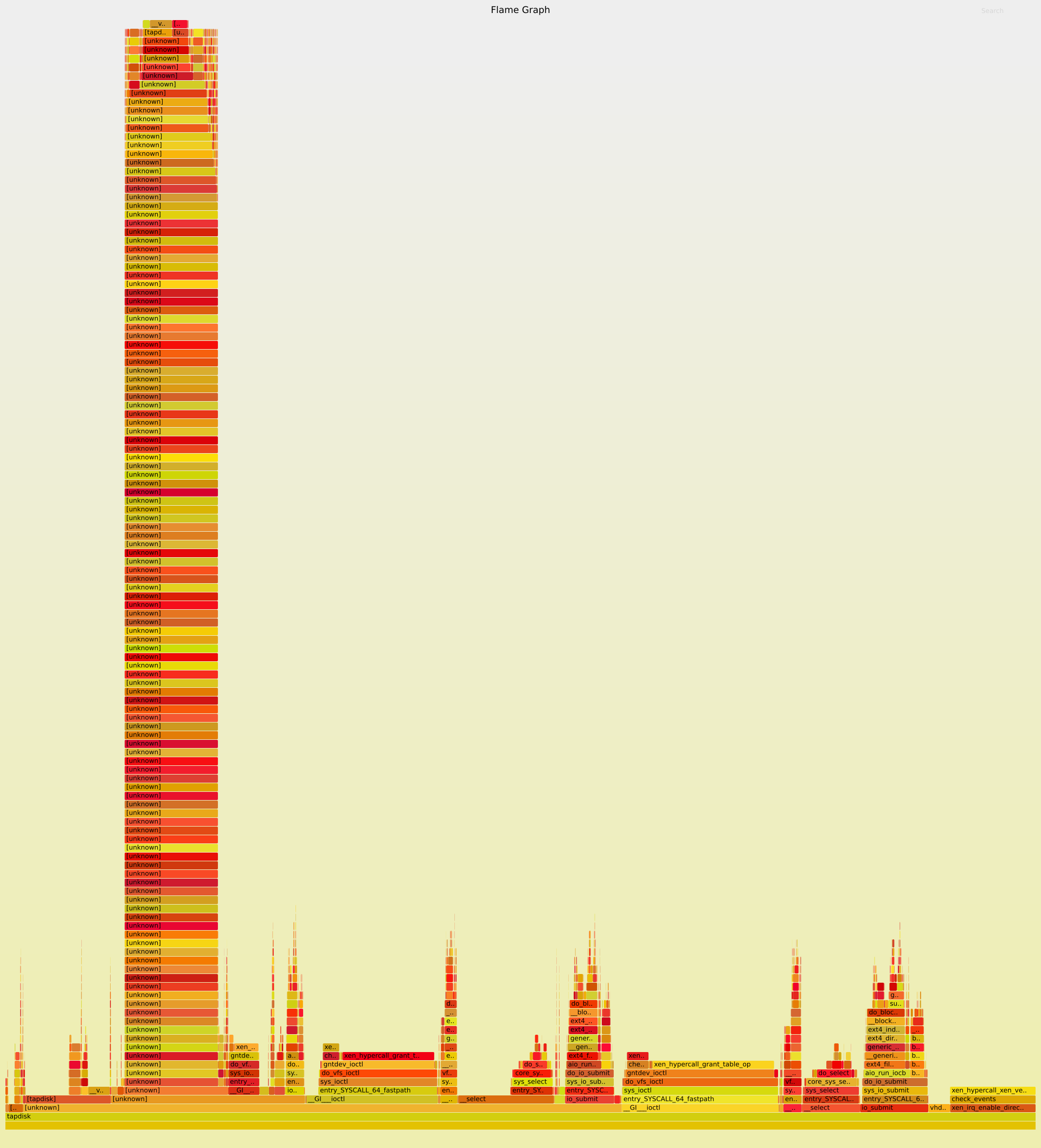

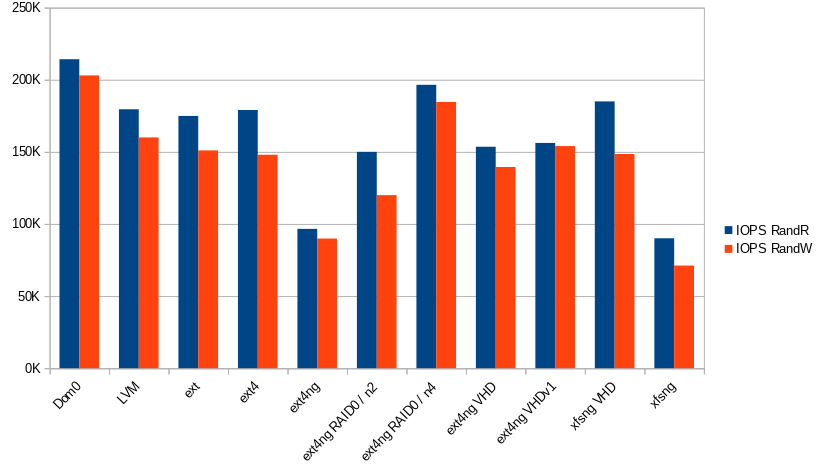

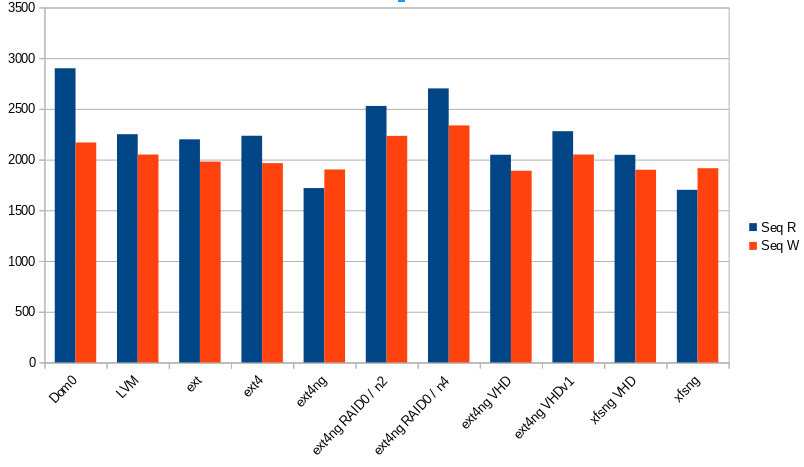

Let's focus on the performance of ext4-ng/xfs-ng! How can we explain these poor results? By default, the SMAPIv3 plugins added by Citrix, like gfs2/filebased, use qemu-dp. It is a fork of QEMU that replaces the tapdisk/VHD environment, with the goal of improving performance and removing some limitations, like the maximum size supported by the VHD format (2TB). QEMU supports QCow images, which break this limitation.

So, the SMAPIv3 performance problem seems related to qemu-dp. And yes... You can see the results of the ext4-ng VHD and ext4-ng VHDv1 plugins, which are very close to the SMAPIv1 measurements:

- The ext4-ng VHDv1 plugin uses the O_DIRECT flag + a timeout like the SMAPIv1 implementation.

- The ext4-ng VHD plugin does not use the O_DIRECT flag.
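To make the difference between the two plugins more concrete, here is a minimal Python sketch of writing a block with and without the O_DIRECT flag. It is not the plugins' actual code: the helper names `open_flags` and `write_block` and the 4096-byte block size are assumptions made for illustration, and the timeout used by the VHDv1 plugin is not modeled.

```python
import mmap
import os

# Assumed block size for the illustration. With O_DIRECT, the buffer address,
# the file offset and the length generally have to be block-aligned.
BLOCK = 4096


def open_flags(direct):
    """Return open(2) flags for writing, with or without O_DIRECT."""
    flags = os.O_WRONLY | os.O_CREAT
    if direct:
        # os.O_DIRECT only exists on platforms that support it (e.g. Linux),
        # so fall back to 0 (no extra flag) elsewhere.
        flags |= getattr(os, "O_DIRECT", 0)
    return flags


def write_block(path, direct):
    """Write one zero-filled block to `path` and return the bytes written."""
    # An anonymous mmap is page-aligned, which satisfies the buffer
    # alignment that O_DIRECT requires.
    buf = mmap.mmap(-1, BLOCK)
    fd = os.open(path, open_flags(direct), 0o600)
    try:
        return os.write(fd, buf)
    finally:
        os.close(fd)
        buf.close()
```

With `direct=True` the write bypasses the page cache and goes to the device, while `direct=False` lets the kernel buffer it. Note that some filesystems refuse O_DIRECT, in which case `os.open` raises `OSError`.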

Next, to check for a potential bottleneck in the qemu-dp process, two RAID0 arrays were set up (one with 2 disks and another with 4), and it is interesting to see good usage of the physical disks! There is one qemu process for each disk in our VM, and the disk usage is similar to the performance observed in the Dom0.

For the future

The SMAPIv3/qemu-dp pair is not entirely a problem:

- Good scaling is visible in the RAID0 benchmark.

- It's easy to add a new storage type to SMAPIv3. (There are two plugin types, Volume and Datapath, which are automatically detected when added to the system. See: https://xapi-project.github.io/xapi-storage/#learn-architecture)

- The QCow2 format is a good alternative to break the size limitation of the VHD images.

- Unlike with qemu-dp, a RAID0 on SMAPIv1 does not improve I/O performance.

Next steps:

- Understand how qemu-dp is called (context, parameters, ...).

- Find the bottleneck in qemu-dp.

- Find a solution to improve the performance.
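As a starting point for the first step, one possible way to see how qemu-dp is called is to read the command line of the running processes from `/proc`. The sketch below is only an illustration: it assumes a Linux `/proc` layout and that the process can be recognized by `qemu-dp` appearing in the basename of its first argument. The function names are hypothetical helpers, not part of any existing tool.

```python
import os


def parse_cmdline(raw):
    """Split the NUL-separated content of /proc/<pid>/cmdline into arguments."""
    return [arg.decode(errors="replace") for arg in raw.split(b"\0") if arg]


def find_processes(name="qemu-dp", proc="/proc"):
    """Return {pid: argv} for processes whose argv[0] basename contains `name`.

    The default name is an assumption about how the binary is called.
    """
    found = {}
    for entry in os.listdir(proc):
        if not entry.isdigit():
            continue  # not a PID directory
        try:
            with open(os.path.join(proc, entry, "cmdline"), "rb") as f:
                argv = parse_cmdline(f.read())
        except OSError:
            continue  # the process exited or is not readable
        if argv and name in os.path.basename(argv[0]):
            found[int(entry)] = argv
    return found
```

Calling `find_processes()` in the Dom0 while a VM is running should then list one entry per matching process, with the parameters it was started with.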

UPDATE AND IMPORTANT INFO
