Hello @ronan-a

I will reproduce the case: I will destroy one hypervisor again and retrigger it.

Thank you @ronan-a and @olivierlambert

If you need me to test some special cases, don't hesitate; we have a pool dedicated to this.

CTO & Associate @Gladhost

Hello, @ronan-a

I will reinstall my hypervisor this week.

I will reproduce it and then resend you the logs.

Have a nice day,

Hello, @DustinB

The page at https://vates.tech/xostor/ says:

The maximum size of any single Virtual Disk Image (VDI) will always be limited by the smallest disk in your cluster.

But in this case, maybe it can be stored on the "2TB disks"? Maybe others can answer; I didn't test it.

This test covers the following scenario:

Impact:

Expected results:

We didn't test filesystems other than XFS for Linux-based operating systems because we only use XFS.

[hdevigne@VM1 ~]$ htop^C

[hdevigne@VM1 ~]$ echo "coucou" > test

-bash: test: Input/output error

[hdevigne@VM1 ~]$ dmesg

-bash: /usr/bin/dmesg: Input/output error

[hdevigne@VM1 ~]$ d^C

[hdevigne@VM1 ~]$ sudo -i

-bash: sudo: command not found

[hdevigne@VM1 ~]$ dm^C

[hdevigne@VM1 ~]$ sudo -i

-bash: sudo: command not found

[hdevigne@VM1 ~]$ dmesg

-bash: /usr/bin/dmesg: Input/output error

[hdevigne@VM1 ~]$ mount

-bash: mount: command not found

[hdevigne@VM1 ~]$ sud o-i

-bash: sud: command not found

[hdevigne@VM1 ~]$ sudo -i

As we predicted, the VM is completely fucked up.

The Windows VM crashes and reboots in a loop.

The LINSTOR controller was on node 1, so we could not see the status of the LINSTOR nodes. We assume they were "disconnected" and "pending eviction", but that doesn't matter much: the disks are read-only and the VMs are broken after writing, which was our expected behavior.

Re-plug node 1 and node 2.

Windows boots normally.

Linux VM stays in a "broken state"

➜ ~ ssh VM1

suConnection closed by UNKNOWN port 65535

We didn't test an outage long enough to reach the eviction state of the LINSTOR nodes, but the documentation shows that restoring a LINSTOR node should work (see https://docs.xcp-ng.org/xostor/#what-to-do-when-a-node-is-in-an-evicted-state).

We didn't use HA in the cluster at this time; it could have helped a bit in the recovery process. However, in a previous experiment that I didn't document like this one, HA was completely down because it was not able to mount a file. I will probably write another topic on the forum to make those results public.

Having HA changes the criticality of the following note.

Thanks to @olivierlambert, @ronan and the other people on the Discord channel for answering daily questions, which made this kind of test possible. As promised, I am putting my results online.

Thanks for XOSTOR.

Further tests to do: retry with HA.

@olivierlambert our OPNsense resets the TCP states, so the firewall blocks the packets because it has forgotten about the TCP session.

And then a timeout occurred in the middle of the export.

Hello @olivierlambert

I confirm my issue came from my firewall, so it is not related to XO.

However, it would be great to make the logs clearer. I mean:

Error: read ETIMEDOUT

becomes

Error: read ETIMEDOUT while connecting to X.X.X.X:ABC

That would make it quicker to understand my "real and weird" issue.
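To illustrate the kind of message I have in mind, here is a minimal sketch in Python. It is not XO code (XO is written in JavaScript); the function name `describe_timeout` and the address `192.0.2.10:443` are hypothetical and only used for the example.

```python
import errno


def describe_timeout(err: OSError, host: str, port: int) -> str:
    """Build a log line that names the remote endpoint next to the error code."""
    # errno.errorcode maps numeric codes to symbolic names such as "ETIMEDOUT".
    code = errno.errorcode.get(err.errno, "UNKNOWN")
    return f"Error: read {code} while connecting to {host}:{port}"


# Hypothetical endpoint, taken from the documentation address range.
example = describe_timeout(OSError(errno.ETIMEDOUT, "timed out"), "192.0.2.10", 443)
print(example)
```

With the endpoint in the message, a firewall dropping one specific connection is much easier to spot.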

Best regards,

Hello @florent ,

You mean "check", right? Because if it doesn't check, the job finishes before the merge, and the merge runs in the background in Xen Orchestra.

Best regards,

Hello, @Pilow

Thanks for the tip!

But it would be great to see it in the Task UI, to find the trick more easily.

Hello @nikade.

I agree, but this is my case.

Trying to migrate a running VM: error 500.

Trying to migrate a halted VM: error 500.

Warm migration: it works.

I don't understand the difference myself, except that it doesn't transfer the "VM" but recreates the VM and imports the VDI (so, the same thing); there may be a slight difference. I don't know how "warm migration" works under the hood.

Hello,

I was able to perform a "warm" migration from either the slave or the master.

Yes, the master was rebooted.

@henri9813 Did you reboot the master after it was updated? If yes, I think you should be able to migrate the VMs back to the master, and then continue patching the rest of the hosts.

No, I wanted to migrate the VMs back from the master (updated) to the slave (not updated), but it wasn't working.

Only warm migration works.

Hello, @Danp

My pool is being upgraded; not all nodes are updated yet.

I tried both:

Thanks !

I have an updated pool.

I'm evacuating a host to another pool so that I can update it.

But the operation fails and remains in the XO tasks:

VM metadata import (on new-hypervisor) 0%

and here is the detail of the vm.migrate API call:

vm.migrate

{

"vm": "30bb4942-c7fd-a3b3-2690-ae6152d272c5",

"mapVifsNetworks": {

"7e1ad49f-d4df-d9d7-2a74-0d00486ae5ff": "b3204067-a3fd-bd19-7214-7856e637d076"

},

"migrationNetwork": "e31e7aea-37de-2819-83fe-01bd33509855",

"sr": "3070cc36-b869-a51f-38ee-bd5de5e4cb6c",

"targetHost": "36a07da2-7493-454d-836d-df8ada5b958f"

}

{

"code": "INTERNAL_ERROR",

"params": [

"Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\")"

],

"task": {

"uuid": "9c87e615-5dca-c714-0c55-5da571ad8fa5",

"name_label": "Async.VM.assert_can_migrate",

"name_description": "",

"allowed_operations": [],

"current_operations": {},

"created": "20260131T08:16:14Z",

"finished": "20260131T08:16:14Z",

"status": "failure",

"resident_on": "OpaqueRef:37858c1b-fa8c-5733-ed66-dcd4fc7ae88c",

"progress": 1,

"type": "<none/>",

"result": "",

"error_info": [

"INTERNAL_ERROR",

"Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\")"

],

"other_config": {},

"subtask_of": "OpaqueRef:NULL",

"subtasks": [],

"backtrace": "(((process xapi)(filename ocaml/libs/http-lib/http_client.ml)(line 215))((process xapi)(filename ocaml/libs/http-lib/http_client.ml)(line 228))((process xapi)(filename ocaml/libs/http-lib/xmlrpc_client.ml)(line 375))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/importexport.ml)(line 313))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1920))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2551))((process xapi)(filename ocaml/xapi/rbac.ml)(line 229))((process xapi)(filename ocaml/xapi/rbac.ml)(line 239))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 78)))"

},

"message": "INTERNAL_ERROR(Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\"))",

"name": "XapiError",

"stack": "XapiError: INTERNAL_ERROR(Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\"))

at XapiError.wrap (file:///etc/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12)

at default (file:///etc/xen-orchestra/packages/xen-api/_getTaskResult.mjs:13:29)

at Xapi._addRecordToCache (file:///etc/xen-orchestra/packages/xen-api/index.mjs:1078:24)

at file:///etc/xen-orchestra/packages/xen-api/index.mjs:1112:14

at Array.forEach (<anonymous>)

at Xapi._processEvents (file:///etc/xen-orchestra/packages/xen-api/index.mjs:1102:12)

at Xapi._watchEvents (file:///etc/xen-orchestra/packages/xen-api/index.mjs:1275:14)"

}

My XO is up to date.

I have already updated the master; I'm now processing the slave.

But neither the master (updated) nor the slave (not updated) can migrate VMs to an updated pool.

Do you have any idea?
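For anyone reading the dump above, the useful part is buried in the `message` field. Here is a minimal Python sketch that pulls out the HTTP status, method and path from it. The function name `summarize_http_error` is hypothetical, and the regular expressions only assume the text layout seen in this one error, not a documented XAPI format.

```python
import re

# The "message" field from the error above, after JSON decoding.
SAMPLE = (
    'INTERNAL_ERROR(Http_client.Http_error("500", "{ frame = false; method = GET; '
    'uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; '
    'query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; '
    'cookie = [ (value filtered) ]; task = ; '
    'subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; '
    'content-type = ; host = ; user_agent = xapi/25.33; }"))'
)


def summarize_http_error(message: str):
    """Return status, method and path of the failed HTTP call, or None if absent."""
    # The quotes may still be backslash-escaped if the raw JSON text is passed in.
    status = re.search(r'Http_error\(\\?"(\d+)\\?"', message)
    method = re.search(r"method = (\w+);", message)
    uri = re.search(r"uri = ([^;]+);", message)
    if not (status and method and uri):
        return None
    path = uri.group(1).split("?", 1)[0]  # drop the query string
    return {"status": int(status.group(1)), "method": method.group(1), "path": path}


print(summarize_http_error(SAMPLE))
```

Here it shows that the call failing with a 500 is the `GET /export_metadata` request issued during `Async.VM.assert_can_migrate`, before any disk is transferred.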

Hello,

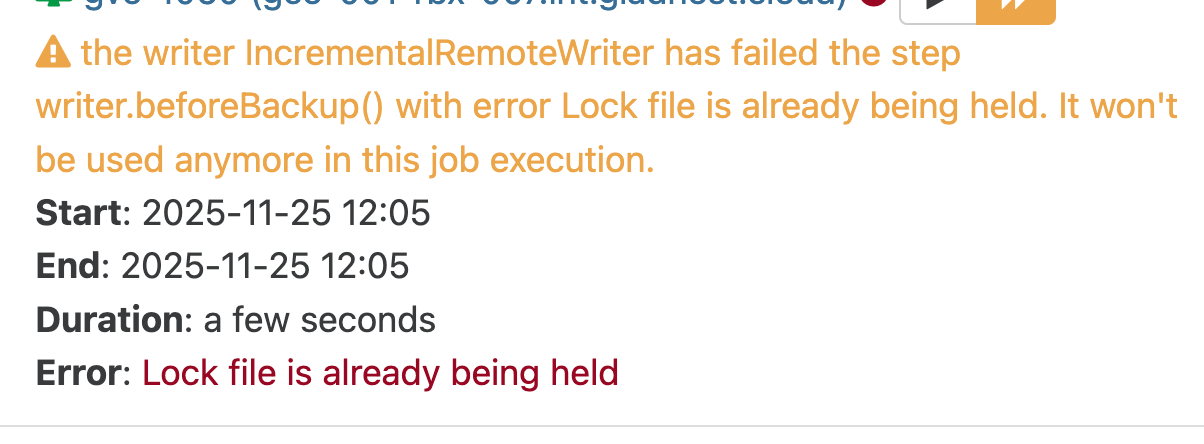

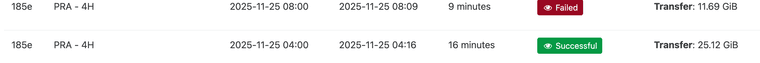

Sometimes I get this on some jobs (which run every 4 hours).

However, the previous job finished successfully.

Could this be related to the backup mergeWorker, which might still be running if it hasn't finished its operations?

Example of logs:

xen-orchestra | 2025-11-27T00:44:43.487Z xo:backups:mergeWorker INFO merge in progress {

xen-orchestra | done: 2057,

xen-orchestra | parent: '/xo-vm-backups/f37e259d-beaa-7617-e6f1-be814f21e056/vdis/29e0185e-2f67-44d4-bb9e-ee2a772e2543/b09c0230-219f-4ddf-8e19-bfed1464014f/20251126T070610Z.vhd',

xen-orchestra | progress: 25,

xen-orchestra | total: 8128

xen-orchestra | }

Is it possible to have this in the XO Tasks section? It would be interesting to see it.
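As a rough illustration of what could be displayed, here is a minimal Python sketch that reads the `done` and `total` counters from the log block above and turns them into a percentage. The function names are hypothetical, and using integer division is my assumption; I don't know how the mergeWorker actually computes `progress`.

```python
import re

# The mergeWorker lines from the log above (parent path omitted, it is not needed here).
LOG = """\
xen-orchestra | 2025-11-27T00:44:43.487Z xo:backups:mergeWorker INFO merge in progress {
xen-orchestra | done: 2057,
xen-orchestra | progress: 25,
xen-orchestra | total: 8128
xen-orchestra | }
"""


def parse_merge_counters(text: str) -> dict:
    """Collect the numeric done/progress/total fields from a mergeWorker log block."""
    return {key: int(value) for key, value in re.findall(r"\b(done|progress|total): (\d+)", text)}


def merge_percent(done: int, total: int) -> int:
    """Percentage of merged blocks, rounded down (assumed, not confirmed)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return done * 100 // total


counters = parse_merge_counters(LOG)
print(merge_percent(counters["done"], counters["total"]))
```

For this log entry the computed value agrees with the logged `progress: 25`.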

Best regards

Hello,

Thanks for your work !

We have some test hypervisors at Gladhost, and we would be glad to use them to test your work on XCP-ng 8.3!

Best regards

Hello,

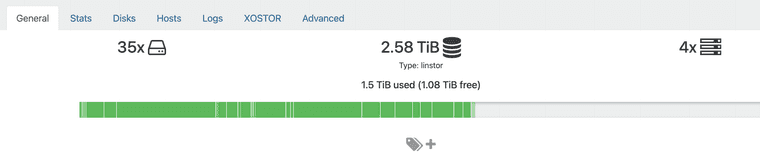

My whole XOSTOR got destroyed, and I don't know precisely how.

I found some errors in the satellite:

Error context:

An error occurred while processing resource 'Node: 'host', Rsc: 'xcp-volume-e011c043-8751-45e6-be06-4ce9f8807cad''

ErrorContext:

Details: Command 'lvcreate --config 'devices { filter=['"'"'a|/dev/md127|'"'"','"'"'a|/dev/md126p3|'"'"','"'"'r|.*|'"'"'] }' --virtualsize 52543488k linstor_primary --thinpool thin_device --name xcp-volume-e011c043-8751-45e6-be06-4ce9f8807cad_00000' returned with exitcode 5.

Standard out:

Error message:

WARNING: Remaining free space in metadata of thin pool linstor_primary/thin_device is too low (98.06% >= 96.30%). Resize is recommended.

Cannot create new thin volume, free space in thin pool linstor_primary/thin_device reached threshold.

Of course, I checked: my SR was not full.
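Note that the lvcreate warning above is about the metadata area of the thin pool, not its data area, so the SR can look far from full while the creation of new thin volumes is still refused. Whether that is the root cause here is not confirmed. A minimal Python sketch to extract the figures from that warning (the function name `parse_thin_metadata_warning` is hypothetical, and the pattern only assumes the wording seen in this log):

```python
import re

# The warning line from the satellite error above.
WARNING = (
    "WARNING: Remaining free space in metadata of thin pool "
    "linstor_primary/thin_device is too low (98.06% >= 96.30%). Resize is recommended."
)

PATTERN = re.compile(
    r"metadata of thin pool (?P<pool>\S+) is too low "
    r"\((?P<used>[\d.]+)% >= (?P<limit>[\d.]+)%\)"
)


def parse_thin_metadata_warning(line: str):
    """Return (pool, metadata usage %, threshold %) or None if the line does not match."""
    match = PATTERN.search(line)
    if match is None:
        return None
    return match.group("pool"), float(match.group("used")), float(match.group("limit"))


print(parse_thin_metadata_warning(WARNING))
```

Here the metadata usage (98.06%) is above the threshold (96.30%) for `linstor_primary/thin_device`.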

Then the controller crashed, and I couldn't get it working again.

Here is the error I got:

==========

Category: RuntimeException

Class name: IllegalStateException

Class canonical name: java.lang.IllegalStateException

Generated at: Method 'newIllegalStateException', Source file 'DataUtils.java', Line #870

Error message: Reading from nio:/var/lib/linstor/linstordb.mv.db failed; file length 2293760 read length 384 at 2445540 [1.4.197/1]

So I deduce the database was corrupted. I tried to open the file as explained in the documentation, but the linstor schema was "not found" in the file, even though I can see data about it when using cat.

For now, I am leaving XOSTOR and going back to local storage until there is a "solution path" for what to do when this issue occurs.