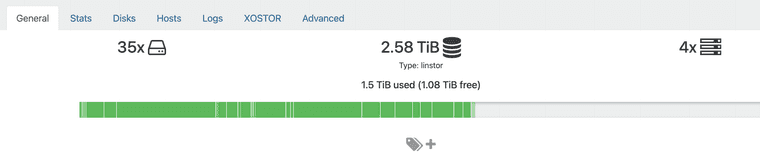

I have a pool that is already updated.

I'm evacuating a host to another pool so that I can update it.

But the operation fails, and this task remains in the XO tasks:

VM metadata import (on new-hypervisor) 0%

And here is the detail of the vm.migrate API call:

vm.migrate

{

"vm": "30bb4942-c7fd-a3b3-2690-ae6152d272c5",

"mapVifsNetworks": {

"7e1ad49f-d4df-d9d7-2a74-0d00486ae5ff": "b3204067-a3fd-bd19-7214-7856e637d076"

},

"migrationNetwork": "e31e7aea-37de-2819-83fe-01bd33509855",

"sr": "3070cc36-b869-a51f-38ee-bd5de5e4cb6c",

"targetHost": "36a07da2-7493-454d-836d-df8ada5b958f"

}

{

"code": "INTERNAL_ERROR",

"params": [

"Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\")"

],

"task": {

"uuid": "9c87e615-5dca-c714-0c55-5da571ad8fa5",

"name_label": "Async.VM.assert_can_migrate",

"name_description": "",

"allowed_operations": [],

"current_operations": {},

"created": "20260131T08:16:14Z",

"finished": "20260131T08:16:14Z",

"status": "failure",

"resident_on": "OpaqueRef:37858c1b-fa8c-5733-ed66-dcd4fc7ae88c",

"progress": 1,

"type": "<none/>",

"result": "",

"error_info": [

"INTERNAL_ERROR",

"Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\")"

],

"other_config": {},

"subtask_of": "OpaqueRef:NULL",

"subtasks": [],

"backtrace": "(((process xapi)(filename ocaml/libs/http-lib/http_client.ml)(line 215))((process xapi)(filename ocaml/libs/http-lib/http_client.ml)(line 228))((process xapi)(filename ocaml/libs/http-lib/xmlrpc_client.ml)(line 375))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/importexport.ml)(line 313))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1920))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2551))((process xapi)(filename ocaml/xapi/rbac.ml)(line 229))((process xapi)(filename ocaml/xapi/rbac.ml)(line 239))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 78)))"

},

"message": "INTERNAL_ERROR(Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\"))",

"name": "XapiError",

"stack": "XapiError: INTERNAL_ERROR(Http_client.Http_error(\"500\", \"{ frame = false; method = GET; uri = /export_metadata?export_snapshots=true&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; query = [ ]; content_length = [ ]; transfer encoding = ; version = 1.0; cookie = [ (value filtered) ]; task = ; subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; content-type = ; host = ; user_agent = xapi/25.33; }\"))

at XapiError.wrap (file:///etc/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12)

at default (file:///etc/xen-orchestra/packages/xen-api/_getTaskResult.mjs:13:29)

at Xapi._addRecordToCache (file:///etc/xen-orchestra/packages/xen-api/index.mjs:1078:24)

at file:///etc/xen-orchestra/packages/xen-api/index.mjs:1112:14

at Array.forEach (<anonymous>)

at Xapi._processEvents (file:///etc/xen-orchestra/packages/xen-api/index.mjs:1102:12)

at Xapi._watchEvents (file:///etc/xen-orchestra/packages/xen-api/index.mjs:1275:14)"

}
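To make the repeated error string above easier to read, here is a minimal Python sketch that pulls the HTTP status and the `key = value` fields out of it. The function name `parse_http_error` and the regular expressions are my own illustration, not part of XAPI or Xen Orchestra; the input is the decoded `params[0]` string from the log above.

```python
import re

# Decoded "params[0]" string from the failed vm.migrate call above.
RAW = (
    'Http_client.Http_error("500", "{ frame = false; method = GET; '
    'uri = /export_metadata?export_snapshots=true'
    '&ref=OpaqueRef:75e166f7-5056-a662-f7ff-25c09aee5bec; '
    'query = [ ]; content_length = [ ]; transfer encoding = ; '
    'version = 1.0; cookie = [ (value filtered) ]; task = ; '
    'subtask_of = OpaqueRef:9976b5f2-3381-e79e-a6dd-0c7a20621501; '
    'content-type = ; host = ; user_agent = xapi/25.33; }")'
)


def parse_http_error(message: str) -> dict:
    """Hypothetical helper: extract the status code and request fields."""
    # The status is the first quoted three-digit number after Http_error(
    status = re.search(r'Http_error\("(\d{3})"', message)
    # Each request field is printed as "key = value;" inside the braces
    pairs = re.findall(r'(\w[\w -]*?) = ([^;]*);', message)
    fields = {key: value.strip() for key, value in pairs}
    return {
        "status": int(status.group(1)) if status else None,
        "fields": fields,
    }


info = parse_http_error(RAW)
print(info["status"], info["fields"]["method"], info["fields"]["uri"])
```

Read this way, the failure is an HTTP 500 returned for a GET on `/export_metadata`, requested by `xapi/25.33` as part of the `Async.VM.assert_can_migrate` task.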

My XO is up to date.

I have already updated the master, and I'm now processing the slave.

But neither the master (updated) nor the slave (not updated) can migrate a VM to an updated pool.

Do you have any idea?

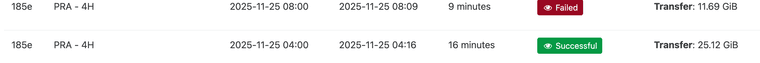

As we predicted, the VM is completely fucked up.