Kubernetes cluster recipes not seeing nodes

-

Thank you.

Are all VMs started?

What's the output of

kubectl get pods --all-namespaces?

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

Are all VMs started?

Yes, all the VMs are up and running

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

What's the output of kubectl get pods --all-namespaces?

    debian@master:~$ kubectl get pods --all-namespaces
    NAMESPACE      NAME                             READY   STATUS    RESTARTS        AGE
    kube-flannel   kube-flannel-ds-mj4n6            1/1     Running   2 (3d ago)      8d
    kube-flannel   kube-flannel-ds-vtd2k            1/1     Running   2 (6d19h ago)   8d
    kube-system    coredns-787d4945fb-85867         1/1     Running   2 (6d19h ago)   8d
    kube-system    coredns-787d4945fb-dn96g         1/1     Running   2 (6d19h ago)   8d
    kube-system    etcd-master                      1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-apiserver-master            1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-controller-manager-master   1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-proxy-fmjnv                 1/1     Running   2 (6d19h ago)   8d
    kube-system    kube-proxy-gxsrs                 1/1     Running   2 (3d ago)      8d
    kube-system    kube-scheduler-master            1/1     Running   2 (6d19h ago)   8d

Thank you very much
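A note on reading this listing: kube-proxy and kube-flannel each run as a DaemonSet (one pod per node), so two pods of each suggests that only two nodes, the master and one worker, have joined the cluster. The sketch below counts running kube-proxy pods from that same output; the function name `count_kube_proxy` is hypothetical, and the count is only a rough proxy for what `kubectl get nodes` reports.

```shell
#!/usr/bin/env bash
# Count Running kube-proxy pods in `kubectl get pods --all-namespaces`
# output read from stdin. Columns: NAMESPACE NAME READY STATUS ...
# (count_kube_proxy is a hypothetical helper name, not part of kubectl.)
count_kube_proxy() {
  awk '$2 ~ /^kube-proxy-/ && $4 == "Running" { n++ } END { print n + 0 }'
}

# Usage on the master (assumes kubectl is configured for this cluster):
#   kubectl get pods --all-namespaces | count_kube_proxy
#   kubectl get nodes -o wide    # authoritative list of registered nodes
```

If the count is lower than the number of VMs the recipe created, the missing workers most likely never completed their join step.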

-

@GabrielG Do you think I should delete all the VMs and rerun the deploy recipe? Also, is it normal that I no longer have the option to set a network CIDR like before?

-

You can do that, but it won't help us understand what went wrong during the installation of worker nodes 1 and 3.

Can you show me the output of

sudo cat /var/log/messages

for each node (master and workers)?

Concerning the CIDR, we are now using flannel as the Container Network Interface, which uses a default CIDR (10.244.0.0/16) allocated to the pod network.
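To confirm which pod CIDR flannel is actually using, one option is to read it from flannel's configuration. This is a minimal sketch that assumes the stock flannel manifest (a ConfigMap named `kube-flannel-cfg` in the `kube-flannel` namespace, with a `net-conf.json` key); the recipe may deploy it differently, and `flannel_network` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Extract the "Network" value from flannel's net-conf.json read on stdin.
# (flannel_network is a hypothetical helper, not a flannel or kubectl command.)
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage on the master (ConfigMap name and namespace are assumptions based on
# the upstream flannel manifest):
#   kubectl -n kube-flannel get configmap kube-flannel-cfg \
#     -o jsonpath='{.data.net-conf\.json}' | flannel_network
```

With the default described above, this should print 10.244.0.0/16.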

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

Can you show me what's the output of sudo cat /var/log/messages for each node (master and workers)?

From the master:

    debian@master:~$ sudo cat /var/log/messages
    Mar 26 00:10:18 master rsyslogd: [origin software="rsyslogd" swVersion="8.2102.0" x-pid="572" x-info="https://www.rsyslog.com"] rsyslogd was HUPed

From node1:

https://pastebin.com/xrqPd88V

From node2:

https://pastebin.com/aJch3diH

From node3:

https://pastebin.com/Zc1y42NA

-

Thank you, I'll take a look tomorrow.

Is it the whole output for the master?

-

@GabrielG yes, all of it

-

@GabrielG did you get a chance to look at the log I provided? Any clues?

-

Hi,

Nothing useful. Maybe you can try to delete the VMs and redeploy the cluster.

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

Nothing useful. Maybe you can try to delete the VMs and redeploy the cluster.

OK, I will do that. While I redeploy the cluster, what should I be looking for? Which logs should I monitor?

-

I'd say any error in the console during the cloud-init installation.
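Besides watching the console, the cloud-init logs inside each VM can be searched after the fact. The sketch below is one way to do that, assuming the Debian image writes the usual `/var/log/cloud-init.log` and `/var/log/cloud-init-output.log` files; the keyword list and the helper name `cloud_init_errors` are illustrative choices, not something the recipe defines.

```shell
#!/usr/bin/env bash
# Print lines from stdin that look like failures, prefixed with line numbers.
# The keyword list is a guess at what is worth a look; adjust as needed.
cloud_init_errors() {
  grep -n -i -E 'error|fail|traceback|warn' || true
}

# Usage inside a VM created by the recipe:
#   cloud-init status --long
#   sudo cat /var/log/cloud-init-output.log | cloud_init_errors
#   sudo cat /var/log/cloud-init.log | cloud_init_errors
```

No output means none of the keywords matched, which does not by itself prove that the installation succeeded.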

-

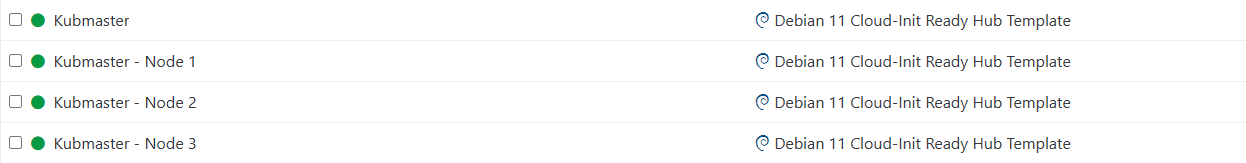

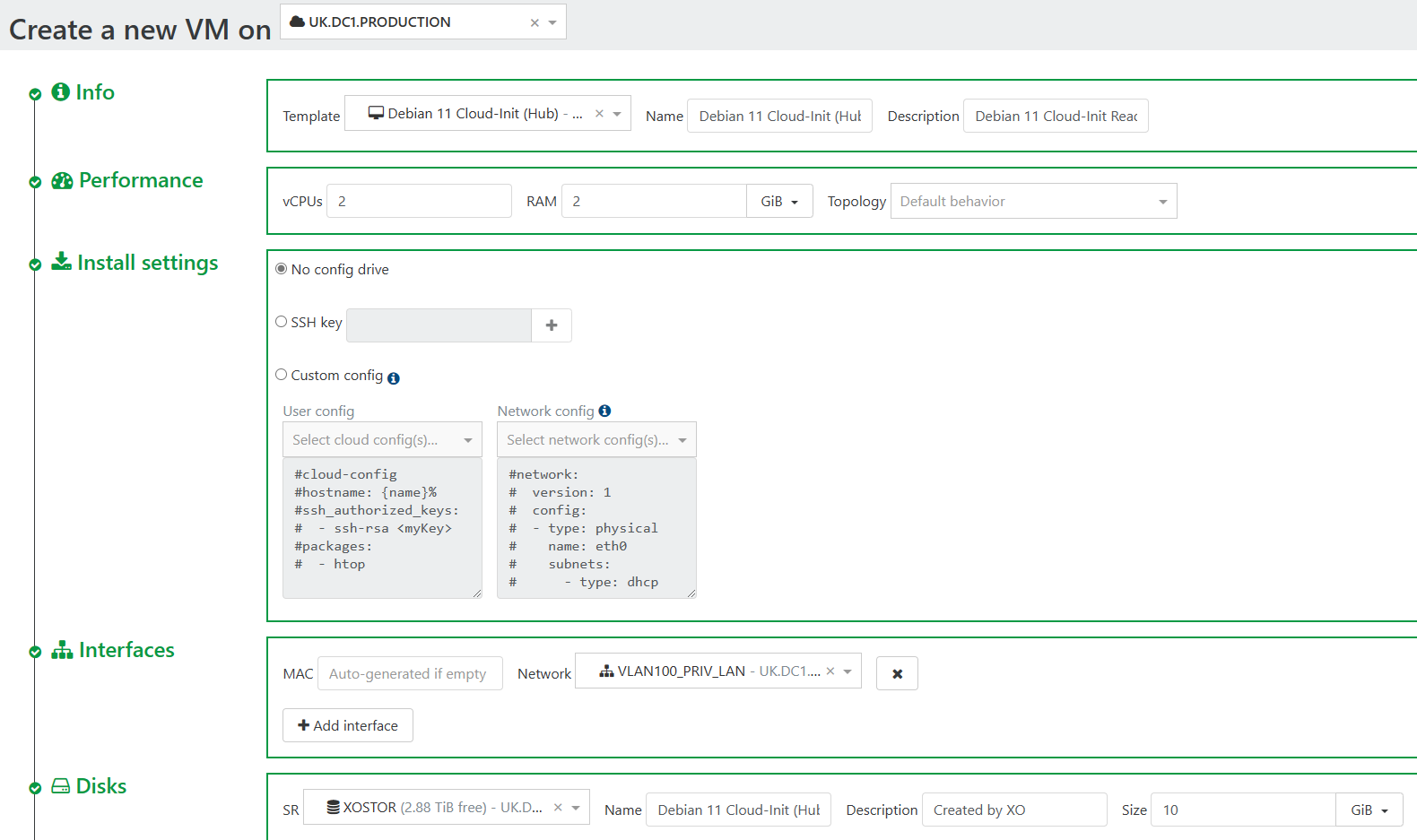

@GabrielG I deleted the VMs and redeployed the cluster with 3 nodes.

So far only the master VM has been created and nothing else. The 3 nodes are missing.

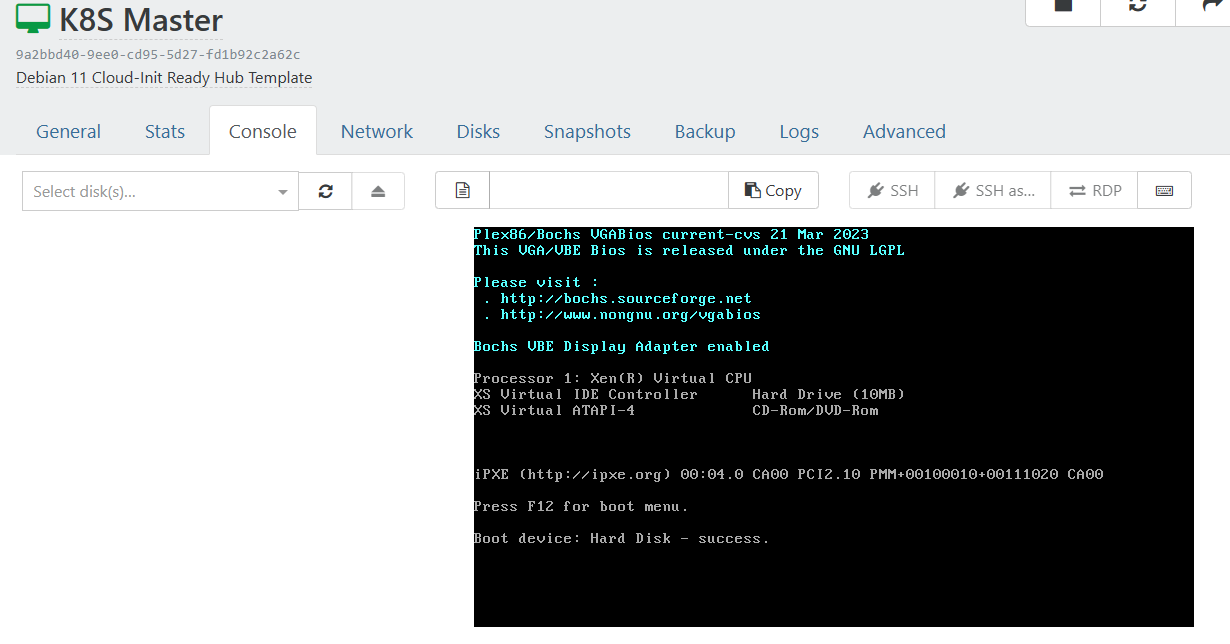

When I look at the console of the master VM, all I get is this:

So the master VM is created but nothing has been deployed

I see no errors in the Xen Orchestra UI or logs.

-

The cloud-init installation comes after the step on your screenshot.

Are the 3 node VMs started? Can you post the output of

sudo cat /var/log/messages?

-

@GabrielG said in Kubernetes cluster recipes not seeing nodes:

Are the 3 nodes VMs started? Can you post the output of sudo cat /var/log/messages?

No nodes came up. Do you need the message log from all the hosts?

-

Yes please.

-

@GabrielG I just cleared all the /var/log/messages logs on the hosts and Xen Orchestra and started again:

Host1: /var/log/messages is empty

Host2: /var/log/messages is empty

Host3: /var/log/messages is empty

Host4: /var/log/messages is empty

XOA: /var/log/messages has the following message repeated hundreds of times:

    Apr 4 07:00:40 xoa kernel: [56429.938470] [UFW BLOCK] IN=eth0 OUT= MAC=33:33:00:00:00:01:cc:2d:e0:58:82:9c:86:dd SRC=fe80:0000:0000:0000:ce2d:e0ff:fe58:829c DST=ff02:0000:0000:0000:0000:0000:0000:0001 LEN=205 TC=0 HOPLIMIT=1 FLOWLBL=211291 PROTO=UDP SPT=5678 DPT=5678 LEN=165
    Apr 4 07:01:36 xoa kernel: [56485.646598] [UFW BLOCK] IN=eth0 OUT= MAC=33:33:00:00:00:01:c4:ad:34:4a:8d:38:86:dd SRC=fe80:0000:0000:0000:c6ad:34ff:fe4a:8d38 DST=ff02:0000:0000:0000:0000:0000:0000:0001 LEN=206 TC=0 HOPLIMIT=1 FLOWLBL=467355 PROTO=UDP SPT=5678 DPT=5678 LEN=166

and this message in the midst of them:

    Apr 4 00:10:03 xoa rsyslogd: [origin software="rsyslogd" swVersion="8.2102.0" x-pid="485" x-info="https://www.rsyslog.com"] rsyslogd was HUPed

As before, the master VM is created but nothing more.

No nodes are created and the recipes page just has a spinning wheel with no error message.
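The repeated UFW BLOCK entries are the firewall dropping IPv6 multicast packets to ff02::1 on UDP port 5678, which looks unrelated to the recipe. When reading the XOA log, it can help to filter them out so that anything else stands out. A minimal sketch, with a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Drop UFW firewall entries from a syslog stream and print what is left.
# (without_ufw_noise is a hypothetical helper name.)
without_ufw_noise() {
  grep -v -F '[UFW BLOCK]' || true
}

# Usage on the XOA VM:
#   sudo cat /var/log/messages | without_ufw_noise
```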

The XOA web log has the following:

    sr.stats
    {
      "id": "a20ee08c-40d0-9818-084f-282bbca1f217",
      "granularity": "seconds"
    }
    {
      "message": "Cannot read properties of undefined (reading 'statusCode')",
      "name": "TypeError",
      "stack": "TypeError: Cannot read properties of undefined (reading 'statusCode')
        at when (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/index.js:415:41)
        at matchError (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/_matchError.js:17:103)
        at onError (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:64:9)
        at AsyncResource.runInAsyncScope (node:async_hooks:203:9)
        at cb (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/util.js:355:42)
        at tryCatcher (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/util.js:16:23)
        at Promise._settlePromiseFromHandler (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:547:31)
        at Promise._settlePromise (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:604:18)
        at Promise._settlePromise0 (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:649:10)
        at Promise._settlePromises (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:725:18)
        at _drainQueueStep (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:93:12)
        at _drainQueue (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:86:9)
        at Async._drainQueues (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:102:5)
        at Immediate.Async.drainQueues [as _onImmediate] (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:15:14)
        at processImmediate (node:internal/timers:471:21)
        at process.callbackTrampoline (node:internal/async_hooks:130:17)"
    }

I hope this is enough information to debug the issue.

-

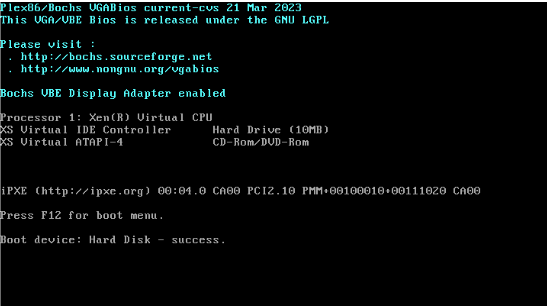

Your first Debian VM isn't booting at all. Are you sure your host is working and supports HVM guests?

Can you boot the Debian 11 template from the XO Hub?

-

@olivierlambert said in Kubernetes cluster recipes not seeing nodes:

Your first Debian VM isn't booting at all. Are you sure your host is working and supports HVM guests?

Yes, the hosts have other VMs running on them. Also, in my original post, both the master and the nodes were created. They simply couldn't see each other.

@olivierlambert said in Kubernetes cluster recipes not seeing nodes:

Can you boot the Debian 11 template from the XO Hub?

Yes, I can deploy Debian 11 from the XO Hub, but it won't stay up. The VM does start but gets stuck during the boot process.

    vm.stats
    {
      "id": "9082c067-e945-0ee0-2aca-4b46ab5c02be"
    }
    {
      "message": "Cannot read properties of undefined (reading 'statusCode')",
      "name": "TypeError",
      "stack": "TypeError: Cannot read properties of undefined (reading 'statusCode')
        at when (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/index.js:415:41)
        at matchError (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/_matchError.js:17:103)
        at onError (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:64:9)
        at AsyncResource.runInAsyncScope (node:async_hooks:203:9)
        at cb (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/util.js:355:42)
        at tryCatcher (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/util.js:16:23)
        at Promise._settlePromiseFromHandler (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:547:31)
        at Promise._settlePromise (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:604:18)
        at Promise._settlePromise0 (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:649:10)
        at Promise._settlePromises (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:725:18)
        at _drainQueueStep (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:93:12)
        at _drainQueue (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:86:9)
        at Async._drainQueues (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:102:5)
        at Immediate.Async.drainQueues [as _onImmediate] (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:15:14)
        at processImmediate (node:internal/timers:471:21)
        at process.callbackTrampoline (node:internal/async_hooks:130:17)"
    }

I removed the template and reinstalled it. This time I successfully managed to deploy Debian 11 from the XO Hub.

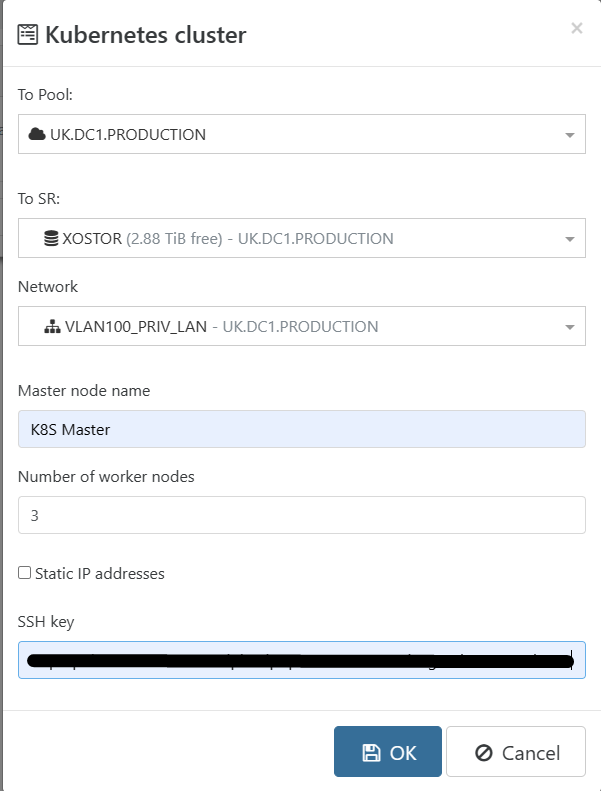

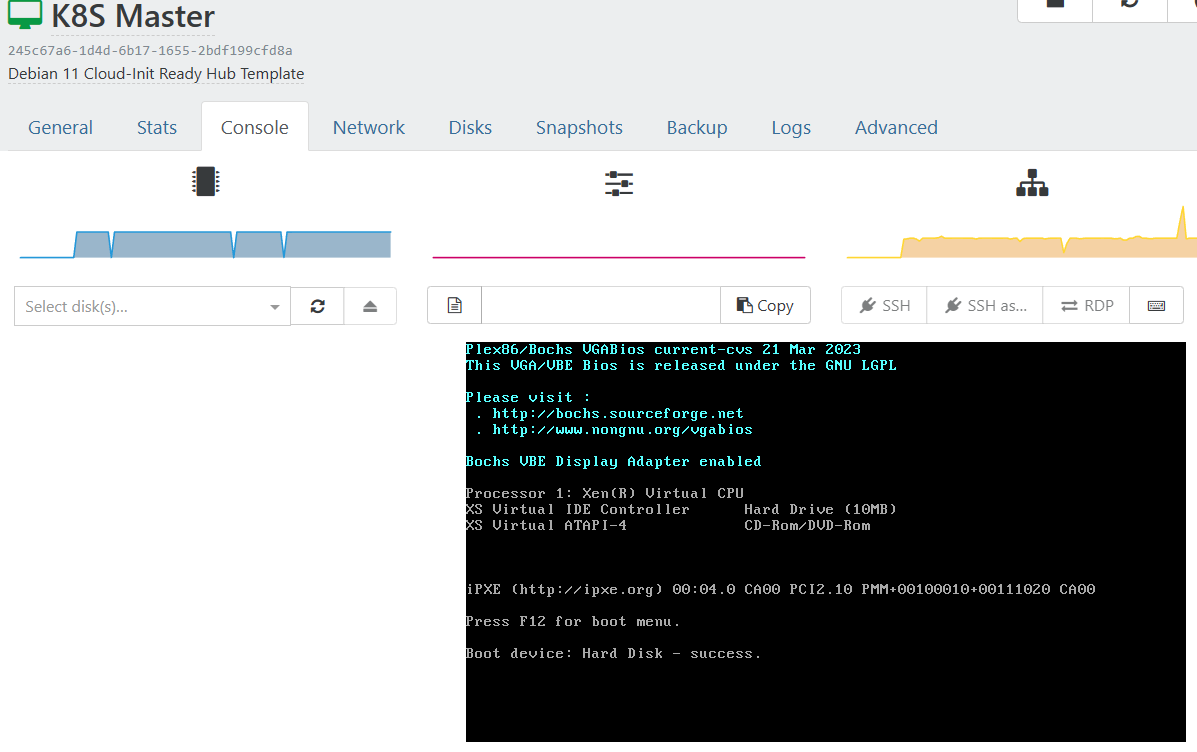

I then tried the Kubernetes recipe again, and this time the master is created but does not power on. Should I start the VM manually?

-

    xoa.recipe.createKubernetesCluster
    {
      "masterName": "K8S Master",
      "nbNodes": 3,
      "network": "37559e16-f83d-b1f4-a1ae-b9c3336dca22",
      "sr": "a20ee08c-40d0-9818-084f-282bbca1f217",
      "sshKey": "ssh-ed25519 AAAAC3NzaC1lZDI1Nhbilj;jojj'hufsdklk,kp;joFWlpk8BlpKpTO3T2R44YHP6b+g Kubernetes cluster"
    }
    {
      "code": "SR_BACKEND_FAILURE_46",
      "params": [
        "",
        "The VDI is not available [opterr=Failed to attach VDI during \"prepare thin\": The VDI is not available [opterr=Plugin linstor-manager failed]]",
        ""
      ],
      "call": {
        "method": "VM.start",
        "params": [
          "OpaqueRef:6ae3475f-7455-4328-904d-168b35b8ce5f",
          false,
          false
        ]
      },
      "message": "SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Failed to attach VDI during \"prepare thin\": The VDI is not available [opterr=Plugin linstor-manager failed]], )",
      "name": "XapiError",
      "stack": "XapiError: SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Failed to attach VDI during \"prepare thin\": The VDI is not available [opterr=Plugin linstor-manager failed]], )
        at Function.wrap (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/_XapiError.js:16:12)
        at /usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/transports/json-rpc.js:35:21"
    }

-

Okay so your problem isn't k8s recipe related, but elsewhere (probably the storage).
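The storage diagnosis follows from the `code` field of the failed `VM.start` call (`SR_BACKEND_FAILURE_46`, raised by the linstor-manager plugin). The sketch below pulls that field out of a saved XO log entry; `xapi_error_code` is a hypothetical helper, and the follow-up commands in the comments are only suggestions that assume the SR is LINSTOR-backed and that the `xe` and `linstor` CLIs are available on the host.

```shell
#!/usr/bin/env bash
# Print the value of the first "code" field found in an XO log entry on stdin.
# (xapi_error_code is a hypothetical helper, not an XO or XAPI tool.)
xapi_error_code() {
  sed -n 's/.*"code"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage (log.json is a saved copy of the XO log entry):
#   xapi_error_code < log.json
#
# Possible next steps on a host, using the SR UUID from the log above:
#   xe sr-list uuid=a20ee08c-40d0-9818-084f-282bbca1f217 params=all
#   linstor node list
#   linstor resource list
```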