After downgrading XCP host from trial, 90% of my VMs are not booting

-

Pretty much the same issue as noted here:

Re: FAILED_TO_START_EMULATOR

I restarted the Xen toolstack and rebooted the host; however, my systems were always in BIOS mode.

The VMs that use local storage boot fine. Any VM on the other 2 local SRs does not. They are all Linux VMs, and all had tools installed.

error is

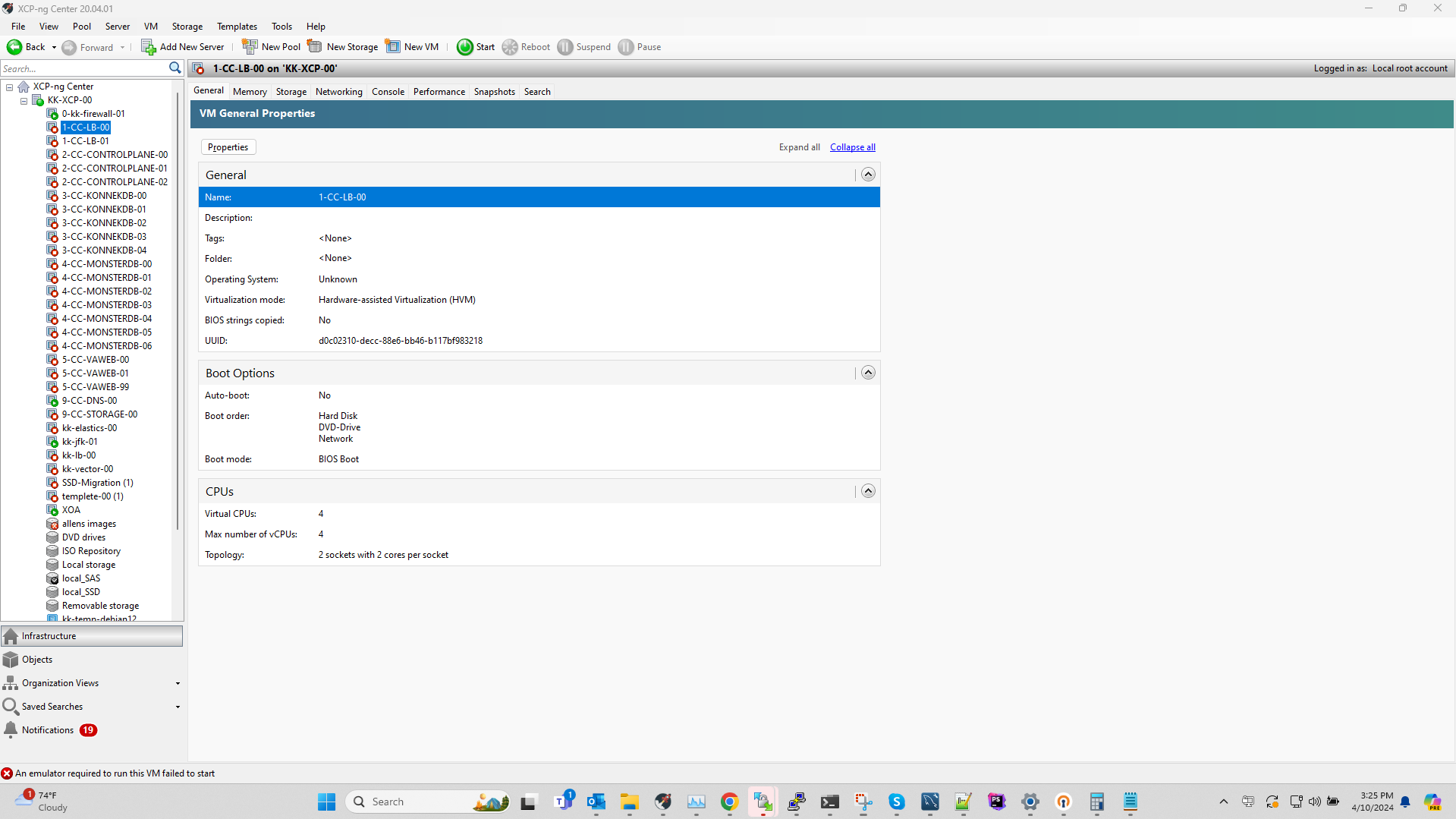

xencenter

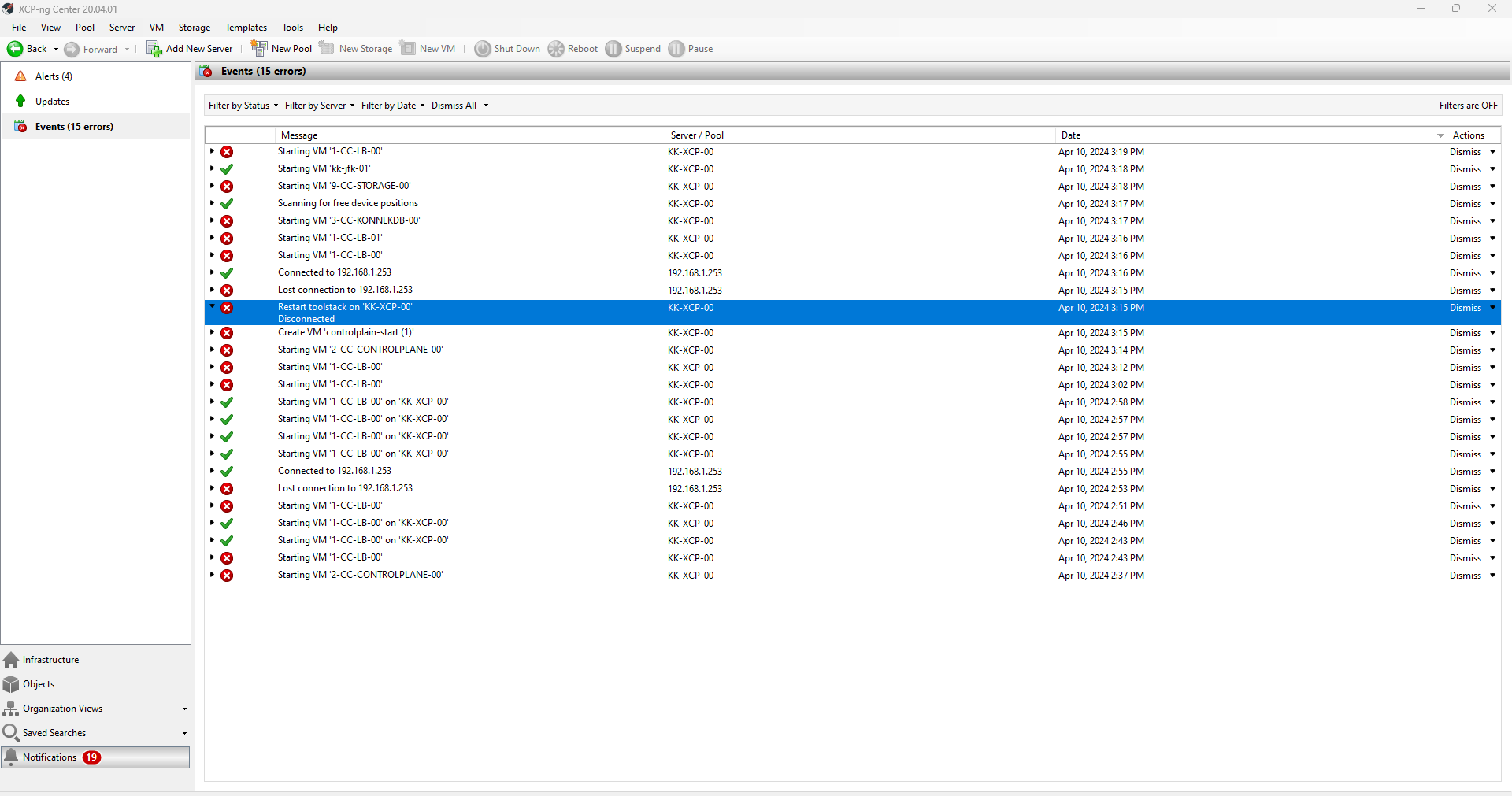

"Failed","Starting VM '1-CC-LB-00'

An emulator required to run this VM failed to start

Time: 00:00:05","KK-XCP-00","Apr 10, 2024 3:02 PM"

XOA

FAILED_TO_START_EMULATOR(OpaqueRef:4ac2e5a9-264d-4739-a52b-2fe7aca867e6, varstored, Daemon exited unexpectedly)

-

It would likely help if you provided some additional details, such as --

- You mentioned a trial. Did you mean the 8.3 beta?

- What are the exact steps you performed to downgrade your host?

- Are you now running v8.2.1? Fully patched?

- Is this the pool master?

- You mentioned "other 2 local SRs". Can you tell us more about them?

Does restarting the toolstack fix the issue, even temporarily?

-

-

@Danp

I did not know there was more than 1 trial.

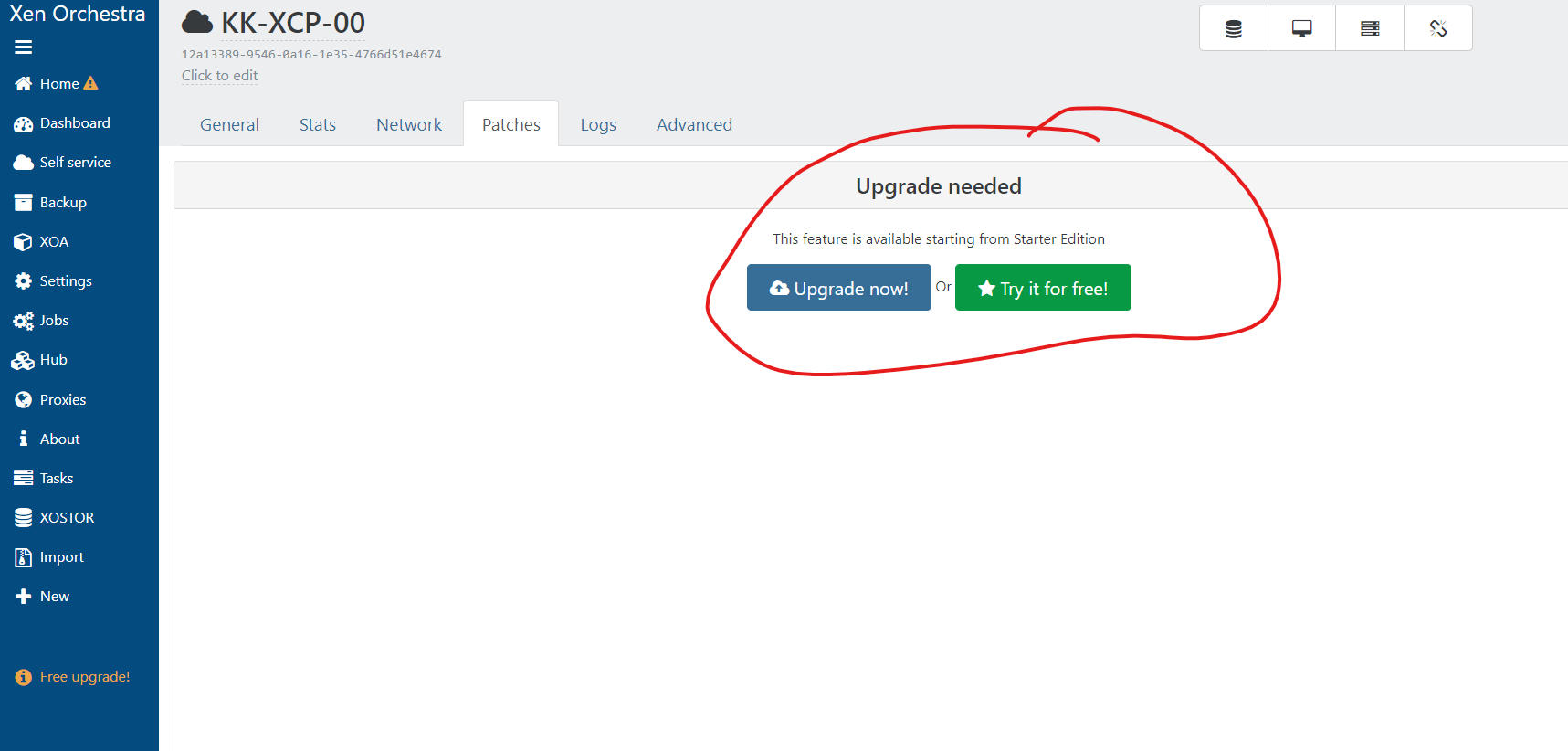

You all have "please try for free" listed everywhere in XOA.

It only lasts 15 days, then downgrades everything. It all worked till I rebooted the VMs.

-

No, restarting the toolstack or the server does not work.

There is only 1 host.

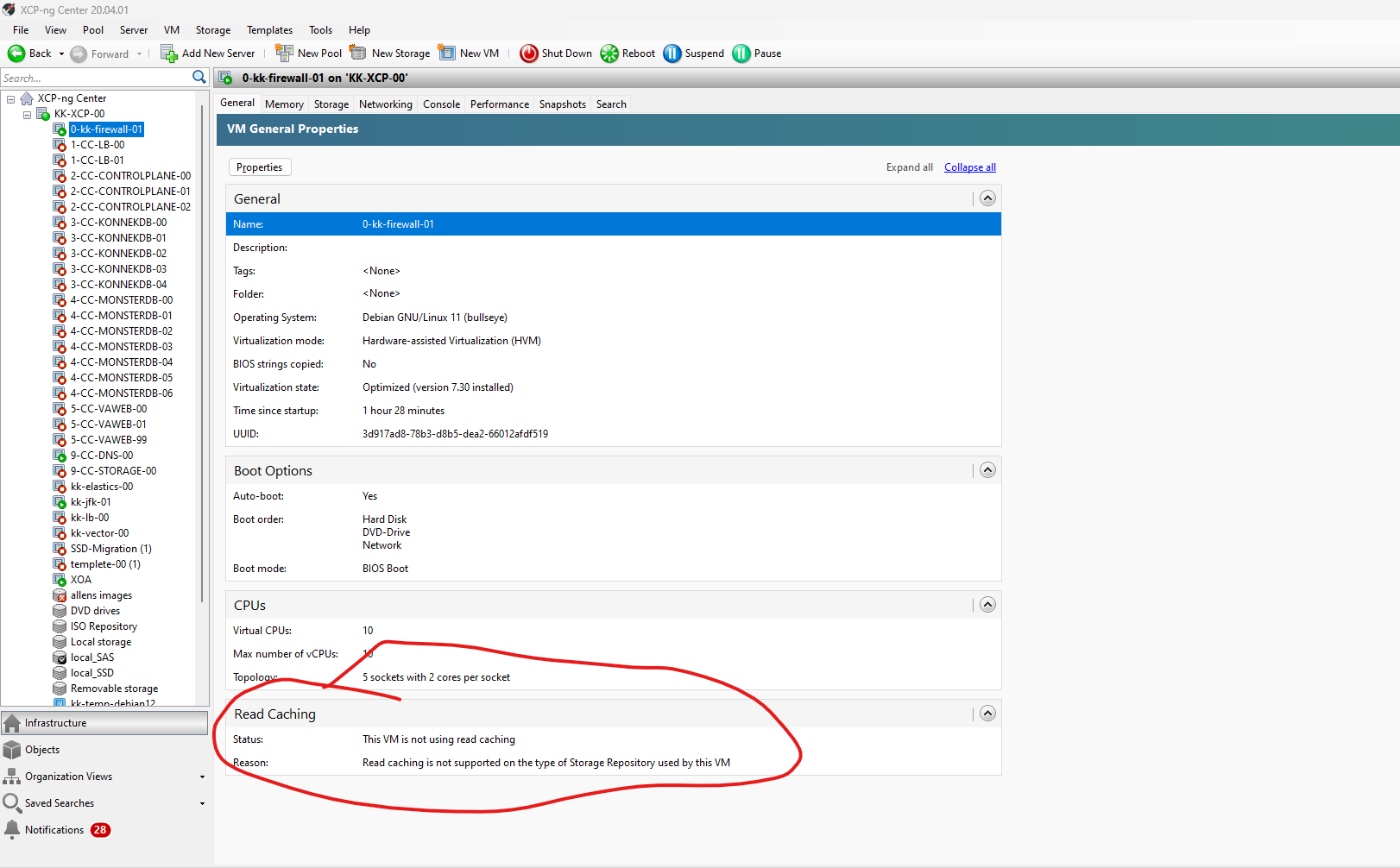

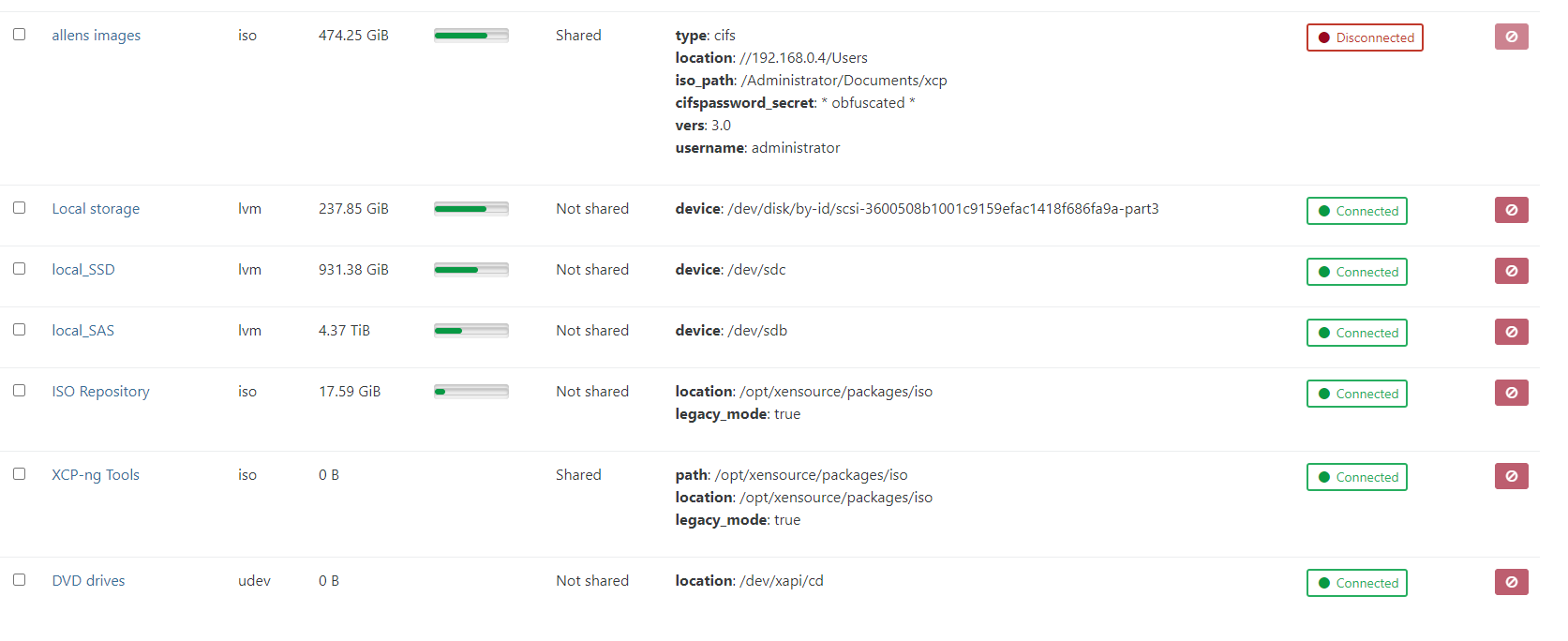

2 SRs are local: 1 is SAS drives in RAID 0, and the other is SAS SSDs in RAID 0. The only one that works is the RAID 1 local drive. I also see it has a read cache option that the others do not have.

The steps I took to downgrade were what it asked me to do on the XOA updater page after the trial ended.

-

xe host-list params=all
uuid ( RO) : e0c75bac-ee42-40f6-a5a3-3a8d60619c76
name-label ( RW): KK-XCP-00
name-description ( RW): Default install
allowed-operations (SRO): VM.migrate; provision; VM.resume; evacuate; VM.start
current-operations (SRO):
enabled ( RO): true
display ( RO): enabled
API-version-major ( RO): 2
API-version-minor ( RO): 16
API-version-vendor ( RO): XenSource
API-version-vendor-implementation (MRO):
logging (MRW):
suspend-image-sr-uuid ( RW): 37e44f4e-632f-9bfc-23a8-986ade23c001
crash-dump-sr-uuid ( RW): 37e44f4e-632f-9bfc-23a8-986ade23c001
software-version (MRO): product_version: 8.2.1; product_version_text: 8.2; product_version_text_short: 8.2; platform_name: XCP; platform_version: 3.2.1; product_brand: XCP-ng; build_number: release/yangtze/master/58; hostname: localhost; date: 2023-10-18; dbv: 0.0.1; xapi: 1.20; xen: 4.13.5-9.38; linux: 4.19.0+1; xencenter_min: 2.16; xencenter_max: 2.16; network_backend: openvswitch; db_schema: 5.603
capabilities (SRO): xen-3.0-x86_64; hvm-3.0-x86_32; hvm-3.0-x86_32p; hvm-3.0-x86_64;
other-config (MRW): MAINTENANCE_MODE_EVACUATED_VMS_MIGRATED: 14cbdb20-23b4-a0db-c557-cddbefba6ea2,0632624b-528a-cc83-1a12-d8586e8955c5,3d917ad8-78b3-d8b5-dea2-66012afdf519,c2bc2168-3c82-d3ed-46e9-5042b76e2041; agent_start_time: 1712776555.; boot_time: 1712087003.; iscsi_iqn: iqn.2023-09.localdomain:2c6b2e0b
cpu_info (MRO): cpu_count: 40; socket_count: 2; vendor: GenuineIntel; speed: 2793.288; modelname: Intel(R) Xeon(R) CPU E5-2680 v2 @ 2.80GHz; family: 6; model: 62; stepping: 4; flags: fpu de tsc msr pae mce cx8 apic sep mca cmov pat clflush acpi mmx fxsr sse sse2 ss ht syscall nx rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid pni pclmulqdq monitor est ssse3 cx16 sse4_1 sse4_2 popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cpuid_fault ssbd ibrs ibpb stibp fsgsbase erms xsaveopt; features_pv: 1fc9cbf5-f6b82203-2991cbf5-00000003-00000001-00000201-00000000-00000000-00001000-ac000400-00000000-00000000-00000000-00000000-00000000-00000000-00080004-00000000-00000000-00000000-00000000-00000000; features_hvm: 1fcbfbff-f7ba2223-2d93fbff-00000403-00000001-00000281-00000000-00000000-00001000-bc000400-00000000-00000000-00000000-00000000-00000000-00000000-00080004-00000000-00000000-00000000-00000000-00000000; features_hvm_host: 1fcbfbff-f7ba2223-2c100800-00000001-00000001-00000281-00000000-00000000-00001000-9c000400-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000; features_pv_host: 1fc9cbf5-f6b82203-28100800-00000001-00000001-00000201-00000000-00000000-00001000-8c000400-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000
chipset-info (MRO): iommu: true
hostname ( RO): kk-xcp-00
address ( RO): 192.168.1.253
https-only ( RW): false
supported-bootloaders (SRO): pygrub; eliloader
blobs ( RO):
memory-overhead ( RO): 3789463552
memory-total ( RO): 274841501696
memory-free ( RO): 255254724608
memory-free-computed ( RO): <expensive field>
host-metrics-live ( RO): true
patches (SRO) [DEPRECATED]:
updates (SRO):
ha-statefiles ( RO):
ha-network-peers ( RO):
external-auth-type ( RO):
external-auth-service-name ( RO):
external-auth-configuration (MRO):
edition ( RO): xcp-ng
license-server (MRO): address: localhost; port: 27000
power-on-mode ( RO):
power-on-config (MRO):
local-cache-sr ( RO): <not in database>
tags (SRW):
ssl-legacy ( RW): false
guest_VCPUs_params (MRW):
virtual-hardware-platform-versions (SRO): 0; 1; 2
control-domain-uuid ( RO): a1352c71-c941-4fbb-8074-14a438cfadaa
resident-vms (SRO): 14cbdb20-23b4-a0db-c557-cddbefba6ea2; 0632624b-528a-cc83-1a12-d8586e8955c5; 3d917ad8-78b3-d8b5-dea2-66012afdf519; c2bc2168-3c82-d3ed-46e9-5042b76e2041; a1352c71-c941-4fbb-8074-14a438cfadaa
updates-requiring-reboot (SRO):
features (SRO):
iscsi_iqn ( RW): iqn.2023-09.localdomain:2c6b2e0b
multipathing ( RW): false

Give this id to the support: 36519

-

@cyford The thread title mentioned XCP host and trial, so I thought that maybe you were referring to the XCP-ng v8.3 beta that is currently available for testing. That's why I asked for more information so that we can be sure how to best assist you.

Downgrading your XOA from a Premium trial to XOA Free wouldn't cause the issues that you are describing, so something else must be happening here. I can see the error message in your screenshot, but otherwise it's not really helpful.

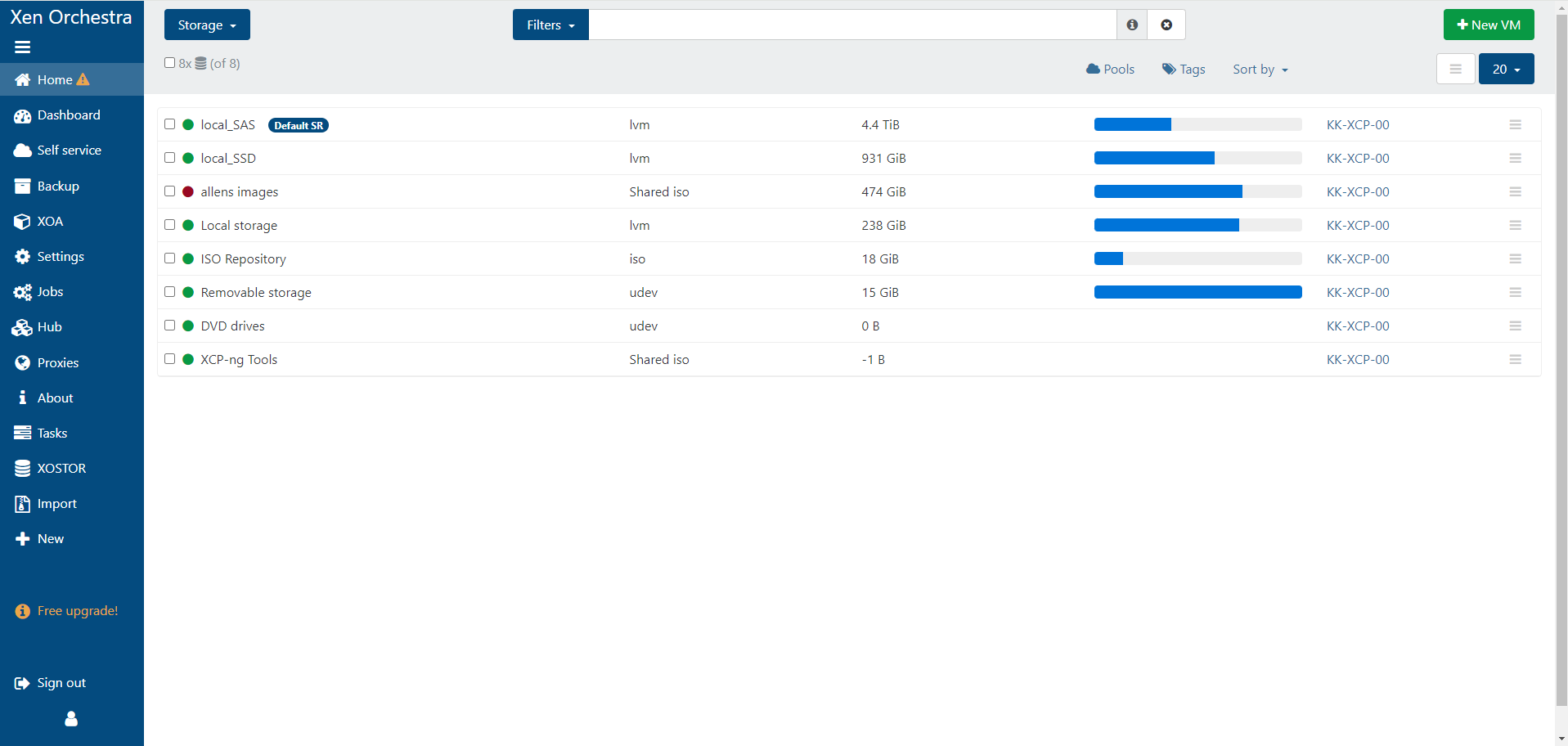

I haven't used the Windows client in forever, so I can't tell if the toolstack restart was successful or failed. Can you try restarting the toolstack using XOA? Also, can you show us a screenshot of the host's Storage tab in XOA?

-

OK, yeah, I would have said upgrade. But yes, I try to keep it simple so I don't break anything and lose months of work, lol.

I did the trial to see if I could gain performance, as my last topic was about performance issues with Kubernetes running in VMs. I also raised it with Longhorn, the PVC provider, where I learned there seems to be a lack of I/O in these VMs:

https://github.com/longhorn/longhorn/issues/8303

XOA locked me out and wouldn't do anything till I downgraded, so I did as it asked. I do not know what I downgraded to or what it did behind the scenes.

The systems were working for a while, but I saw VMs start acting up. Naturally, rebooting them has always fixed it, but not this time. Only VMs on /dev/sda are working now.

-

@cyford said in After downgrading XCP host from trial, 90% of my VMs are not booting:

XOA locked me out and wouldn't do anything till I downgraded, so I did as it asked. I do not know what I downgraded to or what it did behind the scenes.

Your trial expired, so it forced you to revert to XOA Free. You no longer have access to features such as backups, but you are still able to manage your VMs.

The systems were working for a while, but I saw VMs start acting up. Naturally, rebooting them has always fixed it, but not this time. Only VMs on /dev/sda are working now.

It sounds like you have storage that isn't mounting, which wouldn't have anything to do with XOA and wouldn't have been caused by downgrading from the Premium trial.

-

Thanks Danp, it is certainly a huge coincidence then. My I/O issues had me rebooting constantly for weeks, and as soon as I downgraded I could not reboot anymore. Anyway, how can I force it to be mounted?

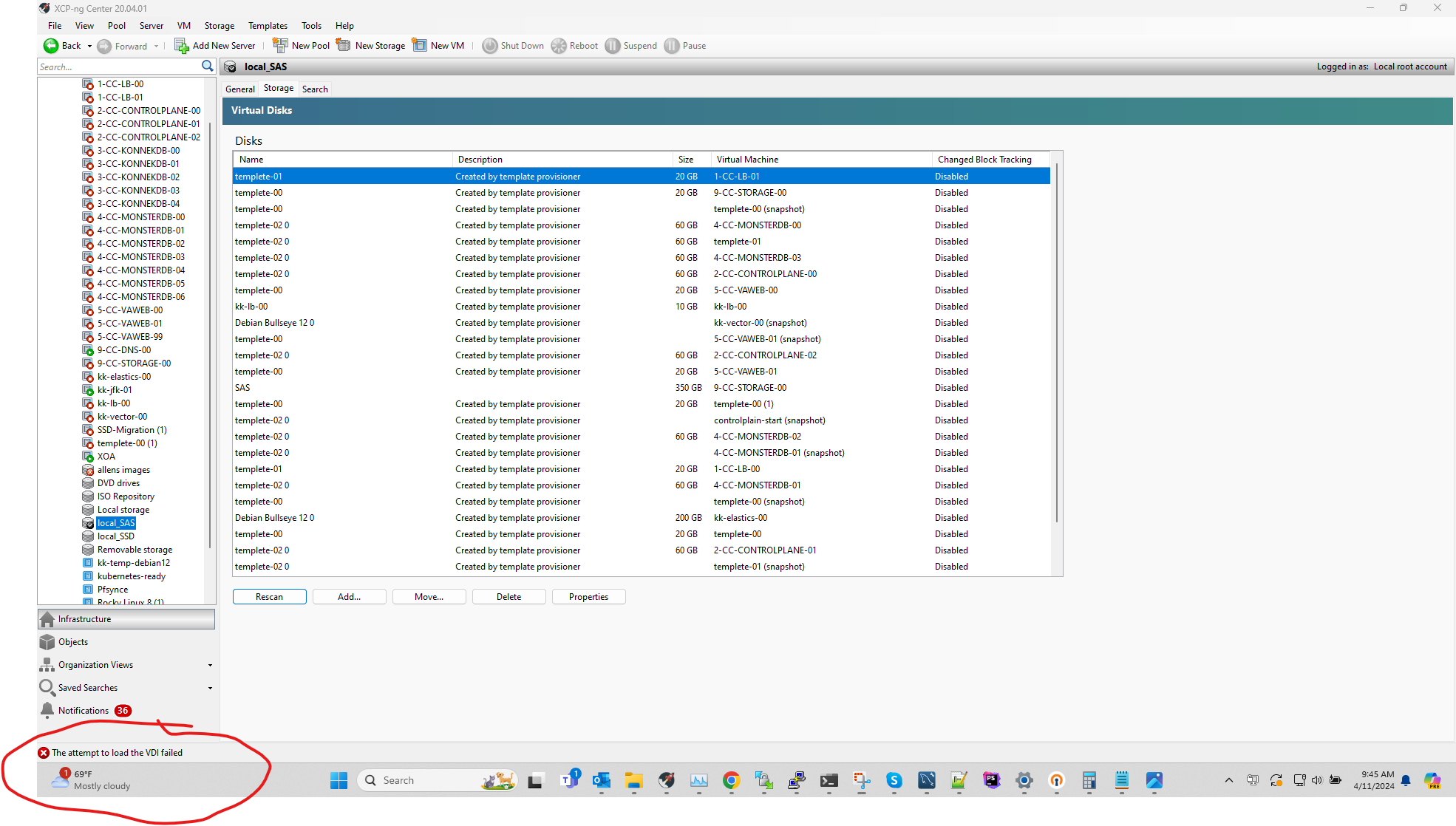

I do see an error about the VDI failing when scanning the repo.

Please do not tell me I need to delete them all and recreate them.

-

@cyford

Also, when the trial expires the whole panel is useless till you downgrade. Every link you click takes you to the downgrade page. You cannot use XOA till the downgrade is complete.

-

@cyford How about https://forums.lawrencesystems.com/t/how-to-build-xen-orchestra-from-sources-2024/19913

Takes you 15 min.

-

@cyford said in After downgrading XCP host from trial, 90% of my VMs are not booting:

Every link you click takes you to the downgrade page. You cannot use XOA till the downgrade is complete.

Yes, that is clearly by design.

My I/O issues had me rebooting constantly for weeks, and as soon as I downgraded I could not reboot anymore.

Sounds like you were having storage problems before the XOA downgrade.

I do see an error about the VDI failing when scanning the repo.

Please do not tell me I need to delete them all and recreate them.

You could check `/var/log/SMlog` to see if it provides further details related to this VDI.

-

That's not correct. The issues are only in these VMs. Tests come out fine on the host or on any other OS; the performance issues and errors are only in the VMs themselves.

Also, on the host the drives show as mounted, and all of them test fine with great speed and performance.

-

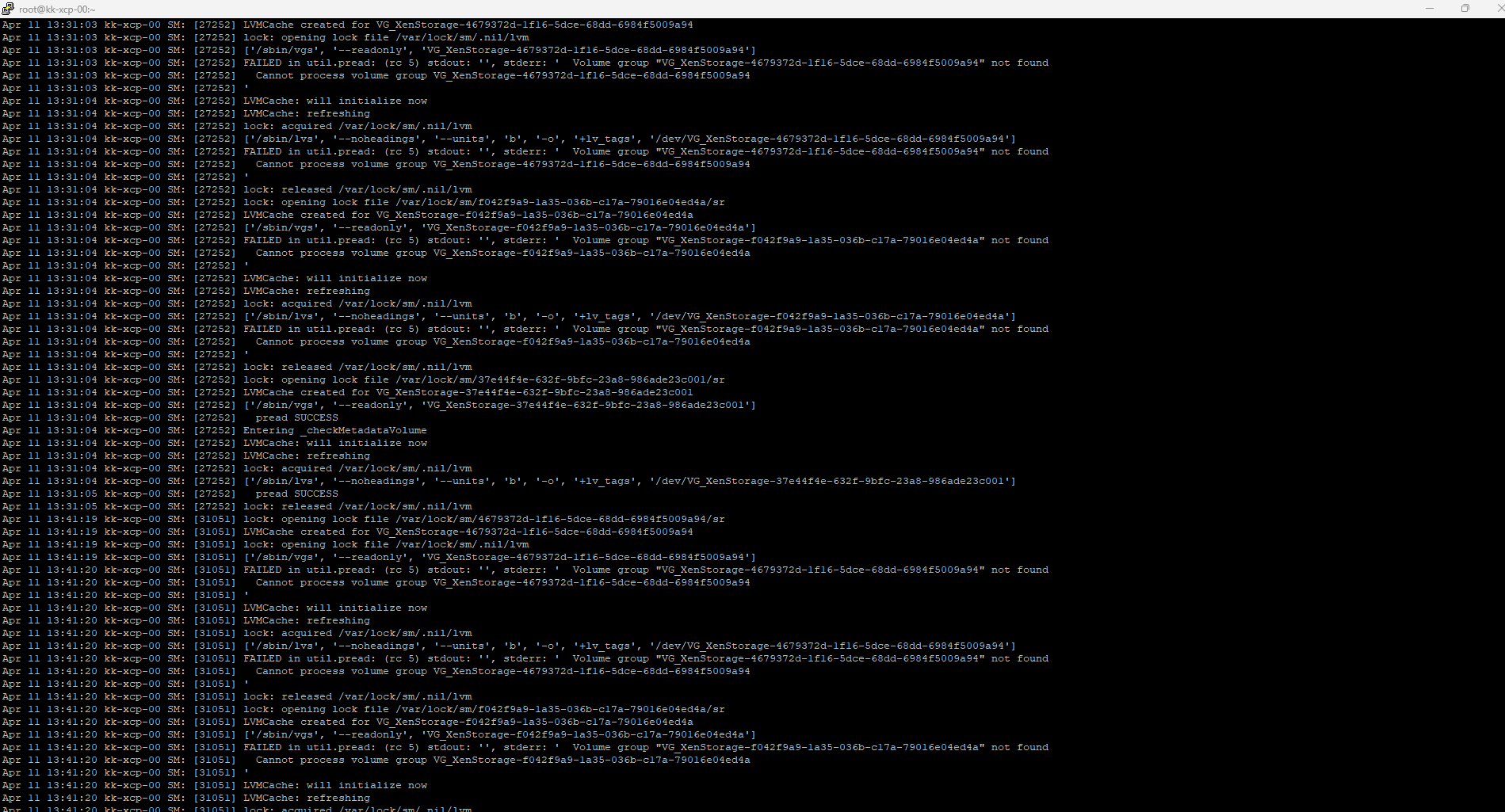

Here are the complete logs for SMlog.
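For a log this long, it can help to pull out only the LVM "not found" errors and see which SR they point at. The following is a minimal sketch in Python, assuming the usual SMlog line layout and the `VG_XenStorage-<SR UUID>` volume group naming that appears in the SM errors; the function name `missing_sr_uuids` and the two-line sample are illustrative, not part of any XCP-ng tool.

```python
import re
from collections import Counter

# Matches the LVM error that SM records when a volume group cannot be found.
# Assumption: the volume group is named VG_XenStorage-<SR UUID>.
MISSING_VG = re.compile(r'Volume group "VG_XenStorage-([0-9a-f-]{36})" not found')


def missing_sr_uuids(log_text):
    """Count 'Volume group ... not found' errors per SR UUID in SMlog text."""
    counts = Counter()
    for line in log_text.splitlines():
        match = MISSING_VG.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Illustrative sample only; on a host, the text would be read from /var/log/SMlog.
sample_log = (
    "Apr 11 14:59:34 kk-xcp-00 SM: [31761] FAILED in util.pread: (rc 5) stdout: '', "
    "stderr: ' Volume group \"VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94\" not found\n"
    "Apr 11 14:59:34 kk-xcp-00 SM: [31761] LVMCache: refreshing\n"
)

for sr_uuid, hits in missing_sr_uuids(sample_log).most_common():
    print(sr_uuid, hits)
```

The UUIDs it reports can then be compared with the SR UUIDs shown in XOA or by `xe sr-list` to see which repositories are affected.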

-

@Danp said in After downgrading XCP host from trial, 90% of my VMs are not booting:

I haven't used the Windows client in forever, so I can't tell if the toolstack restart was successful or failed. Can you try restarting the toolstack using XOA? Also, can you show us a screenshot of the host's Storage tab in XOA?

I restarted it in XOA as well; it doesn't work. When I start a VM, this is what I see in the logs:

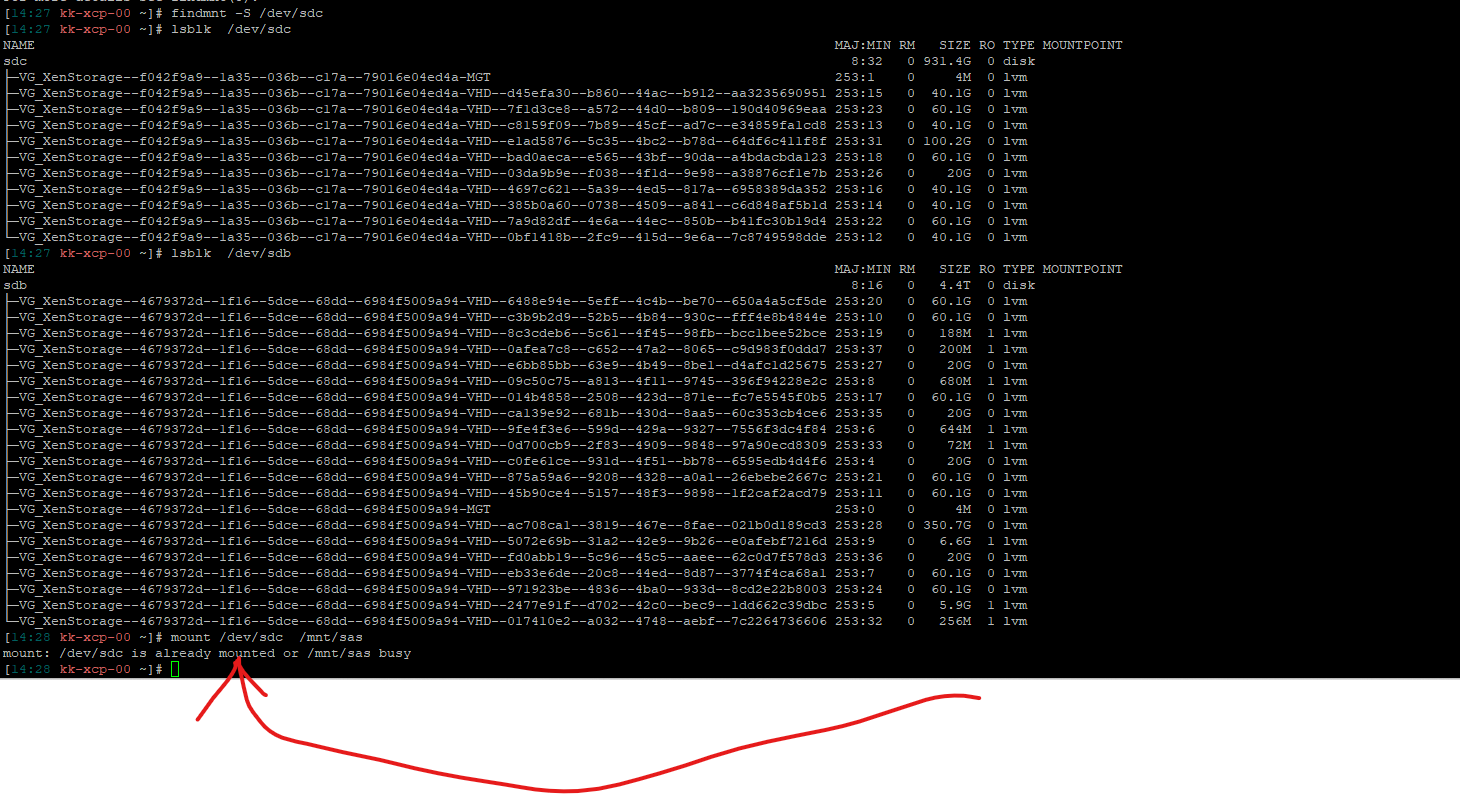

Apr 11 14:59:33 kk-xcp-00 SM: [31761] lock: opening lock file /var/lock/sm/4679372d-1f16-5dce-68dd-6984f5009a94/sr
Apr 11 14:59:33 kk-xcp-00 SM: [31761] LVMCache created for VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94
Apr 11 14:59:33 kk-xcp-00 SM: [31761] lock: opening lock file /var/lock/sm/.nil/lvm
Apr 11 14:59:33 kk-xcp-00 SM: [31761] ['/sbin/vgs', '--readonly', 'VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94']
Apr 11 14:59:34 kk-xcp-00 SM: [31761] FAILED in util.pread: (rc 5) stdout: '', stderr: ' Volume group "VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94" not found
Apr 11 14:59:34 kk-xcp-00 SM: [31761] Cannot process volume group VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94
Apr 11 14:59:34 kk-xcp-00 SM: [31761] '
Apr 11 14:59:34 kk-xcp-00 SM: [31761] LVMCache: will initialize now
Apr 11 14:59:34 kk-xcp-00 SM: [31761] LVMCache: refreshing
Apr 11 14:59:34 kk-xcp-00 SM: [31761] lock: acquired /var/lock/sm/.nil/lvm
Apr 11 14:59:34 kk-xcp-00 SM: [31761] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94']
Apr 11 14:59:34 kk-xcp-00 SM: [31761] FAILED in util.pread: (rc 5) stdout: '', stderr: ' Volume group "VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94" not found
Apr 11 14:59:34 kk-xcp-00 SM: [31761] Cannot process volume group VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94
Apr 11 14:59:34 kk-xcp-00 SM: [31761] '
Apr 11 14:59:34 kk-xcp-00 SM: [31761] lock: released /var/lock/sm/.nil/lvm
Apr 11 14:59:34 kk-xcp-00 SM: [31761] vdi_epoch_begin {'sr_uuid': '4679372d-1f16-5dce-68dd-6984f5009a94', 'subtask_of': 'DummyRef:|a036458a-6043-476f-bce5-21a6ca72d1dc|VDI.epoch_begin', 'vdi_ref': 'OpaqueRef:3a903002-b13c-48b7-990e-f466fe0b6037', 'vdi_on_boot': 'persist', 'args': [], 'vdi_location': 'c0fe61ce-931d-4f51-bb78-6595edb4d4f6', 'host_ref': 'OpaqueRef:e40ba20c-5291-43e3-8a40-bcc6332ad750', 'session_ref': 'OpaqueRef:68bc589a-0524-4ced-81e6-b789e0592581', 'device_config': {'device': '/dev/sdb', 'SRmaster': 'true'}, 'command': 'vdi_epoch_begin', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:f2bc7092-9ba7-40ac-b3a1-f01228ee2079', 'vdi_uuid': 'c0fe61ce-931d-4f51-bb78-6595edb4d4f6'}

-

I haven't checked your pastebin yet, but those definitely appear to be storage issues to me based on the logs shown in your screenshots. You should post the output of `pvdisplay`, `lvdisplay`, and `vgdisplay` for the affected storage.

-

I rebooted the server and it did not come up, lol. Dude, these drives are good and the hardware is good; it's something to do with this hypervisor. I think I may need to reinstall the OS tomorrow.

If there is a repair option in this ISO, I will try it.

Most of it is Kubernetes, so redeploying is easy, but my git was not updated in weeks, so I'm scared I will lose data from one of the VMs. I wish I could mount it externally somehow. Is that possible?

I'll run your commands tomorrow, though.

-

@cyford said in After downgrading XCP host from trial, 90% of my VMs are not booting:

2 SRs are local: 1 is SAS drives in RAID 0, and the other is SAS SSDs in RAID 0.

The only one that works is the RAID 1 local drive.

I'm confused by the above. Are there 2 local SRs or 3? How were these created?

Which SRs are working correctly and which SRs are giving you problems?

@cyford said in After downgrading XCP host from trial, 90% of my VMs are not booting:

When I start a VM, this is what I see in the logs:

Apr 11 14:59:34 kk-xcp-00 SM: [31761] FAILED in util.pread: (rc 5) stdout: '', stderr: ' Volume group "VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94" not found

Apr 11 14:59:34 kk-xcp-00 SM: [31761] Cannot process volume group VG_XenStorage-4679372d-1f16-5dce-68dd-6984f5009a94

When you create an SR, it builds a file system or, for an LVM-based SR like this one, a volume group on the target device. In the above, it is trying to access a volume group that no longer appears to exist. This is a storage problem.
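The naming in those log lines suggests a quick check: the SR with UUID `4679372d-1f16-5dce-68dd-6984f5009a94` expects a volume group called `VG_XenStorage-<SR UUID>`, so comparing that name against what LVM currently reports shows whether the volume group is visible to the host at all. Here is a minimal sketch in Python, assuming the `VG_XenStorage-` prefix seen in the log and assuming the input text comes from `vgs --noheadings -o vg_name`; the function names and the sample output are hypothetical.

```python
def expected_vg_name(sr_uuid):
    """Volume group name the SM log lines use for an LVM-based SR."""
    return f"VG_XenStorage-{sr_uuid}"


def vg_is_present(sr_uuid, vgs_output):
    """Return True if the SR's volume group is listed in `vgs` output.

    vgs_output is the text printed by `vgs --noheadings -o vg_name`,
    which lists one volume group name per line.
    """
    names = {line.strip() for line in vgs_output.splitlines() if line.strip()}
    return expected_vg_name(sr_uuid) in names


# Made-up `vgs` output for illustration; it is not taken from the affected host.
sample_vgs_output = "  VG_XenStorage-00000000-0000-0000-0000-000000000000\n"

print(vg_is_present("4679372d-1f16-5dce-68dd-6984f5009a94", sample_vgs_output))
```

If the volume group is missing from the list, the next thing to look at is whether the underlying device (here `/dev/sdb`, per the `device_config` in the log) still carries an LVM physical volume, which is what `pvdisplay` would show.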

@cyford said in After downgrading XCP host from trial, 90% of my VMs are not booting:

I rebooted the server and it did not come up, lol. Dude, these drives are good and the hardware is good; it's something to do with this hypervisor.

I said that you appear to have a storage problem, which doesn't necessarily imply a hardware problem.

I wish I could mount it externally somehow. Is that possible?

I imagine so, but it depends on many factors that are unknown at this time.

-

Just want to say that after reinstalling everything and recreating all the repositories, I do not have any disk errors or I/O issues.